Google Search Scraper API

Extract Google search data at scale without getting blocked. Our Google scraping API* combines 125M+ proxies, advanced anti-bot measures, and automatic retries, so you only pay for successful requests and get real-time data without a single hassle. Get started in minutes with our 7-day free trial.

* This scraper is now a part of Web Scraping API.

14-day money-back option

- 125M+ IPs worldwide

- 100+ ready-made templates

- 99.99% success rate

- 195+ locations

- 7-day free trial


Awarded web data collection solutions provider

Users love Decodo’s Web Scraping API for exceptional performance, advanced targeting options, and the ability to effortlessly overcome CAPTCHAs, geo-restrictions, and IP bans.

Why the scraping community chooses Decodo

| Decodo | Manual data collection | Other APIs |
| --- | --- | --- |
| 125M+ residential, mobile, datacenter, and ISP proxies | Manage proxy rotation yourself | Limited proxy pools |
| Advanced browser fingerprinting | Build CAPTCHA solvers | Frequent CAPTCHA blocks |
| Only pay for successful requests | Handle retries manually | Pay for failed requests |
| 100+ ready-made scraping templates | Maintenance overhead | Complex documentation |
| Data in JSON, CSV, HTML, and Markdown formats | Days to implement | Limited output formats |

Be ahead of the Google scraping game

What is a Google Search scraper?

A Google Search scraper is a tool that extracts data directly from Google’s search results.

With our Google Search scraping API, you can send a single API request and receive the data you need in HTML or structured formats like JSON and CSV. Even if a request fails, we’ll automatically retry it until your data is successfully delivered. You only pay for successful requests.

Designed by our experienced developers, this tool offers you a range of handy features:

- Built-in scraper and parser

- JavaScript rendering

- Easy API integration

- Vast country-level targeting options

- No CAPTCHAs or IP blocks

Extract data from Google Search

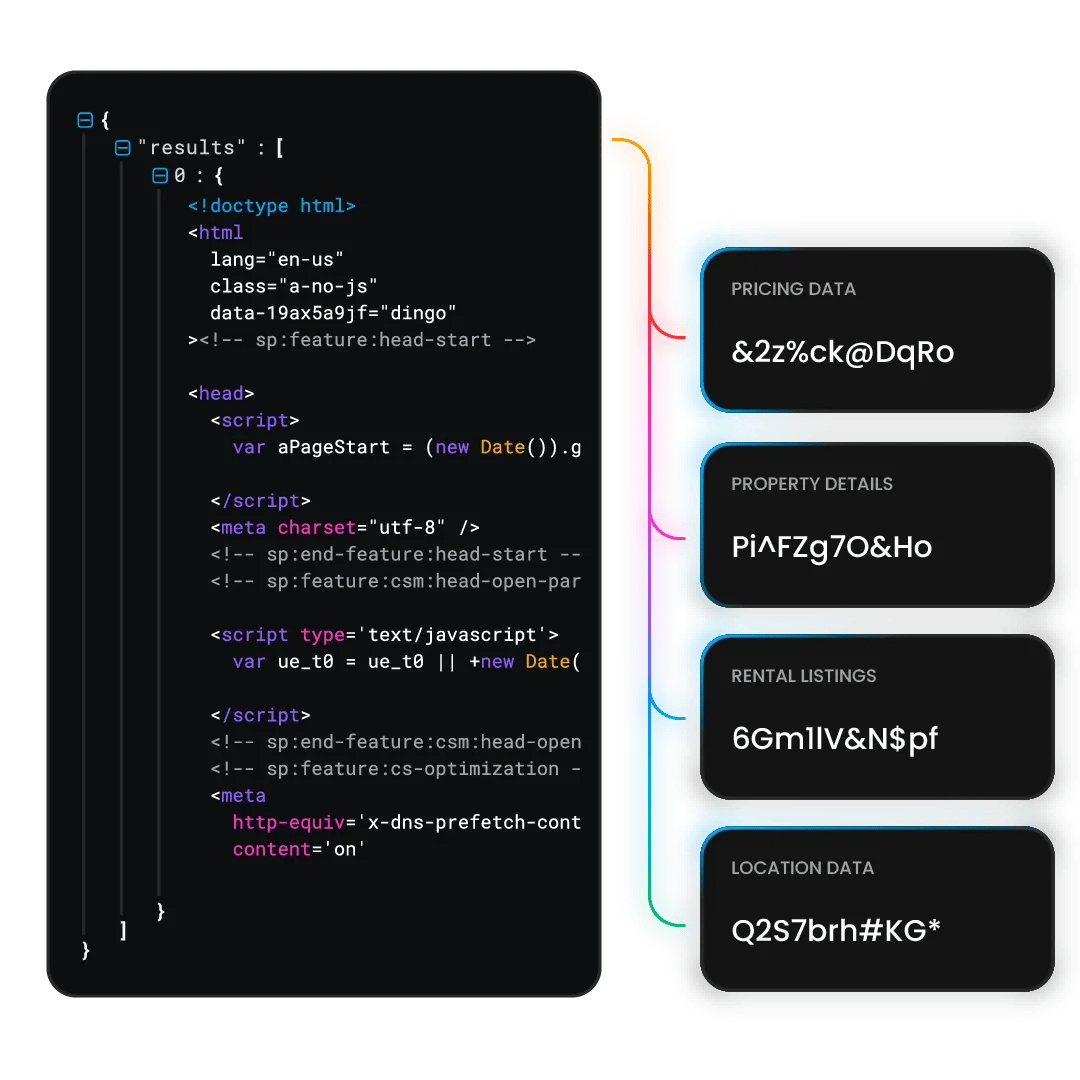

Web Scraping API is a powerful data extraction tool that combines a web scraper, a smart parser, and access to a pool of 125M+ residential, mobile, ISP, and datacenter proxies. This ensures reliable and scalable access to Google Search data in real time. With this scraper, you can extract valuable insights such as:

- Organic search results

- Featured snippets and People Also Ask boxes

- Search ads

- Keyword rankings and SERP positions

- Related search suggestions

- Local pack listings

- Autocomplete suggestions
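To make the list above more concrete, here is a minimal sketch of how a parsed result might be post-processed in Python. The response shape (`organic`, `related_searches`, and the per-item `position`, `title`, and `url` keys) is a hypothetical example invented for illustration; the actual JSON schema may differ, so treat the field names as assumptions.

```python
from typing import Any

# Hypothetical parsed response; the real JSON schema may be different.
SAMPLE_RESPONSE: dict[str, Any] = {
    "organic": [
        {"position": 1, "title": "Example A", "url": "https://a.example/"},
        {"position": 2, "title": "Example B", "url": "https://b.example/"},
    ],
    "related_searches": ["example query one", "example query two"],
}


def summarize(response: dict[str, Any]) -> dict[str, Any]:
    """Pull organic URLs and related searches out of a parsed result."""
    organic = response.get("organic", [])
    return {
        # Skip items that have no URL instead of failing on them.
        "organic_urls": [item["url"] for item in organic if "url" in item],
        "related_searches": list(response.get("related_searches", [])),
    }


if __name__ == "__main__":
    print(summarize(SAMPLE_RESPONSE))
```

Using `.get()` with defaults keeps the helper tolerant of result pages that lack a given SERP feature.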

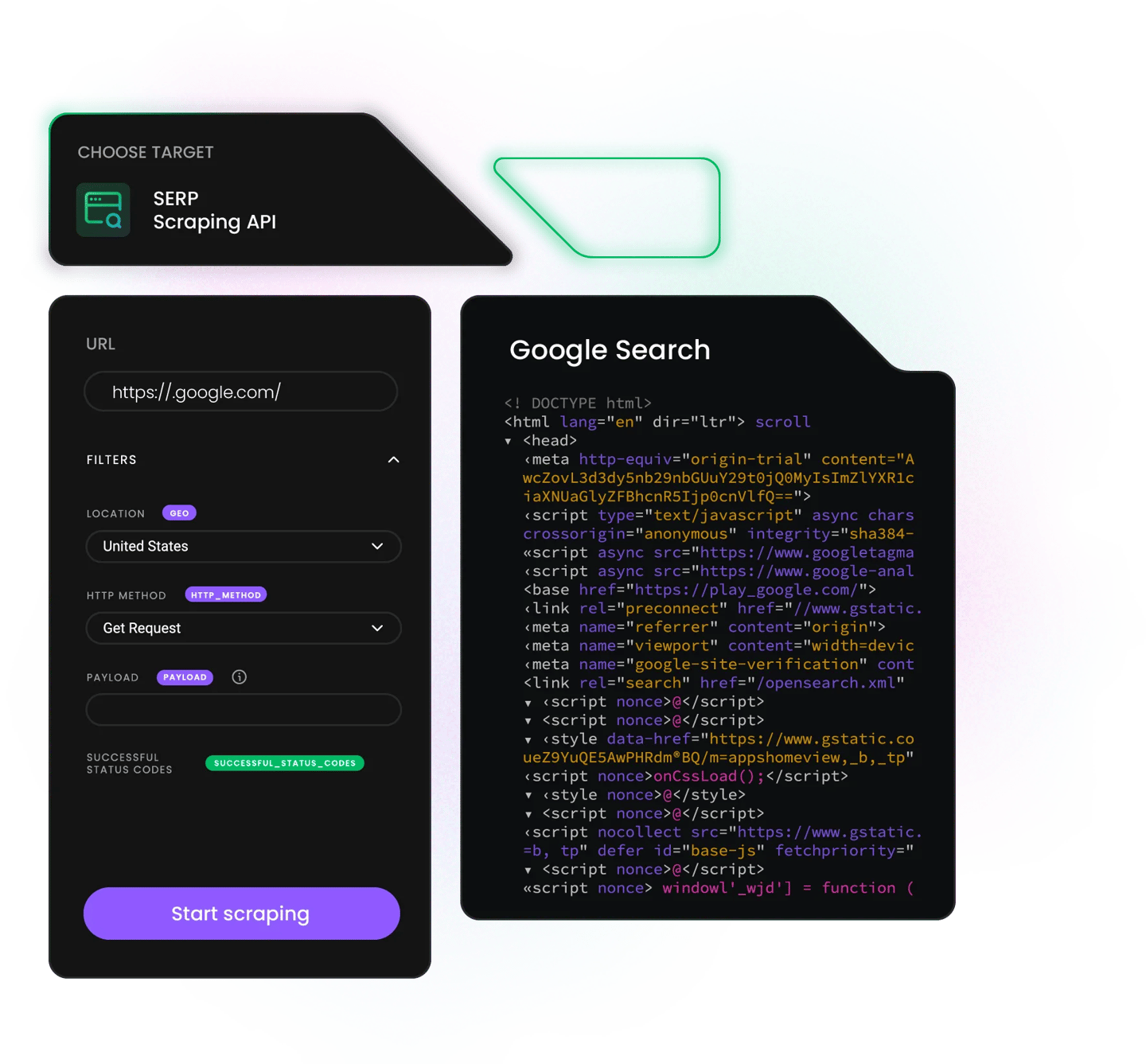

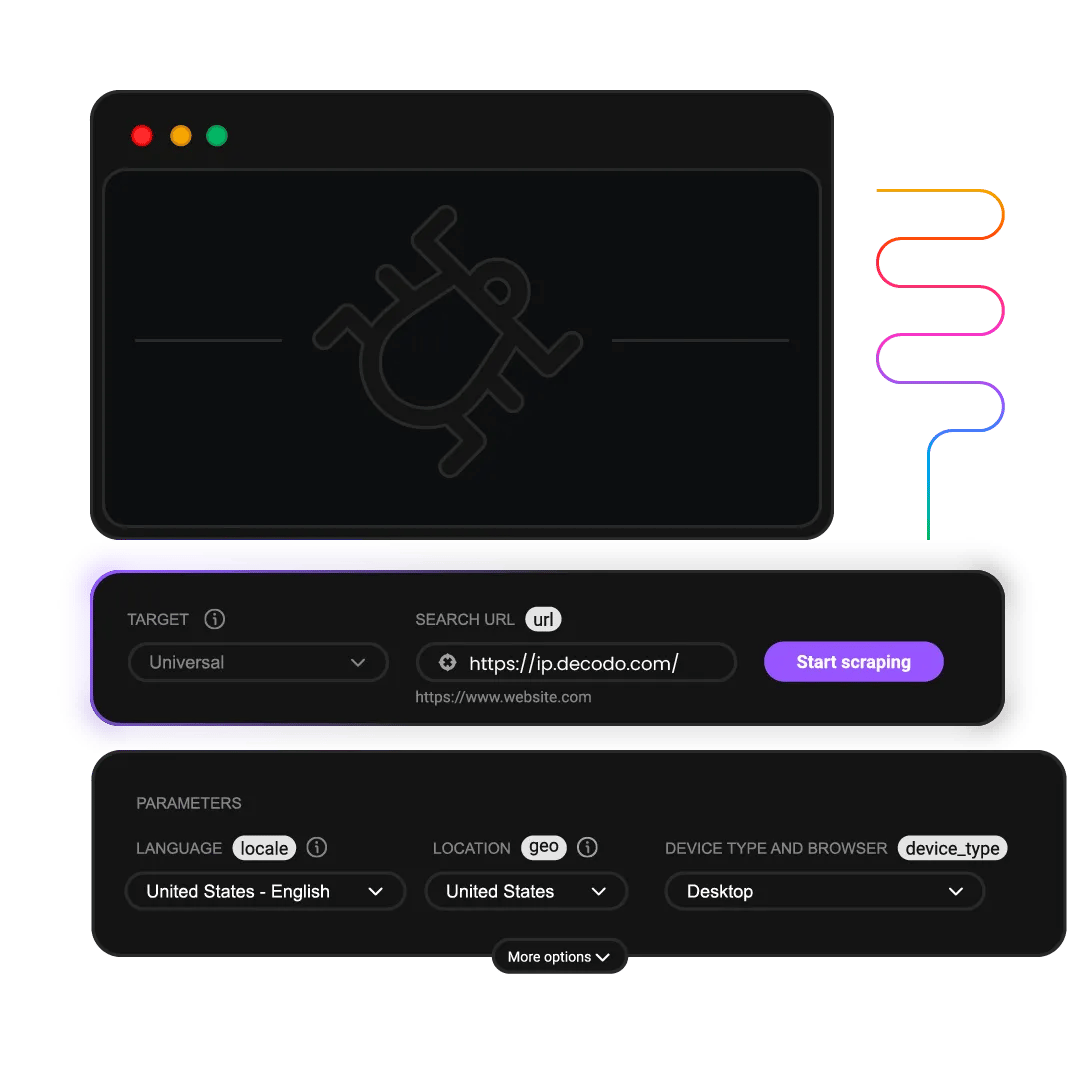

Test drive our Google scraping API

Scraping the web has never been easier. Get a taste of what our Web Scraping API is capable of right here and now.

The most popular Google Search scraper use cases

Power your data-driven projects with our advanced scraping solution and capture real-time insights on demand.

SEO & SERP monitoring

Monitor keyword positions, featured snippets, and SERP features to measure your SEO success and outperform rivals.
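As an illustration of keyword position monitoring, the sketch below finds where a domain first appears in an ordered list of organic result URLs. The helper name `find_rank` and the plain list-of-URLs input are assumptions made for this example, not part of the API.

```python
from typing import Optional
from urllib.parse import urlparse


def find_rank(organic_urls: list[str], domain: str) -> Optional[int]:
    """Return the 1-based position of the first result on `domain`, or None."""
    domain = domain.lower()
    for position, url in enumerate(organic_urls, start=1):
        host = (urlparse(url).hostname or "").lower()
        # Match the domain itself or any of its subdomains.
        if host == domain or host.endswith("." + domain):
            return position
    return None


if __name__ == "__main__":
    urls = [
        "https://www.other.com/page",
        "https://blog.example.com/post",
        "https://example.com/",
    ]
    print(find_rank(urls, "example.com"))
```

Running the same check on a schedule and storing the returned position is one simple way to build a ranking history for a keyword.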

Competitor research

Explore rival strategies by pulling search data to identify their ranking keywords and content performance metrics.

Trendspotting

Identify emerging search patterns and trending keywords to inform your content planning and campaign focus.

AdTech

Extract Google Ads data to reveal competitor bidding approaches, pricing models, and advertisement positioning.

Local SEO tracking

Capture location-based campaign data to measure regional presence and track nearby competitor activity.

AI & LLM training

Collect structured Google Search data and feed your AI models or LLMs with current, relevant information.

Collect data from multiple Google targets

Optimize your data acquisition workflow through our versatile Web Scraping API. Get instant insights from Google Search, Trends, and numerous other targets with a simple implementation.

Google Search

Collect real-time data from SERP without facing CAPTCHAs or IP blocks.

Google News

Download bulk headlines, story briefs, and resource links from up-to-date news feeds simultaneously.

Google Lens

Extract visual URLs, image matches, and relevant metadata from Google's picture search.

Google Trends

Track popular search activity on Google Search via our Web Scraping API.

Google Play

Pull up-to-date app titles, descriptions, and category data.

Google Autocomplete

Run targeted research to identify search habits in specific geographic locations.

Google Hotel

Retrieve performance data on sought-after accommodations locally and beat industry rivals.

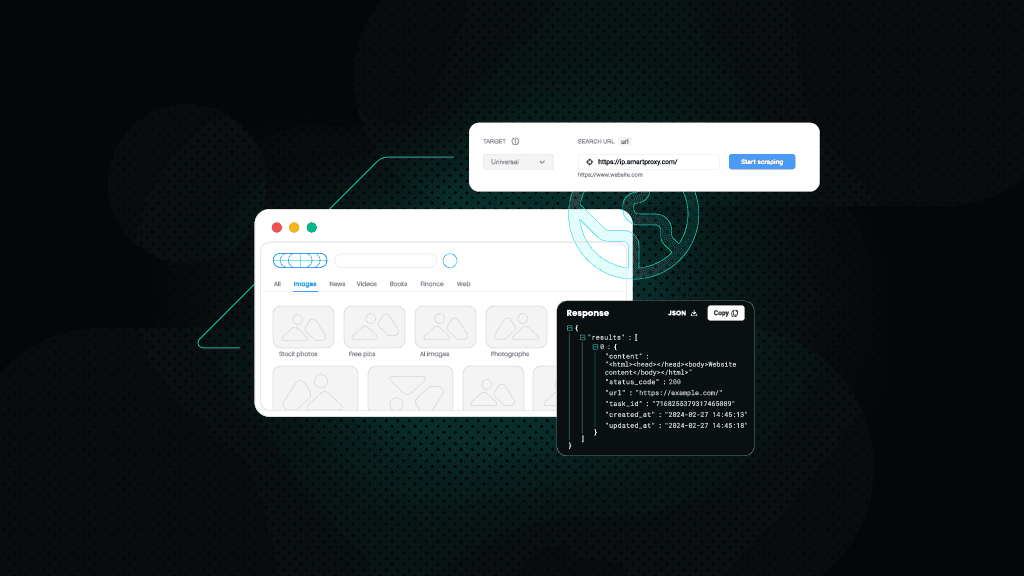

Take advantage of all Google scraping API features

Extract Google Search data effortlessly with our advanced scraping API. Choose from multiple output formats and leverage integrated proxy technology for uninterrupted data collection: no blocks, no CAPTCHAs, just results.

Flexible output options

Get your data in HTML, CSV, JSON, XHR, or Markdown – whatever works best for your setup.

Task scheduling

Set it and forget it – schedule your scraping jobs and get pinged when they're done.

Real-time or on-demand results

Your call: grab data right now or set up jobs to run whenever you need the freshest data.

Advanced anti-bot measures

Skip the headaches of detection, CAPTCHAs, and IP bans with our built-in fingerprinting magic.

Easy integration

Hook up our APIs to your tools in minutes with our quick start guides and ready-to-run code.

Ready-made scraping templates

Jump straight into data collection with our plug-and-play scraper templates.

Geo-targeting

Pick a spot on the map and get data that's specific to that location.

High scalability

Plug our scraping tools into your workflow and pull data from wherever you need it.

Bulk upload

Push out multiple data requests at once: one click, done.

Scrape Google Search with Python, Node.js, or cURL

Our Google Search scraper supports all popular programming languages for hassle-free integration with your business tools.
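As a rough idea of what a Python integration could look like, the sketch below builds (but does not send) a single scrape request using only the standard library. The endpoint URL is a placeholder, and the `target`, `query`, and `parse` field names and the use of Basic authentication are assumptions for illustration; check the official API documentation for the actual values.

```python
import base64
import json
import urllib.request

# Placeholder endpoint, for illustration only; use the URL from the official docs.
API_URL = "https://scraper-api.example.com/v2/scrape"


def build_request(username: str, password: str, query: str) -> urllib.request.Request:
    """Build a POST request for one Google Search query (nothing is sent here)."""
    # Hypothetical payload fields: the real parameter names may differ.
    payload = {"target": "google_search", "query": query, "parse": True}
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("USERNAME", "PASSWORD", "best running shoes")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(req, timeout=60)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before any billable request is made.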

Find the right Google scraping solution for you

Explore our Google Search scraper API offerings and choose the solution that suits you best – from Core to Advanced solutions.

| | Core | Advanced |
| --- | --- | --- |
| | Essential scraping features to unlock targets efficiently | Premium scraping solution with high customizability |
| Success rate | 100% | 100% |
| Output | HTML | JSON, CSV, Markdown, PNG, XHR, HTML |

Free trial

Anti-bot bypassing

Proxy management

API Playground

Task scheduling

Pre-built scraper

Ready-made templates

Advanced geo-targeting

Premium proxy pool

Unlimited threads & connections

JavaScript rendering

Explore our plans for any Google Search scraping demand

Start collecting real-time data from Google Search and stay ahead of the competition.

| Requests | Regular price (per 1K req) | Discounted price (per 1K req) | Total |
| --- | --- | --- | --- |
| 23K | $1.25 | $0.88 | $20 + VAT, billed monthly |
| 82K | $1.20 | $0.84 | $69 + VAT, billed monthly |
| 216K | $1.15 | $0.81 | $179 + VAT, billed monthly |
| 455K | $1.10 | $0.77 | $349 + VAT, billed monthly |
| 950K | $1.05 | $0.74 | $699 + VAT, billed monthly |
| 2M | $1.00 | $0.70 | $1399 + VAT, billed monthly |

Use discount code: SCRAPE30
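For a quick comparison of the plans listed above, the sketch below computes an effective price per 1K requests from each monthly total and picks the smallest plan that covers a given volume. The request counts and totals are copied from this page (before VAT); the helper names are made up for this example, and the computed figures can differ by about a cent from the listed per-1K prices because the totals are rounded.

```python
from typing import Optional

# (requests per month, monthly total in USD before VAT), as listed above.
PLANS = [
    (23_000, 20),
    (82_000, 69),
    (216_000, 179),
    (455_000, 349),
    (950_000, 699),
    (2_000_000, 1399),
]


def effective_price_per_1k(requests: int, monthly_total: float) -> float:
    """Monthly total divided by the number of thousand-request blocks."""
    return round(monthly_total / (requests / 1000), 2)


def cheapest_plan_for(needed_requests: int) -> Optional[tuple[int, int]]:
    """Smallest listed plan covering the requested volume, or None."""
    for requests, total in PLANS:  # PLANS is sorted by size, smallest first
        if requests >= needed_requests:
            return requests, total
    return None


if __name__ == "__main__":
    for requests, total in PLANS:
        print(requests, total, effective_price_per_1k(requests, total))
    print(cheapest_plan_for(100_000))
```

For example, a workload of 100,000 requests per month does not fit the 82K plan, so `cheapest_plan_for(100_000)` selects the 216K plan.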

Need more?

Chat with us and we’ll find the best solution for you

With each plan, you access:

99.99% success rate

100+ pre-built templates

Supports search, pagination, and filtering

Results in HTML, JSON, or CSV

n8n integration

LLM-ready markdown format

MCP server

JavaScript rendering

24/7 tech support

14-day money-back

SSL Secure Payment

Your information is protected by 256-bit SSL

What people are saying about us

We're thrilled to have the support of our 130K+ clients and the industry's best.

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.


Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.


RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.


Cybereg


Learn more about scraping

Build knowledge on our solutions, or pick up some fresh ideas for your next project – our blog is just the perfect place.

Most recent

How to Set Up and Use SOCKS5 Proxy

Dominykas Niaura

Last updated: Feb 26, 2026

10 min read

Frequently asked questions

Is scraping Google Search legal?

Web scraping legality depends on the type of data collected and how it's used. Generally, scraping public web data is legal as long as it complies with local and international laws. However, it’s essential to review the terms of service and seek legal advice before engaging in scraping activities.

How do I get started with the Google Search scraper API?

You can start collecting data from Google Search in just a few simple steps:

- Create an account on the Decodo dashboard and access the Web Scraping API section.

- Choose a subscription that matches your needs – you can get started with a 7-day free trial with 1K requests.

- After activating your subscription, go to the Scraper tab, choose the Google Search target, enter your query, and adjust the Web Scraping API settings according to your needs.

- The Web Scraping API will then retrieve the results in your preferred format.

- Optionally, you can use our free AI Parser to get formatted results.

Do you support Google AI Overviews?

Our Web Scraping API collects data from Google search results pages, including valuable information from recently introduced AI Overviews.

What are common use cases for a Google scraping API?

A Google scraping API is a powerful tool used to automate the extraction of search engine data without managing proxies, browsers, or anti-bot bypassing yourself. Developers and data-driven teams rely on it for a wide range of data collection and market intelligence tasks. Here are the most common use cases:

- SEO monitoring – track keyword rankings, featured snippets, and SERP fluctuations at scale.

- Ad verification – validate paid search ads in different locations or devices without manual effort.

- Price intelligence – collect results to benchmark competitors' pricing.

- Market research – extract related queries, "People Also Ask" data, and competitor listings.

- Travel & hospitality – gather real-time flight or hotel availability via search results.

- News monitoring & media intelligence – track brand mentions, competitor coverage, and industry trends across Google News. See our open-source Google News scraper for headline extraction and automated export examples.

- AI and ML training – feed structured search engine output into models for training or fine-tuning.

Can I scrape data for multiple keywords simultaneously?

Yes. Use our bulk-scraping feature to collect data for multiple keywords at once. Web Scraping API returns the results for every query in your preferred format: raw HTML, or structured JSON or CSV.
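One way to prepare a multi-keyword job on the client side is sketched below: it turns a raw keyword list into one payload per unique keyword. The payload fields (`target`, `query`, `output`) are hypothetical names chosen for illustration, and how a batch is actually submitted depends on the API documentation.

```python
def build_batch(keywords: list[str], output: str = "json") -> list[dict]:
    """One hypothetical payload per unique, non-empty keyword, order preserved."""
    if output not in {"html", "json", "csv"}:
        raise ValueError(f"unsupported output format: {output}")
    seen: set[str] = set()
    batch = []
    for raw in keywords:
        keyword = raw.strip()
        # Drop blanks and case-insensitive duplicates to avoid paying twice.
        if not keyword or keyword.lower() in seen:
            continue
        seen.add(keyword.lower())
        batch.append({"target": "google_search", "query": keyword, "output": output})
    return batch


if __name__ == "__main__":
    for payload in build_batch([" pizza ", "Pizza", "", "sushi"]):
        print(payload)
```

Deduplicating before submission matters when billing is per successful request, since repeated keywords would otherwise be charged more than once.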

Can I retrieve all results across multiple pages?

Yes, you can specify the number of pages or results you want to retrieve. The API will aggregate results across multiple pages and return them in a single response.
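Conceptually, aggregating pages means flattening per-page result lists into one list with continuous positions. The sketch below shows that idea on the client side, assuming each result is a dictionary with a hypothetical `url` key; it illustrates the concept and is not a description of how the API implements aggregation.

```python
def merge_pages(pages: list[list[dict]]) -> list[dict]:
    """Flatten per-page results into one list with continuous 1-based positions."""
    merged: list[dict] = []
    seen_urls: set[str] = set()
    for page in pages:
        for item in page:
            url = item.get("url")
            if url is not None:
                if url in seen_urls:
                    continue  # skip results repeated across pages
                seen_urls.add(url)
            # Copy the item and overwrite its position with the global one.
            merged.append({**item, "position": len(merged) + 1})
    return merged


if __name__ == "__main__":
    page_1 = [{"url": "https://a.example/"}, {"url": "https://b.example/"}]
    page_2 = [{"url": "https://b.example/"}, {"url": "https://c.example/"}]
    for row in merge_pages([page_1, page_2]):
        print(row)
```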

Google Search Scraper API for Your Data Needs

Gain access to real-time data at any scale without worrying about proxy setup or blocks.
