Web Scraping API

Extract structured data from any website – without CAPTCHAs, IP blocks, or complex setup. Stream results in HTML, JSON, CSV, PNG, XHR, or Markdown directly into your workflows and AI agents.

- 125M+ IPs worldwide

- 99.99% success rate

- 200 requests per second

- 100+ ready-made templates

- 7-day free trial

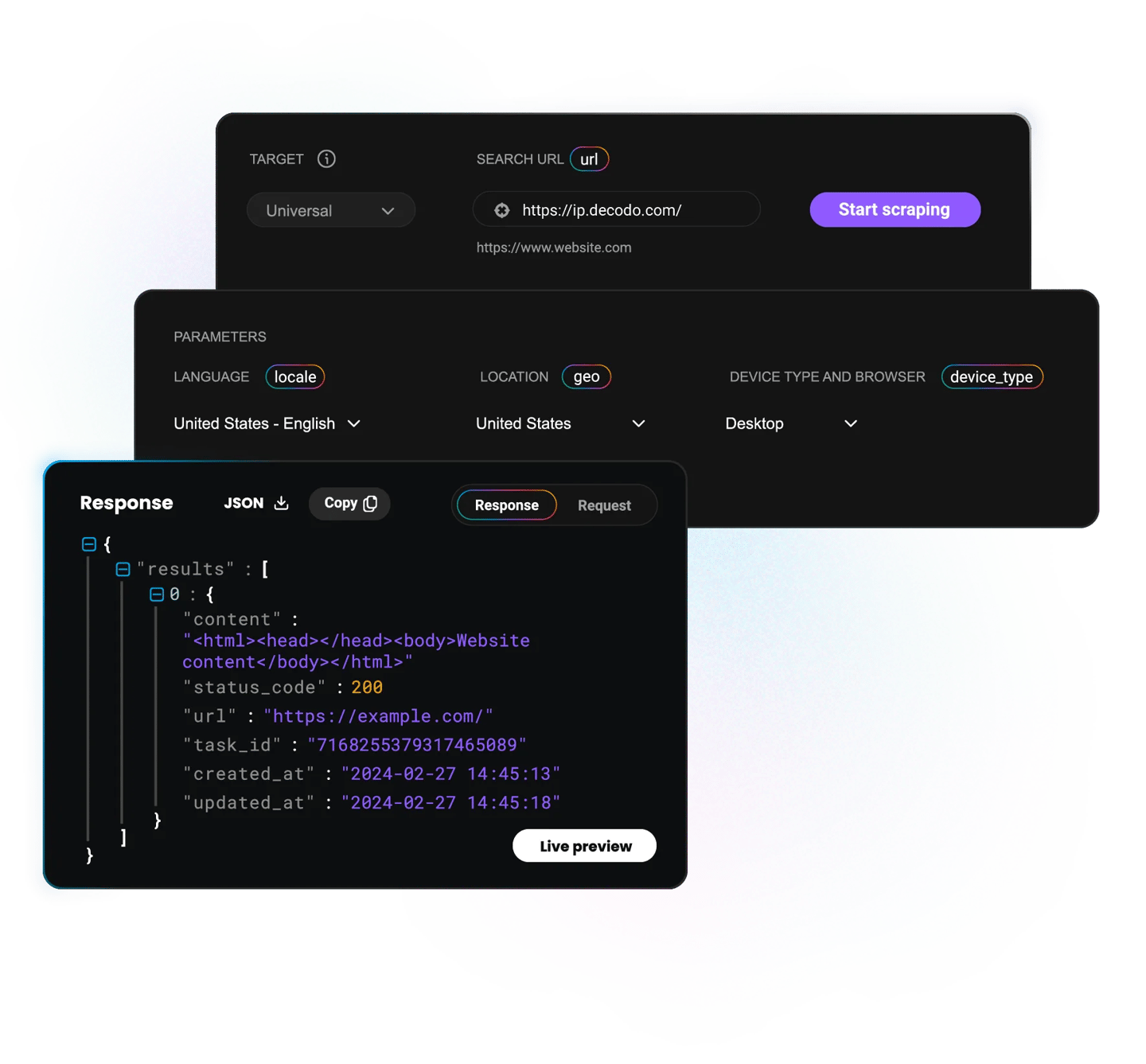

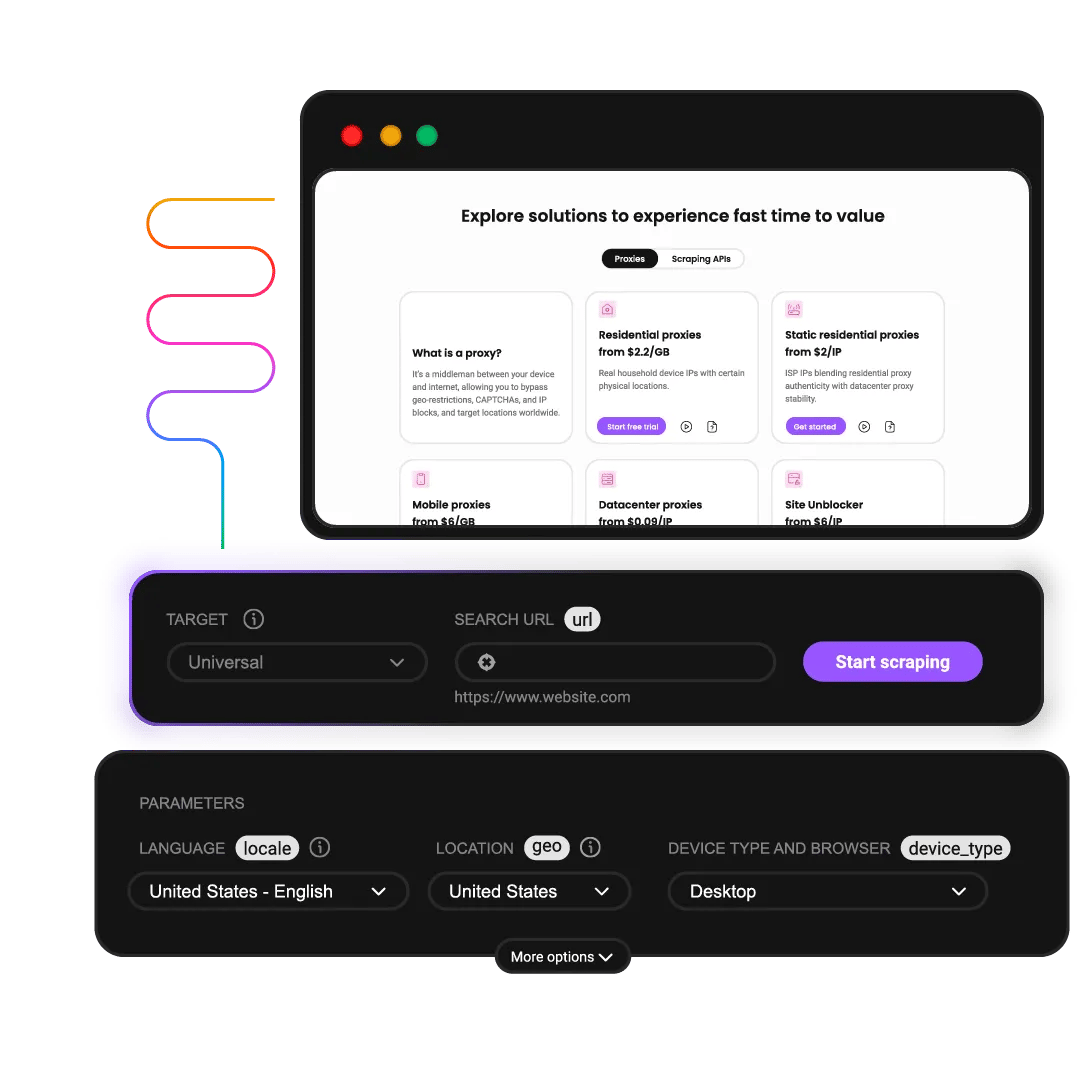

Test drive our Web Scraping API

Scraping the web has never been easier. Get a taste of what our Scraping API is capable of right here and now.

Explore our plans for every scraping task

Pick a plan that suits you. Get ahead with our powerful Scraping API.

| Requests | List price (per 1K req) | Discounted price (per 1K req) | Total (+ VAT, billed monthly) |
| --- | --- | --- | --- |
| 23K | $1.25 | $0.88 | $20 |
| 82K | $1.20 | $0.84 | $69 |
| 216K | $1.15 | $0.81 | $179 |
| 455K | $1.10 | $0.77 | $349 |
| 950K | $1.05 | $0.74 | $699 |
| 2M | $1.00 | $0.70 | $1,399 |

Use discount code SCRAPE30.
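As a quick check on the prices above, the lower per-1K price in each plan is consistent with taking 30% off the higher one and rounding to the nearest cent. The 30% figure is an assumption inferred from the code name SCRAPE30, not something the page states, and the names `LIST_PRICES`, `ASSUMED_DISCOUNT`, and `discounted_price` are made up for this sketch.

```python
from decimal import Decimal, ROUND_HALF_UP

# Higher per-1K prices, copied from the plans above.
LIST_PRICES = ["1.25", "1.20", "1.15", "1.10", "1.05", "1.00"]

# Assumption: SCRAPE30 means 30% off. The page does not state this.
ASSUMED_DISCOUNT = Decimal("0.30")


def discounted_price(list_price: str, discount: Decimal = ASSUMED_DISCOUNT) -> Decimal:
    """Apply the discount and round half-up to whole cents."""
    price = Decimal(list_price) * (Decimal("1") - discount)
    return price.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    for list_price in LIST_PRICES:
        print(f"${list_price} per 1K -> ${discounted_price(list_price)} per 1K")
```

`Decimal` is used instead of floats so that values such as 0.875 round up to 0.88 rather than being affected by binary floating-point representation.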

With each plan, you access:

- 99.99% success rate

- 100+ pre-built templates

- Support for search, pagination, and filtering

- Results in HTML, JSON, or CSV

- n8n integration

- LLM-ready Markdown format

- MCP server

- JavaScript rendering

- 24/7 tech support

14-day money-back

SSL Secure Payment

Your information is protected by 256-bit SSL

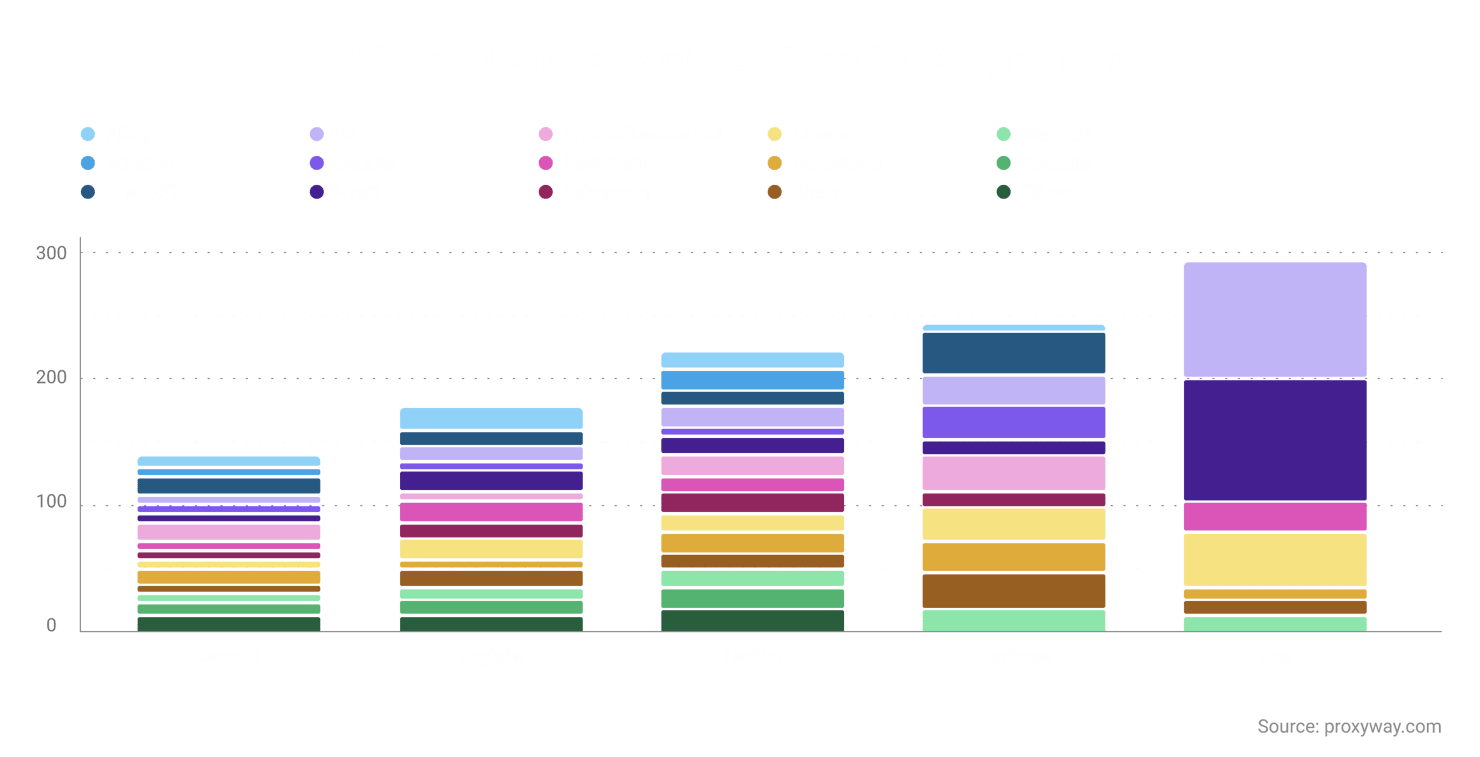

Rated best value by industry experts

Proxyway's independent 2025 analysis puts us at the top for cost efficiency. Spend less time managing budgets and more time extracting value from data.

Explore our ready-to-use SERP scraping templates

Explore a wide range of preconfigured templates to speed up your scraping projects. Whether you need real-time data from Google or want to expand the reach of your insights across multiple search engines, our ready-to-use templates make it fast and easy to get accurate results.

- All

- Bing

- Google

- AI

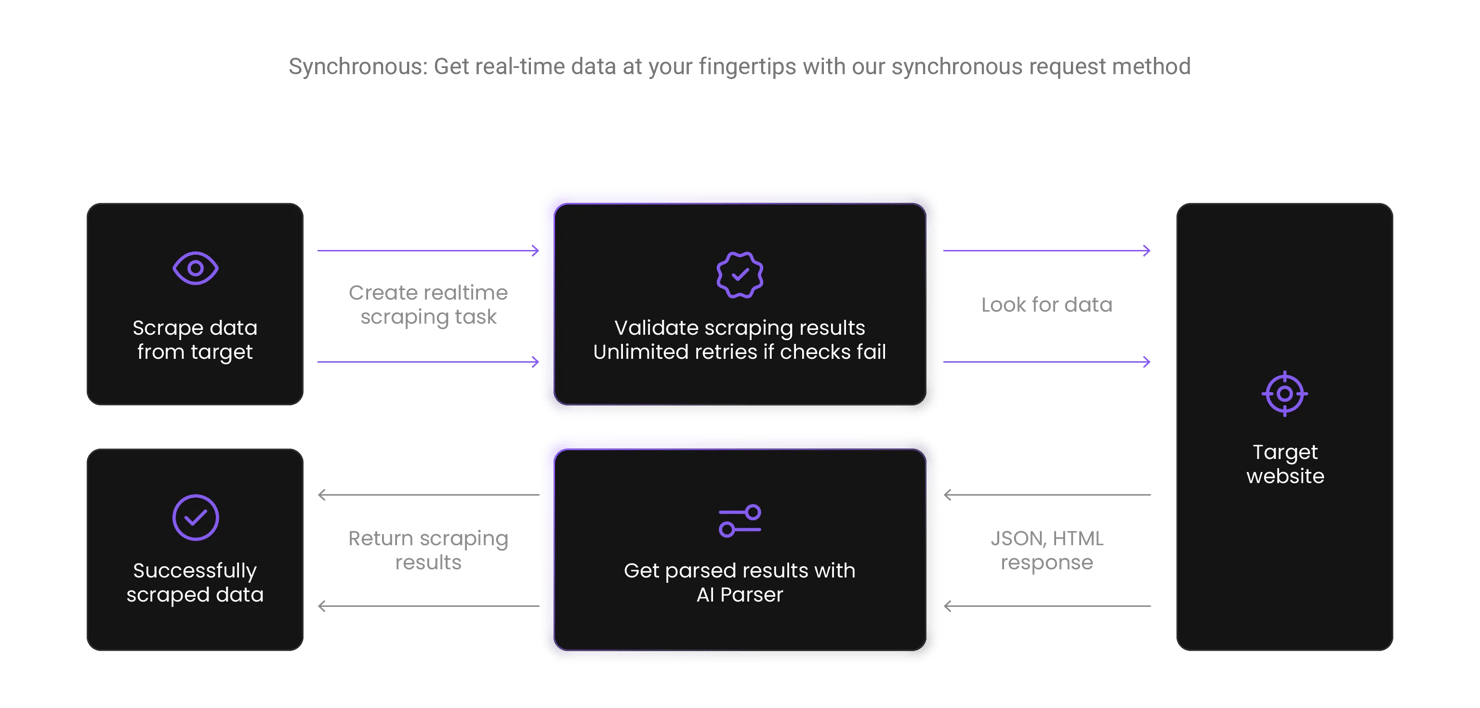

How does Web Scraping API work?

Our Web Scraping API mimics real user traffic to outsmart anti-bot systems and capture accurate data. The API delivers results in HTML, JSON, CSV, or Markdown format, and automatically retries the request several times if it fails.
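The automatic retry behavior described above can be pictured with a small client-side sketch. This is not Decodo's implementation: `ScrapeError`, `fetch_with_retries`, the attempt count, and the exponential backoff delays are all assumptions chosen for illustration, and the `fetch` callable stands in for whatever actually performs the request.

```python
import time
from typing import Callable


class ScrapeError(Exception):
    """Hypothetical error raised by a fetch callable when an attempt fails."""


def fetch_with_retries(
    fetch: Callable[[], str],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch() until it succeeds or max_attempts is reached.

    After the n-th failed attempt (counting from 0) it waits
    base_delay * 2**n seconds before trying again.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fetch()
        except ScrapeError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last failure
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


if __name__ == "__main__":
    attempts = {"count": 0}

    def flaky_fetch() -> str:
        # Simulated target: fails twice, then returns a page.
        attempts["count"] += 1
        if attempts["count"] < 3:
            raise ScrapeError("blocked")
        return "<html>ok</html>"

    print(fetch_with_retries(flaky_fetch, base_delay=0.1))
```

Passing `sleep` as a parameter keeps the function easy to test without real waiting. With a managed API, this kind of loop runs on the provider's side, so the caller only sees the final result.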

Find the right scraping solution for you

Explore our scraping product line and pick what suits you best. From Core to Advanced solutions, we've got you covered.

| Feature | Core | Advanced |
| --- | --- | --- |
| Success rate | 100% | 100% |
| Payment | By number of requests | By number of requests |
| Advanced geo-targeting | US, CA, GB, DE, FR, NL, JP, RO | Worldwide |
| Requests per second | 30+ | 200 |
| Output | HTML | HTML, JSON, CSV, Markdown |
| API playground | | |
| Proxy management | | |
| Pre-built scraper | | |
| Anti-bot bypassing | | |
| Free trial | | |
| Task scheduling | | |
| Premium proxy pool | | |
| Ready-made templates | | |
| JavaScript rendering | | |

Why the scraping community chooses Decodo

| Manual scraping | Other APIs | Decodo |
| --- | --- | --- |
| Manage proxy rotation yourself | Limited proxy pools | 125M+ IPs with global coverage |
| Build CAPTCHA solvers | Frequent CAPTCHA blocks | Advanced browser fingerprinting |
| Handle retries manually | Pay for failed requests | Only pay for successful requests |
| Maintenance overhead | Complex documentation | Ready-made templates |
| Days to implement | Limited output formats | JSON, CSV, HTML, Markdown formats |

Start collecting data in seconds

Ready-made scrapers

You bring the targets – we'll bring the data. Our ready-made (yet highly customizable) scrapers come with pre-set parameters to help you save time and access the data you need within seconds.

Resources for a quick start

Streamline your development with detailed code samples in popular programming languages like Python, PHP, and Node.js on our GitHub, or check out our quick start guides for setup tips.

Get a 7-day free trial and collect data without a single restriction

Learn how our scraper works

Simplify your data collection tasks with our ready-made scraping solution within minutes. Get real-time data from even the most protected websites without any hassle.

What people are saying about us

We're thrilled to have the support of our 135K+ clients and the industry's best.

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.

Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.

RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.

Cybereg

Frequently asked questions

What is Web Scraping API?

Web Scraping API is our automated data scraping solution that allows real-time data extraction from a huge range of websites without geo-restrictions, CAPTCHAs, or IP blocks. Our all-in-one scraper handles everything from JavaScript rendering to geo-targeting to deliver data ready for automating your workflows.

Is it legal to use a scraper to collect data from websites?

While you can scrape public data, you must check the website’s terms of service for specific conditions and restrictions. When in doubt, consult a legal expert before scraping data.

Is Decodo's Web Scraping API good for AI workflows?

Yes, Web Scraping API integrates seamlessly with automation tools like n8n and MCP servers, making it straightforward to collect and structure data for AI agents and LLMs. With scalability and support for structured outputs such as JSON and Markdown, it’s a strong fit for AI-driven workflows.

How is web scraping used in business?

Web scraping is how today’s teams automate data collection to gain a competitive edge. With our Web Scraping API, you skip the manual work and anti-bot measures, and can focus on extracting insights that drive strategies.

Web scraper use cases include:

- Competitive analysis. Monitor feature updates and customer sentiment to improve inventory and advertising strategies.

- Price intelligence. Track pricing and stock to offer competitive prices and identify discount potential.

- Market research. Get structured data from product listings, review sections, and public news sites to identify trends, customer needs, and new positioning opportunities.

- Lead generation. Scrape company directories, job boards, and public profiles to automatically feed CRM systems with fresh, quality leads.

- Sentiment analysis. Analyze reviews, forums, and niche communities for product feedback.

- Real estate and finance. Collect listing data, blog insights, and transaction records for accurate competitive benchmarking and trend forecasting.

- AI training datasets. Build quality datasets for LLMs, AI agents, and recommendation engines from publicly available content.

How to choose the best web scraping tool?

Follow these guidelines to find the web scraper that best fits your needs:

- Define your data goals. Know upfront what you’ll be scraping (e.g. eCommerce listings) and what format you want it in (e.g. JSON) to find scrapers actually designed for the job.

- Check anti-detection features. Keep an eye out for scrapers that handle proxy management and are backed by a vast IP pool. These help avoid CAPTCHAs, geo-restrictions, and IP blocks for uninterrupted scraping.

- Prioritize scaling and automation. Future-proof your data collection projects with scrapers that scale with your needs and are easy to automate with features like task scheduling, bulk upload, and automatic retries.

- Check success rates and reliability. Go with scrapers that have success-based pricing and guarantee 99.99%+ scraping success rates.

- Consider integrations and support. See how well the scraper can be integrated into your infrastructure by checking supported coding languages. Bonus points for 24/7 tech support.

- Test out ease of use. Look for scrapers with full documentation, quick onboarding, and scraping templates. If possible, take advantage of the free trial to see whether the scraper is as easy to use as it claims.

- Don’t ignore compliance. Choose providers that take data ethics seriously by sourcing proxies sustainably and complying with data collection laws like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

If you’re in the market for a scraper, consider our Web Scraping API, as it has all of the above and more. Try it out for free now!

What are ready-made web scraping templates?

Ready-made templates are presets that streamline scraping for common use cases. Instead of writing custom code from scratch, you launch a tested template and adjust its code example through easily customizable options. This makes your data collection projects more efficient and requires zero coding.

Scraping API for All Your Data Needs

Gain access to real-time data at any scale without worrying about proxy setup or blocks.

14-day money-back option