SERP Scraper API

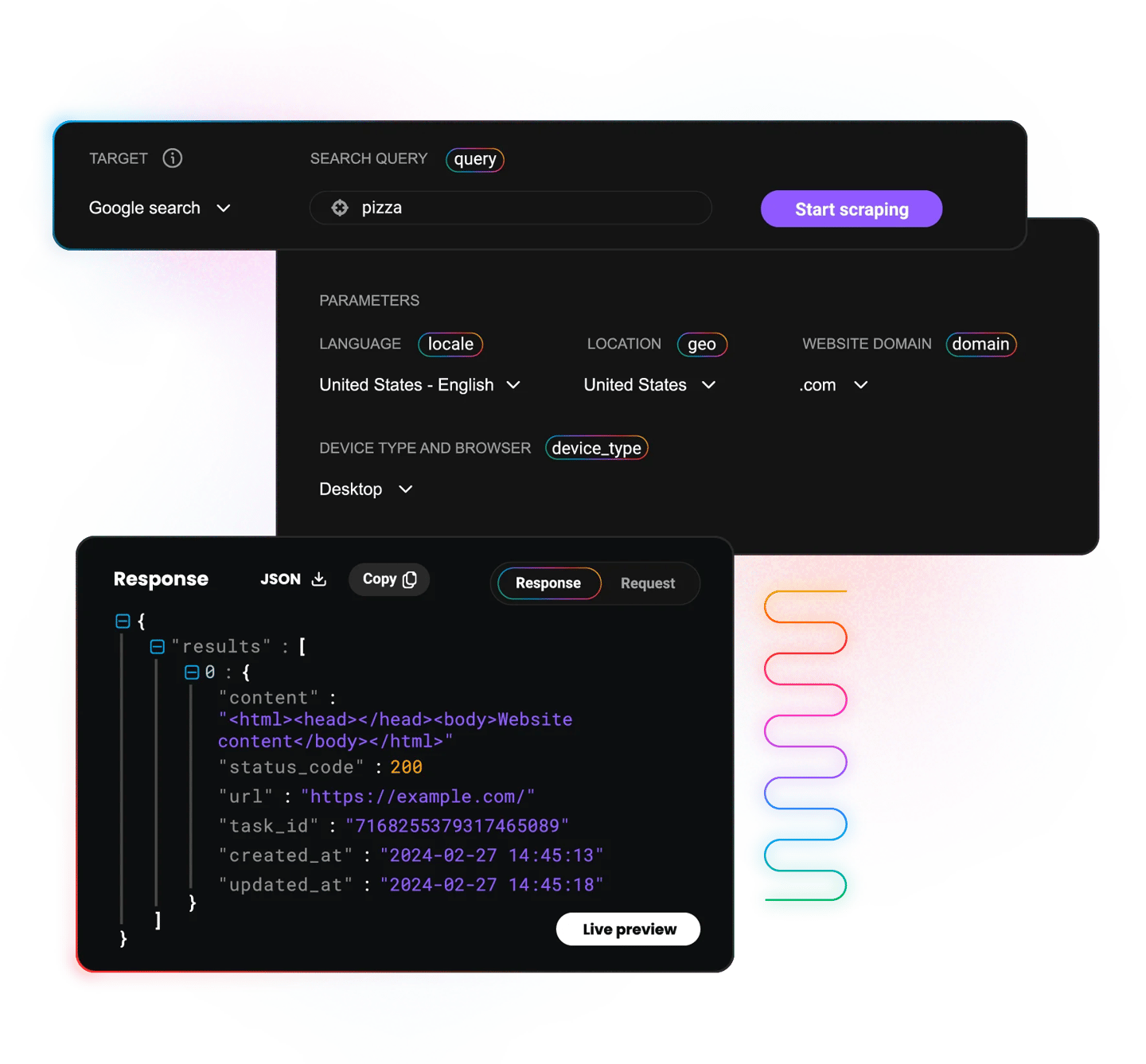

Access real-time search engine results with our SERP Scraping API*. Effortlessly collect structured data in JSON, CSV, Markdown, PNG, XHR, or HTML formats with precise geo-targeting.

* This scraper is now part of the Web Scraping API.

- Zero CAPTCHAs

- 99.99% success rate

- 195+ locations

- Task scheduling

- 7-day free trial

Why use a SERP Scraping API?

Getting reliable search engine data is more than just a technical challenge. It’s critical for making informed marketing and SEO decisions. Without a streamlined solution, teams waste time collecting fragmented data and struggle to maintain consistency across regions and devices. Our SERP Scraping API centralizes this process, providing fast, scalable, and accurate insights that support smarter strategies. Skip the challenge of:

- Building your own scraper, which takes weeks and breaks whenever search engines change their layout.

- Dealing with IP bans, CAPTCHAs, and geo-restrictions, the major blockers to reliable SERP data.

- Wasting hours manually tracking SERPs for ranking changes.

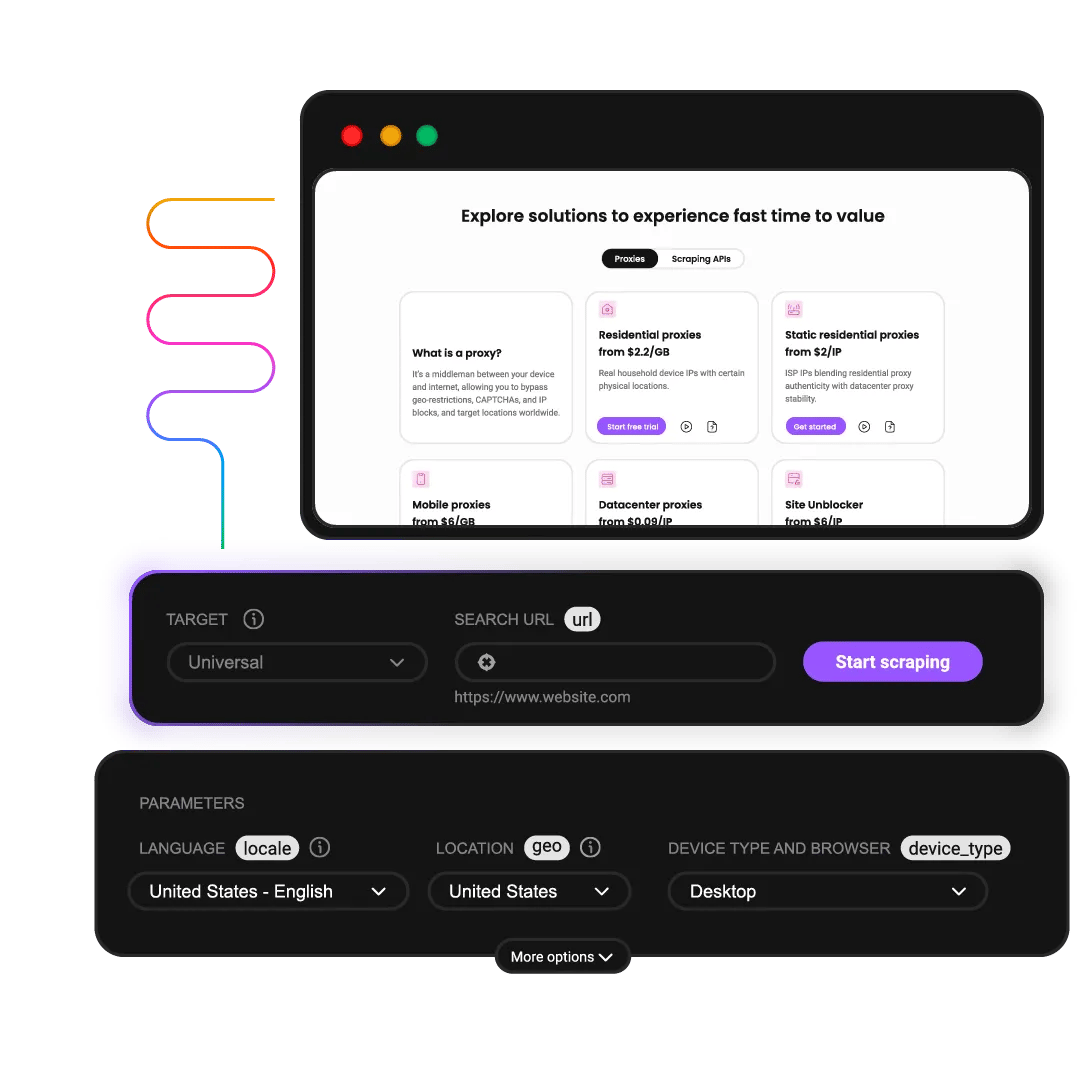

Try Decodo’s SERP Scraping API and see its speed and reliability in action

SERP scraping has never been easier. Experience what Decodo’s SERP Scraping API can deliver in real time.

Explore our ready-to-use SERP scraping templates

Explore a wide range of preconfigured templates to speed up your scraping projects. Whether you need real-time data from Google or want to expand the reach of your insights across multiple search engines, our ready-to-use templates make it fast and easy to get accurate results.

Template categories: Bing, Google, AI.

Choose the SERP data scraping solution that fits your needs

Discover our full SERP Scraper API lineup and select the solution that matches your goals, from Core to Advanced plans.

| | Core | Advanced |
| --- | --- | --- |
| Success rate | 100% | 100% |
| Payment | No. of requests | No. of requests |
| Advanced geo-targeting | US, CA, GB, DE, FR, NL, JP, RO | Worldwide |
| Requests per second | 30+ | 200 |
| Output | HTML | JSON, CSV, Markdown, PNG, XHR, HTML |

Features compared across both plans:

- API playground

- Proxy management

- Pre-built scraper

- Anti-bot bypassing

- Task scheduling

- Premium proxy pool

- Ready-made templates

- JavaScript rendering
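The plan differences listed above (geo-targeting, requests per second, and output formats) can be expressed as a small lookup. The sketch below is only an illustration of that comparison: the `Plan` class and `pick_plan` helper are hypothetical names, and the Core rate of "30+" is treated as a floor of 30 requests per second, which is an assumption.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class Plan:
    name: str
    countries: Optional[FrozenSet[str]]  # None means worldwide coverage
    requests_per_second: int
    outputs: FrozenSet[str]


# Values copied from the comparison above; "30+" is assumed to mean at least 30.
CORE = Plan(
    "Core",
    frozenset({"US", "CA", "GB", "DE", "FR", "NL", "JP", "RO"}),
    30,
    frozenset({"HTML"}),
)
ADVANCED = Plan(
    "Advanced",
    None,
    200,
    frozenset({"JSON", "CSV", "Markdown", "PNG", "XHR", "HTML"}),
)


def supports(plan: Plan, country: str, output: str, rps: int) -> bool:
    """Check whether a plan covers the country, output format, and request rate."""
    country_ok = plan.countries is None or country.upper() in plan.countries
    return country_ok and output in plan.outputs and rps <= plan.requests_per_second


def pick_plan(country: str, output: str, rps: int) -> Optional[Plan]:
    """Return the first listed plan that meets the requirements, or None."""
    for plan in (CORE, ADVANCED):
        if supports(plan, country, output, rps):
            return plan
    return None
```

For example, `pick_plan("US", "HTML", 10)` resolves to Core, while asking for JSON output or a country outside the Core list resolves to Advanced.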

Why the scraping community chooses Decodo

| Manual scraping | Other APIs | Decodo |
| --- | --- | --- |
| Manage proxy rotation yourself | Limited proxy pools | 125M+ IPs with global coverage |
| Build CAPTCHA solvers | Frequent CAPTCHA blocks | Advanced browser fingerprinting |
| Handle retries manually | Pay for failed requests | Only pay for successful requests |
| Maintenance overhead | Complex documentation | 100+ ready-made templates |
| Days to implement | Limited output formats | JSON, CSV, Markdown, PNG, XHR, HTML output |

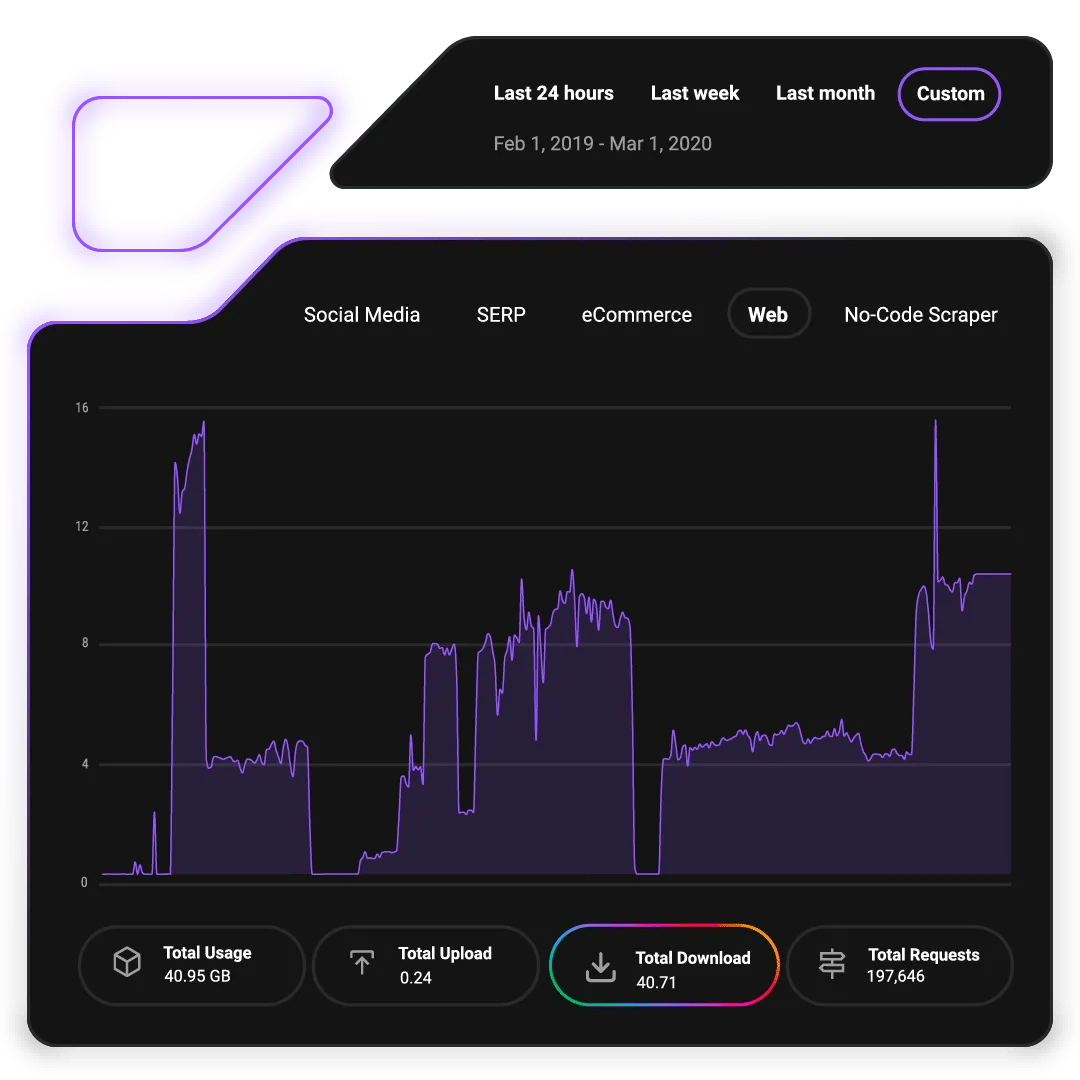

Compare SERP Scraping API pricing plans

Pick the perfect plan for your SERP scraping projects – flexible pricing lets you scale search engine data collection with ease.

| Requests | Regular price (per 1K req) | Discounted price (per 1K req) | Total (billed monthly) |
| --- | --- | --- | --- |
| 23K | $1.25 | $0.88 | $20 + VAT |
| 82K | $1.20 | $0.84 | $69 + VAT |
| 216K | $1.15 | $0.81 | $179 + VAT |
| 455K | $1.10 | $0.77 | $349 + VAT |
| 950K | $1.05 | $0.74 | $699 + VAT |
| 2M | $1.00 | $0.70 | $1,399 + VAT |

Use discount code SCRAPE30.
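The two per-1K prices shown for each plan appear to differ by 30%, which fits the SCRAPE30 code name. The sketch below checks that arithmetic and estimates a pre-VAT monthly cost for a given volume. The 30% figure and the half-up rounding are assumptions inferred from the listed prices, and the function names are hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP

# Listed plans: (requests per month, regular USD price per 1K requests).
PLANS = [
    (23_000, Decimal("1.25")),
    (82_000, Decimal("1.20")),
    (216_000, Decimal("1.15")),
    (455_000, Decimal("1.10")),
    (950_000, Decimal("1.05")),
    (2_000_000, Decimal("1.00")),
]

# Assumption: the SCRAPE30 code takes 30% off the regular rate.
DISCOUNT = Decimal("0.30")
CENT = Decimal("0.01")


def discounted_rate(regular: Decimal) -> Decimal:
    """Regular per-1K rate after the assumed discount, rounded half up to cents."""
    rate = regular * (Decimal("1") - DISCOUNT)
    return rate.quantize(CENT, rounding=ROUND_HALF_UP)


def estimated_monthly_cost(requests: int, rate_per_1k: Decimal) -> Decimal:
    """Approximate pre-VAT monthly cost for a request volume at a per-1K rate."""
    cost = Decimal(requests) / Decimal(1000) * rate_per_1k
    return cost.quantize(CENT, rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    for requests, regular in PLANS:
        rate = discounted_rate(regular)
        print(requests, rate, estimated_monthly_cost(requests, rate))
```

The estimates land close to the listed monthly totals but do not match them exactly, so treat the listed totals as authoritative.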

Need more?

Chat with us and we’ll find the best solution for you

With each plan, you access:

- 99.99% success rate

- 100+ pre-built templates

- Supports search, pagination, and filtering

- Results in HTML, JSON, or CSV

- n8n integration

- LLM-ready markdown format

- MCP server

- JavaScript rendering

- 24/7 tech support

14-day money-back

SSL Secure Payment

Your information is protected by 256-bit SSL

Gain structured insights with our SERP Scraping API

Collect structured search engine results data at any scale with flexible output formats, real-time delivery, and more.

Easy integration

Set up with code examples on GitHub, Postman collections, and our quick start guides.

Advanced anti-bot protection

Leverage integrated browser fingerprints for seamless data collection.

Ready-made scraping templates

Get fast access to real-time data with the help of our customizable ready-made scrapers.

Proxy management

Enjoy seamless, uninterrupted scraping with automated proxy rotation.

Dynamic rendering

Accurately capture dynamic and JavaScript-rendered SERP content using built-in headless browsers.

Proxy integration

Avoid blocks and CAPTCHAs while collecting data with 125M+ proxies under the hood.

Real-time SERP feature extraction

Instantly identify and extract rich SERP features such as featured snippets, People Also Ask, local packs, and ad placements.

Easy scalability

Scrape thousands of SERPs simultaneously with high concurrency and intelligent load balancing for enterprise-scale projects.

Automated error handling

Benefit from robust error detection, automatic retries, and intelligent backoff strategies to maximize successful data retrieval.
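The API is described as handling retries and backoff on its side. If you also want a safety net in your own client, a generic pattern looks like the sketch below. It is a client-side illustration, not the API's internal strategy: the `retry_with_backoff` helper and its defaults are hypothetical.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying on any exception with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return call()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # The delay ceiling doubles on each attempt, capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter spreads retries so clients do not retry in lockstep.
            sleep(random.uniform(0, ceiling))
            attempt += 1
```

Passing `sleep` as a parameter keeps the helper easy to test, and in real use you would typically narrow the caught exception to network or rate-limit errors.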

Start scraping SERP in seconds

Ready-made SERP scraper

Our ready-made (yet highly customizable) scrapers come with pre-set parameters to help you save time and access the SERP data you need within seconds.

Here are some of the key data points you can extract:

- Organic search results (titles, URLs, snippets)

- Paid ads (ad headlines, URLs, display paths, descriptions)

- Featured snippets and answer boxes

- People Also Ask (PAA) questions and answers

- Local pack/map listings (business names, addresses, phone numbers, reviews)

- Site links and related searches

- Search result positions and ranking changes

- Rich results (e.g., ratings, prices, event info)

- Top stories and news results

- Images and video carousels appearing in SERPs
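To give a feel for working with these data points, here is a minimal sketch that flattens organic results and compares positions between two snapshots. The response shape (`organic`, `pos`, `title`, `url`, `desc`) is a hypothetical stand-in, so check the actual field names in the API's parsed output before relying on it.

```python
from typing import Any, Dict, List

# Hypothetical parsed response; real field names may differ.
SAMPLE: Dict[str, Any] = {
    "organic": [
        {"pos": 1, "title": "Example A", "url": "https://a.example", "desc": "First snippet"},
        {"pos": 2, "title": "Example B", "url": "https://b.example", "desc": "Second snippet"},
    ],
    "related_searches": ["example query one", "example query two"],
}


def organic_results(parsed: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return organic results as flat rows, ordered by position."""
    rows = [
        {
            "position": item["pos"],
            "title": item["title"],
            "url": item["url"],
            "snippet": item.get("desc", ""),
        }
        for item in parsed.get("organic", [])
    ]
    return sorted(rows, key=lambda row: row["position"])


def rank_changes(previous: Dict[str, Any], current: Dict[str, Any]) -> Dict[str, int]:
    """Positions gained (positive) or lost (negative) for URLs in both snapshots."""
    before = {row["url"]: row["position"] for row in organic_results(previous)}
    after = {row["url"]: row["position"] for row in organic_results(current)}
    return {url: before[url] - after[url] for url in before if url in after}
```

URLs that appear in only one snapshot are skipped here; a rank tracker would usually report those separately as new or dropped results.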

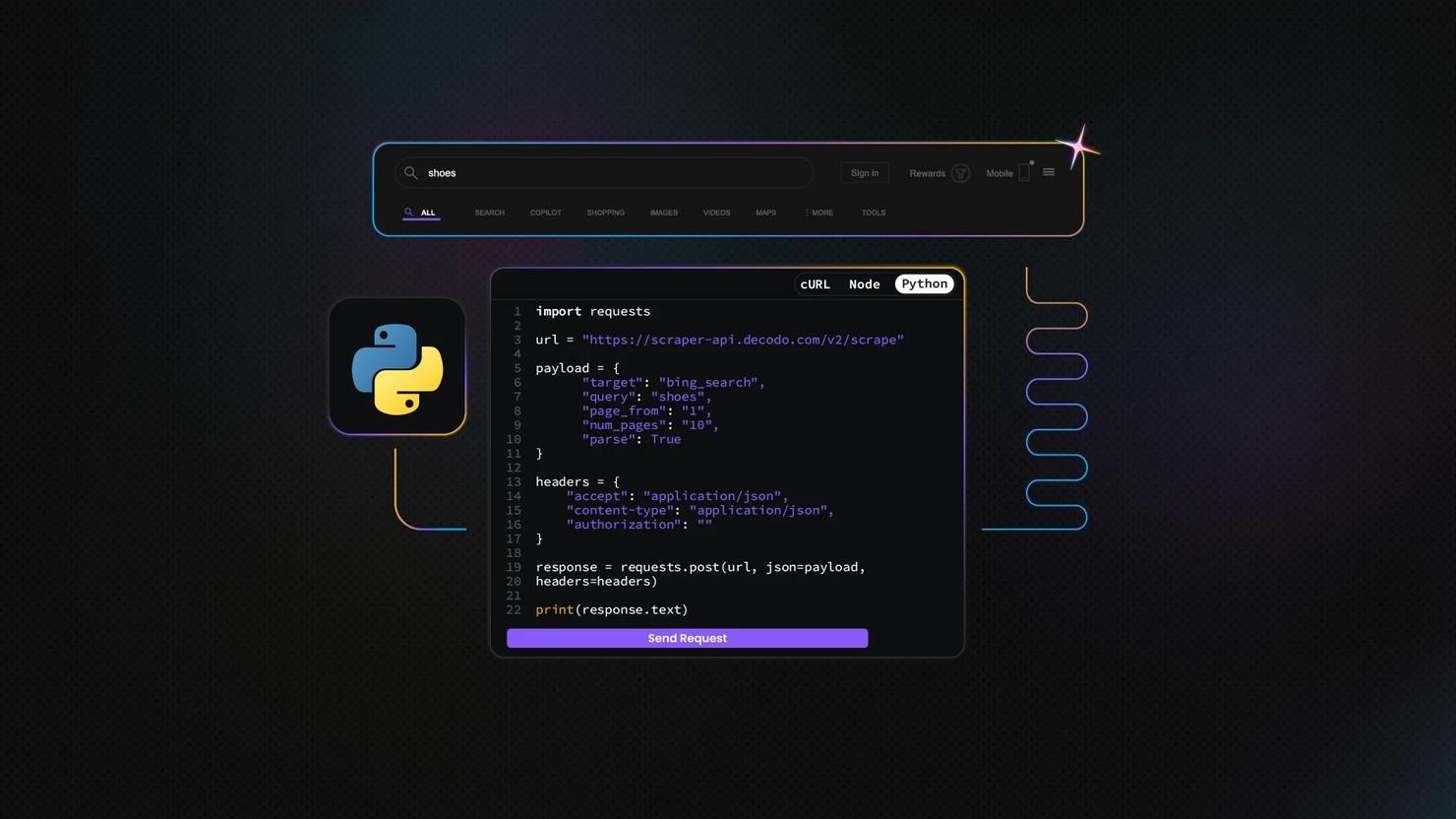

Developer-friendly resources

Fast-track your integration with ready-to-use code snippets for Python, PHP, cURL, and Node.js in our GitHub repository.

For step-by-step setup assistance, explore our easy-to-follow quick start guides designed to help you get up and running with the SERP Scraping API in minutes.

Integrate seamlessly with Python, Node.js, or cURL

Effortlessly connect the SERP Scraper API to your preferred stack, whether you're working in Python, Node.js, cURL, or other popular programming languages. Enjoy smooth, straightforward integration with any workflow or business application.
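As a rough idea of what a Python integration could look like, the sketch below builds an authenticated POST request using only the standard library. Everything specific in it is an assumption: the endpoint is a placeholder, and the payload fields (`target`, `query`, `parse`) and the use of HTTP Basic authentication should be verified against the official quick start guide.

```python
import base64
import json
import urllib.request
from typing import Any, Dict

# Placeholder endpoint: substitute the URL from the official quick start guide.
API_URL = "https://scraper-api.example.com/v2/scrape"


def build_request(username: str, password: str, query: str) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a search query."""
    # Hypothetical payload fields; check the API reference for the real names.
    payload = {"target": "google_search", "query": query, "parse": True}
    # Assumption: the API accepts HTTP Basic authentication.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def fetch(request: urllib.request.Request, timeout: float = 30.0) -> Dict[str, Any]:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes it easy to inspect or log the payload before any network call is made.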

Get data in JSON with AI Parser

Structured data at your fingertips in just a few clicks.

Get support every step of the way

Explore customer reviews and join our community of 85K+ users to get the most out of our SERP Scraper API.

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.

Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.

RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.

Cybereg

Frequently asked questions

What is a SERP API?

SERP Scraping API is a powerful tool designed to extract data from search engine result pages (SERPs) effortlessly and efficiently. Tailored for busy developers and businesses to save time, our SERP Scraping API eliminates the need to manage proxies, handle IP bans, or deal with CAPTCHAs. With Decodo’s SERP Scraping API, you can focus entirely on collecting structured data from popular search engines like Google, Bing, and more.

What are ready-made scrapers?

Ready-made scrapers are pre-configured tools within our Web Scraping API, designed for easy and quick data collection. They eliminate the need for extensive technical knowledge, custom scraper development, and proxy management, making them ideal for users seeking a low/no-code solution. By using ready-made scrapers, you can access and structure large data sets efficiently.

How long does it take for the SERP Scraping API to give the results back?

Our SERP Scraping API collects data in real time and returns results in your preferred format: HTML, JSON, or table. Whether you're tracking keyword performance, monitoring competitors, or analyzing ad campaigns, the API gives you accurate, up-to-date search engine data on demand.

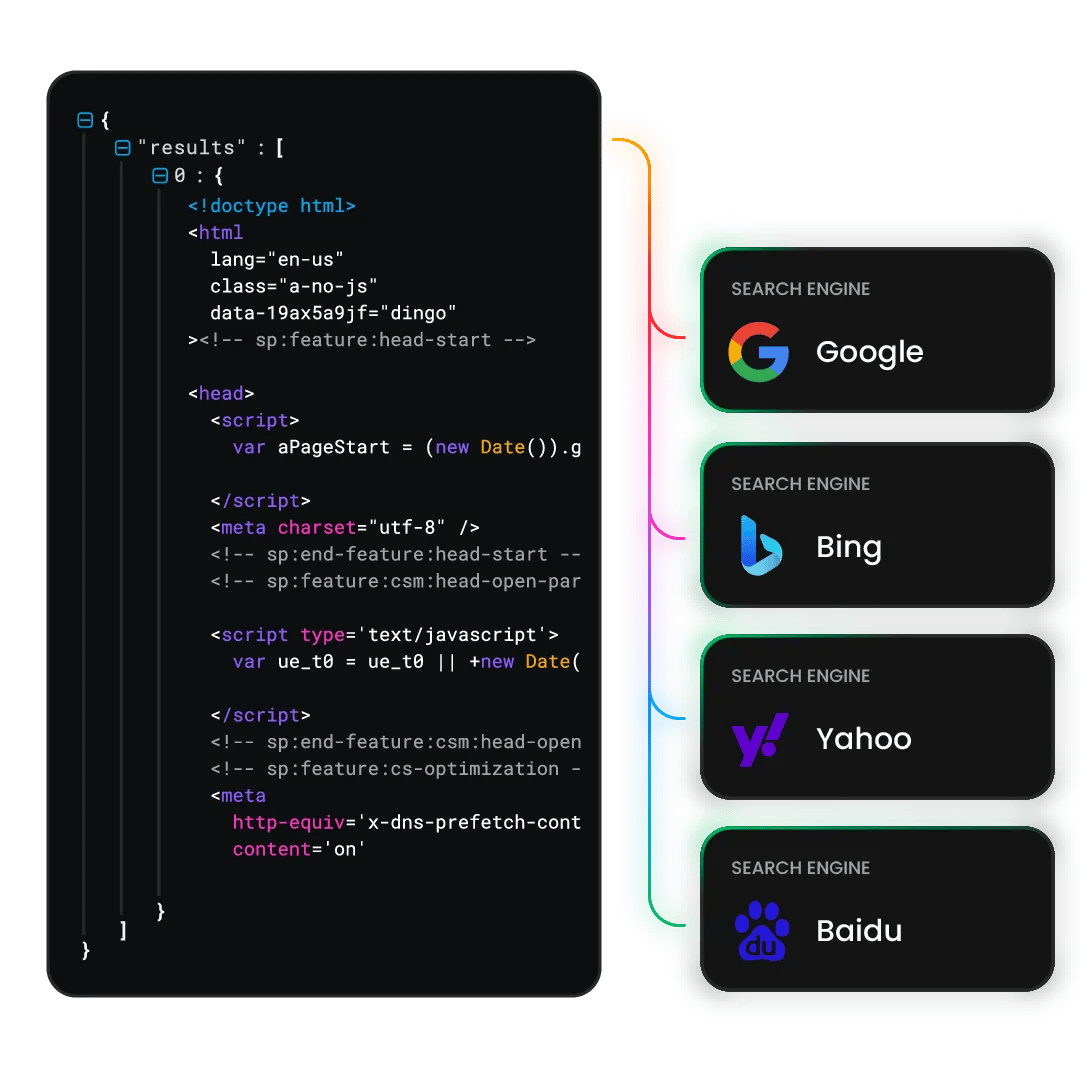

Which search engines are supported?

Our Web Scraping API retrieves real-time data from all major search engines, including Google, Yahoo, Baidu, and Bing.

Can I scrape localized search results with the SERP Scraping API?

Yes, you can scrape localized search results with the SERP Scraping API. The API supports geo-targeting, which allows you to collect search engine results pages (SERPs) from specific countries. This is particularly useful for tracking local search rankings, monitoring region-specific keyword performance, and gathering location-based competitive intelligence.

The API handles proxy management and anti-bot bypassing automatically, so you can focus on analyzing the data rather than dealing with technical barriers. For the most current geo-targeting options, check the Web Scraping API documentation.
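Country-level targeting lends itself to running the same query once per market. The sketch below prepares one request payload per country. The `geo` field name and the two-letter country codes are assumptions modeled on the country list shown for the Core plan, so confirm the accepted values in the Web Scraping API documentation.

```python
from typing import Dict, Iterable, List


def localized_payloads(query: str, countries: Iterable[str]) -> List[Dict[str, str]]:
    """Build one hypothetical request payload per unique country code."""
    seen = set()
    payloads = []
    for raw in countries:
        code = raw.strip().upper()
        if len(code) != 2 or not code.isalpha():
            raise ValueError(f"expected a two-letter country code, got {raw!r}")
        if code in seen:
            continue  # skip duplicates so each market is requested once
        seen.add(code)
        # "target" and "geo" are assumed field names, not confirmed API parameters.
        payloads.append({"target": "google_search", "query": query, "geo": code})
    return payloads
```

Calling `localized_payloads("coffee shop", ["us", "DE", "US"])` yields two payloads, one for US and one for DE, which you could then send and compare side by side.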

What output formats are available?

Depending on the target, you can get your results back in JSON, CSV, Markdown, PNG, XHR, or HTML.

Get SERP Scraper API for Your Data Needs

Gain access to real-time data at any scale without worrying about proxy setup or blocks.