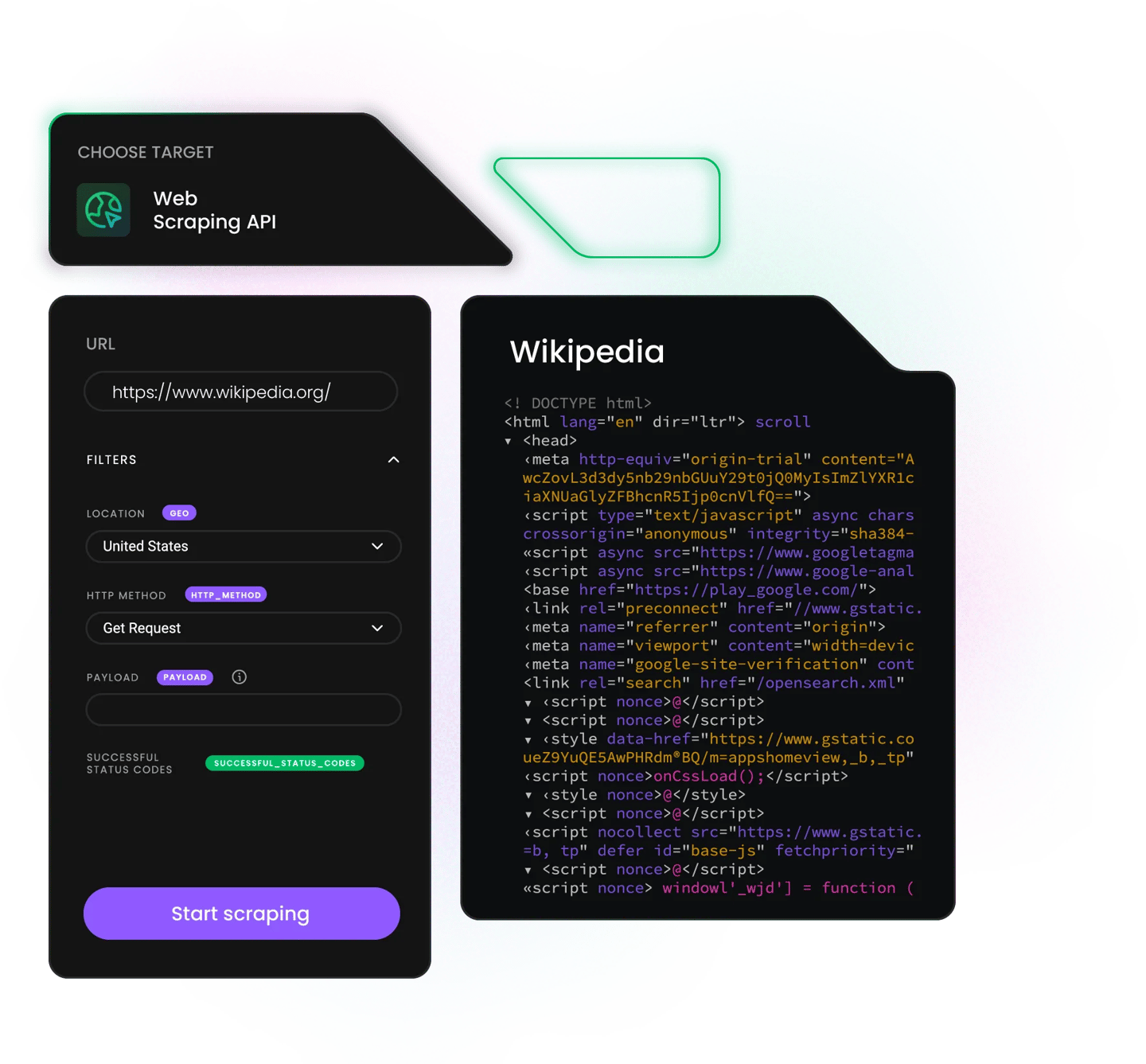

Wikipedia Scraper API

Collect article content, infoboxes, citations, and more with our ready-to-use Wikipedia scraper API* – real-time results without CAPTCHAs, IP blocks, or setup hassles.

* This scraper is now a part of the Web Scraping API.

- 125M+ IPs worldwide

- 99.99% success rate

- 200 requests per second

- 100+ ready-made templates

- 7-day free trial

Be ahead of the Wikipedia scraping game

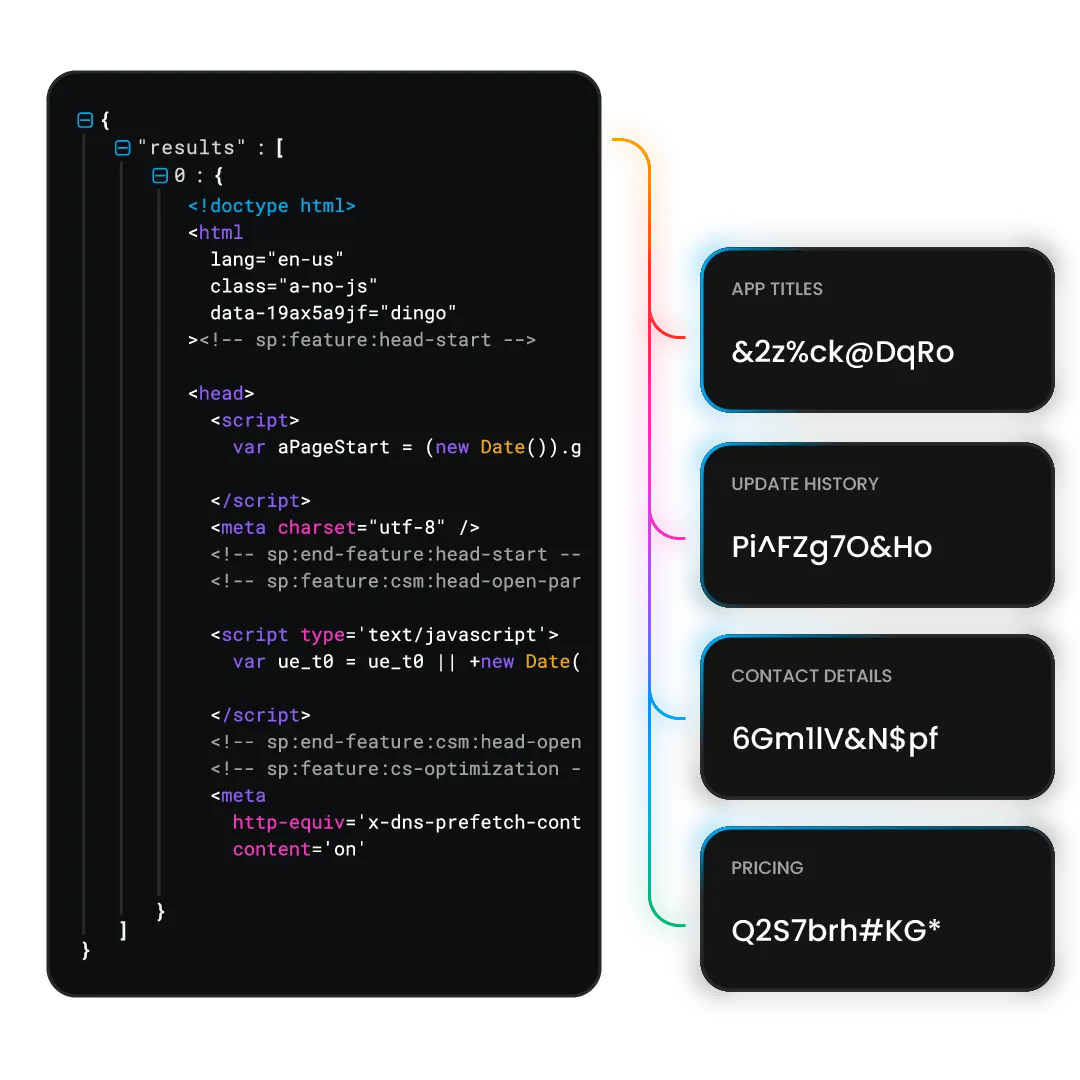

Extract data from Wikipedia

Web Scraping API is a powerful data collector that combines a web scraper and a pool of 125M+ residential, mobile, ISP, and datacenter proxies.

Here are some of the key data points you can extract with it:

- Article titles, summaries, and full content

- Infobox data (dates, locations, statistics)

- Internal and external links

- Categories and page hierarchies

- Tables, references, and citations

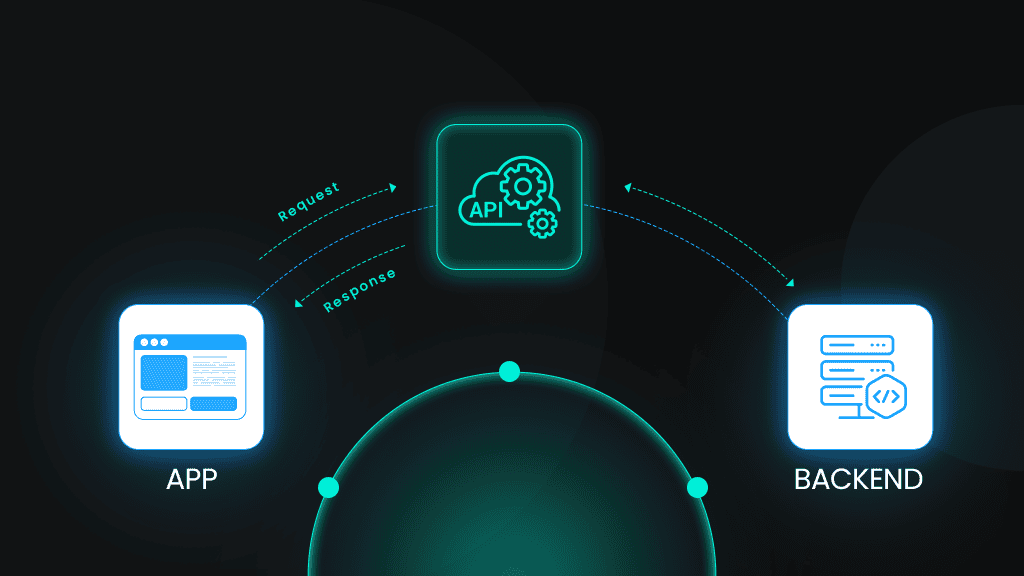

What is a Wikipedia scraper?

A Wikipedia scraper is a tool that automatically extracts data from Wikipedia pages.

With our Web Scraping API, you can send a single API request and receive the data you need in HTML format. Even if a request fails, we’ll automatically retry until the data is delivered. You'll only pay for successful requests.

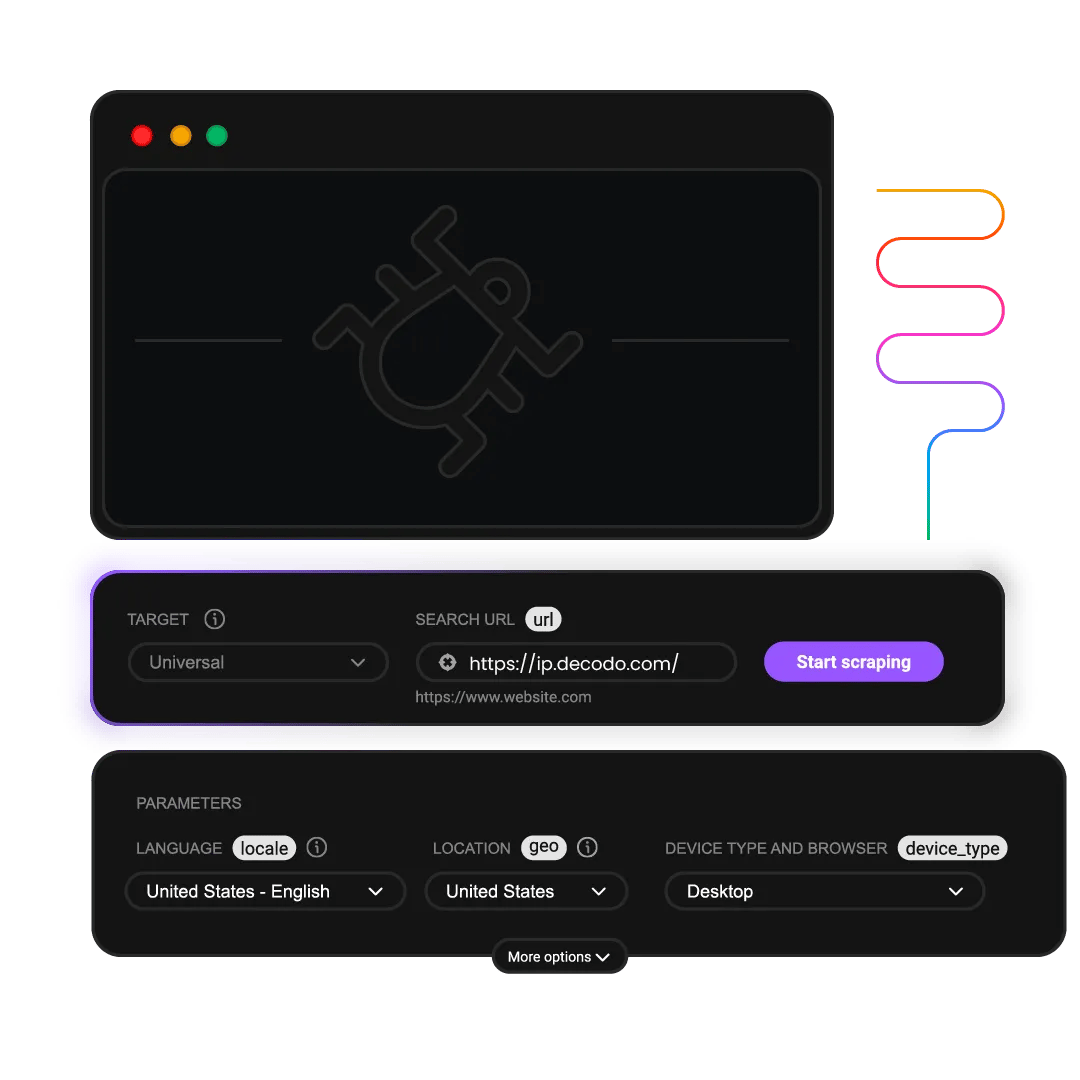

Designed by our experienced developers, this tool offers you a range of handy features:

- Built-in scraper

- JavaScript rendering

- Easy API integration

- 195+ geo-locations, including country-, state-, and city-level targeting

- No CAPTCHAs or IP blocks

Scrape Wikipedia with Python, Node.js, or cURL

Our Wikipedia Scraper API supports all popular programming languages for hassle-free integration with your business tools.
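
As a rough illustration of what a Python integration could look like, the sketch below prepares an authenticated POST request that asks a scraping endpoint to fetch a Wikipedia article. The endpoint URL (a placeholder), the `url` field in the JSON body, the Basic authentication scheme, and the helper names `build_scrape_request` and `fetch_html` are all assumptions made for this example, so check the quick start guides in your dashboard for the actual values.

```python
import base64
import json
import urllib.request

# Assumption: placeholder endpoint, not the real one. Replace it with the URL
# given in your dashboard or the quick start guides.
API_ENDPOINT = "https://scraper-api.example.com/v2/scrape"


def build_scrape_request(target_url: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the API to fetch target_url."""
    # Assumption: the API accepts a JSON body with a "url" field.
    body = json.dumps({"url": target_url}).encode("utf-8")
    # Assumption: credentials are passed with HTTP Basic authentication.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        API_ENDPOINT,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


def fetch_html(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the prepared request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Building and sending are kept separate here so that the request can be inspected or logged before any traffic is sent; `fetch_html(build_scrape_request(...))` would perform the actual call.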

Unlock the full potential of the Wikipedia scraper API

Scrape Wikipedia with ease using our powerful API. From JavaScript rendering to built-in proxy integration, we help you get the data you need without blocks or CAPTCHAs.

Flexible output options

Retrieve clean HTML results ready for your custom processing needs.

100% success

Get charged for the Wikipedia data you actually receive – no results means no costs.

Real-time or on-demand results

Decide when you want your data: scrape instantly, or schedule the request for later.

Advanced anti-bot measures

Use advanced browser fingerprinting to navigate around CAPTCHAs and detection systems.

Easy integration

Plug our Wikipedia scraper into your apps with quick start guides and code examples.

Proxy integration

Access data worldwide with 125M+ IPs to dodge geo-blocks and IP bans.

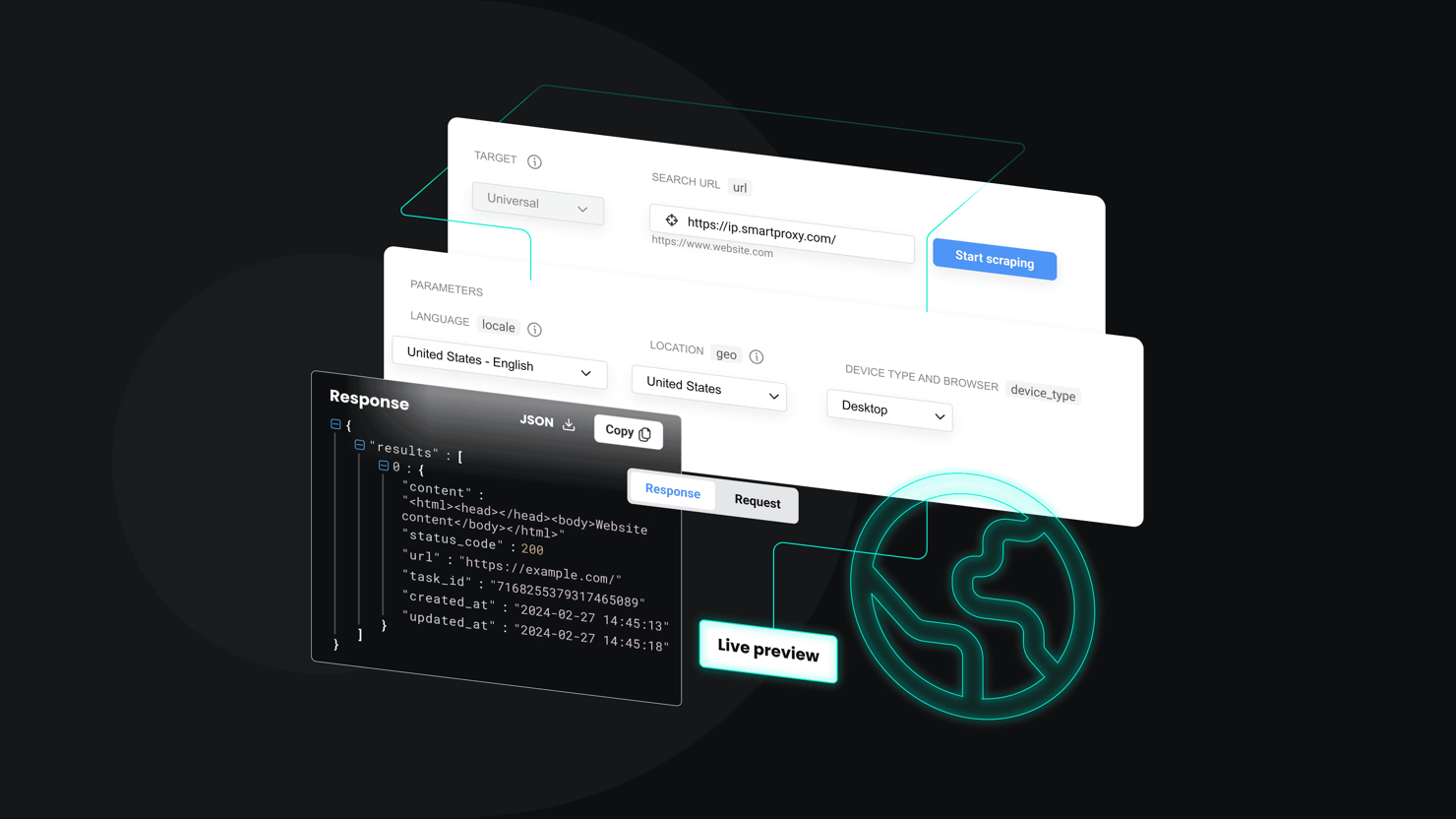

API Playground

Run test requests instantly through our interactive API Playground available in the dashboard.

Find the right Wikipedia data scraping solution for you

Explore our Wikipedia scraper API offerings and choose the solution that suits you best – from Core scrapers to Advanced solutions.

| Feature | Core | Advanced |
| --- | --- | --- |
| Success rate | 100% | 100% |
| Payment | No. of requests | No. of requests |
| Advanced geo-targeting | US, CA, GB, DE, FR, NL, JP, RO | Worldwide |
| Requests per second | 30+ | Unlimited |

API playground

Proxy management

Pre-built scraper

Anti-bot bypassing

Task scheduling

Premium proxy pool

Ready-made templates

JavaScript rendering

Explore our pricing plans for any Wikipedia scraping demand

Start collecting real-time data from Wikipedia and stay ahead of the competition.

| Requests | Regular price (per 1K req) | Discounted price (per 1K req) | Total (+ VAT, billed monthly) |
| --- | --- | --- | --- |
| 23K | $1.25 | $0.88 | $20 |
| 82K | $1.20 | $0.84 | $69 |
| 216K | $1.15 | $0.81 | $179 |
| 455K | $1.10 | $0.77 | $349 |
| 950K | $1.05 | $0.74 | $699 |
| 2M | $1.00 | $0.70 | $1399 |

Use discount code: SCRAPE30

Need more?

Chat with us and we’ll find the best solution for you

With each plan, you access:

- 99.99% success rate

- 100+ pre-built templates

- Supports search, pagination, and filtering

- Results in HTML, JSON, or CSV

- n8n integration

- LLM-ready markdown format

- MCP server

- JavaScript rendering

- 24/7 tech support

14-day money-back

SSL Secure Payment

Your information is protected by 256-bit SSL

What people are saying about us

We're thrilled to have the support of our 130K+ clients and the industry's best

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.

Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.

RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.

Cybereg

Decodo blog

Build knowledge on our solutions and improve your workflows with step-by-step guides, expert tips, and developer articles.

Most recent

Proxy Anonymity Levels: Transparent vs Anonymous vs Elite

Not all proxies actually protect your identity. Some proxies openly tell websites, "This request came through a proxy," while some can even leak your real IP if they're configured poorly. There are three proxy anonymity levels: transparent, anonymous, and elite. This article will walk you through each one, explain how they work, and help you choose the most suitable option for you.

Vytautas Savickas

Last updated: Feb 20, 2026

7 min read

Frequently asked questions

Is it legal to scrape data from Wikipedia?

Yes, scraping publicly available data from Wikipedia is generally legal as long as you comply with its Terms of Use and the Creative Commons Attribution-ShareAlike License (CC BY-SA 4.0). Wikipedia’s content is openly available for reuse, modification, and distribution, provided you give appropriate attribution, indicate any changes made, and maintain the same licensing.

We also recommend consulting a legal professional to ensure compliance with local data collection laws and the website’s Terms and Conditions.

What are the most common methods to scrape Wikipedia?

You can extract publicly available data from Wikipedia using a few methods. Depending on your technical knowledge, you can use:

- MediaWiki API – ideal for structured access to content like page summaries, categories, and revisions. It supports JSON output and is rate-limited but reliable.

- Python libraries – use tools like wikipedia, wikitools, or mwclient to interact with Wikipedia’s API in an object-oriented way.

- HTML parsing with custom scripts – when the API doesn’t offer what you need (e.g., full page layout), fall back on tools like Beautiful Soup or Scrapy for direct scraping from the website.

- Page dumps – Wikimedia also provides full content dumps in XML or SQL format, best suited for offline analysis or large-scale data mining.

- All-in-one scraping API – tools like Decodo’s Web Scraping API help users to collect real-time data from Wikipedia with just a few clicks.
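
To make the MediaWiki API option more concrete, here is a minimal sketch that builds a query for an article's plain-text introduction and reads the JSON reply, using only Python's standard library. The helper names (`build_summary_url`, `extract_summaries`, `fetch_summary`) are made up for this example, and it assumes the English Wikipedia endpoint with the `extracts` property enabled.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://en.wikipedia.org/w/api.php"


def build_summary_url(title: str) -> str:
    """Return a MediaWiki API URL requesting the plain-text intro of one article."""
    params = {
        "action": "query",
        "prop": "extracts",    # text extracts of the page
        "exintro": "1",        # only the section before the first heading
        "explaintext": "1",    # plain text instead of HTML
        "titles": title,
        "format": "json",
        "formatversion": "2",  # returns "pages" as a list
    }
    return f"{API_URL}?{urllib.parse.urlencode(params)}"


def extract_summaries(payload: dict) -> dict:
    """Map each returned page title to its extract (empty string if missing)."""
    pages = payload.get("query", {}).get("pages", [])
    return {page["title"]: page.get("extract", "") for page in pages}


def fetch_summary(title: str, user_agent: str) -> str:
    """Download and return the intro text for a single article title."""
    request = urllib.request.Request(
        build_summary_url(title),
        headers={"User-Agent": user_agent},  # identify your client to the API
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    # The API may normalize the title, so take the first page returned.
    return next(iter(extract_summaries(payload).values()), "")
```

A call such as `fetch_summary("Web scraping", "my-research-bot/0.1 (contact@example.com)")` would perform the actual request; the user agent string shown is only a placeholder.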

How can I scrape Wikipedia using Python?

Python is one of the most efficient languages for scraping Wikipedia thanks to its rich ecosystem of libraries. Here's how to get started:

- Use the wikipedia library to query the Wikipedia API.

- Use Requests and BeautifulSoup to download a page and parse its HTML.
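
The HTML-parsing route can be sketched as follows. To keep the example runnable without extra installs, it swaps Beautiful Soup for Python's built-in `html.parser`; the class and function names are hypothetical, and the rule for what counts as an article link (an `href` starting with `/wiki/` and containing no colon) is a simplifying assumption.

```python
from html.parser import HTMLParser


class WikiLinkParser(HTMLParser):
    """Collect the page heading and internal /wiki/ links from article HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.links = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True
        elif tag == "a":
            href = dict(attrs).get("href") or ""
            # Keep article links once each; skip namespaced pages such as File: or Help:.
            if href.startswith("/wiki/") and ":" not in href and href not in self.links:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        # Accumulate heading text, including text inside nested tags like <i>.
        if self._in_h1:
            self.title += data


def parse_article(html: str) -> dict:
    """Return the heading text and de-duplicated internal links found in html."""
    parser = WikiLinkParser()
    parser.feed(html)
    parser.close()
    return {"title": parser.title.strip(), "links": parser.links}
```

The `html` argument can come from any source, for example a page downloaded with Requests or the HTML returned by a scraping API.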

For large-scale or structured scraping, use Scrapy, which offers advanced control over crawling and data pipelines.

How do proxy servers help in scraping Wikipedia?

While Wikipedia is relatively open, proxy servers can still be useful when scraping at scale:

- Bypass IP rate limits – Wikipedia monitors request frequency per IP. Rotating proxies help distribute traffic.

- Avoid CAPTCHAs – though rare, some automated detection systems may present CAPTCHAs; proxies help reduce this risk.

- Geo-specific scraping – in some research scenarios, accessing localized versions of Wikipedia may require proxies from specific regions.
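
As an illustration of how rotating proxies distribute traffic, the sketch below cycles through a list of proxy URLs in round-robin order and sends each request through the next one. It uses only the standard library; the function names and the proxy addresses in the usage note are placeholders, not real endpoints.

```python
import itertools
import urllib.request


def proxy_cycle(proxy_urls):
    """Return an endless round-robin iterator over the given proxy URLs."""
    if not proxy_urls:
        raise ValueError("at least one proxy URL is required")
    return itertools.cycle(proxy_urls)


def build_opener_for(proxy_url):
    """Create a urllib opener that routes HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_with_rotation(urls, proxies, user_agent, timeout=30.0):
    """Fetch each URL through the next proxy in turn; yield (url, proxy, body)."""
    rotation = proxy_cycle(proxies)
    for url in urls:
        proxy = next(rotation)
        opener = build_opener_for(proxy)
        request = urllib.request.Request(url, headers={"User-Agent": user_agent})
        with opener.open(request, timeout=timeout) as response:
            yield url, proxy, response.read()
```

With two placeholder proxies such as `http://proxy-1.example:8000` and `http://proxy-2.example:8000`, consecutive requests alternate between them, so no single IP carries all of the traffic.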

Why is Wikipedia a valuable source for data scraping?

Wikipedia is one of the most comprehensive, community-driven, and regularly updated encyclopedias on the internet. It’s valuable for:

- Research and academic studies

- Knowledge graphs and semantic search

- AI and LLM training

- Market trend analysis

- Content enrichment

What are the benefits of using a Wikipedia scraper for businesses?

Businesses can leverage Wikipedia data for a wide range of use cases:

- Track emerging trends and brand mentions.

- Run market research.

- Enhance SEO strategy by discovering long-tail keywords and expanding topic coverage.

- Train machine learning algorithms and NLP models using high-quality textual data.

- Automatically enrich internal databases or chatbots with publicly available data.

- Enhance content with publicly available information.

Wikipedia Scraper API for Your Data Needs

Gain access to real-time data at any scale without worrying about proxy setup or blocks.

14-day money-back option