The Best Coding Language for Web Scraping in 2026

Web scraping is a powerful way to collect publicly accessible data for research, monitoring, and analysis, but the tools you choose can greatly influence the results. In this article, we review six of the most popular programming languages for web scraping, breaking down their key characteristics, strengths, and limitations. To make the comparison practical, each section also includes a simple code example that highlights the language’s syntax and overall approach to basic scraping tasks.

Dominykas Niaura

Last updated: Jan 15, 2026

7 min read

What benefits can a well-chosen programming language bring?

Choosing a language is not just about personal preference. It directly affects how reliable, fast, and future-proof your scraping setup will be. These are the main factors worth evaluating before you commit:

- Execution speed. Faster languages handle large volumes of requests and data processing more efficiently, which matters for high-scale scraping.

- Flexibility. A flexible language lets you scrape static pages, dynamic JavaScript sites, APIs, and files without switching tools.

- Learning curve. Easy-to-use languages reduce setup time, simplify debugging, and help you ship working scrapers sooner.

- Scalability. A language that scales well supports parallel requests, background jobs, and distributed scraping setups.

- Maintenance effort. A language with stable libraries and readable code keeps maintenance low: fewer breaking changes, clearer codebases, and less time spent fixing old scripts.

- Community and learning resources. Strong communities provide faster answers, better tutorials, and long-term ecosystem stability.

- Libraries and frameworks. Rich tooling for HTTP requests, parsing, browser automation, and proxies speeds up development dramatically.

- Crawling efficiency. Native support for async tasks, rate limiting, retries, and queue systems makes large crawling jobs far more reliable.
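The crawling-efficiency point is easiest to see in code. Below is a minimal Python sketch of two of those features: a cap on how many requests run at once (asyncio.Semaphore) and retries with exponential backoff and jitter. The function names, the default limits, and the decision to retry only on OSError and timeouts are illustrative assumptions. `fetch_page` wraps the blocking urllib call in a worker thread so it does not stall the event loop.

```python
import asyncio
import random
import urllib.request


async def fetch_page(url, timeout=10):
    """Download a page without blocking the event loop (urllib runs in a thread)."""
    def _get():
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read().decode("utf-8", errors="replace")
    return await asyncio.to_thread(_get)


async def fetch_with_retries(fetch, url, attempts=3, base_delay=0.5,
                             retry_on=(OSError, asyncio.TimeoutError)):
    """Await fetch(url), retrying transient errors with exponential backoff."""
    for attempt in range(attempts):
        try:
            return await fetch(url)
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            # Back off 0.5s, 1s, 2s, ... plus jitter so retries don't line up.
            await asyncio.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))


async def crawl(urls, fetch=fetch_page, max_concurrency=5, **retry_options):
    """Fetch all URLs concurrently with at most max_concurrency requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(url):
        async with semaphore:
            return await fetch_with_retries(fetch, url, **retry_options)

    # gather returns results in the same order as `urls`.
    return await asyncio.gather(*(worker(url) for url in urls))
```

Running `asyncio.run(crawl(["https://example.com/", "https://example.org/"]))` would return each page's HTML in the same order as the input list. If the thread-based approach becomes a bottleneck, async HTTP clients such as aiohttp or httpx can replace `fetch_page`.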

The most popular languages for web scraping

Below are some of the most widely used and reliable languages for web scraping today. For each option, we highlight its key characteristics, main advantages and limitations, and include a simple code example to demonstrate the language’s syntax, readability, and overall approach to basic scraping tasks.

1. Python

Python is one of the most popular and versatile languages for web scraping. It offers a mature ecosystem of libraries and frameworks for HTTP requests, parsing, crawling, and browser automation, including Requests, Beautiful Soup, Scrapy, and Playwright. Thanks to its readable syntax and gentle learning curve, Python is often the first choice for beginners.

At the same time, Python scales well beyond small projects. It is commonly used in production scraping pipelines, data engineering workflows, and analytics environments, especially when combined with task queues, async frameworks, and cloud infrastructure. Python also integrates smoothly with both SQL and NoSQL databases, making it suitable for end-to-end data workflows.
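Python's standard library includes the sqlite3 module, so even a small pipeline can persist its results to SQL without extra dependencies. A minimal sketch, assuming a hypothetical `pages` table keyed by URL so that re-running a scrape does not store duplicates; for PostgreSQL, MySQL, or a NoSQL store you would use that database's own driver instead.

```python
import sqlite3


def save_pages(conn, rows):
    """Store scraped (url, title) rows and return how many pages are saved.

    The URL is the primary key, so re-running a scrape does not create duplicates.
    """
    with conn:  # commits on success, rolls back if anything raises
        conn.execute(
            "CREATE TABLE IF NOT EXISTS pages (url TEXT PRIMARY KEY, title TEXT)"
        )
        conn.executemany(
            "INSERT OR IGNORE INTO pages (url, title) VALUES (?, ?)", rows
        )
    return conn.execute("SELECT COUNT(*) FROM pages").fetchone()[0]
```

Passing `sqlite3.connect("scraped.db")` as the connection creates the database file on first use; `INSERT OR IGNORE` keeps the first stored title for a URL.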

For very large, performance-critical, or highly concurrent systems, teams may pair Python with specialized services or other languages. Even then, Python often remains the orchestration layer due to its flexibility and rich ecosystem.

Key characteristics:

- High-level, readable syntax suitable for beginners and professionals

- Extensive ecosystem for scraping, parsing, automation, and data processing

- Strong integration with databases and data analysis tools

Pros:

- Fast to develop and easy to maintain

- Excellent libraries like Requests, Beautiful Soup, Scrapy, and Playwright

- Large community and rich documentation

Cons:

- Slower raw execution speed compared to compiled languages

- Not ideal for extremely high concurrency without additional tooling

Basic scraping example:
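A minimal sketch using only Python's standard library (urllib.request and html.parser), so it runs without third-party installs. It fetches a page and collects its title and link targets. The names `TitleAndLinkParser`, `fetch_html`, and `parse_page`, the User-Agent string, and the example.com URL are illustrative assumptions. Requests and Beautiful Soup would make the same task shorter and more tolerant of messy HTML.

```python
import urllib.request
from html.parser import HTMLParser


class TitleAndLinkParser(HTMLParser):
    """Collects the page <title> text and every <a href="..."> value."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip anchors without an href
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch_html(url, timeout=10):
    """Download a page and decode it with the charset the server declares."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0 (example scraper)"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def parse_page(html):
    """Return (title, links) for an HTML string."""
    parser = TitleAndLinkParser()
    parser.feed(html)
    parser.close()
    return parser.title.strip(), parser.links
```

For a live page, `parse_page(fetch_html("https://example.com"))` returns a `(title, links)` tuple. Relative links such as `/about` are returned as written and can be resolved with `urllib.parse.urljoin`.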

2. Node.js

Node.js is a strong platform for scraping modern, JavaScript-heavy websites. It runs JavaScript on the server using the same V8 engine found in Chromium-based browsers, and it is a natural fit for browser automation tools like Playwright and Puppeteer, both of which were first released as Node.js libraries. These tools allow you to render pages, execute client-side logic, and interact with dynamic content.

Node.js is particularly effective for concurrent workloads. Its non-blocking, event-driven architecture enables efficient handling of many parallel requests with relatively low overhead. This makes it well suited for large crawling tasks where responsiveness and throughput matter.

Node.js is often chosen by teams that already rely on JavaScript across their stack. Using the same language for scraping, backend services, and automation can simplify development, reduce context switching, and improve long-term maintainability.

Key characteristics:

- JavaScript runtime built for non-blocking, asynchronous workloads

- Strong support for browser automation and dynamic websites

- Well suited for concurrent crawling tasks

Pros:

- Excellent for JavaScript-heavy websites and single-page applications (SPAs)

- Native async model handles many requests efficiently

- Works seamlessly with Playwright and Puppeteer

Cons:

- Smaller scraping ecosystem than Python

- Async patterns can be harder for beginners to reason about

Basic scraping example:

3. Java

Java is widely used in enterprise environments where stability, performance, and long-term maintainability are priorities. It offers strong multithreading capabilities, predictable memory management, and excellent tooling for building large-scale scraping systems. Java-based scrapers are often part of broader data processing pipelines.

The ecosystem includes mature libraries for HTTP requests, parsing, and browser automation, as well as robust frameworks for scheduling and distributed processing. Java is a solid choice for scraping at scale, especially when reliability is more important than rapid prototyping.

The main tradeoff is development speed. Java typically requires more boilerplate and a steeper learning curve compared to Python or Node.js, which can slow down iteration in smaller projects.

Key characteristics:

- Strongly typed, compiled language focused on stability and performance

- Widely used in enterprise and large-scale systems

- Mature tooling for multithreading and scheduling

Pros:

- Excellent performance and scalability

- Strong concurrency support

- Long-term maintainability for large projects

Cons:

- Verbose syntax and slower development cycles

- Steeper learning curve compared to scripting languages

Basic scraping example:

4. C#

C# is a popular option for teams operating within the Microsoft ecosystem. It integrates seamlessly with .NET, Azure services, and Windows-based infrastructure, making it a natural fit for enterprise scraping solutions in those environments. Performance is strong, and tooling is highly polished.

Modern C# supports asynchronous programming patterns that work well for concurrent scraping and crawling tasks. Combined with headless browser tools and HTTP clients, it can handle both static and dynamic websites effectively.

C# is less common in the open-source scraping community than Python or JavaScript, but it remains a reliable choice for organizations that prioritize maintainability, type safety, and long-term support.

Key characteristics:

- Modern, strongly typed language within the .NET ecosystem

- Common in Windows-based and enterprise environments

- Good support for async programming

Pros:

- Clean syntax with strong tooling and IDE support

- Good performance and reliability

- Easy integration with Azure and .NET services

Cons:

- Smaller scraping community compared to Python

- Fewer scraping-specific libraries

Basic scraping example:

5. PHP

PHP is still used for web scraping, particularly in projects where it is already part of the backend stack. It's easy to deploy, widely supported by hosting providers, and capable of handling simple to moderately complex scraping tasks. For basic data extraction, PHP can be a practical choice.

However, PHP is less suited for large-scale or highly concurrent scraping. Its ecosystem offers fewer modern scraping and browser automation tools compared to Python or Node.js. As a result, it's typically used for lightweight or legacy workflows rather than high-performance crawlers.

Teams that rely heavily on PHP may still use it for scraping to avoid introducing new technologies, but it's rarely the first choice for new scraping systems.

Key characteristics:

- Server-side scripting language widely used in web development

- Simple deployment and broad hosting support

- Common in legacy systems

Pros:

- Easy to get started for simple scraping tasks

- Works well when PHP is already part of the backend

- Minimal setup required

Cons:

- Limited scalability for large scraping workloads

- Fewer modern scraping and automation tools

Basic scraping example:

6. Go (Golang)

Go has gained popularity for high-performance web scraping and crawling. Its lightweight concurrency model, built around goroutines and channels, makes it especially effective for handling thousands of parallel requests. Go programs are fast, memory efficient, and easy to deploy as single binaries.

The standard library includes strong support for HTTP networking, which reduces dependency on external packages. While Go’s scraping ecosystem is smaller than Python’s, it's sufficient for building robust crawlers and data collection services.

Go is often chosen for performance-critical components or large-scale crawlers where efficiency and simplicity matter more than rapid prototyping.

Key characteristics:

- Compiled language designed for simplicity and concurrency

- Lightweight and efficient runtime

- Well suited for large-scale crawlers

Pros:

- Excellent performance and low memory usage

- Built-in concurrency with goroutines

- Easy deployment as a single binary

Cons:

- Smaller scraping ecosystem

- Less convenient for rapid prototyping

Basic scraping example:

How to choose the best language for web scraping?

If you're new to programming, choose a language with a beginner-friendly ecosystem and strong learning resources. Python is often recommended thanks to clear documentation and libraries like Requests, Beautiful Soup, Scrapy, and Playwright, all backed by large communities and tutorials. JavaScript (Node.js) is another good option, especially for dynamic sites, with well-documented tools such as Playwright and Puppeteer.

Instead of building everything from scratch, rely on established frameworks and third-party tools that handle common scraping challenges for you. Scraping frameworks manage request scheduling, retries, parsing, and crawling logic, while external services help with proxies, browser automation, and anti-blocking measures. Using these ready-made components significantly reduces development time and improves reliability, especially for first projects.
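Frameworks such as Scrapy take care of this scheduling logic for you. To show roughly what that involves, here is a stripped-down sketch of a breadth-first crawler: a queue of pending URLs, a set of URLs already seen, a same-host filter, and a page limit. `crawl_site`, `max_pages`, and the caller-supplied `fetch` and `extract_links` functions are hypothetical; a real framework layers retries, politeness delays, and robots.txt handling on top.

```python
from collections import deque
from urllib.parse import urldefrag, urljoin, urlparse


def crawl_site(start_url, fetch, extract_links, max_pages=50):
    """Breadth-first crawl restricted to the start URL's host.

    fetch(url) -> html and extract_links(html) -> list of hrefs are supplied
    by the caller. Returns a dict of {url: html} in crawl order.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])  # URLs waiting to be fetched
    seen = {start_url}          # URLs already queued, to avoid repeats
    pages = {}

    while queue and len(pages) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except OSError:
            continue  # skip pages that fail; a real crawler would log or retry
        pages[url] = html
        for href in extract_links(html):
            # Resolve relative links and drop #fragments so duplicates collapse.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages
```

Keeping `fetch` and `extract_links` as parameters means the same loop works with a plain HTTP client, a headless browser, or a fake in-memory site for testing.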

How to build a reliable web scraping setup?

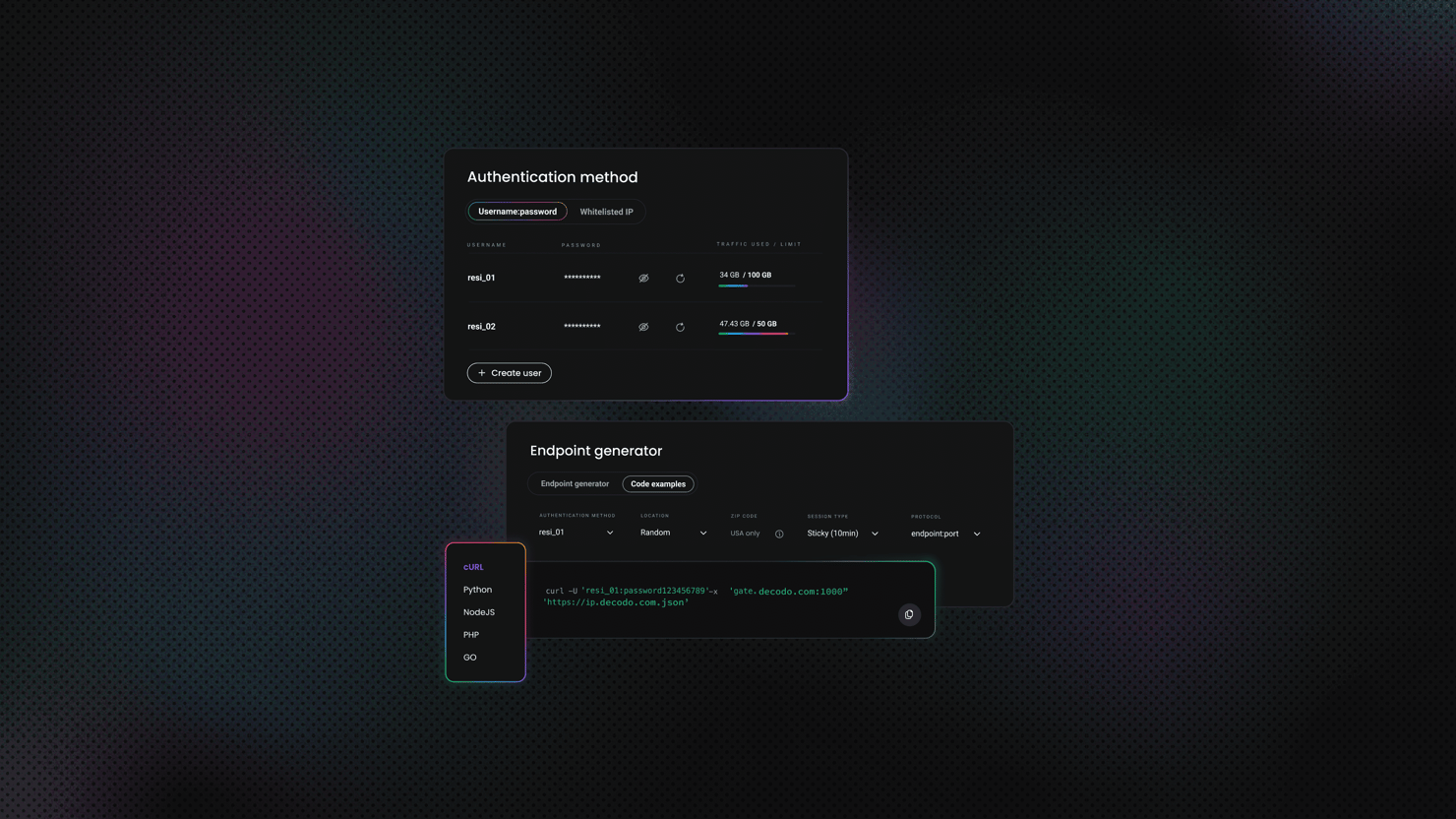

Choosing a language is only one part of the equation. A reliable scraping setup also depends on the supporting tools you use alongside it. Proxies are a key component, as many websites monitor request patterns and block repeated traffic from a single IP address.

Using a trusted proxy provider helps distribute requests across multiple IPs and reduces the risk of bans. Services like Decodo offer large IP pools designed for scraping and data collection workflows. Combined with proper request handling, delays, and error management, these tools help keep your scraper stable.
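A minimal sketch of the idea in Python, assuming you already have a list of proxy endpoints from your provider: each request goes through the next proxy in the pool via urllib's ProxyHandler, a failed request is retried through a different proxy, and a random pause separates requests. The proxy URLs, the class name `RotatingProxyFetcher`, and the 1 to 3 second delay range are placeholders; check your provider's documentation for its actual endpoint and authentication format.

```python
import itertools
import random
import time
import urllib.request


class RotatingProxyFetcher:
    """Send each request through the next proxy in a pool, pausing between requests."""

    def __init__(self, proxy_urls, min_delay=1.0, max_delay=3.0):
        self._proxies = itertools.cycle(proxy_urls)  # round-robin over the pool
        self.min_delay = min_delay
        self.max_delay = max_delay

    def next_opener(self):
        """Return (proxy_url, opener) for the next proxy in the pool."""
        proxy = next(self._proxies)
        # Route both http:// and https:// traffic through the same proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)

    def fetch(self, url, attempts=3, timeout=15):
        """Fetch url, switching to the next proxy after each failure (attempts >= 1)."""
        last_error = None
        for _ in range(attempts):
            _proxy, opener = self.next_opener()
            try:
                with opener.open(url, timeout=timeout) as response:
                    return response.read()
            except OSError as error:  # URLError, HTTPError, timeouts, refused connections
                last_error = error
            finally:
                # Random pause so requests don't arrive at a fixed, bot-like rhythm.
                time.sleep(random.uniform(self.min_delay, self.max_delay))
        raise last_error
```

For example, `RotatingProxyFetcher(["http://user:pass@proxy1.example:8000", "http://user:pass@proxy2.example:8000"]).fetch("https://example.com")` returns the raw response bytes; urllib sends the credentials embedded in the proxy URL as a Proxy-Authorization header.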

Web scraping often comes with unexpected challenges, from layout changes to anti-bot measures. Preparing for these issues and following best practices from the start will significantly improve the success and longevity of your project.

Final thoughts

The language you choose for web scraping should align with your experience level, project requirements, and long-term goals. Python, JavaScript, Java, C#, PHP, and Go all offer viable paths, depending on how much control, performance, and scalability you need.

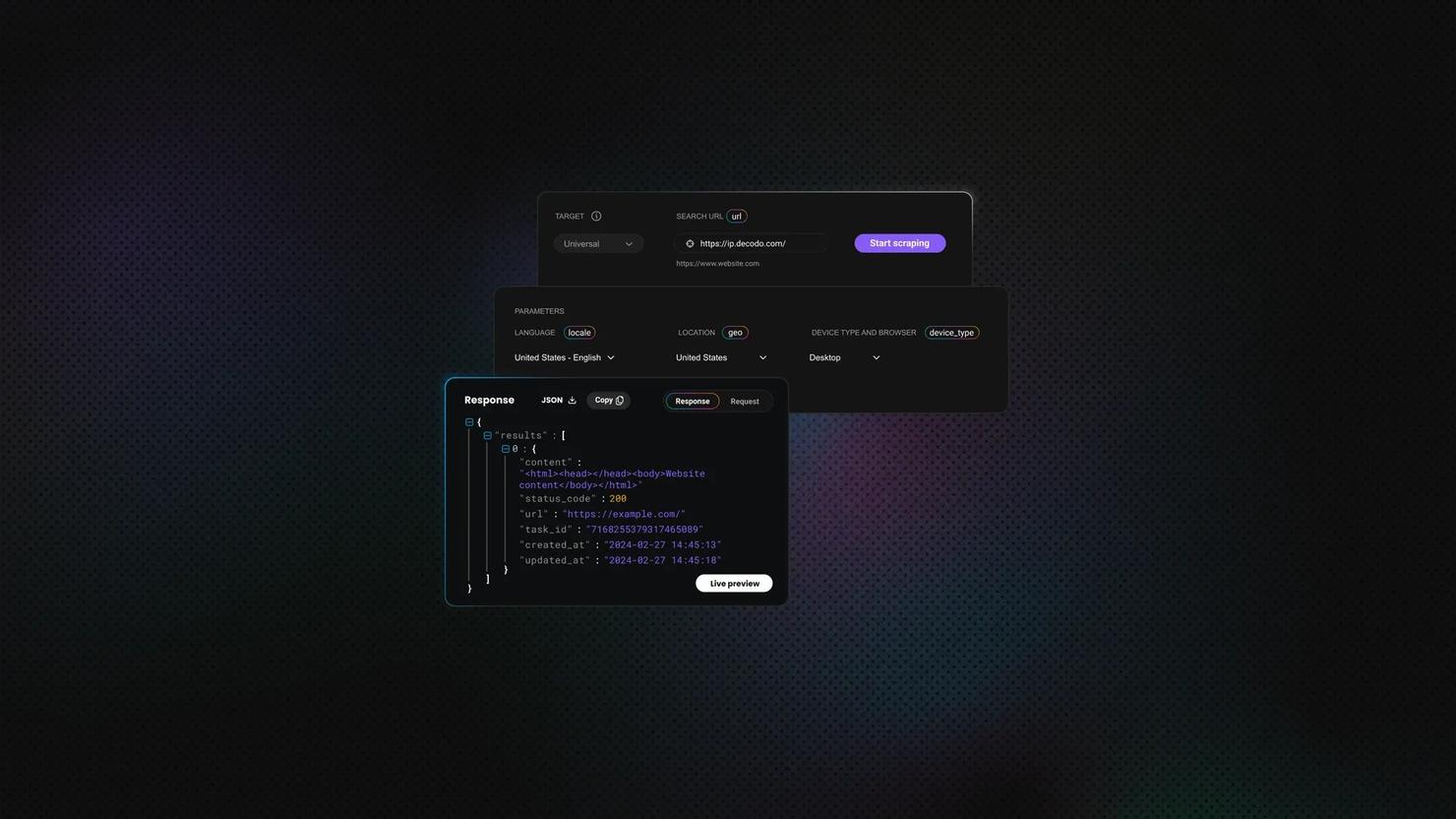

That said, writing and maintaining scrapers is not the only approach. Pre-built solutions like Decodo's Web Scraping API can handle many of the technical challenges for you, from data extraction to infrastructure management. These tools can be integrated into your codebase or used through a simple web interface, making them a practical option for teams that want reliable results without managing scraping systems themselves.

About the author

Dominykas Niaura

Technical Copywriter

Dominykas brings a unique blend of philosophical insight and technical expertise to his writing. Starting his career as a film critic and music industry copywriter, he's now an expert in making complex proxy and web scraping concepts accessible to everyone.


All information on Decodo Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.