Decodo Knowledge Hub

The go-to place for developers and curious minds. Here you'll find tutorials, integration guides, and code examples to help you start building or setting up your next application with Decodo proxies.

Getting started with Decodo solutions

What are proxies?

A proxy is an intermediary between your device and the internet: it forwards your requests to the destination and relays the responses back, masking your IP address along the way.
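As a rough illustration of this idea, the sketch below routes requests through a proxy using only Python's standard library. The host `proxy.example.com`, the port `8080`, and the credentials are hypothetical placeholders rather than real Decodo endpoints, and `build_proxy_url` and `make_opener` are helper names invented for this example.

```python
from urllib.parse import quote
import urllib.request


def build_proxy_url(host: str, port: int, username: str = "", password: str = "") -> str:
    """Return an http:// proxy URL, percent-encoding any credentials."""
    if username:
        credentials = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    else:
        credentials = ""
    return f"http://{credentials}{host}:{port}"


def make_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that forwards HTTP and HTTPS requests through the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical endpoint and credentials, for illustration only.
PROXY_URL = build_proxy_url("proxy.example.com", 8080, "user", "p@ss")
opener = make_opener(PROXY_URL)

# Calling opener.open("https://example.com", timeout=10) would send the request
# via the proxy, so the target site sees the proxy's IP address, not yours.
```

Credentials are percent-encoded so that characters such as `@` in a password do not break the proxy URL. No request is sent until `opener.open(...)` is called with a real proxy endpoint.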

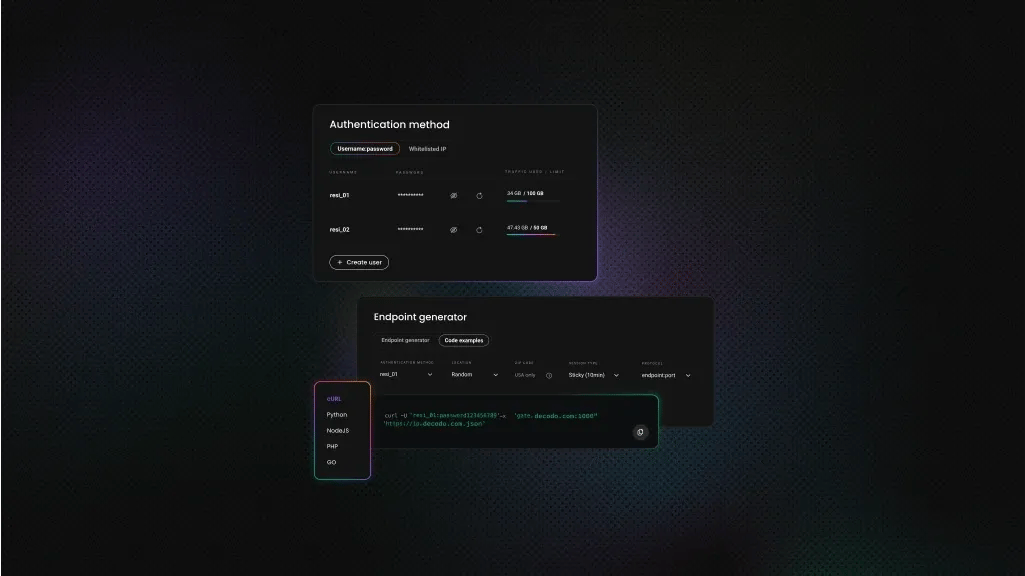

Residential Proxies

IPs from real, physical devices that provide a genuine online identity and enhance your anonymity online.

ISP Proxies

IPs assigned by Internet Service Providers (ISPs), offering efficient and location-specific online access with minimal latency.

Mobile Proxies

IPs from mobile devices, offering anonymity and real-user behavior for mobile-related activities on the internet.

Datacenter Proxies

Remote computers with unique IPs for tasks requiring scalability, fast response times, and reliable connections.

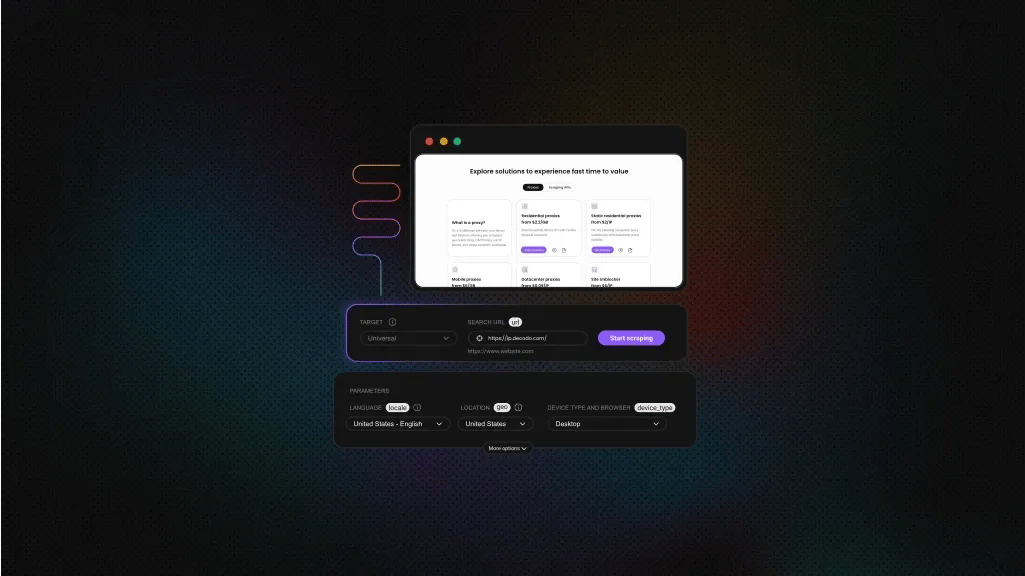

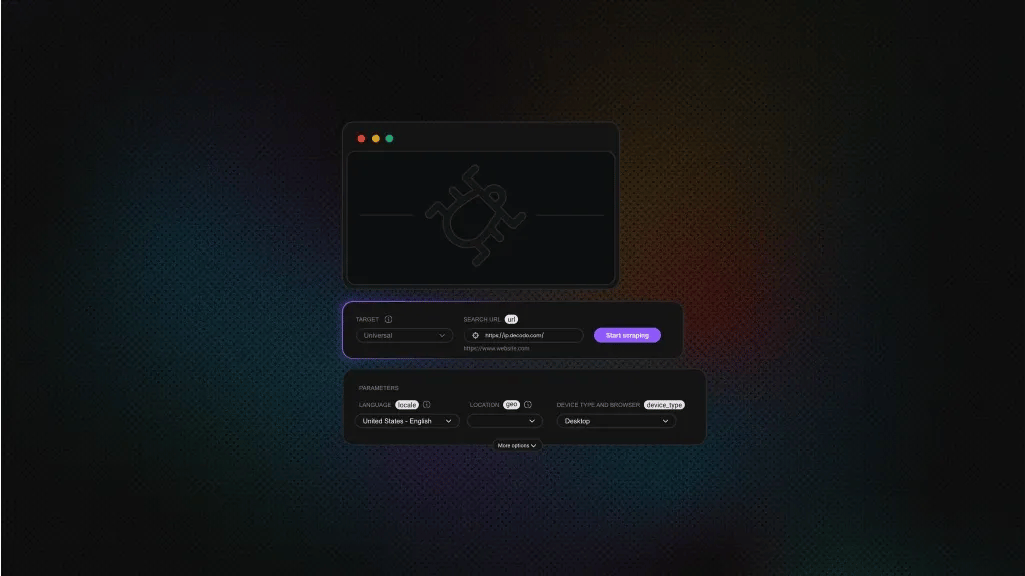

Site Unblocker

A powerful application for all proxying activities, offering dynamic rendering, browser fingerprinting, and much more.

Find the right integrations for your project

Set up Decodo proxies with popular applications and third-party tools. Check out these guides to get started right away.

Chrome

Safari

Firefox

Edge

Decodo Chrome Extension

Decodo Firefox Extension

FoxyProxy extension

Insomniac

SwitchyOmega extension

Ghost

iPhone

Android

Waterfox

Axios

Shadowrocket

Dolphin Anty

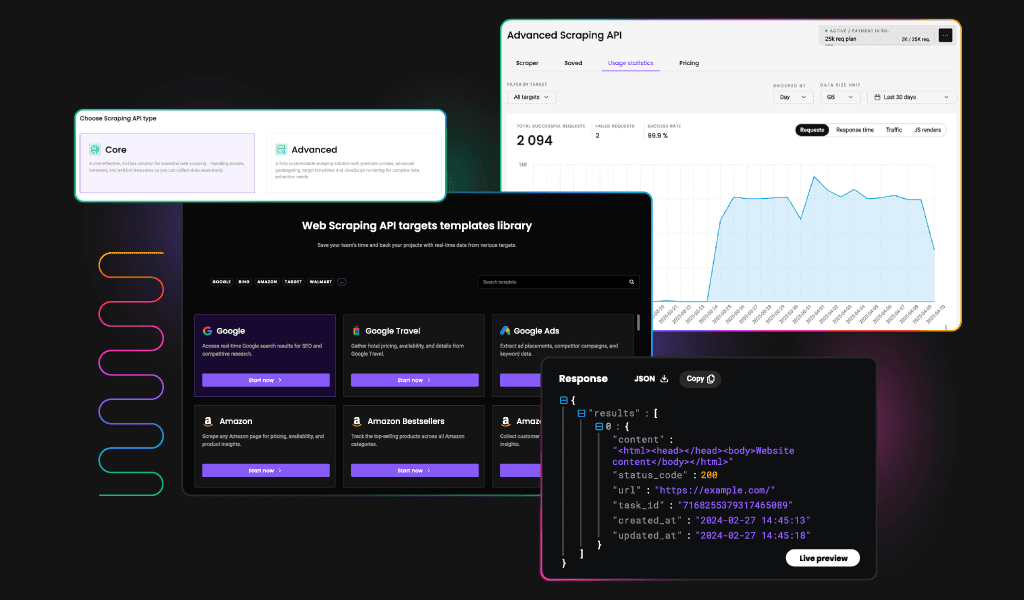

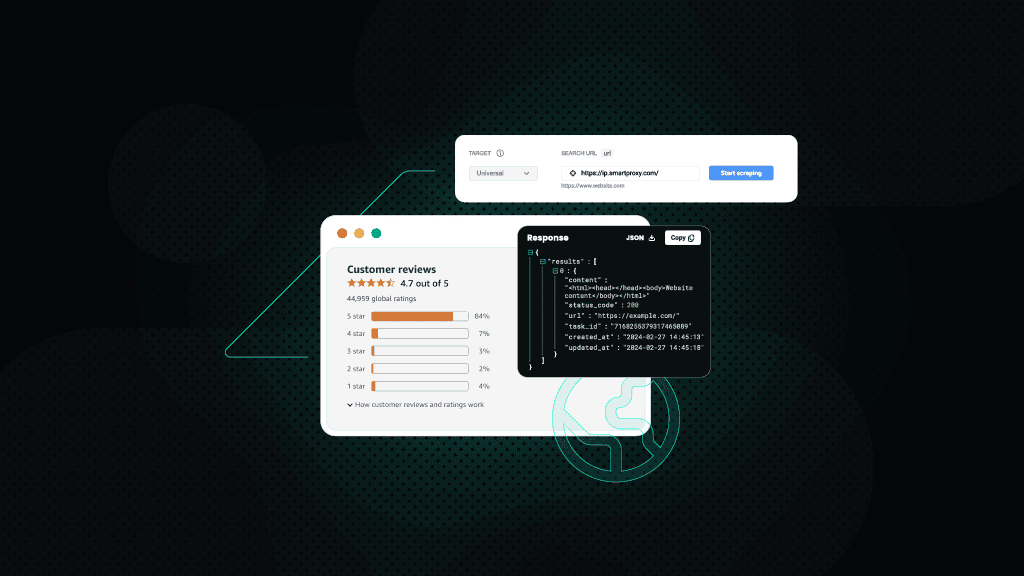

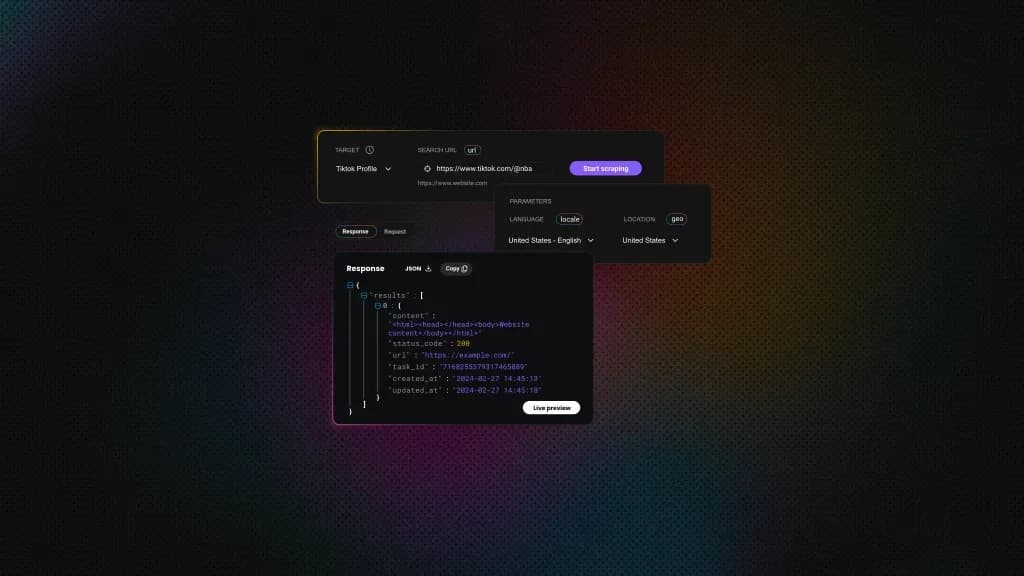

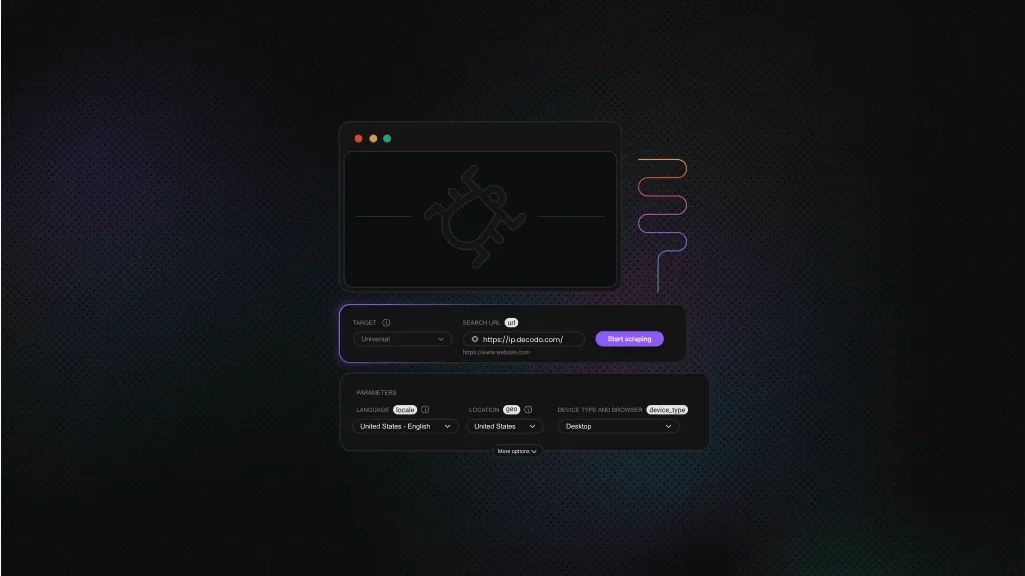

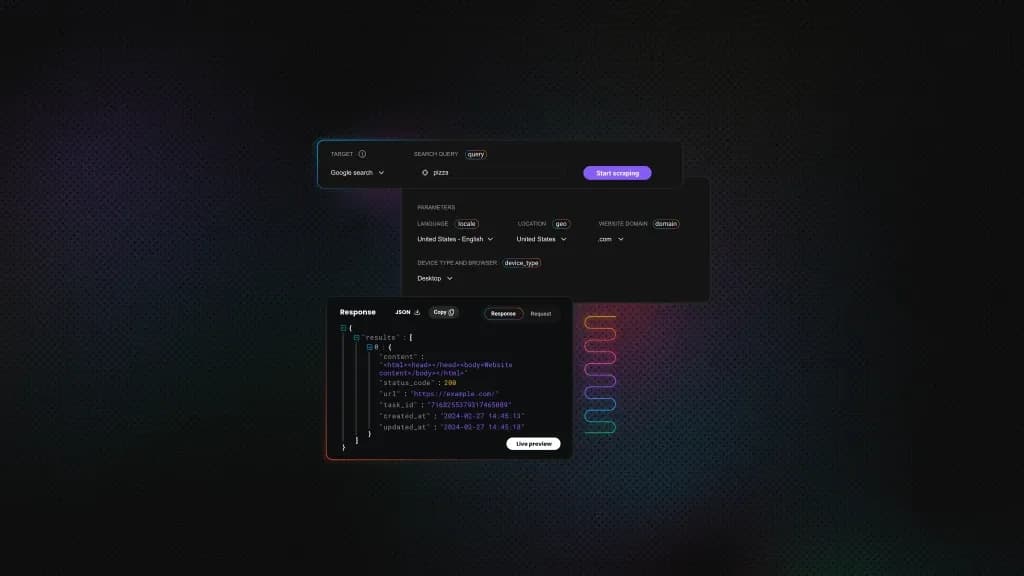

Discover our scraping templates

Explore our extensive template library for all your scraping needs.

Decodo blog

Most recent

How To Find All URLs on a Domain

Justinas Tamasevicius

Last updated: Feb 09, 2026

16 min read

Frequently asked questions

What is the Decodo Knowledge Hub?

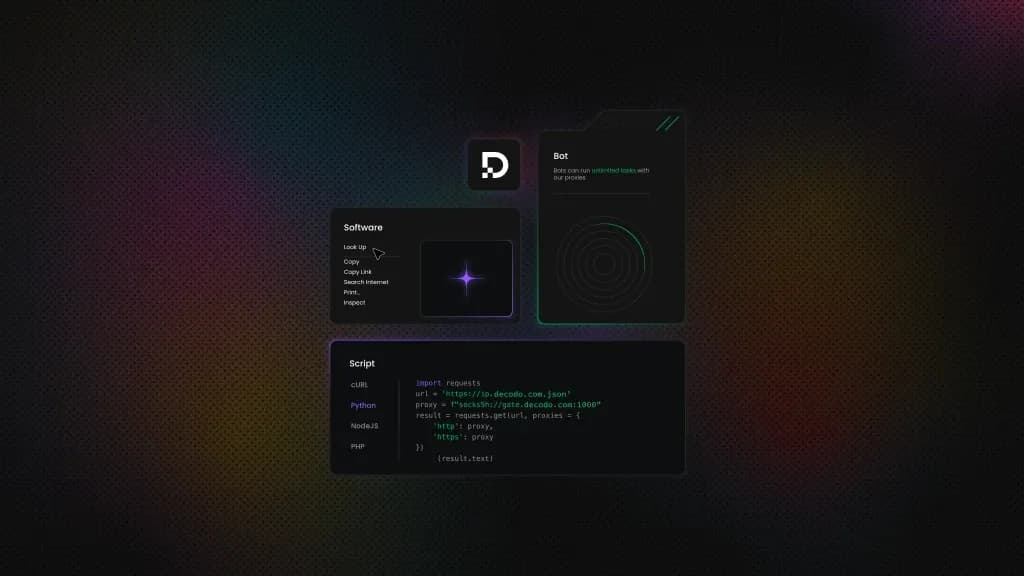

The Decodo Knowledge Hub is the go-to resource for individual developers, teams, and anyone who wants to learn about proxies and web scraping in depth. It serves as a repository of information, guides, code tutorials, integration and configuration examples, and best practices.

What types of content and resources are available in the Knowledge Hub?

The Knowledge Hub features informative articles, tutorials, and integration guides on how to set up proxies in code and applications. It also offers comprehensive information about Decodo products, such as different types of proxies, scraping APIs, and powerful proxy tools.

How frequently is the content in the Knowledge Hub updated, and how can I stay informed about new additions or changes?

The Knowledge Hub is updated alongside each new product or feature release, so the latest information is always available. Code tutorials, integration guides, and other resources are added every couple of weeks. You'll soon be able to subscribe to our newsletter to stay informed about the latest content and trends in the proxy world!

What if I cannot find an answer here?

If you don't see an answer to your question, check our documentation, ask on Discord, or contact our 24/7 live chat support, who will be happy to help.
