Website Change Monitoring with Decodo’s Infrastructure

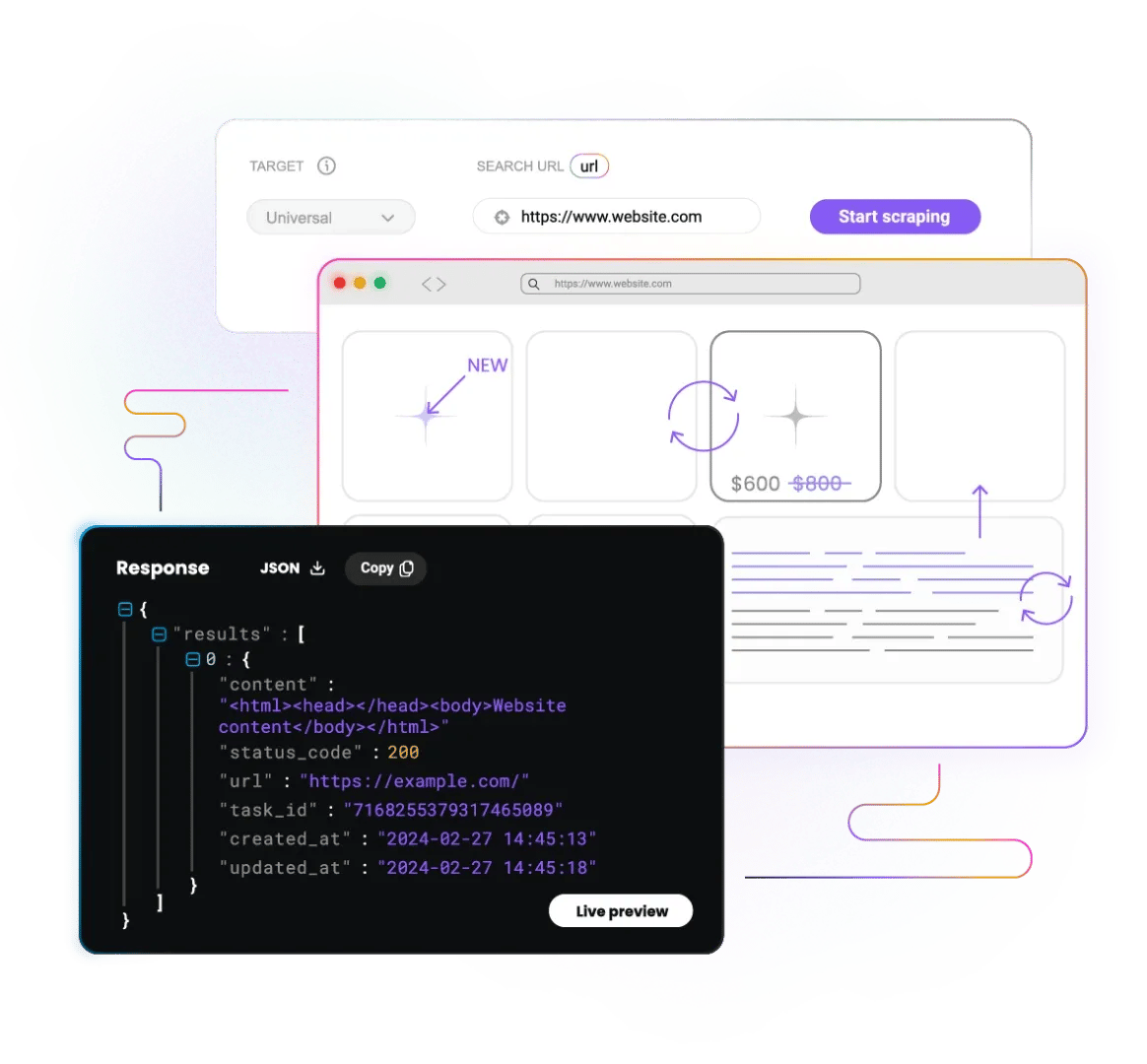

Stay ahead of every update, from pricing and inventory changes to content and policy modifications. Decodo gives you a Web Scraping API and high-performance proxies that reliably extract fresh data without blocks, CAPTCHAs, or broken pipelines.

14-day money-back option

- 125M+ ethically-sourced IPs

- <0.2s average response time

- 99.86% success rate

- 195+ locations worldwide

- 24/7 tech support

Why every business should track website changes

Online content is constantly updated, and missing a critical update can break pricing strategies, competitive analysis, inventory planning, or compliance efforts. But tracking those changes at scale isn’t simple.

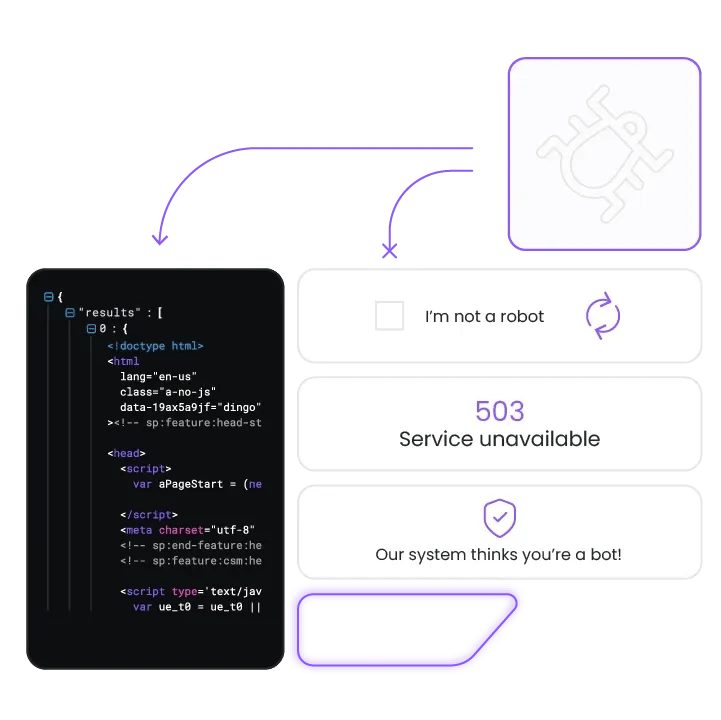

Your team needs fresh, accurate data, yet most scraping setups run into:

- Advanced anti-bot systems

- Region-locked content

- CAPTCHAs and IP bans

- Client-side rendering issues

- High-frequency crawling limitations

- Endless infrastructure maintenance

Decodo’s web scraping infrastructure removes these bottlenecks, giving you reliable access to dynamic websites and their content, no matter how often updates roll out.

Track website changes at any time from anywhere

Decodo’s Web Scraping API and proxies let you monitor pages continuously, detect updates instantly, and deliver structured data directly into your workflows.

- Extract precise, structured data in JSON, XHR, PNG, HTML, CSV, and Markdown

- Handle dynamic and heavily protected websites

- Automate high-frequency checks with built-in scheduling

- Gain geographic accuracy with global IP coverage

- Scale from a single page to millions of URLs

Build a monitoring pipeline that never breaks, even when the target website does.

Explore all use cases

Price change detection

Track price increases, discounts, and dynamic updates across marketplaces, retail, and travel sites.

Inventory monitoring

See product availability, out-of-stock items, and restock cycles in real time.

Content & layout updates

Detect changes in text, visuals, metadata, or page structure down to the smallest element.

Policy & compliance changes

Stay on top of modifications in terms, conditions, disclosures, and legal pages.

Competitor product updates

Monitor new listings, product detail changes, feature updates, and category expansions.
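As a rough illustration of how price and inventory changes can be detected once the data has been collected, the sketch below compares two snapshots of structured product data. The snapshot shape and the field names (`price`, `in_stock`) are assumptions made for this example, not a documented Decodo output schema.

```python
from typing import Any

# Hypothetical snapshot shape: product ID -> extracted fields.
Snapshot = dict[str, dict[str, Any]]


def diff_snapshots(old: Snapshot, new: Snapshot) -> dict[str, Any]:
    """Report added, removed, and changed products between two snapshots."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed: dict[str, dict[str, tuple[Any, Any]]] = {}
    for product_id in sorted(old.keys() & new.keys()):
        before, after = old[product_id], new[product_id]
        # Keep only the fields whose value differs: field -> (old, new).
        fields = {
            key: (before.get(key), after.get(key))
            for key in sorted(before.keys() | after.keys())
            if before.get(key) != after.get(key)
        }
        if fields:
            changed[product_id] = fields
    return {"added": added, "removed": removed, "changed": changed}


# Made-up sample data for two consecutive daily runs.
yesterday = {
    "sku-1": {"price": 19.99, "in_stock": True},
    "sku-2": {"price": 5.00, "in_stock": True},
}
today = {
    "sku-1": {"price": 17.99, "in_stock": True},
    "sku-3": {"price": 42.00, "in_stock": False},
}

report = diff_snapshots(yesterday, today)
```

Here `report` lists `sku-3` as added, `sku-2` as removed, and a price change for `sku-1`. The same comparison works for any field you extract, such as titles, descriptions, or category paths.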

Choose the right tool for the job

Web Scraping API

Enable JavaScript rendering and geo-targeting across 195+ locations, achieve a 99.99% success rate with automatic retries, export data in flexible formats (JSON, CSV, HTML, XHR, Markdown, PNG), and leverage 100+ ready-made templates for popular targets.

Residential proxies

Achieve a 99.86% success rate with sub-0.6s response times (#1 on the market), use rotating and sticky sessions across 115M+ IPs, target by continent, country, city, state, ASN, and ZIP, and scale with unlimited concurrent sessions.

Mobile proxies

Access 3G, 4G, and 5G networks from 700+ carriers across 160+ locations, tap into 10M+ IPs, maintain a 99.76% success rate, and apply mobile-specific targeting.

ISP proxies

Unlock 99.99% uptime with sub-0.2s response times, run high-speed IPs with residential credibility, choose unlimited traffic, and access premium ASNs across global locations.

Datacenter proxies

Complete tasks with a 99.76% success rate and sub-0.3s response times, achieve high-speed, low-latency performance, choose dedicated or shared IP options, and scale with an unlimited traffic option.

Why the scraping community chooses Decodo

We've built a data collection infrastructure that supports you and your project without the headaches.

| Manual scraping | Other APIs | Decodo |
| --- | --- | --- |
| Manage proxy rotation yourself | Limited proxy pools | 125M+ IPs with global coverage |
| Build CAPTCHA solvers | Frequent CAPTCHA blocks | Advanced browser fingerprinting |
| Handle retries manually | Pay for failed requests | Only pay for successful requests |
| Maintenance overhead | Complex documentation | 100+ ready-made templates |
| Days to implement | Limited output formats | JSON, CSV, Markdown, PNG, XHR, HTML output |

Here’s how our scraper and proxies can enhance your project

Our proxy and scraping solutions solve data collection challenges across various industries and use cases so that you can get real-time information without CAPTCHAs, geo-restrictions, or IP bans.

eCommerce

Monitor pricing, analyze competitors, and gather market intelligence on multiple eCommerce platforms.

SERP

Collect SERP data across keywords, locations, and devices to track rankings, analyze competitors, and improve SEO performance.

Social media

Create and manage multiple social media accounts to grow your online presence and engage with target audiences.

Find out what people are saying about us

We're thrilled to have the support of our 135K+ clients and the industry's best.

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.

Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.

RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.

Cybereg

Frequently asked questions

Which websites can I track for changes?

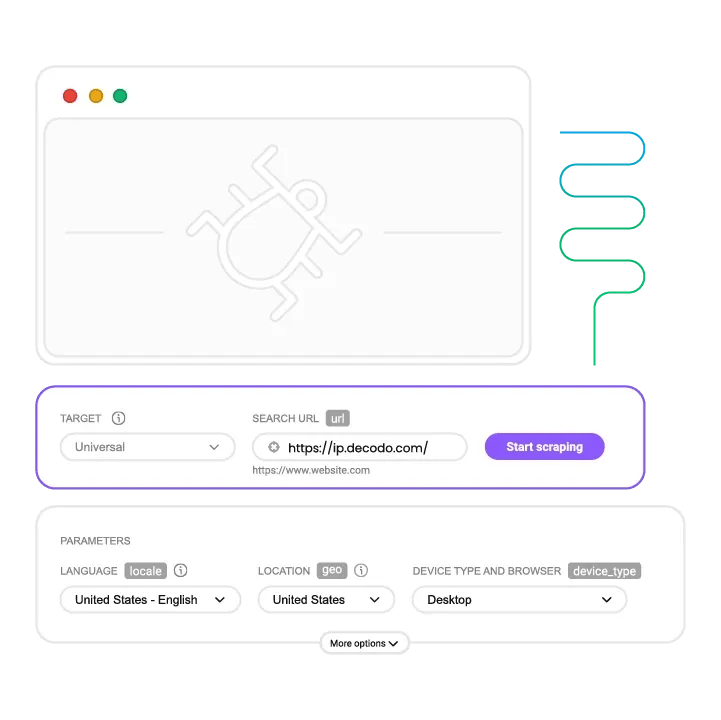

Decodo's Web Scraping API allows you to extract data from a huge range of websites, including Google, Amazon, Walmart, Bing, and even ChatGPT. With over 100 ready-made templates, you can collect data and track website changes with a single click. Our Web Scraping API handles JavaScript rendering, CAPTCHAs, and anti-bot mechanisms automatically, making it possible to monitor dynamic websites and complex platforms that would typically be difficult to scrape.

What's the best way to monitor a website for changes?

The best approach is to use Decodo's task scheduling feature for automated recurring scrapes, which you can configure to run at intervals from hourly to monthly, or with custom cron expressions for precise timing. After setting up your scraper configuration with the target URL and parameters in the dashboard, you can save it and schedule automatic execution. Data can be delivered via email, webhook, or Google Drive, so you receive updates automatically instead of checking for changes manually.
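Once scheduled results arrive, you still need to decide whether anything actually changed. A minimal sketch of one common approach, using only Python's standard library: fingerprint each result with a hash and produce a line-level diff when the fingerprints differ. The sample strings are placeholders, and the whitespace normalization is a design choice of this example rather than part of Decodo's service.

```python
import difflib
import hashlib


def fingerprint(content: str) -> str:
    """Hash the content with whitespace normalized, so that
    formatting-only differences do not count as changes."""
    normalized = " ".join(content.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def describe_change(previous: str, current: str) -> list[str]:
    """Return unified-diff lines, or an empty list if nothing changed."""
    if fingerprint(previous) == fingerprint(current):
        return []
    return list(
        difflib.unified_diff(
            previous.splitlines(),
            current.splitlines(),
            fromfile="previous",
            tofile="current",
            lineterm="",
        )
    )


# Placeholder text standing in for two consecutive scheduled results.
previous_run = "Free shipping over $50\nReturns within 30 days"
current_run = "Free shipping over $75\nReturns within 30 days"

for line in describe_change(previous_run, current_run):
    print(line)
```

Storing only the fingerprint between runs keeps the comparison cheap. Keep the full previous content as well if you want the diff to show what changed.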

How can I receive alerts when a website changes?

Decodo offers multiple delivery methods for change notifications – you can configure scheduled scrapers to send data via email, webhook, or Google Drive. For real-time monitoring, you can use the callback_url parameter with our API to receive notifications when scraping tasks complete.
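On the receiving end, a webhook or callback is an HTTP endpoint that accepts a POST request. The sketch below shows one way such a receiver could turn deliveries into change alerts. The JSON payload shape (`url` and `content` fields) is hypothetical and chosen only for illustration, so check the API documentation for the actual callback format before relying on it.

```python
import hashlib
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Last seen content hash per URL; a real setup would persist this.
last_seen: dict[str, str] = {}


def process_callback(body: bytes, store: dict[str, str]) -> bool:
    """Return True if the delivered content differs from the stored hash.

    Assumes a hypothetical JSON payload: {"url": "...", "content": "..."}.
    The first delivery for a URL is reported as a change.
    """
    payload = json.loads(body)
    url, content = payload["url"], payload["content"]
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
    changed = store.get(url) != digest
    store[url] = digest
    return changed


class CallbackHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            changed = process_callback(self.rfile.read(length), last_seen)
        except (ValueError, KeyError, TypeError, AttributeError):
            # Malformed or unexpected payload.
            self.send_response(400)
            self.end_headers()
            return
        if changed:
            print("Change detected; trigger your alert here.")
        self.send_response(204)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Start the receiver; blocks until interrupted."""
    HTTPServer(("", port), CallbackHandler).serve_forever()
```

Calling `serve()` starts the receiver on port 8080. The address you expose publicly is then the value you would pass as the callback or webhook URL.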

How frequently can I check for changes?

The frequency depends on the website you’re monitoring. Most eCommerce platforms make daily adjustments, while other websites can make changes once a week or even once a month. We recommend setting up daily data collection and adjusting the frequency depending on the specific target.
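One simple way to adjust the frequency per target is to check more often after a change and less often after a quiet run. The sketch below assumes a halve-or-double rule with hourly and 30-day bounds. Both the rule and the bounds are illustrative choices for this example, not a built-in Decodo feature.

```python
from datetime import timedelta

MIN_INTERVAL = timedelta(hours=1)
MAX_INTERVAL = timedelta(days=30)


def next_interval(current: timedelta, changed: bool) -> timedelta:
    """Halve the interval after a change, double it after a quiet check."""
    proposed = current / 2 if changed else current * 2
    # Clamp the result to the allowed range.
    return max(MIN_INTERVAL, min(MAX_INTERVAL, proposed))


interval = timedelta(days=1)  # start with daily checks
for changed in [False, False, True]:
    interval = next_interval(interval, changed)
```

Starting from daily checks, two quiet runs followed by one detected change leave the interval at two days. You would then translate the resulting interval into the schedule for that target.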

Is it possible to monitor changes in specific regions?

Yes, Decodo's proxies offer comprehensive geo-targeting capabilities, including targeting by country, state, region, city, ZIP code, and even ASN across 195+ locations. This allows you to monitor how websites appear and behave in different geographic regions, which is valuable for tracking localized content, regional pricing differences, or geo-restricted information. When configuring your scraper, you simply select your desired location, and the Web Scraping API automatically routes requests through proxies from Decodo's IP pool in that region to ensure accurate, location-specific data collection.
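After collecting the same page from several locations, a quick way to spot regional differences is to group locations by identical content. The sketch below assumes you already have one result per region. The region codes and page text are placeholders, not real output.

```python
import hashlib
from collections import defaultdict


def group_regions_by_content(pages: dict[str, str]) -> list[list[str]]:
    """Group region codes whose page content is identical."""
    groups: dict[str, list[str]] = defaultdict(list)
    for region, content in pages.items():
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        groups[digest].append(region)
    # Sort for a stable, readable result.
    return sorted(sorted(regions) for regions in groups.values())


# Placeholder content standing in for pages collected per location.
pages_by_region = {
    "us": "Price: $20",
    "ca": "Price: $20",
    "de": "Price: 19 EUR",
}

variants = group_regions_by_content(pages_by_region)
```

More than one group in `variants` means the site serves different content by location. Each group can then be tracked for changes separately.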

Start Monitoring Website Changes Today

Get fresh, reliable data from any website at any scale.
