How to Bypass AI Labyrinth: Strategies & Tips Explained

What happens when AI fights AI in the ultimate web scraping showdown? The AI Labyrinth is Cloudflare's latest weapon against unauthorized data collection: sophisticated mazes of AI-generated content designed to trap bots and exhaust their resources. This guide explores how the AI Labyrinth and its adaptive mechanisms work, what it takes to get past its defenses, and which legitimate alternatives let you extract web data efficiently without triggering anti-scraping measures.

Zilvinas Tamulis

Last updated: Aug 14, 2025

8 min read

What is the AI Labyrinth?

The AI Labyrinth is a digital maze that uses AI-generated content to trap misbehaving bots by slowing them down and confusing them. The trap is so convincing that bots willingly spend time and computing resources crawling through endless pages of irrelevant content. Instead of simply blocking unwanted crawlers (which would tell them they've been detected), Cloudflare takes a sneakier approach: it serves convincing fake content that looks legitimate but is useless. When unauthorized bot activity is detected, Cloudflare automatically deploys a set of linked, AI-generated pages that sends the bot down an endless rabbit hole.

Why bypass the AI Labyrinth?

Organizations across many industries have legitimate reasons to collect data from sites protected by the AI Labyrinth, including research, competitive analysis, and data-driven product development. Whether you're monitoring market trends, training AI models, running automated tests, or gathering business intelligence, running into Cloudflare's AI Labyrinth can disrupt critical operations. Understanding how to deal with these AI content mazes becomes essential when your team relies on web data for strategic decision-making and maintaining a competitive advantage.

However, before attempting to bypass AI Labyrinth systems, understand that circumventing website security measures may violate terms of service and can lead to access restrictions, IP bans, or other consequences. Rather than focusing solely on technical bypass methods, consider reaching out to website owners about data partnerships, using official APIs where available, or exploring publicly available datasets. Ethical web scraping also means respecting robots.txt directives, implementing reasonable rate limits, and seeking permission before accessing proprietary content.
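Two of those practices, honoring robots.txt and pacing requests, can be sketched with Python's standard library. This is a minimal sketch that assumes you have already downloaded the site's robots.txt as text; the user agent string, the 2-second fallback delay, and the names `load_robots` and `PoliteScheduler` are hypothetical choices for illustration, not requirements of any site or of Cloudflare.

```python
import time
from urllib import robotparser
from urllib.parse import urlsplit

# Hypothetical crawler identity: use a descriptive user agent with a real contact address.
USER_AGENT = "ExampleResearchBot/1.0 (+mailto:data-team@example.com)"


def load_robots(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt text that was already downloaded from https://<site>/robots.txt."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class PoliteScheduler:
    """Checks robots.txt rules and spaces out requests to each host."""

    def __init__(self, robots, user_agent=USER_AGENT, default_delay=2.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.robots = robots
        self.user_agent = user_agent
        # Honor the site's Crawl-delay when it declares one; otherwise use a conservative default.
        self.delay = robots.crawl_delay(user_agent) or default_delay
        self.clock = clock
        self.sleep = sleep
        self.last_request = {}  # host -> time of our most recent request to it

    def allowed(self, url: str) -> bool:
        """Return True only if robots.txt permits this user agent to fetch the URL."""
        return self.robots.can_fetch(self.user_agent, url)

    def wait_turn(self, url: str) -> float:
        """Block until `delay` seconds have passed since the last request to this host."""
        host = urlsplit(url).netloc
        now = self.clock()
        previous = self.last_request.get(host)
        wait = 0.0 if previous is None else max(0.0, previous + self.delay - now)
        if wait > 0:
            self.sleep(wait)
        self.last_request[host] = now + wait
        return wait
```

In a crawl loop, call `allowed(url)` first and skip disallowed URLs, then call `wait_turn(url)` right before each request. The `clock` and `sleep` parameters exist only so the pacing logic can be tested without real waiting.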

How the AI Labyrinth works

Understanding the technical mechanics behind the AI Labyrinth helps explain why traditional scraping techniques struggle against it. Here's a step-by-step breakdown of how Cloudflare's AI bot mitigation traps unwary crawlers:

Step 1: Content pre-generation

The AI Labyrinth begins with Cloudflare creating a large set of unique HTML pages on diverse topics before any bot activity occurs. Rather than generating AI content on demand, Cloudflare uses a pre-generation pipeline, which saves server resources and avoids any impact on site performance. The system first generates a diverse set of topics and then writes realistic content for each one, producing more varied and convincing pages that look legitimate to automated crawlers. The content is factually accurate to avoid contributing to misinformation, but it is completely unrelated to the site being crawled.
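The "topics first, then content, all ahead of time" idea can be illustrated with a small sketch. This is not Cloudflare's implementation: `generate_topics` and `generate_article` are hypothetical placeholders standing in for LLM calls, and the `/decoy/` path scheme is invented for the example. The point it shows is that all generation cost is paid offline, so serving a decoy page at request time is just a lookup.

```python
import random
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical seed subjects; a real pipeline would ask an LLM for a diverse topic list.
SEED_SUBJECTS = ["photosynthesis", "plate tectonics", "tidal forces", "protein folding"]


def generate_topics(count: int, rng: random.Random) -> list[str]:
    """Stage 1 placeholder: produce a varied list of topics (stands in for an LLM call)."""
    return [f"{rng.choice(SEED_SUBJECTS)}, part {i + 1}" for i in range(count)]


def generate_article(topic: str) -> str:
    """Stage 2 placeholder: write filler content for one topic (stands in for an LLM call)."""
    return f"<article><h1>{topic.title()}</h1><p>General facts about {topic}.</p></article>"


@dataclass
class DecoyStore:
    """Holds decoy pages produced ahead of time, keyed by a hypothetical URL path."""
    pages: dict[str, str] = field(default_factory=dict)
    generator_calls: int = 0

    def pregenerate(self, count: int, seed: int = 0) -> None:
        # Runs offline, before any bot shows up: topics first, then content for each topic.
        rng = random.Random(seed)
        for i, topic in enumerate(generate_topics(count, rng)):
            self.pages[f"/decoy/{i}"] = generate_article(topic)
            self.generator_calls += 1

    def serve(self, path: str) -> Optional[str]:
        # Runs at request time: a dictionary lookup with no generation cost.
        return self.pages.get(path)
```

Splitting topic selection from content writing mirrors the two-stage process described above; the counter makes it easy to confirm that serving pages never triggers new generation.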

Step 2: Hidden link integration

When unauthorized bot activity is detected, the AI Labyrinth seamlessly integrates the pre-generated content as hidden links on existing pages via custom HTML transformation without disrupting the original structure. These links remain invisible to human visitors, while each generated page includes appropriate meta directives to prevent search engine indexing and protect SEO.

Step 3: Bot trap activation

Once a crawler follows these invisible links, it enters a maze of AI-generated pages that look real but contain irrelevant information, such as random scientific facts. Because no human visitor would follow links several levels deep into this content, that kind of traversal is an effective way to identify automated behavior.
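From the defender's side, the "several links deep" signal can be sketched as a per-client counter. This is a minimal illustration with hypothetical names (`LabyrinthMonitor`, `DECOY_PREFIX`) and an arbitrary threshold of three pages; Cloudflare has not published its actual scoring, which likely combines this kind of depth signal with other behavioral data.

```python
from collections import defaultdict

# Hypothetical values: where decoy pages live and how many a visitor may request
# before being treated as a bot. Cloudflare's real thresholds are not public.
DECOY_PREFIX = "/decoy/"
DEPTH_THRESHOLD = 3


class LabyrinthMonitor:
    """Counts how many decoy pages each client has requested and flags deep explorers."""

    def __init__(self, threshold: int = DEPTH_THRESHOLD):
        self.threshold = threshold
        self.decoy_hits = defaultdict(int)  # client id -> number of decoy pages requested
        self.flagged = set()

    def record(self, client_id: str, path: str) -> bool:
        """Log one request; return True if the client is (now or already) flagged as a bot."""
        if path.startswith(DECOY_PREFIX):
            self.decoy_hits[client_id] += 1
            if self.decoy_hits[client_id] >= self.threshold:
                self.flagged.add(client_id)
        return client_id in self.flagged
```

Because human visitors never see the hidden links, even a low threshold produces few false positives in this model; once flagged, a client stays flagged for every later request.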

Step 4: Resource exhaustion and fingerprinting

As bots traverse the AI-generated pages, they waste valuable computational resources processing irrelevant content rather than extracting legitimate website data. Cloudflare captures detailed behavioral signatures, including IP addresses, timing patterns, navigation paths, and crawling depth, to identify automated activity with high confidence. This data feeds into their machine learning models to improve bot detection capabilities, creating a beneficial feedback loop where each scraping attempt contributes to improving Cloudflare's systems.

Step 5: Blacklist and cross-platform protection

Any crawler identified through the AI Labyrinth is added to Cloudflare's list of known bad actors. The fingerprinting data is shared across Cloudflare's global infrastructure, making it increasingly difficult for the same bot to operate effectively on any Cloudflare-protected site. The result is a persistent identification system that tracks bots across websites and scraping sessions.

Challenges in bypassing AI Labyrinths

If you attempt to bypass the sophisticated systems of an AI Labyrinth, you're most likely going to face the following challenges:

- Adaptive defenses. AI Labyrinth systems continuously update their detection patterns based on observed bot behavior and share behavioral signatures across Cloudflare's global network, so bypass techniques that worked before can quickly become obsolete.

- Honeypots. The decoy pages are realistic, linked URLs woven invisibly into legitimate pages through custom HTML transformation, which makes them very hard for automated programs to recognize as traps.

- Evolving AI. Machine learning feedback loops from each bot interaction continuously improve Cloudflare's ability to identify new attack patterns, moving toward predictive identification that can flag suspicious activity before it fully manifests.

- Risk and potential consequences. Circumventing AI Labyrinth systems may violate a site's terms of service. This can result in permanent behavioral fingerprinting that follows your infrastructure across multiple platforms, potentially leading to IP bans, legal action, and reputational damage.

Legitimate alternatives

Even if you encounter an AI Labyrinth, that doesn't mean you're completely restricted from accessing the information behind it. Here are a few alternative ways to gather data legitimately:

APIs

Official APIs are the most straightforward way to access web data without triggering the AI Labyrinth or other anti-scraping defenses. Many major platforms, including social media networks, eCommerce sites, and news organizations, offer structured API endpoints that provide clean, reliable data access with proper authentication and rate limiting. These APIs often offer better data quality, real-time updates, and comprehensive documentation compared to scraped content, and they remove the technical overhead of maintaining bypass techniques.
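API providers usually publish quotas, and staying under them on the client side avoids throttling. A token bucket is one common way to do this; the sketch below is a generic, hypothetical implementation (the class name and parameters are illustrative, not part of any provider's SDK). It allows short bursts up to `capacity` and a sustained `rate` of requests per second.

```python
import time


class TokenBucket:
    """Client-side limiter: bursts up to `capacity`, then `rate` requests per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.updated = clock()

    def _refill(self) -> None:
        # Add tokens for the time elapsed since the last check, never exceeding capacity.
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now

    def try_acquire(self) -> bool:
        """Consume one token if available; return False if the caller should wait."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def seconds_until_available(self) -> float:
        """How long to wait before the next token exists."""
        self._refill()
        return 0.0 if self.tokens >= 1 else (1 - self.tokens) / self.rate
```

Set `rate` and `capacity` from the provider's documented quota, leaving some headroom, and still back off when the API answers with HTTP 429 Too Many Requests.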

Data licensing

Data licensing programs provide legitimate access to large-scale datasets without the need to bypass Cloudflare's AI Labyrinth or similar protection systems. Companies increasingly offer licensing agreements that grant access to historical and real-time data feeds for research, AI training, or commercial use. These agreements often include cleaned, structured data with proper metadata, eliminating concerns about contamination from AI-generated content. Licensing agreements also provide legal protection and clear usage terms, making them ideal for organizations that need predictable, long-term data access for business-critical applications.

Partnerships

Strategic data partnerships offer a collaborative approach to information sharing that benefits both parties. Rather than trying to get around AI content mazes, organizations can propose mutually beneficial arrangements in which data providers receive compensation, attribution, or reciprocal data access in exchange for structured information sharing. These partnerships often result in higher-quality data, custom formats tailored to specific use cases, and ongoing support that adapts to changing business needs. Building partnerships also establishes legitimate channels for future data requirements and can lead to exclusive access arrangements that give you an edge over organizations relying solely on scraping.

Using different sources

The internet is vast, and the information you need is often available from more than one place. Instead of trying to circumvent complex bot detection systems, look for the same data on other sources.

Many websites are safe to crawl and scrape, but still use anti-bot and anti-scraping tools to protect server performance and prevent malicious activity. When collecting data ethically, these measures can pose a serious obstacle without dependable proxies. Decodo provides premium residential, mobile, datacenter, and ISP proxies in 195+ locations with 99.99% uptime, enabling unrestricted, global data access any time of day.

The future of AI bot mitigation

Ongoing arms race

The battle between AI-powered bots and AI defensive systems is intensifying rapidly, with automated traffic now comprising 51% of all internet traffic for the first time in a decade. Bad bots account for 37% of web traffic, up from 32% in 2023, mainly driven by AI tools that allow even non-technical attackers to launch sophisticated campaigns.

This escalating arms race has seen cybercriminals use machine learning to refine their attacks, evade detection systems, and even take over accounts through credential stuffing. Meanwhile, security teams are deploying AI-based defenses of their own, turning the contest into a rapidly escalating AI-versus-AI conflict.

Innovation

Next-generation bot mitigation systems are moving beyond simple blocking toward sophisticated deception and behavioral analysis. The AI Labyrinth is just the first step in using generative AI to combat bots: future versions may create entire networks of linked URLs that blend into existing website structures, making them even harder for automated programs to detect. Machine learning feedback loops already let defense systems adapt in real time, with each bot interaction supplying data that improves detection and strengthens protection across entire networks.

Responsible AI use

The growing dominance of AI in web scraping has sparked urgent calls for ethical frameworks and responsible deployment practices across the industry. Recent industry news highlights that ethical scraping in 2026 looks fundamentally different from previous approaches, as AI-powered scrapers can now read, understand, and make autonomous decisions about data collection without human oversight.

Organizations are increasingly recognizing that AI doesn't inherently understand privacy violations or ethical boundaries, placing responsibility squarely on development teams to implement proper governance structures. The OECD's recent report on data scraping and AI advocates for voluntary codes of conduct, technical tools, and standard contract terms to promote responsible AI development while safeguarding intellectual property rights.

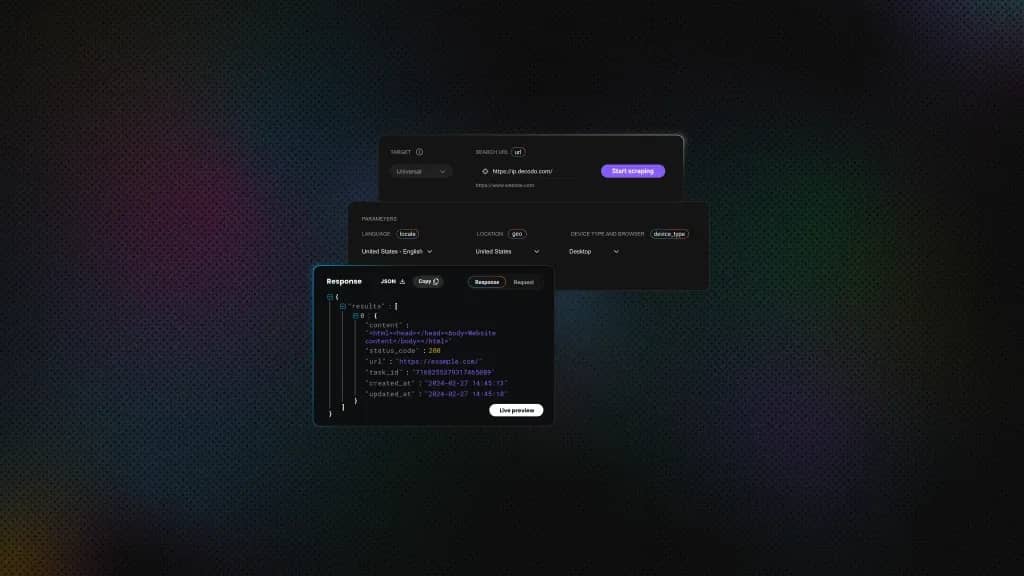

Escaping the maze

AI Labyrinth systems use AI-generated mazes to trap crawlers in resource-draining loops, making web data collection increasingly complex. While bypass methods exist, the risks often outweigh the gains. A better path is using official APIs, data licenses, or partnerships that respect infrastructure and IP. For ethical, reliable data access, professional solutions like Decodo's residential proxies and scraper API can help overcome technical barriers while staying compliant.

About the author

Zilvinas Tamulis

Technical Copywriter

A technical writer with over 4 years of experience, Žilvinas blends his studies in Multimedia & Computer Design with practical expertise in creating user manuals, guides, and technical documentation. His work includes developing web projects used by hundreds daily, drawing from hands-on experience with JavaScript, PHP, and Python.

All information on Decodo Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.