Web Crawling vs Web Scraping: What’s the Difference?

When it comes to gathering online data, two terms often create confusion: web crawling and web scraping. Although both involve extracting information from websites, they serve different purposes and employ distinct methods. In this article, we’ll break down these concepts, show you how they work, and help you decide which one suits your data extraction needs.

Justinas Tamasevicius

Jul 01, 2025

7 min read

What is web crawling?

Web crawling is an automated process where crawlers (or spiders) systematically scan websites by following links to discover and index pages. Unlike scraping, which extracts specific data, crawling focuses on breadth: collecting URLs, site structures, and metadata to build a comprehensive web index. Key components include seed URLs (starting points), robots.txt (access rules), and politeness policies to avoid overloading servers.

The process begins with seed URLs, downloads their content, extracts new links, and repeats, storing the data in a searchable index. Crawlers use strategies like breadth-first (visiting every page at one link depth before going deeper) or depth-first (following a single chain of links as far as it goes before backtracking). Crawling is essential for search engines like Google, and it also powers SEO analysis, competitive research, and web monitoring.
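The loop described above (start from seeds, download, extract links, repeat) can be sketched as a small breadth-first crawler using only Python's standard library. This is a minimal illustration, not how Googlebot or any particular framework works: the `fetch` callable, the `max_pages` cap, and the rule of staying on the seed hosts are assumptions made for the example.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl_bfs(seeds: List[str], fetch: Callable[[str], str], max_pages: int = 100) -> Dict[str, List[str]]:
    """Breadth-first crawl; returns {visited_url: [absolute links found on it]}."""
    allowed_hosts = {urlparse(seed).netloc for seed in seeds}  # stay on the seed sites
    queue = deque(seeds)  # FIFO queue, so pages are visited level by level
    seen = set(seeds)  # URLs already queued, to avoid revisiting
    graph: Dict[str, List[str]] = {}
    while queue and len(graph) < max_pages:
        url = queue.popleft()
        extractor = LinkExtractor()
        extractor.feed(fetch(url))  # `fetch` is any function returning a page's HTML
        links = []
        for href in extractor.links:
            # Resolve relative links against the current page and drop #fragments.
            absolute, _fragment = urldefrag(urljoin(url, href))
            links.append(absolute)
            if urlparse(absolute).netloc in allowed_hosts and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
        graph[url] = links
    return graph
```

Plugging in a `fetch` function built on `urllib.request.urlopen` (plus a robots.txt check and a delay between requests) would turn this into a basic working crawler. Replacing `queue.popleft()` with `queue.pop()` makes the traversal roughly depth-first instead.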

How web crawlers work

A practical example is Google’s search engine:

- Starting point. Google’s crawler, often referred to as "Googlebot," begins with a list of known URLs from previous crawls and sitemaps.

- Discovery. It visits each URL, analyses the content (text, images, videos), and follows both internal and external links to uncover new pages, adhering to each site's crawl rules such as robots.txt.

- Indexing. Relevant data, such as keywords, metadata, and backlinks, is processed and stored in Google’s index for fast retrieval.

- Continuous update. The crawler revisits sites periodically (based on their update frequency and authority) to check for new or updated content, ensuring the index stays current.

This approach creates a massive database of web pages. When a user searches on Google, the engine queries its pre-built index rather than scanning the live web, delivering results in milliseconds. Modern crawlers also prioritize pages based on freshness, relevance, and mobile-friendliness, reflecting evolving search algorithms.
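To see why querying a pre-built index is fast, here is a toy inverted index in Python: it maps each word to the set of pages containing it, so answering a query takes a few dictionary lookups instead of a scan of every page. This is a teaching sketch under simplifying assumptions (naive tokenization, no ranking); a real search engine's index is far more sophisticated.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map every word to the set of URLs whose text contains it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: Dict[str, Set[str]], query: str) -> Set[str]:
    """Return the URLs containing every query word (AND semantics)."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())  # keep only pages that also contain this word
    return results
```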

What is web scraping?

Web scraping extracts targeted data from websites using automated scrapers. It collects specific information like prices or reviews, unlike broad web crawling. Businesses use it for market research, price tracking, and lead generation. The process involves loading pages, extracting data from HTML, and storing it in structured formats. APIs offer a cleaner alternative when available.

Key elements include scrapers, HTML parsing, and proxies to avoid blocks. Applications range from competitor analysis to data aggregation. Challenges include anti-bot measures and legal concerns. Solutions include coding tools (BeautifulSoup, Scrapy) and no-code platforms, with APIs being ideal when accessible. This enables data-driven decisions while respecting technical and ethical boundaries.

How web scrapers work

For this use case, imagine an eCommerce researcher comparing prices:

- Target definition. Select product pages (e.g., Amazon listings).

- Request and fetch. The scraper retrieves the HTML of those pages.

- Parsing. Extracts key data (product names, prices, ratings) from the HTML.

- Data output. Structures the results as CSV, JSON, or database records.
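The four steps above can be sketched with Python's standard library. The HTML structure and the `product`, `name`, and `price` class names below are hypothetical; real product pages use their own markup, which you would inspect before writing selectors, and many projects use BeautifulSoup or Scrapy instead of a hand-written parser.

```python
import csv
import io
import json
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ProductParser(HTMLParser):
    """Collects {"name", "price"} records from elements with hypothetical class names."""

    def __init__(self) -> None:
        super().__init__()
        self.records: List[Dict[str, str]] = []
        self._field: Optional[str] = None  # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product" in classes:
            self.records.append({"name": "", "price": ""})  # a new product card begins
        elif self.records and "name" in classes:
            self._field = "name"
        elif self.records and "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None  # stop capturing when the element closes

    def handle_data(self, data):
        if self._field:
            self.records[-1][self._field] += data.strip()


def scrape_products(html: str) -> List[Dict[str, str]]:
    """Parsing step: turn fetched HTML into a list of records."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.records


def to_csv(records: List[Dict[str, str]]) -> str:
    """Output step: serialize records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records: List[Dict[str, str]]) -> str:
    """Output step: serialize records as JSON text."""
    return json.dumps(records, indent=2)
```

The request-and-fetch step is left out so the example runs offline; in practice you would pass the HTML returned by your HTTP client into `scrape_products`.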

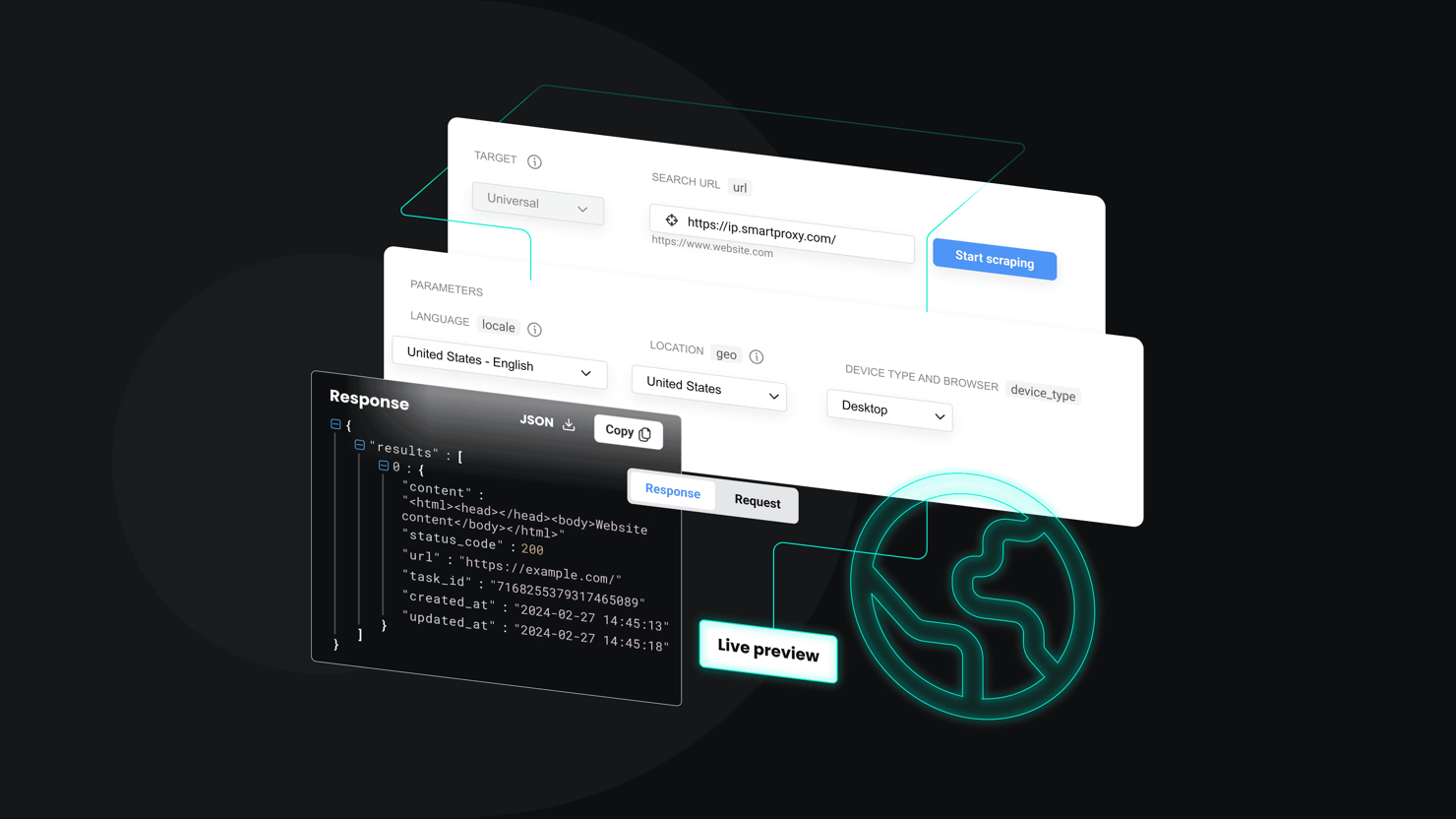

For more advanced or large-scale scraping, specialized tools handle challenges like IP blocks, CAPTCHAs, or JavaScript-rendered pages. For example, Web Scraping API can automatically collect search engine results for ranking analysis, while an Amazon scraper helps with product listings, prices, and reviews.

Key differences between web crawling and web scraping

While often used together, web crawling and web scraping serve distinct purposes. Crawling is about broad discovery: search engines use crawlers (like Googlebot) to systematically explore and index entire websites by following links.

Scraping, on the other hand, focuses on targeted extraction. Tools like BeautifulSoup or Scrapy pull specific data (prices, reviews) from predefined pages. Crawlers map the web at scale; scrapers harvest precise datasets for analysis. Here's a clear side-by-side comparison of what sets them apart:

When to use crawling vs. scraping

While often combined for comprehensive data collection (crawling to discover pages, scraping to extract specific content), the choice depends on your goals:

- Use crawling for broad discovery, like monitoring competitor site structures or aggregating news URLs.

- Use scraping for targeted extraction, such as price tracking, lead generation, or sentiment analysis.

Use cases for web crawling

Web crawling, the automated discovery and indexing of web pages, is used for many different tasks, including:

- Competitor website monitoring. Track site structure changes, content updates, or rebranding efforts.

- News aggregation. Automatically discover newly published articles for content curation.

- SEO auditing. Identify broken links, duplicate content, or indexing issues across your site.

- Website archiving. Preserve historical snapshots of web pages for legal or research purposes.

Use cases for web scraping

Web scraping, on the other hand, is the process of extracting specific data from web pages once you've accessed them, and it's incredibly versatile for getting exactly the information you need. Individuals and businesses use web scraping for:

- Price monitoring & intelligence. Automate eCommerce price comparisons to stay competitive.

- AI training. Real-time data from various sources can help to train LLMs, agents, and other AI-powered tools.

- Ad verification. Validate ad placements and appearances globally.

- Brand protection. Detect unauthorized product listings or brand misuse.

- Market research. Gather reviews, track trends, or analyze consumer sentiment.

- Lead generation. Extract business contacts from directories or social media platforms.

- Content aggregation. Pull specialized data (articles, specs) for research.

- Academic research. Build datasets from journals or archives.

- Sports statistics. Retrieve player metrics, league standings, and performance data.

- Job listing aggregation. Compile openings from multiple career sites.

- Travel fare monitoring. Track flight/hotel prices for optimal booking times.

What software should you use?

The tools you'll need depend entirely on whether you're conducting web crawling (broad discovery of web pages) or web scraping (targeted data extraction). The software used is another big difference between the two:

Web crawling tools

Crawling requires specialized software to systematically traverse websites, collect URLs, and map out site structures. Popular solutions include custom-developed spiders written in languages like Python or Node.js, as well as open-source frameworks such as Scrapy.

Many of these tools feature scheduling options, filtering rules, and detailed logs to help you manage large-scale indexing projects with ease.
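Filtering rules usually boil down to a predicate applied to every discovered URL before it is queued. The sketch below shows one possible shape, assuming hypothetical rules (stay on one domain and its subdomains, skip certain path prefixes and file types); real crawler frameworks expose similar options through their own settings.

```python
from dataclasses import dataclass
from typing import Tuple
from urllib.parse import urlparse


@dataclass
class CrawlRules:
    """Hypothetical filter rules for deciding which URLs a crawler should queue."""

    allowed_domain: str
    excluded_prefixes: Tuple[str, ...] = ("/cart", "/login")
    excluded_extensions: Tuple[str, ...] = (".pdf", ".jpg", ".png", ".zip")

    def allows(self, url: str) -> bool:
        parts = urlparse(url)
        if parts.scheme not in ("http", "https"):
            return False  # skip mailto:, javascript:, and similar links
        host = parts.hostname or ""
        # Accept the domain itself and its subdomains (e.g. blog.example.com).
        if host != self.allowed_domain and not host.endswith("." + self.allowed_domain):
            return False
        path = parts.path.lower()
        if path.startswith(self.excluded_prefixes):
            return False  # simple prefix match on the URL path
        return not path.endswith(self.excluded_extensions)
```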

Web scraping tools

When the goal is to extract targeted fields, a variety of scraping tools and APIs come into play. Simple scripts with browser automation (like Selenium) can fetch specific data, while advanced platforms offer robust handling of CAPTCHAs, rotating proxies, and JavaScript-heavy pages. Specialized scraping APIs can automate the entire data retrieval process, delivering structured datasets in formats ready for analysis or integration into business workflows.

If you don’t have much coding experience, we recommend using ParseHub or Octoparse. If you prefer Python, try Scrapy or Beautiful Soup. And if you're more into Node.js, consider using Cheerio and Puppeteer.

Why would you want to use a crawling tool?

If you're looking to audit your website, identify broken links, or perform advanced SEO analysis, a tool like Screaming Frog can be invaluable. This powerful SEO crawler scans your site to detect 404 errors, analyze metadata, spot duplicate content, and gather comprehensive data, giving you the insights needed to optimize performance.

Whether you're monitoring your site or analyzing a competitor’s, a crawling tool offers a structured way to uncover hidden issues and opportunities. Here are a few compelling reasons:

SEO audits

Daily or weekly audits help you to:

- Identify broken links. Detect 404 errors and dead-end pages that harm user experience and rankings.

- Optimize meta tags. Review missing, duplicate, or poorly formatted title tags and meta descriptions to ensure search engine visibility.

- Eliminate duplicate content. Spot repeated or thin content that weakens SEO performance and confuses visitors.

Tools like Screaming Frog crawl your site systematically, flagging these issues and many others for quick fixes.
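Two of these checks are simple enough to sketch with Python's standard library: flagging URLs that answer with 4xx/5xx status codes, and grouping pages that share the same title tag. The helper names are hypothetical, and this only illustrates the idea; it says nothing about how Screaming Frog works internally.

```python
import urllib.error
import urllib.request
from collections import defaultdict
from typing import Callable, Dict, Iterable, List


def http_status(url: str, timeout: float = 10.0) -> int:
    """Send a HEAD request and return the HTTP status code."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # 4xx/5xx responses are raised as HTTPError


def find_broken_links(urls: Iterable[str], get_status: Callable[[str], int] = http_status) -> Dict[str, int]:
    """Return {url: status} for every URL that answers with 400 or higher."""
    broken = {}
    for url in urls:
        status = get_status(url)
        if status >= 400:
            broken[url] = status
    return broken


def find_duplicate_titles(titles: Dict[str, str]) -> Dict[str, List[str]]:
    """Group URLs by normalized <title> text; keep titles used on more than one page."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url, title in titles.items():
        groups[title.strip().lower()].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}
```

Some servers reject HEAD requests with 405, so a production checker would fall back to GET; network failures (`urllib.error.URLError`) are also left unhandled here.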

Competitor website monitoring

A little spying on the competitors has never hurt anybody. And in fact, it even helps your business with:

- Tracking strategic changes. Monitor additions like new product pages, pricing updates, and fresh blog content to stay ahead of market shifts.

- Decoding site structure. Analyze their content hierarchy, top-linked pages, and internal linking strategy to uncover their SEO priorities.
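Tracking changes between crawls can be as simple as comparing two snapshots. In this sketch each snapshot is a hypothetical mapping of URL to a SHA-256 fingerprint of the page's content, collected by whatever crawler you use; the diff reports added, removed, and changed pages.

```python
import hashlib
from typing import Dict, List


def fingerprint(content: str) -> str:
    """Hash page content so changes can be detected without storing full copies."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def diff_snapshots(old: Dict[str, str], new: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare two {url: fingerprint} snapshots taken at different times."""
    return {
        "added": sorted(new.keys() - old.keys()),  # e.g. new product pages
        "removed": sorted(old.keys() - new.keys()),  # pages taken down
        "changed": sorted(url for url in old.keys() & new.keys() if old[url] != new[url]),
    }
```

Hashing raw HTML flags every trivial change (timestamps, rotating ads), so in practice you would fingerprint only the extracted main content.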

Content indexing & site mapping

Content indexing and site mapping are all about understanding what's actually on a website and how it's all connected. Various tools can help your business:

- Optimize for search engines. Visualize how crawlers like Googlebot interpret your site’s structure, ensuring critical pages get indexed.

- Improve internal navigation. Identify orphaned pages and streamline site architecture for both users and search engines.
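Orphaned pages are pages you know exist (for example, from your XML sitemap or CMS) that no internal link points to. Given a link graph from a crawl, the check can be sketched as a reachability search from the homepage followed by a set difference. The graph format and the `/` homepage path are assumptions for illustration.

```python
from collections import deque
from typing import Dict, List, Set


def reachable_from(start: str, links: Dict[str, List[str]]) -> Set[str]:
    """Return every page reachable from `start` by following internal links."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def find_orphans(known_pages: Set[str], links: Dict[str, List[str]], home: str = "/") -> Set[str]:
    """Pages listed in the sitemap/CMS that cannot be reached from the homepage."""
    return known_pages - reachable_from(home, links)
```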

What Google says about web crawling

Google provides transparent guidance about Googlebot's operations and offers best practices for optimizing crawl efficiency. Their key recommendations include:

Core crawling mechanics

Googlebot serves as Google's primary web crawler, systematically following links to discover new and updated content across the web. This automated process forms the foundation of how search engines build their understanding of what exists online, constantly moving from page to page through internal and external links to map out the entire web ecosystem.

Crawl control & optimization

Managing crawler access involves robots.txt files and robots meta tags (for example, <meta name="robots" content="noindex">) to guide which pages should be crawled or indexed by search engines. Google also automatically adapts its crawl rate based on your site's health, such as server response time and error rates. Google retired the manual crawl-rate limiter in Search Console in early 2024; you can still review crawl activity in the Crawl Stats report, and Google suggests temporarily returning 500, 503, or 429 status codes if you urgently need Googlebot to slow down.
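If you run your own crawler, Python's standard library includes `urllib.robotparser`, which is one way to honor robots.txt directives. The sketch below parses a made-up robots.txt for a hypothetical `MyCrawler` user agent and also reads a page's robots meta tag. Note that Python's parser applies the first matching rule rather than Google's most-specific-rule logic, and it may not support every extension Googlebot understands (such as `*` and `$` path wildcards).

```python
from html.parser import HTMLParser
from typing import Set
from urllib.robotparser import RobotFileParser

# Example robots.txt content (made up). Allow is listed before Disallow because
# Python's parser applies the first matching rule, while Google picks the most specific one.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit
Disallow: /private/
Crawl-delay: 2
"""


def load_robots(text: str) -> RobotFileParser:
    """Parse robots.txt text (normally downloaded from https://<site>/robots.txt)."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


class MetaRobotsParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(",") if d.strip())


def meta_robots_directives(html: str) -> Set[str]:
    """Return directives such as {"noindex", "nofollow"} found in the page."""
    parser = MetaRobotsParser()
    parser.feed(html)
    return parser.directives
```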

Modern crawling priorities

With mobile-first indexing now the standard, Google predominantly uses the mobile version of your content for indexing and ranking decisions, making mobile optimization absolutely critical for search visibility. Additionally, submitting an XML sitemap helps Googlebot prioritize and index your most important pages faster, minimizing discovery gaps and ensuring your key content gets found and processed efficiently.
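An XML sitemap follows the sitemaps.org protocol: a `<urlset>` root in the `http://www.sitemaps.org/schemas/sitemap/0.9` namespace containing one `<url>` entry per page, each with a required `<loc>` and optional fields such as `<lastmod>`. A minimal generator built on `xml.etree.ElementTree` might look like this; the page list is a placeholder.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: List[Tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute_url, lastmod_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # must be an absolute URL
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2025-07-01
    body = ET.tostring(urlset, encoding="unicode")  # special characters like & are escaped
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

The resulting file is typically served at the site root and referenced from robots.txt or submitted in Search Console.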

Why use web scrapers?

Web scraping is perfect when you need to grab specific data instead of just wandering around entire websites. While crawling is like exploring a whole neighborhood, scraping is more like going straight to the houses you actually care about and taking notes on what you find. It's a game-changer for businesses that need real data to make smart decisions, and here are three ways companies are using it to get ahead.

Price monitoring (for eCommerce businesses)

If you're selling anything online, you're probably obsessed with what your competitors are charging, and you should be. Web scraping lets you automatically pull product prices, titles, reviews, and stock levels from Amazon, eBay, or wherever your competition hangs out, so you can see exactly what's happening in your market without spending hours clicking around manually. The real magic happens when you set up dynamic pricing that responds to these changes in real time, so when a competitor drops their price or runs out of stock, you can instantly adjust yours to grab more sales or protect your margins.
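A dynamic pricing rule of the kind described above could look like the sketch below. The policy itself (undercut the cheapest in-stock competitor by a fixed step, never drop below a floor, and move to a ceiling when no competitor has stock) is a hypothetical example, not a recommendation; the inputs would come from your scraped competitor data.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import List


@dataclass
class CompetitorOffer:
    price: Decimal  # Decimal avoids floating-point rounding issues with money
    in_stock: bool


def reprice(offers: List[CompetitorOffer], floor: Decimal, ceiling: Decimal,
            step: Decimal = Decimal("0.01")) -> Decimal:
    """Return a new price based on scraped competitor offers (hypothetical policy)."""
    in_stock_prices = [offer.price for offer in offers if offer.in_stock]
    if not in_stock_prices:
        return ceiling  # no competitor can sell right now: protect margins
    target = min(in_stock_prices) - step  # undercut the cheapest available competitor
    return max(floor, min(target, ceiling))  # clamp to [floor, ceiling]
```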

Ad verification (localised campaigns)

Running ads across different countries or regions can be a nightmare because you never really know if they're showing up correctly until customers start complaining. Web scrapers with residential proxies let you check your ads from different locations and devices, making sure your geo-targeting actually works and your ads look right to people in Japan, Germany, or wherever you're trying to reach. Plus, if you're working with affiliates around the world, you can automatically monitor their promotions to make sure they're not going rogue with your brand or breaking your partnership rules.
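Checking an ad from several locations amounts to fetching the same page through proxies in different countries and looking for the expected creative. The sketch below uses `urllib`'s `ProxyHandler`; the proxy URLs, the ad marker string, and the country codes are placeholders you would replace with your provider's details.

```python
import urllib.request
from typing import Callable, Dict


def fetch_via_proxy(url: str, proxy_url: str, timeout: float = 15.0) -> str:
    """Fetch a page through an HTTP(S) proxy so it is served as if from the proxy's location."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )
    with opener.open(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def verify_ad_by_country(
    page_url: str,
    proxies: Dict[str, str],
    ad_marker: str,
    fetch: Callable[[str, str], str] = fetch_via_proxy,
) -> Dict[str, bool]:
    """Return {country: True/False} depending on whether `ad_marker` appears in the page."""
    return {country: ad_marker in fetch(page_url, proxy) for country, proxy in proxies.items()}
```

Checking different devices would additionally mean varying the `User-Agent` header (or using a headless browser with device emulation).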

Brand protection (content & IP monitoring)

Nobody likes finding knockoff versions of their products or seeing their brand logos stolen and used on sketchy websites. Web scraping helps you stay on top of this by automatically scanning the internet for unauthorized use of your content, fake products, or people impersonating your brand on forums and marketplaces. The earlier you catch these problems, the faster you can shut them down before they confuse customers or hurt your reputation.

Google’s stance on web scraping

While Google itself operates one of the world’s largest web crawlers, it enforces strict boundaries around third-party scraping activities.

Google’s scraping policies

- Explicit prohibitions. Bans scraping that violates site owners’ rights, circumvents security (e.g., bypassing login walls), or overloads servers.

- Anti-bot defences. Deploys advanced protections like reCAPTCHA and rate-limiting to safeguard its services.

- Spam penalties. May demote or blacklist sites employing aggressive scraping tactics that violate Google Search Essentials (formerly the Webmaster Guidelines).

Controversies around Google’s data use

- AI training debates. Google’s use of publicly scraped data for AI/ML models has sparked legal and ethical discussions about copyright and fair use.

- Attribution grey areas. Questions persist about whether companies should compensate or credit original content creators for scraped data.

Ethical scraping best practices

The most important rule to follow while collecting data from Google or any other website is to comply with local laws and respect the website's terms of service to avoid legal complications.

- Check robots.txt and meta tags to adhere to the site owners’ crawl directives.

- Prioritize APIs and use official data sources (e.g., Google Search Console API) when available.

- Limit request rates. Avoid disrupting the target sites’ performance.
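Limiting request rates can be as simple as enforcing a minimum interval between requests to the same host. A minimal per-host throttle is sketched below; the two-second default is an arbitrary example (a site's robots.txt `Crawl-delay`, if present, is a natural value to use instead), and the injectable clock and sleep functions exist only to make the logic easy to test.

```python
import time
from typing import Callable, Dict
from urllib.parse import urlparse


class HostThrottle:
    """Ensures at least `min_interval` seconds pass between requests to the same host."""

    def __init__(self, min_interval: float = 2.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request: Dict[str, float] = {}  # host -> time of its last request

    def wait(self, url: str) -> float:
        """Block until `url`'s host may be contacted again; return the seconds waited."""
        host = urlparse(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        delay = 0.0 if last is None else max(0.0, last + self.min_interval - now)
        if delay:
            self._sleep(delay)
        self._last_request[host] = now + delay
        return delay
```

Calling `throttle.wait(url)` right before each request keeps traffic to any single site under one request per `min_interval` seconds, while different hosts do not slow each other down.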

Main challenges and things to consider

Collecting data at scale isn’t straightforward. Whether you’re crawling entire domains or scraping specific datasets, you’re likely to encounter:

- IP blocking. Websites may block repeated requests from a single IP, forcing you to use proxy rotation.

- CAPTCHAs and anti-bot measures. Tools like Google reCAPTCHA are designed to filter out automated requests.

- Dynamic content. JavaScript-heavy or AJAX-based pages can be tougher to parse, requiring headless browsers or specialized APIs.

Technical challenges

Scraping at scale brings a host of technical hurdles that can turn a simple data collection project into a complex engineering challenge.

- Rate limiting. Websites often throttle or block excessive requests. Managing request frequency with delays and proxy rotation helps avoid detection.

- Session management. Maintaining logged-in states (cookies, tokens) is critical for accessing authenticated content, but can be complex to automate.

- Data deduplication. Scraping the same data multiple times wastes resources. Implementing checks for duplicate records ensures clean datasets.

- Incomplete or inconsistent data. Missing fields or irregular formatting require data validation and cleaning post-scrape.

- Site structure changes. Websites frequently update layouts, breaking scrapers. Regular maintenance and adaptive parsing (e.g., AI-based selectors) help mitigate this.
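Deduplication and validation are often handled in a small post-processing step. The sketch below assumes scraped records are dictionaries, treats a hypothetical `url` field as the identity key, and rejects records missing any of a hypothetical set of required fields; which fields count as the key or as required depends entirely on your dataset.

```python
from typing import Iterable, List, Tuple

REQUIRED_FIELDS = ("url", "name", "price")  # hypothetical schema


def clean_records(records: Iterable[dict], key_field: str = "url") -> Tuple[List[dict], List[dict]]:
    """Split records into (unique valid records, rejected incomplete records)."""
    seen_keys = set()
    valid, rejected = [], []
    for record in records:
        # Trim whitespace so " 19.99" and "19.99" are treated as the same value.
        normalized = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        if any(normalized.get(field) in (None, "") for field in REQUIRED_FIELDS):
            rejected.append(record)  # incomplete: missing or empty field
            continue
        key = normalized[key_field]
        if key in seen_keys:
            continue  # duplicate of a record already kept
        seen_keys.add(key)
        valid.append(normalized)
    return valid, rejected
```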

Anti-scraping measures

Websites deploy increasingly sophisticated defenses to block automated data collection, including:

- IP blocking. To avoid bans or other IP-related restrictions, use rotating proxies (residential or datacenter) to mask your original IP address and distribute the traffic.

- CAPTCHAs and anti-bot systems. Tools like 2Captcha or headless browsers such as Puppeteer can help you get past many anti-bot mechanisms.

- Dynamic content. JavaScript-rendered data requires headless browsers (Selenium, Playwright) or reverse-engineering APIs to retrieve data successfully.
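Rotating proxies and user agents usually means cycling through a pool so consecutive requests leave from different IP addresses with different browser signatures. A round-robin sketch with `urllib` follows; the proxy endpoints and user-agent strings are placeholders, and many commercial proxy services handle rotation for you behind a single gateway address.

```python
import itertools
import urllib.request
from typing import Iterable, Tuple


class RotatingClient:
    """Round-robin over proxy URLs and User-Agent strings for successive requests."""

    def __init__(self, proxies: Iterable[str], user_agents: Iterable[str]) -> None:
        self._proxies = itertools.cycle(list(proxies))
        self._user_agents = itertools.cycle(list(user_agents))

    def next_request(self, url: str) -> Tuple[urllib.request.OpenerDirector, urllib.request.Request]:
        """Build an opener bound to the next proxy and a request carrying the next User-Agent."""
        proxy = next(self._proxies)
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        request = urllib.request.Request(url, headers={"User-Agent": next(self._user_agents)})
        return opener, request

    def fetch(self, url: str, timeout: float = 15.0) -> bytes:
        """Send the request through the next proxy in the pool and return the raw body."""
        opener, request = self.next_request(url)
        with opener.open(request, timeout=timeout) as response:
            return response.read()
```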

You can also watch our video on web scraping vs. web crawling to better understand the key differences and use cases of these similarly named actions:

Expert tips & tricks for large-scale crawling and scraping

If you're serious about scraping big websites or handling massive data extraction projects, you can't just write a quick Python script and call it a day. Real-world scraping at scale is a whole different beast that requires proper planning, the right tools, and some sneaky tricks to stay under the radar.

Choose the right tools and frameworks

Your scraping stack determines how efficiently you can scale.

- Use headless browsers wisely – tools like Puppeteer or Playwright are great for JavaScript-heavy sites, but are resource-intensive.

- Scrapy for scale – its built-in request scheduling, middleware support, and async handling make it ideal for large-scale tasks.

- Rotate user agents and proxies – this helps you avoid detection and bans from servers.

Optimize for speed and resilience

Large-scale scraping can quickly overwhelm your system or get you blocked. To keep things fast and resilient:

- Use asynchronous requests with aiohttp or other frameworks that support async I/O.

- Use caching and save responses where possible to reduce redundant hits.

- Add retry logic and error handling to gracefully manage timeouts, 5xx errors, or CAPTCHAs.
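These three ideas can be combined in a small `asyncio` sketch. Here `fetch` is any coroutine function that returns a page or raises on timeouts and 5xx errors (for example, one you build on aiohttp); the concurrency limit, retry count, and exponential backoff delays are illustrative defaults, not tuned values, and the optional `cache` dictionary skips URLs already fetched.

```python
import asyncio
import random
from typing import Awaitable, Callable, Dict, List, Optional

Fetch = Callable[[str], Awaitable[str]]


async def fetch_with_retry(fetch: Fetch, url: str, retries: int = 3, base_delay: float = 1.0) -> str:
    """Call `fetch`, retrying failures with exponential backoff plus jitter."""
    for attempt in range(retries + 1):
        try:
            return await fetch(url)
        except Exception:  # narrow this to your client's timeout/HTTP errors in real code
            if attempt == retries:
                raise  # out of retries: surface the last error
            # Wait base_delay * 1, 2, 4, ... seconds, plus up to 10% random jitter.
            delay = base_delay * (2 ** attempt)
            await asyncio.sleep(delay + random.uniform(0, delay * 0.1))
    raise ValueError("retries must be >= 0")


async def fetch_all(urls: List[str], fetch: Fetch, cache: Optional[Dict[str, str]] = None,
                    concurrency: int = 10, **retry_options) -> Dict[str, str]:
    """Fetch URLs concurrently (at most `concurrency` at once), skipping cached ones."""
    cache = {} if cache is None else cache
    semaphore = asyncio.Semaphore(concurrency)

    async def worker(url: str) -> None:
        async with semaphore:
            cache[url] = await fetch_with_retry(fetch, url, **retry_options)

    pending = [url for url in dict.fromkeys(urls) if url not in cache]  # dedupe, skip cached
    await asyncio.gather(*(worker(url) for url in pending))
    return {url: cache[url] for url in urls}
```

Persisting the cache between runs (for example, to disk) and treating CAPTCHA pages as a distinct, non-retryable failure are natural next steps.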

Top tools for scalable scraping

The right tools can make the difference between a scraping project that works for a few hundred pages and one that can handle millions without breaking a sweat. While you could theoretically build everything from scratch, there are some battle-tested tools and frameworks that have already solved the hard problems of large-scale data extraction.

| Tool | Best for | Key advantage |
|---|---|---|
| Scrapy | Structured, high-speed crawling | Python framework, extensible |
| Selenium | Browser automation & dynamic content | Cross-browser support |
| Playwright | Modern JS-heavy sites | Multi-browser (Chromium, Firefox, and WebKit) |
| Web Scraping API | Scraping advanced websites at scale | 125M+ IPs, ready-made scraping templates, 100% success rate |

Legal risks

Ignoring web scraping best practices exposes you to serious legal consequences, including copyright lawsuits under the DMCA, breach-of-contract claims (as demonstrated by LinkedIn's case against hiQ Labs), and even criminal liability under laws like the U.S. Computer Fraud and Abuse Act. On the technical side, aggressive scraping triggers defenses like IP blacklisting, CAPTCHAs, and rate limiting, which can corrupt your datasets or permanently block access. Major platforms like Twitter (now X) and Reddit have also restricted API access in response to scraping abuse, punishing even legitimate users.

Reputation and access costs

Beyond legal and technical hurdles, unethical scraping damages professional credibility. Companies increasingly vet partners' data sourcing methods, and violators risk being flagged by fraud and IP-reputation services like Scamalytics. Lost partnerships and restricted access to critical platforms can outweigh any short-term gains from unregulated scraping.

Bottom line

Web crawling and scraping might sound like the same thing, but they're actually pretty different tools for different jobs. Crawling is like sending a robot to explore an entire website and make a map of everything it finds, perfect if you're doing SEO audits, trying to understand a site's structure, or building the next Google. Scraping is more like a sniper rifle that goes straight for the specific data you want, whether that's product prices, customer reviews, or contact information from competitor sites.

The trick is knowing which one you actually need. If you're trying to get the big picture of how a website is organized or you want to index everything for analysis, crawling is your friend. But if you just want to grab specific pieces of information to feed into your own systems or keep tabs on what competitors are doing, scraping will get you there faster and with way less hassle. Most businesses end up using both at different times, depending on whether they need the fire hose or the laser beam approach to data collection.

About the author

Justinas Tamasevicius

Head of Engineering

Justinas Tamaševičius is Head of Engineering with over two decades of expertise in software development. What started as a self-taught passion during his school years has evolved into a distinguished career spanning backend engineering, system architecture, and infrastructure development.

Connect with Justinas via LinkedIn.

All information on Decodo Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.