Top 10 MCPs for AI Workflows in 2026

MCP has shifted from niche adoption to widespread use, with major platforms like OpenAI, Microsoft, and Google supporting it natively. Public directories now feature thousands of MCP servers from community developers and vendors, covering everything from developer tools to business solutions.

In this guide, you'll learn what MCP is and why it matters for real-world AI agents, which 10 MCP servers are currently most useful, and how to safely choose and combine MCPs for your setup.

Mykolas Juodis

Last updated: Jan 13, 2026

9 min read

What is an MCP?

Model Context Protocol (MCP) is an open protocol that standardizes how LLM applications connect to external tools, applications, and data sources. Often described as the "USB-C for AI", it provides a common language for hosts to discover and invoke capabilities across different services.

An MCP setup has three core components:

- Host. The LLM application (like an IDE assistant or chatbot) that runs the model and coordinates MCP connections.

- MCP client. A connector that the host launches to maintain a 1:1 session with a server.

- MCP server. A service endpoint that exposes capabilities, such as creating GitHub issues or fetching Airtable records.

Through this architecture, AI systems can access live data and trigger operations in real time via standardized JSON-RPC 2.0 messages defined by the MCP specification.
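To make the message format concrete, here's a minimal sketch of the JSON-RPC 2.0 envelopes a client might send to discover and invoke tools. The tools/list and tools/call method names come from the MCP specification; the create_issue tool and its arguments are hypothetical placeholders, not a real server's schema.

```python
import itertools
import json
from typing import Optional

# JSON-RPC request ids should be unique within a session.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request() -> dict:
    """Ask the server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool_request(name: str, arguments: dict) -> dict:
    """Invoke one tool by name with a dict of arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(json.dumps(list_tools_request()))
    # "create_issue" is a hypothetical tool name used for illustration only.
    print(json.dumps(call_tool_request("create_issue", {"title": "Fix login bug"})))
```

In practice, an MCP SDK builds these messages for you and also handles the initialization handshake, so you would rarely write them by hand.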

Why MCPs are essential for AI workflows

Adopting MCP servers brings concrete benefits for developers, organizations, and end users alike:

- Automation of multi-step workflows. MCPs allow hosts to chain tool calls across services while maintaining context. These sequences can run fully automatically or with user consent at key steps – for example, fetching a file, analyzing it, then updating another system under host-controlled approvals.

- Interoperability and standardization. By standardizing capability discovery and JSON-RPC messaging, MCPs eliminate many one-off integrations. Teams can integrate once and reuse broadly, with minimal extra implementation effort, even when swapping or combining tools.

- Developer productivity. Official SDKs and a growing catalog of community MCP servers help teams build faster. Instead of writing glue code from scratch, developers can speed up prototypes and reduce maintenance overhead.

- Enterprise-ready controls. MCPs support transport-level authorization so clients can call restricted servers on a user's behalf. While the protocol doesn't mandate role-based access control or audit logging, hosts and servers can implement these features, along with consent prompts and per-tool identity scoping.

Together, these capabilities make AI assistants more context-aware, capable, and trustworthy.
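The multi-step workflow with host-controlled approvals can be sketched as follows. This is a simplified illustration, not how any particular host implements it: the three tools are hypothetical in-memory stand-ins for real MCP tool calls, and the approve callback stands in for a user consent prompt.

```python
from typing import Any, Callable, Dict, List, Set, Tuple

Tool = Callable[[dict], Any]
# A step is (tool name, function that builds arguments from the previous result).
Step = Tuple[str, Callable[[Any], dict]]


def run_chain(
    steps: List[Step],
    tools: Dict[str, Tool],
    needs_approval: Set[str],
    approve: Callable[[str, dict], bool],
) -> Tuple[Any, List[str]]:
    """Run tool calls in order, carrying each result into the next step.

    Tools listed in needs_approval run only if approve() returns True;
    a denial stops the chain and returns the last result obtained.
    """
    result: Any = None
    log: List[str] = []
    for name, build_args in steps:
        args = build_args(result)
        if name in needs_approval and not approve(name, args):
            log.append(f"{name}: denied")
            break
        result = tools[name](args)
        log.append(f"{name}: ok")
    return result, log


# Hypothetical stand-ins for real MCP tool calls.
TOOLS: Dict[str, Tool] = {
    "fetch_file": lambda a: "alpha,beta,gamma",
    "analyze": lambda a: {"items": len(a["text"].split(","))},
    "update_record": lambda a: f"saved {a['items']} items",
}

STEPS: List[Step] = [
    ("fetch_file", lambda prev: {"path": "report.csv"}),
    ("analyze", lambda prev: {"text": prev}),
    ("update_record", lambda prev: prev),
]

if __name__ == "__main__":
    # Only the write step requires consent; here consent is always granted.
    print(run_chain(STEPS, TOOLS, {"update_record"}, lambda name, args: True))
```

Gating only the write step keeps read-only calls fast while leaving a person in control of changes to other systems.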

Top 10 MCP servers for AI workflows in 2026

Many MCP servers now connect AI systems to everything from code repositories to design tools. Here are 10 of the most notable MCP servers shaping AI workflows in 2026.

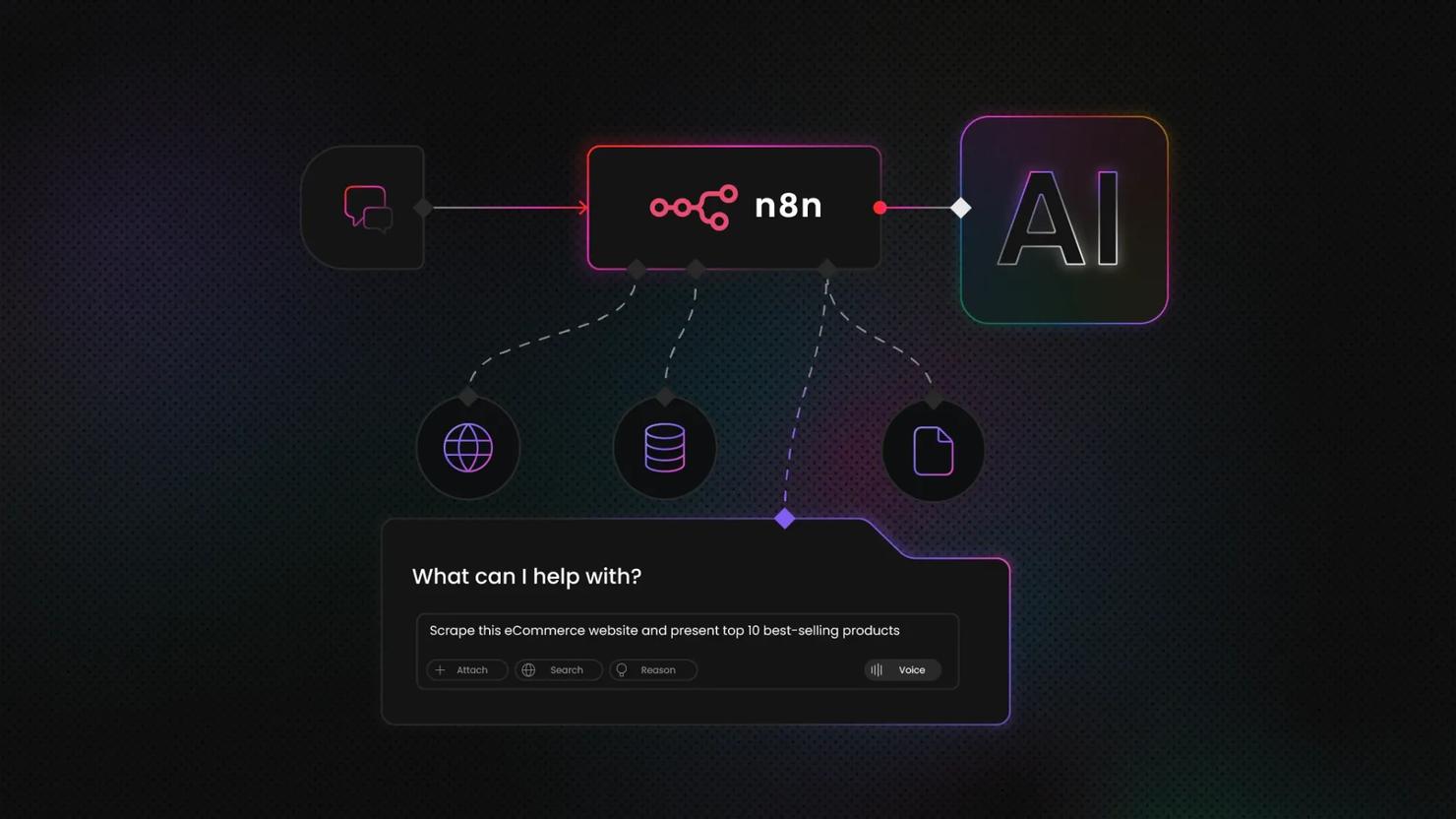

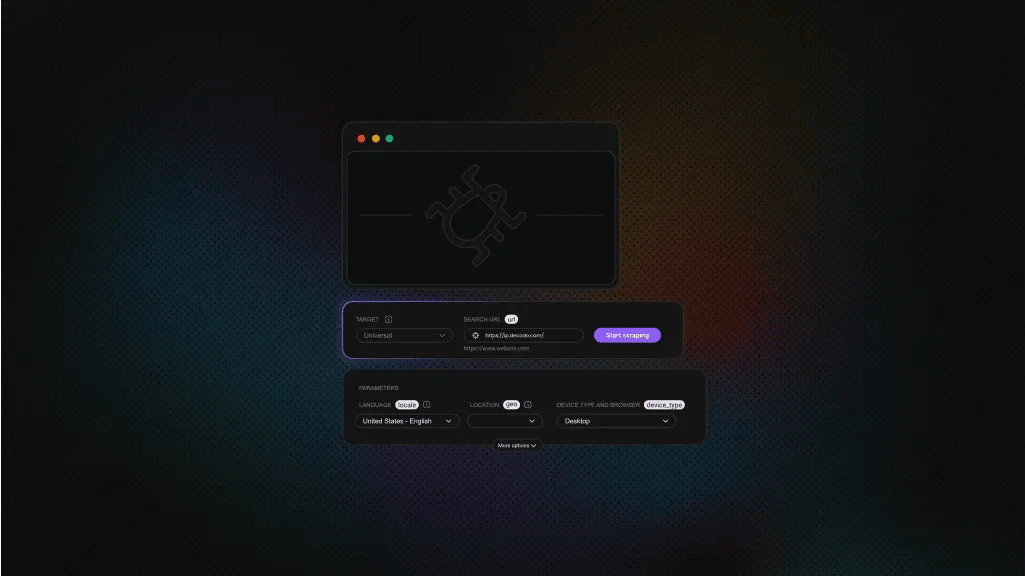

1. Decodo MCP – web scraping and data extraction

Decodo’s MCP server connects AI agents to Decodo's comprehensive scraping infrastructure, letting them fetch live web content and search results on demand. You can run it locally as a Node.js server with your Decodo Web Scraping API credentials or use a hosted option.

Key features

- Built-in scraping tools – including scrape_as_markdown (returns clean Markdown), google_search_parsed (parsed Google results), amazon_search_parsed (parsed Amazon listings), reddit_post (parsed Reddit posts), reddit_subreddit (parsed Reddit subreddits).

- JavaScript-heavy site support – optional headless rendering via jsRender to execute client-side scripts and capture dynamic content.

- Anti-blocking and geo targeting – leverages Decodo's Web Scraping API for IP rotation with country and region targeting.

- Flexible output controls – tune responses with geo, locale, tokenLimit, and fullResponse to control content length and payload structure.

- Hosted or local deployment – run locally as a Node.js MCP server or use the hosted option via Smithery. Both authenticate with your Decodo Web Scraping API key.

- Ready-to-use in LLMs – Decodo’s MCP server can be integrated directly with the most popular AI-powered tools and LLMs, including GPTs, Claude Desktop, Cursor, Gemini, and others.

- Smithery-compatible – Decodo's MCP server can be plugged into any Smithery-enabled workflow, making it effortless to pair real-time web data with your favorite AI tools, automation platforms, or custom agents without building complex integrations from scratch.

Best use cases

- Live data retrieval for research assistants, Q&A bots, or pricing and SEO monitoring.

- Adding fresh, citeable web context to LLM responses.

For example, you can ask your AI assistant, “Fetch the current GDP per capita of Japan from Wikipedia”. The AI calls Decodo's scraping tool, retrieves the page, processes it, and returns the statistic.
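Under the hood, a request like this becomes a tool call. The sketch below builds one for scrape_as_markdown using the option names listed above (jsRender, geo, tokenLimit). The url argument name, the value types, and the example values are assumptions made for illustration; check the schema the server returns from tools/list for the real contract.

```python
import json
from typing import Optional


def scrape_as_markdown_call(
    url: str,
    *,
    js_render: bool = False,
    geo: Optional[str] = None,
    token_limit: Optional[int] = None,
) -> dict:
    """Build a tools/call request for the scrape_as_markdown tool.

    Optional settings are included only when set, so the server's
    defaults apply otherwise.
    """
    arguments: dict = {"url": url}  # "url" is an assumed argument name
    if js_render:
        arguments["jsRender"] = True
    if geo is not None:
        arguments["geo"] = geo
    if token_limit is not None:
        arguments["tokenLimit"] = token_limit
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "scrape_as_markdown", "arguments": arguments},
    }


if __name__ == "__main__":
    request = scrape_as_markdown_call(
        "https://en.wikipedia.org/wiki/Economy_of_Japan",
        token_limit=2000,  # illustrative cap on response length
    )
    print(json.dumps(request, indent=2))
```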

Setup

You can install Decodo's MCP server from GitHub or follow our step-by-step setup guide.

2. GitHub MCP – code repositories and DevOps

The GitHub MCP server gives AI assistants secure, real-time access to GitHub, letting them browse and search code, manage issues and pull requests, and automate repository tasks.

Key features

- Browse and search repositories, files, commits, and project history.

- Create and update issues and pull requests, review or merge changes, and manage notifications and discussions.

- CI/CD and security insights via GitHub Actions, Code Scanning, and Dependabot.

- Safety and scope controls via read-only mode and toolset scoping to limit capabilities.

Best use cases

- AI-assisted coding, repository search and analysis, and automated issue triage.

- DevOps agents that open or update PRs and surface build or security insights from Actions and Code Scanning.

For example, ask “Add a CODEOWNERS file in /api and open a PR.” The server can create the file, commit it, and open the pull request.

Setup

Integration is straightforward through the GitHub MCP server repository.

3. Slack MCP – workplace communication and collaboration

The Slack MCP server connects AI assistants to Slack, letting them read and search channel, thread, and DM history, with optional posting capabilities. This is a community project, not an official Slack product.

Key features

- Multiple connection options. Supports stdio and SSE transports; outbound proxy via SLACK_MCP_PROXY.

- Flexible authentication. Stealth mode using xoxc/xoxd tokens or OAuth with user xoxp tokens. Posting is disabled by default, but can be enabled via SLACK_MCP_ADD_MESSAGE_TOOL with channel allowlisting.

- Smart history and search. Fetch messages by date range or count; search across channels and DMs with filters for user, channel, date, and thread context.

Best use cases

- Summarizing discussions, stand-ups, or generating weekly team recaps.

- Q&A over historical Slack content and posting updates when enabled.

For example, the AI retrieves the week's #release-planning messages, summarizes the main discussion threads, and if posting is enabled, sends the recap back to the channel.

Setup

Follow the step-by-step setup in the Slack MCP GitHub repository.

Just a heads up – this server can access DMs and multi-person IMs if your token permits. Make sure usage complies with your organization's Slack policies.

4. Figma MCP – design files and design-to-code

The Figma MCP server gives coding assistants a structured design context by simplifying Figma API data into a lean, LLM-ready view of layouts and styles.

Key features

- Simplified design data. Converts raw Figma API output into a streamlined representation of layouts and styles for more accurate code generation.

- Targeted retrieval. Paste a file, frame, or group link, and the server fetches only the relevant design metadata.

- Local integration. Runs locally via npx figma-developer-mcp with a Figma access token and is optimized for Cursor.

Best use cases

- Converting Figma designs into production-ready components with high design-to-code fidelity.

- Accelerating UI implementation in AI-powered IDEs by providing structured design context instead of relying on screenshots.

Here's an example – paste a Figma frame link and ask, “Generate the React component.” The MCP retrieves compact layout and style metadata from Figma, then produces matching code.

Setup

You can connect it by following the Figma MCP GitHub repository. Figma also ships an official Dev Mode MCP server that exposes design context to AI tools from the desktop app.

5. Notion MCP – documents, notes, and knowledge bases

The Notion MCP server lets assistants securely search, read, and write across pages, databases, and comments in your workspace. You can use the hosted endpoint (OAuth) or run the open-source server locally with a Notion integration token.

Key features

- Workspace-wide tools. search (across Notion and connected Slack/Drive/Jira), fetch, create-pages, update-page, move-pages, duplicate-page, create-database, update-database, create-comment, get-comments, get-users, get-user, get-self.

- Permission-aware access. Hosted OAuth mirrors your account’s permissions; the open-source server uses a Notion integration token and only sees content you explicitly connect.

- Flexible hosting options. Use Notion’s hosted MCP at mcp.notion.com/mcp (streamable HTTP) or mcp.notion.com/sse (SSE). For local runs, use @notionhq/notion-mcp-server with NOTION_TOKEN. STDIO is supported locally, and you can wrap the hosted endpoint for STDIO with mcp-remote.

Best use cases

- Summarizing roadmaps, wikis, or knowledge bases, and answering workspace questions through search and fetch.

- Creating or updating documents and tasks directly from chat – meeting notes, status updates, or new project pages.

For example, you can ask, “Summarize our Q3 roadmap.” The AI searches your workspace, fetches relevant pages, and returns a concise summary.

Setup

You can set up this MCP server by following the Notion MCP GitHub repository.
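As a rough illustration of the two hosting options, the sketch below generates a client configuration for either the local open-source server or the hosted endpoint wrapped with mcp-remote. It assumes the mcpServers JSON layout used by clients such as Claude Desktop and Cursor, an https:// scheme for the hosted URL, and a placeholder token; your client's config format may differ.

```python
import json


def local_notion_server(token: str) -> dict:
    """Local open-source server, authenticated with a Notion integration token."""
    return {
        "command": "npx",
        "args": ["-y", "@notionhq/notion-mcp-server"],
        "env": {"NOTION_TOKEN": token},
    }


def hosted_notion_via_stdio() -> dict:
    """Hosted endpoint wrapped with mcp-remote for STDIO-only clients."""
    return {
        "command": "npx",
        "args": ["-y", "mcp-remote", "https://mcp.notion.com/mcp"],
    }


def client_config(use_hosted: bool, token: str = "") -> dict:
    """Return a config dict in the assumed mcpServers layout."""
    server = hosted_notion_via_stdio() if use_hosted else local_notion_server(token)
    return {"mcpServers": {"notion": server}}


if __name__ == "__main__":
    # "YOUR_NOTION_TOKEN" is a placeholder, not a real credential.
    print(json.dumps(client_config(use_hosted=False, token="YOUR_NOTION_TOKEN"), indent=2))
```

The hosted variant carries no token in the config because authentication happens through OAuth in the browser.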

6. Stripe MCP – payments and financial operations

The Stripe MCP server lets assistants work with core Stripe objects – customers, invoices, subscriptions, refunds, disputes, products, prices, payment links, and balance – and search Stripe documentation and support articles.

Key features

- Hosted remote server. Streamable HTTP at mcp.stripe.com with OAuth and dynamic client registration. Admins can review or revoke authorized MCP clients.

- Bearer token option. For agent backends, call the remote server with a restricted API key to limit available capabilities.

- Local deployment. Run npx -y @stripe/mcp and choose which tools to expose (for example, tools=all).

- Built-in documentation search. The search_documentation tool returns official answers with shareable links directly from Stripe's knowledge base.

Best use cases

- Automating billing or support workflows, such as issuing refunds, updating invoices, or changing subscriptions.

- Answering payment-related questions with authoritative links from Stripe’s documentation.

For example, ask, “Show this customer’s recent payment intents, refund the most recent one, and include the refund-policy link.” The AI lists the payment intents, processes the refund, and returns the relevant documentation link.

Setup

You can plug in this MCP server by following the Stripe MCP documentation.
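For the bearer token option, here's a hedged sketch of how an agent backend might prepare a request to the remote server with a restricted key. It only builds the request and doesn't send it. The https:// scheme, the rk_ prefix check, and the bare tools/list body are assumptions for illustration; a real session starts with the MCP initialization handshake, which an SDK normally handles.

```python
import json
import urllib.request

# Host taken from the article; the https scheme is an assumption.
STRIPE_MCP_URL = "https://mcp.stripe.com"


def build_tools_list_request(restricted_key: str) -> urllib.request.Request:
    """Prepare (but do not send) a tools/list request with a restricted key.

    Refuses keys that don't look restricted, so a full secret key
    isn't handed to an agent by mistake.
    """
    if not restricted_key.startswith("rk_"):
        raise ValueError("expected a restricted API key (rk_...), not a full secret key")
    body = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}).encode("utf-8")
    return urllib.request.Request(
        STRIPE_MCP_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {restricted_key}",
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with JSON or an event stream.
            "Accept": "application/json, text/event-stream",
        },
    )


if __name__ == "__main__":
    # "rk_test_placeholder" is a dummy value, not a working key.
    request = build_tools_list_request("rk_test_placeholder")
    print(request.get_method(), request.full_url)
```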

7. Zapier MCP – multi-app workflow automation

The Zapier MCP server gives your AI controlled access to 8,000+ apps, so it can send messages, create records, and schedule events without custom integrations. Generate your endpoint at mcp.zapier.com, allowlist the specific actions and accounts, then connect from any MCP-compatible client.

Key features

- Scoped action control. Allowlist the exact actions your AI can use, per app, in the MCP server.

- Built-in authentication and infrastructure. Zapier handles OAuth/account linking, API limits, and security for connected apps.

- Broad client compatibility. Quick start guides for Claude, Cursor, Windsurf, and other MCP-compatible tools.

Best use cases

- Executing one-off cross-app actions, such as adding a CRM entry, scheduling a meeting, or posting to Slack.

- Running sequenced actions in a single session, or setting up Zapier Agents for always-on background automations.

For example, ask, “Onboard ACME Corp.” and let the AI create a CRM record, schedule a kickoff meeting, open a Slack channel, and send a welcome email, using only the actions you’ve approved.

Setup

Connect your AI to Zapier by following the Zapier MCP page.

8. Linear MCP – issue and project tracking

The Linear hosted MCP server lets AI assistants search, create, and update issues, projects, and comments in your workspace, with OAuth-scoped access.

Key features

- Core Linear operations. Search, create, and update issues, projects, and comments.

- Ready-to-use hosted endpoints. SSE and streamable HTTP are available for every user.

- Permission-aware access. OAuth ensures only actions your account allows are available. API keys and user tokens aren't supported currently.

Best use cases

- Stand-ups and planning. Create tasks from meeting notes or check task status by assignee.

- Triage and reporting. List open bugs by label, owner, or project for faster prioritization.

For example, you can ask, “Create a critical-bug triage for Project Alpha and assign owners.” The AI finds critical issues, compiles a report, and opens or updates issues as needed.

Setup

You can set up Linear MCP by following their extensive documentation page.

9. Sentry MCP – error monitoring and incident response

The Sentry MCP server securely brings your org’s issue and debugging context into MCP-compatible clients with OAuth-scoped access, and can invoke Seer for root-cause analysis and automated fix recommendations.

Key features

- Comprehensive toolset. Over 16 tools for querying organizations and projects, searching issues and events, retrieving detailed issue information, and managing DSNs.

- Seer integration. Trigger automated analysis, get fix suggestions, and track resolution status directly through your AI client.

- Flexible deployment. Connect to the hosted server at mcp.sentry.dev/mcp (streamable HTTP with SSE fallback) or run locally with your Sentry token and required scopes org:read, project:read, project:write, team:read, team:write, event:write.

Best use cases

- Debugging and on-call triage – find the latest errors, analyze stack traces, and check affected releases.

- Reporting and project summaries – review open issues by severity, track trends across projects.

For example, ask, “Find errors in components/UserProfile.tsx from the last day, then run Seer on ISSUE-123 and report the outcome.” The AI then searches recent events, retrieves context, invokes Seer analysis, and returns actionable results.

Setup

You can connect it by following the Sentry MCP documentation.
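If you run the server locally, it can help to check a token's scopes before wiring it into a client. The sketch below compares a list of granted scopes against the required scopes listed above. How you obtain the granted list is up to you; this helper is an illustration and not part of Sentry's tooling.

```python
from typing import Iterable, List

# Required scopes for a local run, as listed in the key features above.
REQUIRED_SCOPES = frozenset({
    "org:read",
    "project:read",
    "project:write",
    "team:read",
    "team:write",
    "event:write",
})


def missing_scopes(granted: Iterable[str]) -> List[str]:
    """Return the required scopes that the token lacks, sorted by name."""
    return sorted(REQUIRED_SCOPES - set(granted))


if __name__ == "__main__":
    # Example: a read-only token is missing every write scope and team:read.
    print(missing_scopes(["org:read", "project:read"]))
```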

10. Airtable MCP – databases and spreadsheets (no-code data)

The Airtable MCP server runs locally and connects your base to MCP-compatible clients. Assistants can explore schemas with list_bases, list_tables, describe_table, do full CRUD on records, and create or update tables and fields. The AI sees what exists before it writes or changes anything.

Key features

- Complete database access. Includes tools for listing bases and tables, describing table structure, searching records, and creating or updating both data and schemas. The AI gets a full toolkit: list_bases, list_tables, describe_table, list_records, search_records, get_record, create_record, update_records, delete_records, create_table, update_table, create_field, and update_field.

- Secure token authentication. Uses Airtable Personal Access Tokens with granular scopes like schema.bases:read, data.records:read, and optional write permissions. You can limit tokens to specific bases for better security.

- Schema visibility for AI. Exposes table schemas as MCP resources (airtable://<baseId>/<tableId>/schema) so agents can understand your data structure and plan changes intelligently.

Best use cases

- Conversational queries over structured team data such as pipelines, inventories, or CRM records.

- Rapid AI prototypes using Airtable as a lightweight, no-code datastore.

Here's a handy example – ask, “How many deals are in ‘Negotiation’? Add ‘Company XYZ’ as ‘Interested’.” The AI searches your records, returns the count, and creates the new entry automatically.

Setup

You can plug it into your favorite AI tools through the Airtable MCP GitHub repository.
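The schema resource URIs follow the airtable://<baseId>/<tableId>/schema pattern, which is easy to build and parse in a client. Here's a small sketch; the base and table IDs are made-up placeholders, and the helper names are hypothetical.

```python
import re
from typing import Tuple

# Matches airtable://<baseId>/<tableId>/schema and captures both IDs.
_SCHEMA_URI = re.compile(r"^airtable://([^/]+)/([^/]+)/schema$")


def schema_uri(base_id: str, table_id: str) -> str:
    """Build the MCP resource URI for a table's schema."""
    return f"airtable://{base_id}/{table_id}/schema"


def parse_schema_uri(uri: str) -> Tuple[str, str]:
    """Extract (base_id, table_id) from a schema resource URI."""
    match = _SCHEMA_URI.match(uri)
    if match is None:
        raise ValueError(f"not an Airtable schema URI: {uri!r}")
    return match.group(1), match.group(2)


if __name__ == "__main__":
    # Placeholder IDs for illustration only.
    uri = schema_uri("appExampleBase", "tblExampleTable")
    print(uri)
    print(parse_schema_uri(uri))
```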

Comparison of the top 10 MCP servers

To help summarize the above reviews, the table below compares the key attributes of each MCP server:

| MCP server | Provider | Hosting | Core tools and best fits | Badges (auth/controls) |
| --- | --- | --- | --- | --- |
| Decodo MCP | Decodo (official) | Hosted locally | scrape_as_markdown, google_search_parsed, amazon_search_parsed, reddit_post, reddit_subreddit, headless JavaScript rendering, geo targeting, and IP rotation. Best for live web research, price monitoring, and competitive intelligence. | API key |
| GitHub MCP | GitHub (official) | Hosted locally | Browse and search code, create or update issues and PRs, repository triage, and automation. | OAuth, PAT, scoped read/write |
| Slack MCP | Community (korotovsky) | Local | Read and search channels, threads, and DMs, optional posting (disabled by default). Ideal for summaries, stand-ups, and Q&A over historical content. | OAuth or session token, “post message” tool disabled by default |
| Figma MCP | Community (GLips/Framelink), design-to-code | Local | Retrieve element hierarchy, styles, and components, optimized design context for AI-driven code generation. | Figma PAT, read-only |
| Notion MCP | Notion (official), docs/notes | Hosted, local OSS | Search, fetch, create, or update pages, move or duplicate pages, create/update databases, manage comments, and retrieve user data. Useful for knowledge Q&A and live doc/task updates. | OAuth (hosted), integration token (local), permission-aware |
| Stripe MCP | Stripe (official) | Hosted (local) | Manage customers, invoices, subscriptions, refunds, and disputes, search_documentation tool for official answers. Best for billing automation and support assistants. | OAuth (DCR), restricted API key option, scoped tools |
| Zapier MCP | Zapier (official), multi-app actions | Hosted | Trigger actions across 8,000+ apps, allowlist exactly which actions your AI can perform. Perfect for orchestrating cross-app workflows directly from chat. | Zapier account auth, action allowlisting, beta rate limits |
| Linear MCP | Linear (official), issues/projects | Hosted | Find, create, and update issues, projects, and comments. Strong fit for agile planning, stand-ups, and bug triage. | OAuth (dynamic client registration), no API keys |
| Sentry MCP | Sentry (official), error tracking | Hosted (local) | Query issues/events, stack traces, and releases, Seer for root-cause analysis, manage DSNs. Great for on-call triage and incident response. | OAuth (hosted) or token (local), streamable HTTP/SSE |
| Airtable MCP | Community (domdomegg), database/no-code | Local | Schema discovery plus full CRUD on bases, tables, and records. Useful for bots, trackers, and lightweight data prototypes. | PAT with scopes (schema.bases:read, data.records:read/write) |

Trends and future of MCPs

As MCPs continue growing in 2026, several key trends are emerging:

- Open-source acceleration. Community catalogs, SDKs, and starter kits are making it easy to build private, internal MCP servers that safely expose enterprise APIs to AI agents under organizational policy controls.

- Enterprise adoption is led by major vendors. OpenAI supports MCP across the Responses API and Agents SDK, with similar capabilities from Microsoft Copilot Studio, Anthropic, and Google Gemini. Governed tool use (approvals, audit trails, scoped permissions) is becoming standard.

- Creative and 3D tool integration. While platforms like Zapier MCP already provide extensive prebuilt integrations, new MCP connectors are being prototyped for creative and design applications, including early 3D workflows – letting citizen developers assemble AI workflows from prebuilt connectors without writing code.

- Standardization and governance. As adoption grows, enterprise-grade features such as human-in-the-loop approvals, audit logging, and fine-grained permission controls are becoming standard in MCP gateways.

- Deeper SDLC integration. With GitHub, Linear, and Sentry already providing MCPs, the next wave will likely add connectors for CI/CD pipelines and cloud platforms, enabling agents to draft fixes, open PRs, run tests, and trigger deployments.

How to choose the right MCP

The right MCP servers depend on your project needs and team setup. Here's what to weigh:

- Start with your use case. What does the AI need to access or accomplish? For development work, GitHub, Linear, and Sentry make sense. For knowledge management, Notion works well. Cross-app workflows call for something like Zapier MCP.

- Factor in your security posture. Enterprise environments should prioritize official MCPs with OAuth and scoped permissions. When OAuth isn’t available, use tightly scoped API keys or PATs. If you’re considering community servers, review the code, pin specific versions, and limit token scopes.

- Decide between hosted and local deployment. Hosted servers are simpler – just a URL and an OAuth setup. Local deployment keeps data in-house and can work when a client doesn’t support remote servers; you still depend on the upstream service being available. Choose hosted for speed, local for control and compliance.

- Match complexity to your team's technical depth. Non-technical teams benefit from plug-and-play hosted options like Zapier MCP. Developer teams can handle community servers and custom setups, but remember these require ongoing maintenance.

- Understand data limitations. Most MCPs work best with targeted queries rather than bulk data exports. For example, Airtable paginates results (100 records per page) and has rate limits (5 requests per second per base).

- Keep your toolset lean. Large toolsets can degrade model performance and increase security risks. For sensitive actions like processing refunds, enable human confirmation. Most servers and clients offer allowlisting or tool disabling features.

In short, start with one server, observe how the AI uses it, then expand gradually.
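To see why bulk exports are a poor fit, here's a back-of-the-envelope calculation using the Airtable limits mentioned above (100 records per page, 5 requests per second per base). It's a lower bound that ignores network latency and model processing time, and the function name is hypothetical.

```python
import math
from typing import Tuple

PAGE_SIZE = 100          # records per page, per the limits cited above
REQUESTS_PER_SECOND = 5  # rate limit per base, per the limits cited above


def export_cost(record_count: int) -> Tuple[int, float]:
    """Return (page requests needed, minimum seconds) to read record_count records."""
    # Even an empty table takes one request to list.
    pages = max(1, math.ceil(record_count / PAGE_SIZE))
    return pages, pages / REQUESTS_PER_SECOND


if __name__ == "__main__":
    pages, seconds = export_cost(12_500)
    print(f"{pages} requests, at least {seconds:.0f} seconds")
```

Reading 12,500 records takes 125 page requests and at least 25 seconds at the rate limit, so a filtered query that returns a few dozen records is usually the better request to hand an agent.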

Bonus – choosing an MCP client

MCP clients are the applications that connect servers into your workflow – whether that's chat apps, IDEs, or desktop tools. Here are some good options to consider:

- Claude Desktop offers the most straightforward setup. This desktop application provides robust MCP support with one-click server installation through "Desktop Extensions". You can add servers from a link, then review and approve tool calls before they execute. It's an excellent choice for getting started without any configuration overhead.

- Cursor integrates MCP directly into the development environment. Add servers through Cursor's MCP settings and run agent workflows alongside your code. This works particularly well for developers who spend most of their time in an IDE.

- Windsurf targets enterprise environments with its Plugin Store and team management capabilities. You can install MCP plugins or add custom ones while maintaining organizational controls over what tools are permitted. This makes it suitable for teams that need standardized, policy-driven configurations.

- Cline brings MCP functionality to VS Code through an extension. Install Cline, connect your servers via the interface, and run agent workflows directly within your repositories. This is ideal when you want MCP capabilities without leaving VS Code.

- LibreChat provides an open-source, self-hosted chat interface with MCP support. Configure servers in librechat.yaml and set up multi-user environments where each user authenticates to their own accounts. This option works best when you need complete control or on-premises deployment.

Bottom line

We've introduced MCP concepts and explored the top 10 MCP servers making waves this year, from Decodo's web scraping MCP (which can transform an AI into a web data powerhouse) to Zapier's all-in-one integration MCP (connecting AI to thousands of apps).

Companies like GitHub, Notion, Stripe, Linear, and Sentry are officially investing in MCP to bring AI deeper into their ecosystems.

If you're developing an AI application or exploring AI assistants for your team, start with MCP integrations. If there isn't an MCP server for your tools yet, the spec is open for you to create your own.

Next steps

To dive deeper or get hands-on:

- Learn how to set up the Decodo MCP server with this step-by-step guide, then connect it from Cursor, VS Code, or Claude Desktop to try AI-driven web scraping in your own environment.

- Check the awesome mcp servers directory and the official modelcontextprotocol/servers repository to track new servers and reference implementations.

- Explore real-world patterns with our AI use cases across industries, and read about how AI systems gather and process data to understand transparency.

- Use our comparative guide to AI data-collection tools to choose a data stack that pairs well with your MCP setup.

About the author

Mykolas Juodis

Head of Marketing

Mykolas is a seasoned digital marketing professional with over a decade of experience, currently leading the Marketing department in the web data gathering industry. His extensive background in digital marketing, combined with his deep understanding of proxies and web scraping technologies, allows him to bridge the gap between technical solutions and practical business applications.

Connect with Mykolas via LinkedIn.

All information on Decodo Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.