How to Train a GPT Model: Methods, Tools, and Practical Steps

GPT models are everywhere (OpenAI says more than 92% of Fortune 500 companies use its products), but generic ChatGPT is amazing at everything and perfect at nothing. When you need domain-specific accuracy, cost control, or data privacy that vanilla models can't deliver, training your own becomes essential. This guide covers the practical methods, tools, and step-by-step process to train a GPT model that understands your specific use case.

Zilvinas Tamulis

Last updated: Oct 03, 2025

9 min read

What is a GPT model?

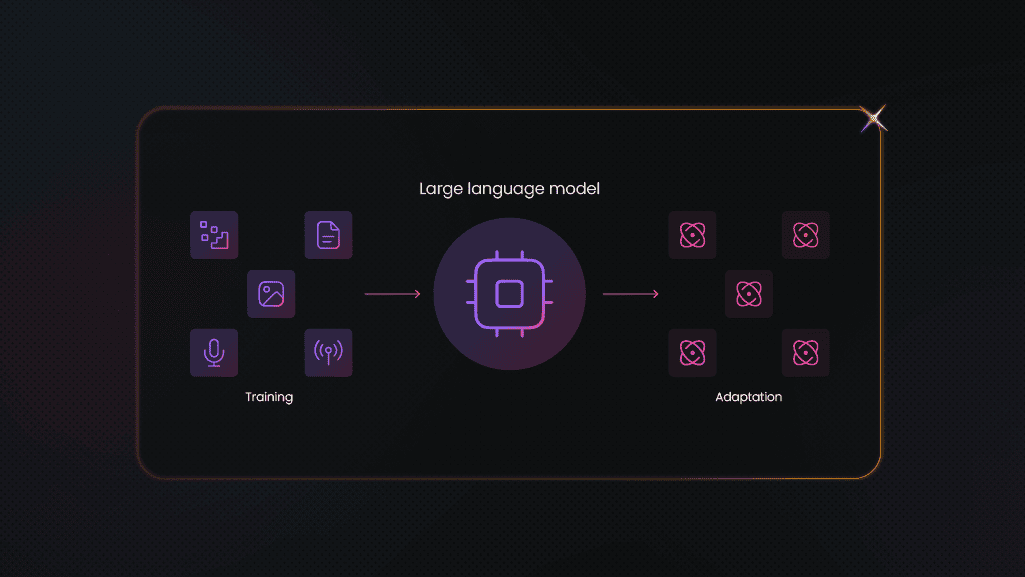

A GPT (Generative Pre-trained Transformer) is an AI model that predicts and generates human-like text. Think of it as a sophisticated autocomplete system trained on vast amounts of text, much of it from the web. It can write emails, answer questions, generate code, and handle countless other text-based tasks.

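GPT models use a transformer architecture to understand context and relationships between words, and they are trained to predict the next token (a word or word fragment) in a sequence. Generating text is that prediction repeated: predict a token, append it, and predict again. This simple concept enables remarkably complex behavior like reasoning, creativity, and problem-solving through text.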
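To make the predict-append-repeat loop concrete, here is a toy sketch. It swaps the transformer for simple word-pair (bigram) counts, so it illustrates only the generation loop, not how a real GPT learns. The function names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count which word follows which: a toy stand-in for a trained network."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def generate(counts: dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedy autoregressive loop: predict the next word, append it, repeat."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation, so stop early
        next_word, _count = followers.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)

corpus = [
    "the model predicts the next word",
    "the model learns from text",
    "the next word depends on context",
]
model = train_bigram(corpus)
print(generate(model, "the next"))  # → the next word depends on context
```

A real GPT runs the same loop over tokens. At each step, however, it picks the next token with a neural network that sees the whole preceding context rather than just the last word. It also usually samples from the predicted distribution instead of always taking the top choice.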

- Pre-trained GPT models come ready to use straight from companies like OpenAI. They have broad knowledge but lack specific expertise in your domain. These models work well for general tasks but struggle with specialized requirements.

- Fine-tuned GPT models take a pre-trained model and train it further on specific data. This process teaches the model your particular use case, terminology, and preferences. Fine-tuning requires a curated dataset of high-quality examples and more compute (or hosted-training fees) than the other options, but it delivers superior domain-specific performance.

- Custom GPT chatbots use techniques like retrieval-augmented generation (RAG) without expensive fine-tuning. They combine a base model with your specific knowledge base or documents. This approach offers customization without the complexity and cost of complete model training.

Why train or customize a GPT model?

Out-of-the-box GPT models are brilliant but frustratingly generic when it comes to your specific business needs. When you ask ChatGPT about internal systems or proprietary data, you get educated guesses rather than precise answers. These models can't access your documentation, customer histories, or specialized knowledge that wasn't part of their training. The result is AI that sounds confident but consistently misses the critical details that matter in real scenarios.

The most significant advantage of customization comes through dramatically improved accuracy and consistency within your domain. Instead of generic responses that sound plausible but lack substance, trained models deliver precise answers that follow your requirements. They understand industry nuances, follow your guidelines, and handle edge cases that trip up standard models. This reliability builds genuine trust with users and cuts down on the constant second-guessing of AI-provided answers.

Customer support and specialized industries see the most dramatic improvements from custom GPT training. Training on help documentation and support tickets creates AI that provides accurate responses while freeing human agents for more complex issues. Legal firms get models that understand case law, and medical practices get AI trained on treatment protocols. The more specialized your field, the greater the accuracy gains, provided the GPT is given enough context.

Approaches to training or customizing GPT

You don't need a PhD in machine learning to customize GPT models for your specific needs. Modern approaches range from simple prompt tweaking to full model retraining, each with distinct advantages and complexity levels. Let's look at a few approaches to see which fits your requirements the best.

Fine-tuning

Fine-tuning takes a pre-trained GPT model and continues training it on your specific dataset to specialize its behavior. This approach works best when you have a sizable, high-quality set of examples and need consistent performance on specialized tasks. The process involves preparing your training data, configuring hyperparameters, and running the training job, either on your own GPU hardware or through a hosted service such as OpenAI's fine-tuning API. Fine-tuning provides the highest accuracy for domain-specific applications but requires significant technical expertise and computational resources.
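As a sketch of the data-preparation step, the snippet below converts question-answer pairs into JSON Lines. It uses the chat format that OpenAI's fine-tuning endpoint accepts: one `{"messages": [...]}` object per line. The system prompt, the sample pairs and the helper names are illustrative assumptions. Other providers expect different formats, so check their documentation.

```python
import json

def to_chat_example(question: str, answer: str, system_prompt: str) -> dict:
    """Wrap one Q&A pair as a system/user/assistant conversation."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl_lines(pairs, system_prompt: str) -> list[str]:
    """Serialize Q&A pairs to JSONL lines, skipping pairs with an empty side."""
    lines = []
    for question, answer in pairs:
        question, answer = question.strip(), answer.strip()
        if not question or not answer:
            continue  # incomplete examples teach the model nothing useful
        example = to_chat_example(question, answer, system_prompt)
        lines.append(json.dumps(example, ensure_ascii=False))
    return lines

def write_jsonl(lines: list[str], path: str) -> None:
    """Write one JSON object per line, as the JSONL format requires."""
    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")

# Hypothetical support-desk examples
pairs = [
    ("How long do refunds take?", "Refunds are issued within 14 days of receiving your return."),
    ("Can I change my delivery address?", "Yes, until the order ships. Go to Orders > Edit address."),
    ("", "An answer without a question is skipped."),
]
lines = to_jsonl_lines(pairs, "You are ExampleCo's helpful support assistant.")
print(len(lines))  # → 2
print(lines[0])
```

Calling `write_jsonl(lines, "train.jsonl")` produces the file you would upload. OpenAI's documentation requires at least 10 examples and suggests starting with 50 to 100.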

Retrieval-Augmented Generation (RAG)

RAG combines a base GPT model with a searchable knowledge base, allowing the AI to retrieve information before generating responses. Unlike fine-tuning, RAG doesn't modify the underlying model but instead feeds it contextual information from your documents in real time. This approach excels when your knowledge base has frequent updates or when you need the AI to cite specific sources. RAG offers a more straightforward implementation than fine-tuning while maintaining accuracy for knowledge-intensive tasks.
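The retrieve-then-generate flow can be sketched without any external services. Production RAG systems usually rank passages with an embedding model and a vector database. This sketch swaps in bag-of-words cosine similarity so that it runs on the standard library alone. The documents and function names are hypothetical, and you would send the resulting prompt to whichever GPT model you use.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents that share vocabulary with the query, best first."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_rag_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Put the retrieved passages in front of the question so the model can cite them."""
    passages = retrieve(query, documents, top_k)
    sources = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them by number. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )

knowledge_base = [
    "Refunds are issued within 14 days of receiving a return.",
    "Our support team is available on weekdays from 9 to 5.",
    "Shipping is free for orders over 50 euros.",
]
print(build_rag_prompt("How many days until refunds are issued?", knowledge_base))
```

The base model never changes here. Updating the knowledge base only means editing `knowledge_base` (or re-indexing your documents), which is why RAG suits frequently changing content.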

Custom GPTs and No-code platforms

Platforms like ChatGPT, CustomGPT, Chatbase, and Botpress let you create specialized AI assistants without writing any code. These tools handle the technical complexity while you focus on uploading documents, setting instructions, and configuring behavior through user-friendly interfaces. They're perfect for non-technical users, rapid prototyping, and small to medium-scale deployments. Most platforms offer pre-built integrations and deployment options that get you from concept to working chatbot in hours.

Prompt engineering and N-shot prompting

Advanced prompting techniques can dramatically improve GPT outputs without any model training or complex infrastructure. Few-shot prompting provides examples within your prompt to guide the model's responses, while careful prompt engineering shapes the AI's behavior through strategic instruction formatting. These methods work immediately, cost nothing beyond API usage, and allow rapid experimentation with different approaches. For many tasks, skilled prompt engineering gets you much of the benefit of fine-tuning at a small fraction of the complexity and cost.
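Few-shot prompting is mostly a matter of how you assemble the request. Here is a minimal sketch, assuming a chat-style API that takes a list of role/content messages (the format OpenAI's Chat Completions API uses). Each example becomes a user turn followed by the ideal assistant reply, and the real query comes last. The ticket categories and the helper name are made up for illustration.

```python
def build_few_shot_messages(instruction: str, examples: list[tuple[str, str]], query: str) -> list[dict]:
    """Assemble a chat prompt: instruction, worked examples, then the real query."""
    messages = [{"role": "system", "content": instruction}]
    for user_text, ideal_reply in examples:
        # Each example is shown as a completed exchange the model should imitate
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": ideal_reply})
    messages.append({"role": "user", "content": query})
    return messages

messages = build_few_shot_messages(
    instruction="Classify each support ticket as BILLING, SHIPPING, or OTHER. Reply with the label only.",
    examples=[
        ("I was charged twice this month.", "BILLING"),
        ("My parcel has been stuck in transit for a week.", "SHIPPING"),
    ],
    query="Why is my invoice higher than last month?",
)
for m in messages:
    print(f"{m['role']:>9}: {m['content']}")
```

Swapping the examples is the experiment. Because nothing is trained, you can compare prompt variants in minutes.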

Step-by-step guide: how to train or customize a GPT

The path from generic ChatGPT to a specialized AI assistant doesn't have to be overwhelming. Success comes from systematic planning, careful data preparation, and choosing the right approach for your specific needs. Let's look at how you can build something genuinely useful for yourself or your organization.

Step 1: Define your goals and use case

Start by clearly defining what you want your GPT to accomplish and who will be using it daily. Are you building a customer support assistant that answers product questions, or do you need an internal tool that summarizes lengthy reports? Write down specific scenarios where your custom GPT will provide value, including the types of questions it should handle expertly.

Consider success metrics like response accuracy, time savings, or user satisfaction scores that will help you measure effectiveness. The clearer your vision, the better you can design your training approach and evaluate whether your customized model actually solves real problems.

Step 2: Gather and prepare your data

Next, select diverse, high-quality data sources that represent your specific domain and use cases:

- Text files and documents. Internal manuals, policies, and procedures provide structured knowledge that teaches your GPT organizational standards and processes.

- FAQs and knowledge bases. Question-answer pairs offer perfect training examples that show your GPT how to respond to common inquiries.

- Website content. Blog posts, product pages, and marketing materials help your GPT understand your brand voice and public-facing information.

- Chat logs and support tickets. Historical customer interactions reveal real-world language patterns and problem-solving approaches your users expect.

- Email templates and communications. Business correspondence examples teach your GPT appropriate tone, formatting, and communication styles for different contexts.

- Training materials and presentations. Educational content helps your GPT explain complex concepts in ways your audience can understand.

Data cleaning and formatting tips

- Remove duplicates and redundant content to prevent your model from memorizing repeated information that could lead to generic or repetitive responses.

- Standardize formatting by converting all documents to plain text, stripping leftover markup and encoding artifacts, and ensuring a uniform structure across your dataset.

- Filter out irrelevant information like navigation menus, headers, footers, and metadata that don't contribute meaningful knowledge to your use case.

- Organize content hierarchically by grouping related topics and creating clear categories that help your model understand relationships between concepts.
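The cleaning tips above translate into a short preprocessing pass. This sketch uses only Python's standard library:

- `html.parser` drops navigation, header and footer elements.
- Unicode normalization plus whitespace collapsing standardizes the text.
- Exact-match deduplication removes repeats.

The tag list and helper names are assumptions. Near-duplicate detection (for example MinHash) and PDF extraction would need additional libraries.

```python
import re
import unicodedata
from html.parser import HTMLParser

# Page furniture that rarely carries domain knowledge (an assumption; tune per site)
SKIP_TAGS = {"nav", "header", "footer", "aside", "script", "style"}

class TextExtractor(HTMLParser):
    """Collect visible text while ignoring anything inside SKIP_TAGS."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.parts.append(data.strip())

def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def normalize(text: str) -> str:
    """Unify Unicode forms (e.g. non-breaking spaces) and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()

def deduplicate(texts: list[str]) -> list[str]:
    """Drop exact duplicates after normalization, keeping first occurrences in order."""
    seen: set[str] = set()
    unique = []
    for text in texts:
        clean = normalize(text)
        key = clean.lower()
        if clean and key not in seen:
            seen.add(key)
            unique.append(clean)
    return unique

page = "<body><nav>Home | Pricing</nav><h1>Returns</h1><p>Refunds take 14 days.</p><footer>© 2025 ExampleCo</footer></body>"
print(html_to_text(page))  # → Returns Refunds take 14 days.
print(deduplicate(["Refunds take 14 days.", "refunds  take 14 days.", "Shipping is free."]))
```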

When gathering public online data for training, you'll often need web scraping to collect comprehensive datasets from multiple sources efficiently. Reliable proxies become essential for bypassing CAPTCHAs, avoiding IP blocks, and maintaining consistent access to target websites. Decodo's Web Scraping API simplifies this process by handling proxy rotation, CAPTCHA solving, and rate limiting automatically, letting you focus on data quality rather than technical obstacles.
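Under the hood, proxy rotation works roughly like this. You send a request through one proxy. On a network error you wait, with the pause doubling after each failure, and retry through the next proxy. Managed services automate this for you.

This is a minimal standard-library sketch, not Decodo's API. The proxy addresses are placeholders, and the `fetch` parameter exists so the retry logic can be exercised without network access. It does not solve CAPTCHAs, which is one reason managed scraping APIs exist.

```python
import itertools
import time
import urllib.request
from typing import Callable, Optional, Sequence

# A fetcher takes (url, proxy_url) and returns the response body as text
Fetcher = Callable[[str, str], str]

def urllib_fetch(url: str, proxy_url: str) -> str:
    """Fetch one URL through one HTTP(S) proxy using only the standard library."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")

def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Fetcher = urllib_fetch,
    max_attempts: int = 3,
    base_delay: float = 1.0,
) -> str:
    """Try proxies round-robin, sleeping base_delay * 2**attempt between failures."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxies)
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except OSError as exc:  # URLError, HTTPError, and timeouts all subclass OSError
            last_error = exc
            if attempt < max_attempts - 1:
                time.sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error

# Placeholder proxy endpoints; substitute your provider's hosts and credentials
PROXIES = ["http://user:pass@proxy-a.example:8080", "http://user:pass@proxy-b.example:8080"]
# html = fetch_with_rotation("https://example.com/help", PROXIES)
```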

Step 3: Choose your training/customization method

When working with GPTs, you have three main paths to tailor the model. Each approach has its sweet spot:

| Method | Best for | Pros | Cons |
|---|---|---|---|
| Fine-tuning | Consistent brand voice, structured outputs, and narrow tasks | Highly tailored, predictable responses | Costly, retraining needed for updates, less flexible |
| RAG | Up-to-date knowledge, large and changing datasets | Always current, cheaper than fine-tuning, scalable | Requires infrastructure setup; retrieval quality impacts results |
| No-code GPTs | Prototypes, internal assistants, non-technical teams | Fast to deploy, no coding, easy iteration | Limited depth, less control, tied to the vendor's platform |

Your choice here depends on whether you want maximum control, fresh knowledge, or fast experimentation. Pick the path that matches your project’s complexity, then let GPT do the heavy lifting.

Step 4: Implement the training/customization

Tools and platforms overview

The variety of GPT customization tools offers options ranging from code-heavy APIs to entirely visual no-code platforms that anyone can use:

- OpenAI API. Direct access to GPT models with fine-tuning capabilities for developers comfortable with Python and technical implementations requiring maximum control.

- Chatbase. No-code platform that lets you upload documents and create custom chatbots trained on your data within minutes, perfect for quick deployments.

- Botpress. Visual bot builder with GPT integration offering advanced workflow automation, multi-channel deployment, and developer-friendly customization options.

- ChatGPT Custom GPTs. Built-in OpenAI solution requiring only a ChatGPT Plus subscription, ideal for personal use and rapid prototyping without infrastructure setup.

- Pickaxe. User-friendly platform designed for creators and small businesses to build AI tools with custom instructions and document uploads.
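With the OpenAI API, fine-tuning takes two HTTP calls. First, upload your JSONL file to the Files endpoint with `purpose` set to `fine-tune`. Then create a job that references the returned file ID.

The sketch below builds only the second request, using the standard library, so nothing is sent until you choose to send it. The file ID is a placeholder, and the model name is one example of a fine-tunable model, so check OpenAI's current fine-tuning documentation. The official `openai` Python SDK wraps the same endpoint as `client.fine_tuning.jobs.create(...)`.

```python
import json
import urllib.request
from typing import Optional

FINE_TUNING_JOBS_URL = "https://api.openai.com/v1/fine_tuning/jobs"

def fine_tuning_job_request(
    training_file_id: str,
    api_key: str,
    model: str = "gpt-4o-mini-2024-07-18",
    suffix: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request that starts a fine-tuning job."""
    body = {"training_file": training_file_id, "model": model}
    if suffix:
        body["suffix"] = suffix  # optional label added to the fine-tuned model's name
    return urllib.request.Request(
        FINE_TUNING_JOBS_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

# "file-abc123" is a placeholder for the ID returned by the file upload step
request = fine_tuning_job_request("file-abc123", api_key="sk-...", suffix="support-bot")
print(request.get_method(), request.full_url)
# To actually start the job (requires a real key and file ID):
# with urllib.request.urlopen(request) as response:
#     job = json.load(response)
#     print(job["id"], job["status"])
```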

Uploading data and configuring settings

The process will differ based on the platform you choose, but it will generally follow these 3 steps:

- Start by organizing your prepared data files according to your chosen platform's requirements, typically accepting formats like TXT, PDF, or CSV.

- Upload your documents through the platform's interface, ensuring files are correctly named and categorized for easy management and updates.

- Configure your GPT's personality by setting the tone, response style, and any behavioral guidelines that align with your brand or use case. Adjust technical parameters like temperature for creativity control, token limits for response length, and retrieval settings if using RAG approaches.
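On API-based setups, the personality and technical settings above usually end up as a system prompt plus a few request parameters. Here is a minimal sketch that keeps them in one validated config object and emits a request body shaped like OpenAI's Chat Completions API. The model name, `retrieval_top_k` and the ExampleCo prompt are assumptions. OpenAI has deprecated `max_tokens` in favor of `max_completion_tokens`, so confirm parameter names against the current API reference.

```python
import json
from dataclasses import dataclass
from typing import Sequence

@dataclass
class AssistantConfig:
    model: str = "gpt-4o-mini"              # assumption: any chat model you can access
    system_prompt: str = "You are ExampleCo's friendly, concise support assistant."
    temperature: float = 0.3                # lower = more predictable, higher = more creative
    max_completion_tokens: int = 400        # upper bound on reply length, in tokens
    retrieval_top_k: int = 3                # hypothetical RAG setting: passages to include

    def validate(self) -> None:
        if not 0.0 <= self.temperature <= 2.0:
            raise ValueError("temperature must be between 0 and 2")
        if self.max_completion_tokens <= 0:
            raise ValueError("max_completion_tokens must be positive")
        if self.retrieval_top_k < 0:
            raise ValueError("retrieval_top_k cannot be negative")

    def request_body(self, user_message: str, passages: Sequence[str] = ()) -> dict:
        """Build a Chat Completions-style request body from the settings."""
        self.validate()
        system = self.system_prompt
        selected = list(passages)[: self.retrieval_top_k]
        if selected:
            system += "\n\nReference passages:\n" + "\n".join(f"- {p}" for p in selected)
        return {
            "model": self.model,
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": user_message},
            ],
            "temperature": self.temperature,
            "max_completion_tokens": self.max_completion_tokens,
        }

config = AssistantConfig(temperature=0.2)
print(json.dumps(config.request_body("How do I reset my password?"), indent=2))
```

Keeping the settings in one object makes it easy to version them alongside your test results. You can then tell which configuration produced which answers.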

Step 5: Test and evaluate your model

Begin testing with a diverse set of questions that represent real scenarios your users will encounter in actual usage. Compare the GPT's responses against your source documents to verify factual accuracy and ensure it's not hallucinating information. Ask edge case questions and intentionally tricky queries to identify gaps in knowledge or areas where the model provides unhelpful responses. Monitor key metrics, including response accuracy rates, user satisfaction levels, and how often the model admits uncertainty rather than giving wrong information.

Use your test results to identify specific weaknesses in your model's performance, then address them systematically through targeted adjustments. Add missing information to your knowledge base when you discover gaps, and refine your prompts or instructions to fix consistent errors. Re-upload updated documentation, adjust configuration settings, and test the same problematic queries to verify if improvements actually work.

This cycle of testing, identifying issues, making changes, and retesting continues until your GPT meets your quality standards consistently. Remember that customization is an ongoing process as your business evolves and new edge cases emerge.
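A small, repeatable test set makes this loop much less tedious. The sketch below runs a list of questions through any function that returns the model's reply. It checks answerable questions for required facts, checks unanswerable ones for an explicit admission of uncertainty, and then reports accuracy and abstention rate. Keyword matching is a crude stand-in for human or model-graded review. `fake_model`, the test cases and the uncertainty phrases are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

# Phrases treated as the model admitting it doesn't know (an assumption; tune to your prompt)
UNCERTAIN_MARKERS = ("i don't know", "i'm not sure", "not in the provided", "cannot find")

@dataclass
class EvalCase:
    question: str
    must_include: tuple[str, ...] = ()  # facts a correct answer has to mention
    should_abstain: bool = False        # True when your sources cannot answer it

def evaluate(ask: Callable[[str], str], cases: list[EvalCase]) -> dict:
    """Run every case through `ask` and summarize accuracy and abstention rate."""
    passed = abstained = 0
    failures = []
    for case in cases:
        reply = ask(case.question).lower()
        uncertain = any(marker in reply for marker in UNCERTAIN_MARKERS)
        if uncertain:
            abstained += 1
        if case.should_abstain:
            ok = uncertain
        else:
            ok = all(term.lower() in reply for term in case.must_include)
        if ok:
            passed += 1
        else:
            failures.append(case.question)
    total = len(cases) or 1  # avoid dividing by zero on an empty suite
    return {"accuracy": passed / total, "abstention_rate": abstained / total, "failures": failures}

def fake_model(question: str) -> str:
    """Stand-in for your real model call."""
    if "refund" in question.lower():
        return "Refunds are issued within 14 days."
    return "I'm not sure; that isn't in the provided documents."

cases = [
    EvalCase("How long do refunds take?", must_include=("14 days",)),
    EvalCase("What is the CEO's shoe size?", should_abstain=True),
    EvalCase("Do you ship to Canada?", must_include=("Canada",)),
]
print(evaluate(fake_model, cases))
```

Re-running the same suite after every change to documents, prompts or settings shows whether a fix helped without breaking something else.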

Step 6: Deploy and integrate

You've built something useful. Now comes the fun part: letting people actually break it in production. Here are a few options:

- API integration. Embed your custom GPT directly into existing applications and workflows, giving developers programmatic access to incorporate AI capabilities wherever they're needed most.

- Website chatbot widget. Add an interactive chat interface to your site that provides instant assistance to visitors, reducing support burden while improving user experience and engagement.

- Dedicated chatbot interface. Create a standalone chat application accessible via web or mobile apps, perfect for internal tools or customer-facing services.

- Slack/Teams integration. Deploy your GPT as a bot within team collaboration platforms where employees already spend their time, making AI assistance seamlessly available during daily workflows.

- Email automation. Connect your model to email systems to automatically draft responses, categorize incoming messages, or provide suggested replies that maintain consistent communication quality.
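Most of these channels end up calling your model through an HTTP endpoint. As a minimal sketch, here is a JSON `/chat` endpoint written against Python's standard WSGI interface (PEP 3333). A website widget, a Slack or Teams bot or an email workflow could call it. `answer()` is a hypothetical placeholder for your actual model call, and a production service would add authentication, rate limiting and logging.

```python
import json

def answer(question: str) -> str:
    """Hypothetical placeholder: call your custom GPT (API, RAG pipeline, etc.) here."""
    return f"You asked: {question}"

def handle_chat(raw_body: bytes) -> tuple[str, dict]:
    """Validate the JSON body and produce (HTTP status, JSON payload)."""
    try:
        payload = json.loads(raw_body or b"{}")
        question = str(payload["question"]).strip()
    except (ValueError, KeyError, TypeError):
        return "400 Bad Request", {"error": "expected a JSON body with a 'question' field"}
    if not question:
        return "400 Bad Request", {"error": "question must not be empty"}
    return "200 OK", {"answer": answer(question)}

def app(environ, start_response):
    """Minimal WSGI app exposing POST /chat."""
    if environ.get("PATH_INFO") != "/chat" or environ.get("REQUEST_METHOD") != "POST":
        status, payload = "404 Not Found", {"error": "not found"}
    else:
        try:
            length = int(environ.get("CONTENT_LENGTH") or 0)
        except ValueError:
            length = 0
        status, payload = handle_chat(environ["wsgi.input"].read(length))
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]
```

To try it locally, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves the app. A POST of `{"question": "..."}` to `/chat` then returns `{"answer": "..."}`.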

Challenges and considerations

Training and deploying custom GPT models come with real obstacles that can derail your project if you don't plan for them upfront. Here are a few of them:

- Data privacy and security. Your training data may contain sensitive customer information, proprietary business knowledge, or regulated data that could be exposed through model responses or stored insecurely. Use enterprise API plans with data processing agreements, implement on-premises deployments for sensitive data, or choose platforms that guarantee they won't train on your data.

- High resource requirements. Fine-tuning models demands expensive GPU compute time, substantial storage for datasets, and ongoing API costs that can quickly exceed budgets. Start with no-code platforms or RAG approaches that minimize costs, leverage cloud services for scalable compute, or use smaller model variants that balance performance with budget constraints.

- Limited technical skills. Implementing custom GPT solutions often requires Python expertise, machine learning knowledge, and infrastructure management capabilities your team may lack. Choose user-friendly platforms like Chatbase or Custom GPTs that require zero coding, invest in team training, or partner with AI consultants for initial implementation and knowledge transfer.

- Context window limitations. Models can only process a fixed amount of text at once, causing issues when users need information from lengthy documents or multiple sources. Implement chunking strategies to break large documents into smaller pieces, use RAG to retrieve only relevant sections, or upgrade to models with extended context capabilities like Claude.

- Poor brand integration. Generic AI interfaces don't reflect your visual identity or communication style, creating disconnected user experiences that feel off-brand. Customize system prompts to match your voice and style, use platforms offering white-label options, or build custom interfaces that align with your existing design systems.

- User access barriers. Requiring separate accounts or learning new platforms creates friction that prevents adoption and limits your AI's actual impact. Deploy through familiar channels like email and Slack, create simple authentication flows, or embed chatbots directly into websites where users already engage with your brand.

- Bias and data quality issues. Training data may contain prejudiced viewpoints, outdated information, or factual errors that your model will confidently reproduce in responses. Audit training data for problematic patterns, implement human review processes for critical responses, and continuously monitor outputs to catch and correct biased or inaccurate information.
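Two of these mitigations are easy to prototype:

- Masking obvious personal data before documents leave your systems.
- Splitting long documents into overlapping chunks that fit a context window.

This is a rough sketch under simplifying assumptions. The regexes catch only email addresses and phone-like digit runs, so they miss names and may over-match things like dates. Chunks are measured in words rather than tokens, so use a real tokenizer such as `tiktoken` when you need exact limits.

```python
import re

# Deliberately simple patterns; real PII detection needs far more than regexes
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)

def chunk_words(text: str, max_words: int = 200, overlap: int = 40) -> list[str]:
    """Split text into windows of max_words words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks

print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
# → Contact [EMAIL] or [PHONE].
doc = " ".join(f"w{i}" for i in range(10))
print(chunk_words(doc, max_words=4, overlap=1))
# → ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one chunk. This helps RAG retrieval find it.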

Addressing these challenges early in your planning process saves countless headaches and budget overruns down the road.

Real-world use cases

Custom GPT models are delivering measurable results across industries, from energy companies handling millions of customer inquiries to healthcare providers speeding up cancer screening. These examples show how targeted training transforms generic AI into specialized tools that solve real problems:

Customer support: Octopus Energy's AI transforms service delivery

Octopus Energy developed "Magic Ink", a custom GPT tool trained on customer histories, product specifications, and conversational data from their platform serving 54 million accounts. The system adapts to individual support agents' writing tones and uses verification checks to guard against AI hallucinations. Magic Ink now handles 44% of total customer inquiries with a 70% satisfaction rating, effectively replacing approximately 250 support staff positions. Around one-third of AI-generated messages require zero to minimal changes, a strong sign that domain-specific training can dramatically outperform generic chatbots.

Source: TechUK Case Study

Healthcare: Color Health accelerates cancer screening with custom GPT

Color Health partnered with OpenAI to build a HIPAA-compliant cancer screening copilot using GPT-4o trained on screening protocols and diagnostic pathways. The system integrates with patient medical data, family history, and clinical guidelines to support physicians in cancer care workflows. Providers using the copilot identify 4 times more missing labs, imaging, or biopsy results than those without assistance. Patient record analysis time dropped from hours or weeks to an average of 5 minutes, with the system projected to support over 200,000 cases by 2024.

Source: OpenAI – Color Health

eCommerce: Stitch Fix scales personalized content with fine-tuned models

Stitch Fix fine-tuned GPT-3 models specifically for ad headlines and product descriptions, training on hundreds of high-quality copywriter examples and client feedback data. Their "expert-in-the-loop" approach lets AI generate content while humans review and refine outputs for advertising campaigns. The custom model achieved a 77% pass rate for AI-generated ad headlines requiring minimal review and generated 10,000 product descriptions in 30 minutes. This AI-driven personalization contributed to a 40% increase in average order value and $150 million in annual operational savings.

Source: Stitch Fix Technology Blog

Internal knowledge bases: McKinsey's Lilli unlocks institutional knowledge

McKinsey & Company developed "Lilli", a proprietary AI platform accessing their hundred-year body of knowledge through custom fine-tuning and advanced search. The system scans 40+ curated sources, including 100,000+ documents and interview transcripts, using McKinsey-specific intent recognition beyond simple retrieval. 72% of McKinsey employees actively use the platform, generating 500,000+ prompts monthly with up to 30% time savings in knowledge synthesis. Document tagging accuracy improved from 50% to 79.8% while reducing processing time from 20 seconds to 3.6 seconds per document.

Source: McKinsey – Meet Lilli

Personal assistants: Student entrepreneur builds million-dollar educational tool

Leon Niederberger, a student at IE Business School Madrid, created AI Ace, a custom educational tutor built with CustomGPT.ai. The system was trained specifically on macroeconomics textbooks and course materials, with a student-friendly persona that provides citations to professorial resources. Within just 3 days, AI Ace answered 1,750 questions from 300+ students, sparing them from researching course content manually. The project went on to be named IE University's Best Undergraduate Start-Up and reached a $1.2 million valuation by early 2024.

Source: CustomGPT Case Study

Final thoughts

Training your own GPT model doesn't require a machine learning PhD or massive budgets anymore. From simple prompt engineering to full fine-tuning, the right approach depends on your specific use case, available data, and technical resources. The key is starting with clear goals, gathering quality training data, and choosing methods that match your team's capabilities and timeline.

For efficient data collection, Decodo's Web Scraping API handles the technical obstacles so you can focus on gathering quality training datasets and iterating based on real-world feedback.

About the author

Zilvinas Tamulis

Technical Copywriter

A technical writer with over 4 years of experience, Žilvinas blends his studies in Multimedia & Computer Design with practical expertise in creating user manuals, guides, and technical documentation. His work includes developing web projects used by hundreds daily, drawing from hands-on experience with JavaScript, PHP, and Python.

All information on Decodo Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.