Is Web Scraping Legal? Guide to Laws, Cases & Compliance

Web scraping extracts data from websites using automated tools. It's become a standard practice for businesses gathering competitive intelligence, training AI models, and building data-driven products. But the big question remains – is web scraping legal? The answer depends on what you scrape, how you scrape it, where the data comes from, and what you do with it next.

Benediktas Kazlauskas

Last updated: Oct 15, 2025

5 min read

What is web scraping?

Web scraping is the automated process of extracting data from websites using automated apps or scripts. Rather than manually copying and pasting data from each web page, scraping tools automatically access a website’s content through its underlying code, typically HTML, and collect specific data according to set rules by the user. The extracted data is then structured into a usable format, such as CSV, JSON, or a database, making it easier to analyze or integrate into other systems.

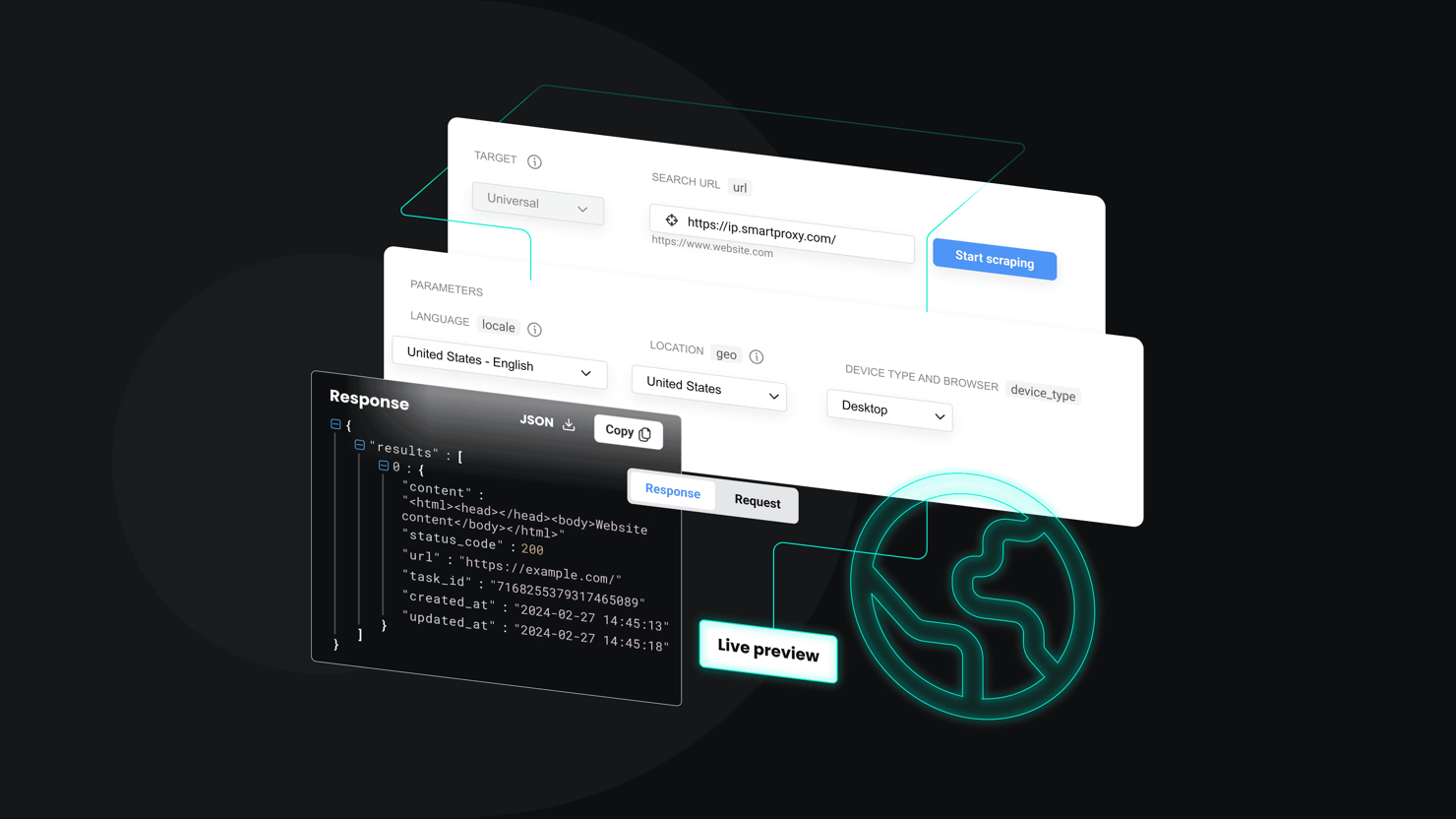

At a technical level, web scraping works by sending HTTP requests to a target website, just as a browser does when a user visits a page. The server responds with the page’s HTML source, which the scraper parses to identify and extract relevant elements like text, images, product listings, prices, or links. Advanced scrapers, like Decodo’s Web Scraping API, may also help users handle dynamic websites with built-in capabilities. In cases that require simulating full browser activity, tools such as Selenium, Playwright, or Puppeteer are commonly used. Some frameworks can even schedule automated crawls, manage cookies and sessions, and handle retries or error recovery.

Legitimate use cases of web scraping

Web scraping is a powerful and widely used technique across industries. Businesses, researchers, and developers rely on it for a variety of cases, including:

- Market and competitor analysis, like tracking prices, reviews, and trends, to improve business decisions.

- Data aggregation, compiling product listings, financial data, or real estate information from multiple sources.

- Lead generation and business intelligence, like collecting publicly available company details, contact information, or service listings.

- Academic and policy research for gathering data for studies in economics, social sciences, or digital culture.

- AI and machine learning, building training datasets from publicly available information to improve algorithms and models.

How web scraping is different from similar activities

It’s important to differentiate web scraping from other data-related practices:

- Screen scraping captures what is visually displayed on a computer screen, often by reading pixel data or the graphical interface of an application. It’s typically used when direct access to the underlying data isn’t possible.

- Data mining involves analyzing large, pre-existing datasets to uncover trends, correlations, or insights. Unlike web scraping, data mining focuses on interpreting and finding meaning in data, not collecting it.

- Web crawling, often used interchangeably with scraping, is more about systematically discovering and indexing web pages (as search engines do), whereas scraping targets specific information on those pages.

In essence, web scraping bridges the gap between the unstructured information available on the web and the structured data formats needed for analysis and automation. When used responsibly, it can be a valuable tool for innovation, research, and decision-making across modern industries and research fields.

Common misconceptions about the legality of web scraping

Web scraping often sits in a legal and ethical gray area, leading to widespread misunderstanding about what is and isn’t allowed. While the technology itself is simply a tool for automating data collection, how and where it’s used can determine whether it’s considered acceptable, questionable, or outright unlawful. Misconceptions arise because there are no web-scraping-specific laws. This practice is governed by various pre-existing regulations, whose interpretation isn’t always consistent across jurisdictions and court cases.

Myth #1: All web scraping is illegal

There's no blanket law banning web scraping. Scraping public product listings for price comparison or collecting data for market research is perfectly fine, provided you adhere to applicable regulations such as privacy and intellectual property laws. However, scraping user passwords from a hacked database may cross the line and be illegal.

Myth #2: Public data means free data

Just because information appears publicly doesn't mean you can use it however you want. For example, public social media profiles still contain personal data protected by GDPR or other privacy laws. Depending on the data scraped, it may also be subject to other laws like intellectual property regulations. So, when collecting publicly available data, make sure to comply with the laws that apply to your activities.

Myth #3: If it's for personal use, it's always legal

Even personal scraping projects can violate applicable laws or breach third-party rights. For example, scraping hundreds of thousands of pages from a site in a short period of time for your personal archive might still breach that site's technical limitations.

The legal status of web scraping: a global perspective

Whether it's legal doesn't really depend on the scraping tech itself. It's more about what data you're grabbing, how you're doing it, and what privacy, copyright, and other laws apply. Even something that's perfectly fine in one country might violate a local law in another. Governments and courts everywhere are still figuring out where to draw the line between innovation, fair access to information, and protecting people's data and digital property.

Below is an overview of how several major countries approach web scraping and related data collection practices.

The United States

The U.S. has no single law explicitly addressing web scraping. Instead, several statutes collectively define its legal boundaries. While this is not an exhaustive list, here are some laws relevant to the U.S.:

- Computer Fraud and Abuse Act (CFAA). The CFAA, enacted in 1986, was designed to combat unauthorized computer access. Courts have gradually clarified their relevance to web scraping. The key distinction lies between unauthorized access to protected systems (such as bypassing passwords or encryption) and accessing publicly available data, which doesn’t typically violate the CFAA. In essence, if no digital barriers exist, such as login requirements or authentication gates, scraping publicly accessible web pages is unlikely to constitute unauthorized access.

- Copyright Laws. While the privacy laws are very relevant in other countries, U.S. ones distinguish between facts, which aren’t protected, and creative expression, which is. Scraping only factual data points such as prices, weather statistics, or product details is generally allowed. However, reproducing substantial portions of written articles, images, or other creative works without permission may constitute copyright infringement. The fair use policy may protect certain limited or transformative uses, such as academic research, commentary, or data analysis, but this defense is context-dependent and not guaranteed.

- Contracts and Terms of Service. Websites often include Terms of Service that restrict or prohibit automated data collection. Courts differentiate between clickwrap agreements (where users explicitly agree to terms) and browsewrap agreements (where terms are merely posted on the site). The former are typically enforceable, while the latter may not be, especially if users aren’t clearly notified. Contracts and Terms of Service also exist in other countries.

European Union

The EU provides one of the world’s most comprehensive legal frameworks governing data protection and content usage.

- General Data Protection Regulation (GDPR). Under the GDPR, personal data, any information that can identify an individual, including names, IPs, or behavioral patterns, can only be collected and processed under a lawful basis such as consent, legitimate interest, or contractual necessity. Public visibility doesn’t remove GDPR protections. Organizations engaging in web scraping need to minimize the personal data they collect, provide transparency about processing activities, and honor data subject rights (such as deletion or access).

- Copyright and Database Protection. Alongside other copyright-related laws and regulations, the EU Database Directive protects databases that require significant investment to compile, even when the underlying facts aren’t creative. Unauthorized extraction of substantial parts of such databases can infringe these rights.

- Consent requirements. The GDPR provides different legal bases for data collection, such as contract, legitimate interest, and consent. When it comes to consent, GDPR mandates explicit, informed, and freely given consent for data collection. Pre-ticked boxes, vague disclosures, or implied consent are insufficient. Users must also be able to withdraw consent as easily as they grant it, reinforcing individual control over personal data.

Other countries

Beyond the United States and the European Union, many other nations have introduced legislation to regulate how organizations collect, use, and share data, including through web scraping. While approaches differ, most frameworks emphasize the importance of having a valid legal basis for data collection and processing - such as consent, contractual necessity, or legitimate interest - along with transparency and accountability. Consent, in this context, means that individuals must be given clear information and a genuine choice, allowing them to freely agree (or refuse) to the use of their personal data.

- Canada’s Personal Information Protection and Electronic Documents Act (PIPEDA) regulates private-sector handling of personal data. Organizations must obtain meaningful consent, when consent is a suitable legal basis to collect the data, and use collected personal data only for legitimate, specified purposes. Even when personal data appears public, collecting it for unrelated uses, like marketing or profiling, can violate PIPEDA’s fairness principle.

- Following the United Kingdom's departure from the EU, the UK introduced a GDPR-aligned framework under the Data Protection Act. The Computer Misuse Act continues to restrict unauthorized computer access, while copyright laws protect creative works but permit limited text and data scraping for research purposes.

- India’s Digital Personal Data Protection Act (DPDP) introduces a consent-based model similar to the GDPR. The law establishes obligations for organizations to safeguard personal data, obtain explicit consent, and ensure fair processing. While the enforcement framework is still maturing, the DPDP signals a growing emphasis on individual data rights and cross-border accountability.

- Singapore’s Personal Data Protection Act (PDPA) requires organizations to secure consent before collecting, using, or disclosing personal data. Regulators actively monitor compliance, and enforcement actions have been taken against entities engaging in improper data collection.

Major legal risks and liabilities

Web scraping, while a powerful tool for gathering data, comes with its own set of legal risks every user should know about. From accepting and violating website terms, infringing copyright, mishandling personal data, to accessing systems that require authorization, understanding these risks will help you collect publicly available data without facing any consequences.

Violating Terms of Services

Many websites restrict automated access through Terms of Service agreements. These agreements can be enforceable depending on whether they are accepted before scraping the data in question. Browsewrap agreements, which simply display terms in a footer or sidebar, are often unenforceable because users don’t explicitly consent. In contrast, clickwrap agreements require users to actively click I agree, which courts are more likely to uphold as binding contracts.

For scrapers, the key question is whether the scraper has taken an action indicating acceptance. Simply visiting a page typically doesn’t create a binding contract, but logging in, clicking through a consent screen, or otherwise interacting with terms can form enforceable obligations. Smart scraping practices include reviewing the user flow and identifying any access restrictions or consent requirements before collecting data.

Copyright infringement

Scraping can also implicate copyright law if the content being collected is protected. Copyright safeguards original creative works such as text, images, databases, logos, and multimedia. While facts, ideas, and processes are not protected, the creative expression of those facts is.

In the United States, fair use provides some flexibility, particularly for transformative uses like aggregating, analyzing, or generating insights from scraped data. Republishing content verbatim or copying substantial portions of creative works without permission, however, can trigger serious consequences, including cease-and-desist notices, takedown requests, statutory damages, and injunctions. To mitigate risk, consider obtaining proper licensing, using official public APIs provided by the websites or platforms themselves, or limiting scraping to factual data.

Data privacy laws

Scraping personal information is not specifically regulated by any dedicated “data scraping” law anywhere in the world. In the United States, laws such as the California Consumer Privacy Act (CCPA) and other comprehensive state privacy laws - including the Colorado Privacy Act, Connecticut’s Act Concerning Personal Data Privacy and Online Monitoring, the Utah Consumer Privacy Act, and the Virginia Consumer Data Privacy Act - generally exclude publicly available information from the personal data regime. Public data obtained from openly accessible online sources or widely distributed media is therefore not considered personal under these laws.

In contrast, the EU’s General Data Protection Regulation (GDPR) does not distinguish between public and non-public information. Any data that can identify an individual is considered personal data, regardless of its source. The GDPR requires a valid legal basis for processing, adherence to key principles such as data minimization and purpose limitation, and transparency toward individuals about how their data is collected and used. GDPR also imposes restrictions on transferring personal data outside the EU and sets detailed rules on how such transfers can be lawfully conducted.

Computer fraud and abuse

Automated access can also raise issues under anti-hacking statutes like the Computer Fraud and Abuse Act (CFAA). While scraping publicly available websites is less likely to be covered by this Act, accessing password-protected areas, bypassing authentication, or circumventing technical safeguards constitutes unauthorized access and can carry criminal and civil penalties. If a scraper accidentally accesses protected systems, it’s critical to cease activity immediately, document the incident, and seek legal counsel before proceeding.

Other legal claims

Web scraping can sometimes lead to other types of legal trouble. There's "trespass to chattels," which is basically when your automated requests mess with or damage someone's servers. Then there's "unjust enrichment," where site owners argue you're making money off all the work and resources they put into building their site.

When website owners want to stop scrapers, they usually start with tech solutions – blocking IP addresses, throwing up CAPTCHAs, or limiting how fast you can make requests. If that doesn't work, they might send cease-and-desist letters, file takedown requests, or take you to court. Most of these situations get sorted out without going to trial, but it's still important to know the risks if you want to scrape responsibly.

Copyright and intellectual property issues

When you're scraping websites, you need to think about copyright and intellectual property laws. It's important to know what content is protected and what you can actually use legally. Copyright kicks in automatically for original creative stuff, and it covers way more than just written text. It applies to all kinds of digital content. The key is knowing the difference between basic factual data points, which anyone can use, and creative work, which is off-limits.

Protected online content

Copyright covers tons of digital content – written pieces, photos, videos, audio, logos, website code (HTML/CSS), and even organized collections of data. As soon as something's created and exists in a real form, posted online, saved in a database, or published in a digital report, it may be automatically protected. This protection may even include the creative way data is organized or put together, even if the actual information is just basic facts.

Facts vs creative expression

Plain facts aren't protected by copyright. For example, saying a sports team won a championship is fair game for anyone to share. But the creative way those facts are presented, like well-written articles, charts, or specially organized stats, that's protected. When scraping, stick to pulling out raw data instead of copying how someone wrote about it or formatted it.

Database protection laws

Some countries or regions have extra laws protecting databases. For example, in the EU, there's the Database Directive that gives special rights to people who put serious effort into gathering, checking, or organizing content. These rules protect against someone copying entire databases, so scraping big chunks without permission could break both copyright and database laws.

Fair use and data mining exceptions

Some laws provide exceptions that permit limited copying or use of protected content. In the United States, fair use allows copying for transformative purposes such as research, analytics, or indexing. The EU similarly allows text and data mining for research purposes, and under certain conditions, for commercial use, provided that content owners have not explicitly opted out. Transforming scraped data into new formats, aggregating it for analysis, or using it to generate insights typically falls within these permissible uses.

How to avoid copyright infringement

When scraping information online, the key is to focus on facts and data rather than creative content. This means you can collect numbers, statistics, dates, or other factual details, but avoid copying someone’s writing, artwork, photos, or videos. Once you have the data, transform it into something new, an original report, visualization, or analysis, rather than just republishing it as-is.

Whenever possible, use official public APIs, provided by the websites or platforms themselves, as they often provide a safe, legal way to access data. Also, respect the rules set by websites and honor any content owner opt-outs.

Security is another important consideration. Store scraped data safely and limit who can access it. Finally, keep a record of your sources so you can track where the information came from and show that you’ve used it responsibly.

Following these practices will help you get the data you need without running into copyright issues.

The impact of AI on web scraping legality

Web scraping has been a go-to method for collecting data from the internet for years. But now that we have powerful AI models like ChatGPT and Claude, people are asking some hard questions about whether scraping is legal and how that data should be used. These AI systems learn from massive amounts of data pulled from the web, which has sparked debates about copyright issues, fair use, and whether it's ethical to use people's online content this way.

How AI models use scraped data

AI models train on massive datasets often assembled through web scraping. Models learn patterns, language structures, and knowledge from this training data without storing the original content precisely.

The training process is complex and different from simply copying and pasting content. Large language AI models analyze billions of text examples to identify statistical patterns in how language works. They learn which words tend to appear together, how sentences are structured, and how to generate responses that make sense in context. The original texts aren't retained in their complete form. Instead, the model develops a compressed understanding represented by mathematical weights and parameters. This distinction is important to the legal debates because AI companies argue they're not reproducing copyrighted works but rather learning from them in a transformative way.

Critics counter that even if the content isn't stored word-for-word, the models can sometimes reproduce substantial portions of their training data, and they wouldn't exist without that copyrighted material in the first place.

Ongoing debates and lawsuits

Multiple high-profile lawsuits challenge whether scraping copyrighted content for AI training constitutes fair use. Authors, publishers, and artists argue that their works are being exploited without compensation. AI companies contend that training constitutes transformative fair use similar to search engines.

The legal battles are piling up fast. Major publishers like The New York Times have sued OpenAI and Microsoft, claiming their copyrighted articles were used without permission to train ChatGPT. Groups of authors, including household names, have filed class-action lawsuits arguing that AI companies scraped their books to build commercial products without paying licensing fees or even asking permission. Visual artists have brought similar cases about image-generating AI models.

On the other side, tech companies are making the case that training AI is no different from how search engines index the web or how humans learn by reading. They argue that requiring licenses for every piece of training data would make it practically impossible to develop AI systems.

Regulatory trends

The EU AI Act, which came into force in 2024, introduced requirements for AI model providers. The act demands transparency about training data, including disclosure of copyrighted materials used. The General-Purpose AI Code of Practice and US Copyright Office Guidance expected in 2025 should clarify requirements for using copyrighted data in AI training.

Countries around the globe are starting to step in with regulations. The EU is leading the charge with its AI Act, which takes a risk-based approach to regulating AI systems. Under this framework, companies developing powerful general-purpose AI models have to be transparent about what data they used for training, including any copyrighted content. This means AI developers can't just sweep the question of data sources under the rug. They need to document and disclose it.

Meanwhile, in the US, the Copyright Office has been studying the issue and is expected to release guidance that could shape how American courts interpret fair use in the AI context. Industry groups are also working on codes of practice that could become de facto standards. The challenge is that AI development moves incredibly fast. In contrast, legal and regulatory processes move slowly, creating a gap where companies are often operating in a gray area without clear rules to follow.

How to scrape the web without facing legal challenges

Web scraping exists in a legal gray zone, and that's probably not changing anytime soon. But there's a big difference between scraping responsibly and searching for trouble. Whether you're gathering public data for research, building a product, or training an AI model, following some basic guidelines can help you out.

Read Terms of Service

Terms of service tell you how the site owner expects their platform to be used. Reading through them helps you understand any restrictions on automated access, data usage, or commercial applications.

Pay attention to sections about acceptable use, data rights, and restrictions on automation. This information helps you decide whether your scraping project aligns with how the site wants to be accessed, which affects both your technical approach and risk assessment.

Use official public APIs when available

APIs provide structured access to data with clear documentation and usage guidelines. They're built for programmatic access, which means better performance, more reliable data structures, and less maintenance overhead compared to HTML parsing. When a site offers an API, you get cleaner data extraction, clear rate limits, and defined data schemas. While some APIs have usage fees, they often save development time and provide more stable long-term access to the data you need.

Don’t overwhelm the server

Spacing out your requests prevents server overload and helps your scraper run reliably over time. Aggressive scraping often triggers anti-bot measures, leading to IP blocks and failed requests. By implementing delays between requests and distributing your scraping over time, you get more consistent results and avoid disrupting the site's normal operations. This approach also makes your traffic pattern less distinguishable from regular users, which typically results in fewer blocks.

Identify your scraper

Set up a proper User-Agent string that identifies your scraper and includes contact information. Something like "MyResearchBot/1.0 (+https://yoursite.com/bot-info; [email protected])" works great. Why? It's a simple way to demonstrate you're respecting both the website from which the data is collected and the owner who put their work into building it.

Be extremely cautious about personal information

Collecting only the specific data you need for your project reduces complexity and risk. Personal information like emails, phone numbers, or user profiles requires careful handling under various privacy regulations depending on your location and the data subjects' locations. In most jurisdictions with data protection laws, organizations are obligated to maintain transparency toward individuals regarding the collection and use of their personal data. These laws generally require that data processing be based on a valid legal basis, such as consent, contractual necessity, or legitimate interest, and that fundamental principles, including purpose limitation, data minimization, and accountability, are consistently applied. Furthermore, individuals’ rights to access, rectify, and delete their personal data must be effectively enforced. Often, aggregated or anonymized data serves the same analytical purpose without the additional compliance requirements.

Consult a legal professional

Certain scenarios benefit from professional legal review before proceeding. Scraping in regulated industries like healthcare, finance, or government data often involves sector-specific compliance requirements. Projects involving large-scale personal data collection need privacy law expertise.

If you're building a commercial product or service based on scraped data, legal review helps identify potential issues early. Operating across multiple countries means navigating different legal frameworks. And if you receive formal objections or legal notices about your scraping activities, professional guidance helps you respond appropriately. Early legal consultation on complex projects typically costs far less than addressing problems after they develop.

Bottom line

Web scraping isn't illegal by default, but it operates where multiple laws are applicable, like copyright, data privacy, computer access, and contract law. What matters is the context – what data you're collecting, where it's from, how you're accessing it, and what you're doing with it.

The safest approach combines technical best practices with legal awareness. Collect only publicly available data, respect rate limits, use official, website-owner-provided APIs when available, avoid collecting personal data without consent, and transform scraped information into something new rather than republishing it.

For personal research or small projects using publicly available factual data, you're generally fine. But if you're building a commercial product, operating across borders, collecting personal information at scale, or working in regulated industries, it’s best to get a legal professional on your side.

Collect publicly available data easier

Start a 7-day free trial of Web Scraping API and enjoy worry-free data collection.

About the author

Benediktas Kazlauskas

Content Team Lead

Benediktas is a content professional with over 8 years of experience in B2C, B2B, and SaaS industries. He has worked with startups, marketing agencies, and fast-growing companies, helping brands turn complex topics into clear, useful content.

Connect with Benediktas via LinkedIn.

All information on Decodo Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may belinked therein.