End-to-End AI Workflows with LangChain and Web Scraping API

AI has evolved from programs that just follow rules to systems that can learn and make decisions. Businesses that understand this shift can leverage AI to tackle complex challenges, moving beyond simple task automation. In this guide, we'll walk you through how to connect modern AI tools with live web data to create an automated system that achieves a specific goal. This will give you a solid foundation for building even more sophisticated autonomous applications.

Justinas Tamasevicius

Last updated: Oct 22, 2025

11 min read

The rise of the AI workforce: from chatbots to autonomous agents

The public imagination has been captured by conversational AI, where Large Language Models (LLMs) act as sophisticated partners in dialogue. This, however, is just the first phase of a much larger tech shift. The next frontier moves beyond simple response generation to a paradigm of autonomous action, introducing AI agents: systems that don't merely respond, but actively reason, plan, and execute multi-step tasks to achieve high-level goals.

While a chatbot is a conversational partner, an AI agent is a digital employee. It’s the engine behind AI workflow automation.

What is AI workflow automation?

At its core, AI workflow automation is the use of intelligent systems to streamline and adapt multi-step processes rather than following fixed, pre-programmed instructions.

Unlike traditional automation that follows a rigid, rule-based script, these AI-driven workflows are autonomous. You give the agent a goal, and it independently figures out the necessary steps to accomplish it, learning from data and making dynamic, context-aware decisions.

This is a game-changer because it addresses two of the main bottlenecks in data-intensive projects: manual data collection and the complexity of integrating disparate data sources. By moving from script-based process automation to goal-driven autonomy, organizations can turn live data into insights in real time. The result is better efficiency and accuracy, and teams are freed from repetitive tasks for more creative and strategic work.

Understanding the core concepts

Before delving into the code, it's important to understand the core concepts behind modern AI workflow automation.

Traditional vs. AI-powered

The distinction between AI-powered automation and traditional, rule-based automation is a fundamental paradigm shift. Traditional automation, like Robotic Process Automation (RPA), operates like a train on a fixed track – it's highly efficient at executing a predefined sequence of steps but can't deviate from its path. On the other hand, AI workflow automation functions like a self-driving car given a destination; it perceives its environment, makes dynamic decisions, and navigates unexpected obstacles to reach its goal.

This difference comes down to a few key capabilities. AI-driven systems excel at handling unstructured data, such as the text of an email or a news article, whereas traditional automation typically requires structured inputs, like forms or spreadsheets. AI systems can also learn from data patterns to improve their performance and adapt to changing conditions – a feat that's impossible for rigid, rule-based scripts that often break when a website's layout changes.

The following table gives you a clear, side-by-side comparison of these two approaches.

| Feature | Traditional automation | AI automation |
|---|---|---|
| Core logic | Rule-based, follows a predefined script. | Goal-oriented, uses a reasoning engine to decide steps. |
| Decision making | Static, based on if-then conditions. | Dynamic, adaptive, and context-aware. |
| Data handling | Primarily structured data (e.g., forms, databases). | Handles both structured and unstructured data (e.g., articles, emails). |
| Adaptability | Brittle, breaks when UI or process changes. | Resilient, can adapt to changes and handle exceptions. |
| Example | A script that copies data from a spreadsheet to a web form. | An agent that reads customer emails, understands intent, and decides whether to file a support ticket or search a knowledge base. |

Anatomy of a LangChain agent

The LangChain framework acts as the central nervous system of the AI agent. It provides the cognitive architecture that enables the agent to reason, plan, and orchestrate its actions.

At the heart of our project is a LangChain agent. It's crucial to understand that the agent isn't the LLM itself, but a system that uses an LLM as its central reasoning engine to orchestrate a series of actions.

- The ReAct framework (reason + act). The agent's logic is based on a powerful framework known as ReAct. This pattern is a continuous loop: the agent reasons about the task to form a plan, selects a tool and acts by executing it, and then observes the result to inform its next reasoning cycle. This loop continues until the agent determines the goal has been achieved. It's how an agent breaks down complex, multi-step problems into a manageable sequence of thought, action, and observation.

- Tools. An LLM by itself is a closed system. It can't access real-time information or perform actions in the outside world. LangChain tools fix this. They're functions or interfaces the agent can call to interact with external systems like APIs, databases, or search engines. The real power of an agent comes from this fusion of an LLM's reasoning with the practical capabilities of its tools. The LLM provides the plan ("what" and "why"), and the tools provide the execution ("how").

- LangGraph. The agent in this tutorial is built using createReactAgent, a prebuilt function from the LangGraph library (imported from @langchain/langgraph/prebuilt). LangGraph models agent behavior as a graph: nodes are steps such as a model call or a tool call, and edges define which step runs next. This explicit structure gives you finer control over state and execution flow, which makes complex agentic workflows more reliable, and it's the approach the LangChain ecosystem currently recommends for building agents. For a deeper dive into orchestrating AI agents, check out our guide on AI agent orchestration.
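The reason, act, observe cycle is easier to see without a framework around it. Here is a minimal TypeScript sketch of that loop, assuming a hypothetical `Reasoner` function standing in for the LLM and two stub tools standing in for search and scraping. None of these names are LangChain or LangGraph APIs, and real model and tool calls would be asynchronous; createReactAgent runs this kind of loop for you.

```typescript
// Hypothetical types: a decision is either a tool call or a final answer.
type Decision =
  | { kind: "tool"; tool: string; input: string }
  | { kind: "final"; answer: string };

// A tool is a named function plus a description the reasoner can read.
interface Tool {
  toolName: string;
  description: string;
  run: (input: string) => string;
}

// The reasoner (an LLM in a real agent) sees the goal plus all observations so far.
type Reasoner = (goal: string, observations: string[]) => Decision;

function runReactLoop(goal: string, reason: Reasoner, tools: Tool[], maxSteps = 5): string {
  const observations: string[] = [];
  for (let step = 0; step < maxSteps; step++) {
    // 1. Reason: decide what to do next given everything observed so far.
    const decision = reason(goal, observations);
    if (decision.kind === "final") return decision.answer;
    // 2. Act: look up and execute the chosen tool.
    const tool = tools.find((t) => t.toolName === decision.tool);
    // 3. Observe: record the result (or an error) for the next reasoning cycle.
    observations.push(tool ? tool.run(decision.input) : `Unknown tool: ${decision.tool}`);
  }
  return "Stopped: step limit reached without a final answer.";
}

// Stub tools standing in for Google Search and the web scraper (hypothetical).
const tools: Tool[] = [
  { toolName: "google_search", description: "Find article URLs", run: (q) => `https://example.com/${encodeURIComponent(q)}` },
  { toolName: "web_content_scraper", description: "Fetch page text", run: (url) => `Article text from ${url}` },
];

// A scripted reasoner: search first, scrape the result, then answer.
const fakeReasoner: Reasoner = (goal, obs) => {
  if (obs.length === 0) return { kind: "tool", tool: "google_search", input: goal };
  if (obs.length === 1) return { kind: "tool", tool: "web_content_scraper", input: obs[0] };
  return { kind: "final", answer: `Briefing on "${goal}" based on: ${obs[1]}` };
};

console.log(runReactLoop("AI in healthcare", fakeReasoner, tools));
```

The `maxSteps` cap plays the same role as a recursion limit in a real agent: it stops a confused model from looping forever.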

Overcoming the data barrier with Web Scraping API

For an agent to reason about the world, it needs real-time data. Web scraping is the primary way to get this data, but building and maintaining a robust scraping infrastructure is a massive engineering challenge. Doing it yourself means solving several hard problems:

- Anti-bot defenses. Modern websites use sophisticated measures to detect and block automated traffic.

- CAPTCHAs. These automated challenges can stop a scraper in its tracks.

- IP blocking. Too many requests from a single IP address will quickly get you blocked, so traffic has to be rotated across many IPs.

- JavaScript rendering. Many sites load content dynamically, so the raw HTML that a simple scraper receives often lacks the content you actually need. This applies to a large share of today's websites.

A managed Web Scraping API, such as Decodo's, solves these problems. It serves as the agent's senses, giving it the ability to perceive and interact with the live internet. It handles the heavy lifting of web data extraction, like IP rotation, JavaScript rendering, and anti-bot countermeasures, so the agent can reliably retrieve real-time information. You can also explore how Decodo's API integrates with AI-powered workflows through the Model Context Protocol (MCP), n8n automation, and AI assistants like Claude and ChatGPT.

Chat models

Chat models like Google's Gemini are the agent's intellect or cognitive engine. As a powerful Large Language Model (LLM), Gemini provides the core reasoning capability within the LangChain framework, allowing the agent to understand user requests, formulate plans, and synthesize information into coherent outputs.

Building the trend analysis agent: step-by-step implementation

Now, let’s build a functional application that acts as an autonomous intelligence agent. While our example focuses on generating a market intelligence report, this same architectural pattern could be adapted for a manufacturing workflow using sensors for predictive maintenance, or for enhancing customer support workflows with intelligent issue resolution. For our project, the goal is clear:

- Input. You provide a topic of interest (e.g., "AI in healthcare").

- Process. The AI agent autonomously searches the web for the most recent and relevant articles on the topic. It then scrapes the full content of these articles for in-depth analysis.

- Output. The agent synthesizes its findings and generates a concise, professionally formatted executive intelligence briefing, highlighting key trends, business impacts, and actionable recommendations.

Setting up your development environment

Before writing any code, let's walk through setting up your development environment. This section serves as a LangChain tutorial for beginners, guiding you through the essential first steps.

Step 1: Initialize a Node.js project

First, create a new directory for the project and initialize it with npm. Open your terminal and run these commands:

The npm init command creates a package.json file, which manages your project's dependencies.

Step 2: Install required dependencies

Our project uses several key packages. Install them using npm:

Here’s a quick rundown of what each package does:

- dotenv – loads environment variables from a .env file so you can keep your API keys secure.

- @langchain/google-genai – the integration package for using Google's Gemini models with LangChain.

- @langchain/langgraph – the library that provides the graph-based architecture for creating robust agents.

- @decodo/langchain-ts – the official package with pre-built LangChain tools for interacting with the Decodo Scraper API.

- readline – Node.js's built-in module for building the command-line interface (CLI). It ships with Node.js, so there's nothing extra to install for it.

Step 3: Set up your API keys

The agent needs credentials for two services:

1. Decodo. Go to the Decodo dashboard to get your Web Scraping API (Advanced) username and password. For a detailed walkthrough, see our quick start documentation or watch the step-by-step video tutorial. Create a .env file and add them:

2. Google Gemini. Visit Google AI Studio to generate an API key for the Gemini model. Add this key to your .env file as well:

The dotenv package will automatically load these variables when the application starts.

Step 4: Configure TypeScript

You also need to create a tsconfig.json file in your project's root directory and paste the following content:

Getting started

With the prerequisites in place, it's time to write the agent itself.

Step 1: Setup and imports

First, create your main application file, trend-analysis-agent.ts.

At the very top of this new file, we'll add all the necessary imports and set up our environment. This includes loading the dotenv package, importing classes from LangChain and Decodo, and defining a TypeScript interface for our agent's configuration.

Add this code to the top of trend-analysis-agent.ts:
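The configuration interface and credential check might look something like the following minimal sketch. The `AgentConfig` fields and the environment variable names (`DECODO_USERNAME`, `DECODO_PASSWORD`, `GOOGLE_API_KEY`) are assumptions; match them to whatever you put in your .env file. In the full file, dotenv (for example via `import 'dotenv/config'`) fills `process.env` before this runs.

```typescript
// Hypothetical configuration shape; field names are illustrative, not a Decodo or LangChain type.
interface AgentConfig {
  decodoUsername: string;
  decodoPassword: string;
  googleApiKey: string;
  model: string;
  temperature: number;
}

// Hypothetical variable names: use the names your .env file actually defines.
const REQUIRED_VARS = ["DECODO_USERNAME", "DECODO_PASSWORD", "GOOGLE_API_KEY"] as const;

function loadAgentConfig(env: Record<string, string | undefined>): AgentConfig {
  // Collect every missing or blank variable so they can all be fixed at once.
  const missing = REQUIRED_VARS.filter((key) => !env[key]?.trim());
  if (missing.length > 0) {
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }
  return {
    decodoUsername: env.DECODO_USERNAME!,
    decodoPassword: env.DECODO_PASSWORD!,
    googleApiKey: env.GOOGLE_API_KEY!,
    model: "gemini-2.5-flash", // the model used in this article
    temperature: 0.3, // low temperature for focused reasoning
  };
}
```

Calling `loadAgentConfig(process.env)` once at startup fails fast with a single message listing every missing variable, rather than surfacing an authentication error halfway through an analysis.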

Step 2: Define the agent class and constructor

Next, let's create the main class for our application, TrendAnalysisAgent. This class will hold all the logic for our agent.

We'll also define the constructor, which is the special function that runs when we create a new instance of the class. It will initialize our agent and the command-line interface.

Add this code below your imports:
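As a rough skeleton of that class, assuming a hypothetical `AgentRunner` type in place of the object createReactAgent returns, the constructor builds the agent once and stores it. The method bodies are placeholders, and the readline setup the constructor also performs is left out so the sketch can run without a terminal.

```typescript
// Hypothetical stand-in for the agent object that createReactAgent returns.
type AgentRunner = (prompt: string) => Promise<string>;

class TrendAnalysisAgent {
  private readonly agent: AgentRunner;

  constructor() {
    // Build the agent once, up front, so configuration problems surface at startup.
    this.agent = this.initializeAgent();
  }

  // Placeholder: Step 3 replaces this with credential checks, tool setup,
  // the Gemini model, and createReactAgent.
  private initializeAgent(): AgentRunner {
    return async (prompt) => `stub response for: ${prompt}`;
  }

  // Placeholder: Step 4 fills this in with the real prompt and agent call.
  async performTrendAnalysis(topic: string): Promise<string> {
    return this.agent(`Analyze recent trends in ${topic}`);
  }
}
```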

Step 3: Initialize the agent (tools & model)

The constructor calls a method named initializeAgent. Let's create that now. This method assembles the agent's "brain" and "hands". It will:

- Load and validate API credentials.

- Initialize the tools (web scraper and Google Search).

- Initialize the LLM (reasoning engine).

- Assemble the agent using createReactAgent.

Add this initializeAgent method inside the TrendAnalysisAgent class:

This method handles several critical tasks.

First, it sets up the tools. The key step here is customizing each tool's name and description. This isn't a minor tweak; it's a best practice for multi-tool agent design. The agent's reasoning engine (the LLM) doesn't inherently understand what a tool does. It relies entirely on this metadata to decide which tool to call and when.

By changing the name to web_content_scraper and providing a detailed description (e.g., "Use this tool FIRST", "Always use this tool AFTER"), we give the LLM a direct instruction manual, which significantly enhances its ability to formulate a correct plan.
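To see why the metadata matters, consider this framework-free sketch. The `ToolSpec` type, the `withMetadata` and `renderToolCatalog` helpers, and the `google_search` name are hypothetical. With LangChain, you'd change the name and description on the actual tool objects, and the framework sends them to the model as structured tool definitions rather than a text list; either way, the description is text the model reasons over.

```typescript
// Hypothetical tool descriptor: metadata the LLM reads, plus the function it triggers.
interface ToolSpec {
  toolName: string;
  description: string;
  run: (input: string) => Promise<string>;
}

// Return a copy with new metadata; the underlying behavior is unchanged.
function withMetadata(tool: ToolSpec, toolName: string, description: string): ToolSpec {
  return { ...tool, toolName, description };
}

// Roughly what the model "sees" when choosing which tool to call.
function renderToolCatalog(tools: ToolSpec[]): string {
  return tools.map((t) => `- ${t.toolName}: ${t.description}`).join("\n");
}

// Generic stubs with vague metadata, as a tool might ship by default.
const genericScraper: ToolSpec = { toolName: "scraper", description: "Scrapes a URL.", run: async (url) => `content of ${url}` };
const genericSearch: ToolSpec = { toolName: "search", description: "Searches.", run: async (q) => `results for ${q}` };

const catalog = renderToolCatalog([
  withMetadata(genericSearch, "google_search", "Use this tool FIRST to find recent article URLs for the topic."),
  withMetadata(genericScraper, "web_content_scraper", "Always use this tool AFTER google_search to fetch each article's full text."),
]);
console.log(catalog);
```

Compare "Scrapes a URL." with the rewritten descriptions: only the latter tell the model in what order to use the tools.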

Second, it initializes the ChatGoogleGenerativeAI model:

- model: 'gemini-2.5-flash' specifies the Gemini model, chosen for its speed and cost-effectiveness in multi-step agentic workflows.

- apiKey is securely loaded from our environment variables.

- temperature: 0.3 controls the randomness of the model's output (often described as its creativity). A low value like 0.3 keeps the agent's reasoning focused and factual while still allowing some nuance.

Finally, createReactAgent assembles the agent, taking the model (the brain) and the tools (the hands) and wiring them together into a fully functional, autonomous agent.
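For intuition about the temperature setting above, here is the standard temperature-scaled softmax: the model's raw scores (logits) are divided by T before being turned into probabilities, so T below 1 sharpens the distribution toward the top choice. The logits are made-up numbers, and hosted models like Gemini may combine temperature with other sampling settings (such as top-p and top-k), so treat this as an illustration of the concept rather than Gemini's exact implementation.

```typescript
// Temperature-scaled softmax: divide logits by T, then normalize to probabilities.
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) throw new Error("temperature must be > 0");
  const scaled = logits.map((z) => z / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((z) => Math.exp(z - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Made-up scores for three candidate tokens.
const logits = [2.0, 1.0, 0.5];
console.log(softmaxWithTemperature(logits, 1.0).map((p) => p.toFixed(3)));
console.log(softmaxWithTemperature(logits, 0.3).map((p) => p.toFixed(3)));
```

With these logits, lowering T from 1.0 to 0.3 raises the top token's probability from roughly 0.63 to roughly 0.96, which is why a low temperature makes the agent's choices more consistent from run to run.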

Step 4: Implement the core analysis logic

With the agent initialized, we need the main function that uses it. performTrendAnalysis takes the user's topic, constructs the full prompt (using helper methods we'll build next), and calls the agent to get a result.

Add this performTrendAnalysis method inside the TrendAnalysisAgent class:
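A minimal sketch of that method, assuming the compiled agent follows LangGraph's convention: it takes `{ messages: [...] }` and returns the updated message list, with the final answer as the last message. `Message`, `AgentLike`, and the one-line `buildAnalysisQuery` placeholder are simplified, hypothetical stand-ins for the real LangChain types and the Step 5 prompt builder. To stay self-contained, the sketch takes the agent as a parameter instead of reading it from the class.

```typescript
// Simplified stand-ins for LangChain message types (assumption, not the real classes).
type MessageContent = string | Array<{ type: string; text?: string }>;
interface Message {
  role?: string;
  content: MessageContent;
}
interface AgentLike {
  invoke(input: { messages: Message[] }): Promise<{ messages: Message[] }>;
}

// Chat models may return plain text or a list of content parts; keep only the text parts.
function contentToText(content: MessageContent): string {
  if (typeof content === "string") return content;
  return content
    .filter((part) => part.type === "text")
    .map((part) => part.text ?? "")
    .join("");
}

// Placeholder for the full prompt builder described in Step 5.
function buildAnalysisQuery(topic: string): string {
  return `Produce an executive trend briefing on: ${topic}`;
}

async function performTrendAnalysis(agent: AgentLike, topic: string): Promise<string> {
  const query = buildAnalysisQuery(topic);
  // The agent runs its whole reason/act/observe loop inside this single call.
  const result = await agent.invoke({ messages: [{ role: "user", content: query }] });
  const last = result.messages[result.messages.length - 1];
  if (!last) throw new Error("Agent returned no messages.");
  return contentToText(last.content);
}
```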

Step 5: Craft the agent's instructions (prompts)

An agent without instructions is inert. For agentic workflows, a prompt isn't just a question; it's a comprehensive standard operating procedure (SOP) that guides the agent's entire thought process. We'll use an advanced prompting technique: breaking the logic into modular components.

Now, it's time to add the following four helper methods inside the TrendAnalysisAgent class.

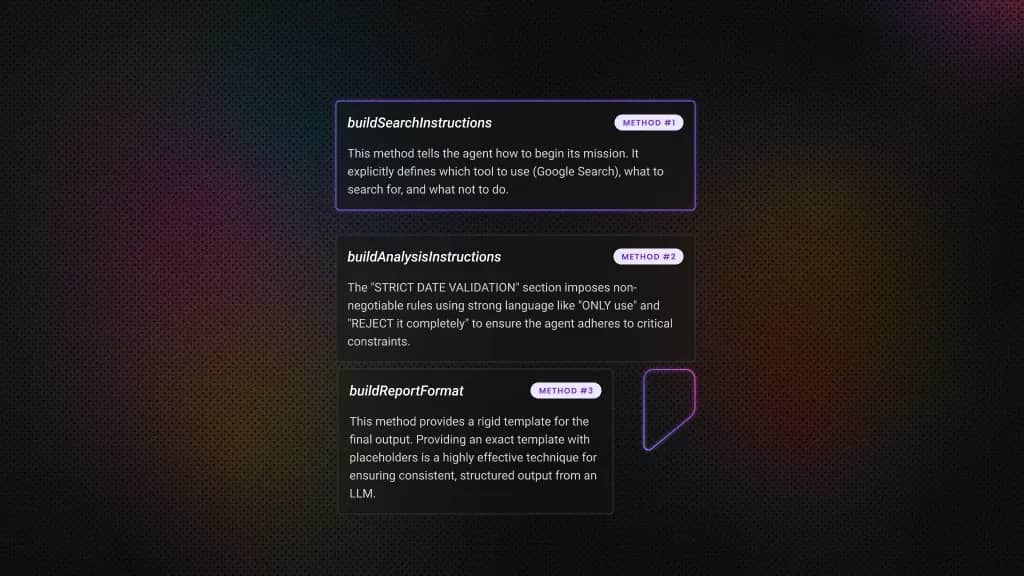

The buildSearchInstructions method

This method tells the agent how to begin its mission. It explicitly defines which tool to use (Google Search), what to search for, and what not to do (it offloads the cognitive burden of date filtering, telling the agent to validate dates after scraping).
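A hypothetical version of this method might read as follows. The wording, the `google_search` tool name, and the `maxArticles` parameter are illustrative assumptions, not the article's exact prompt.

```typescript
// Hypothetical prompt builder; wording and parameters are illustrative.
function buildSearchInstructions(topic: string, maxArticles = 5): string {
  return [
    "SEARCH INSTRUCTIONS:",
    // Which tool to use and what to search for.
    `1. Use the google_search tool to find recent news articles about "${topic}".`,
    `2. Select up to ${maxArticles} of the most relevant results.`,
    // What NOT to do: defer date filtering until the full text is available.
    "3. Do NOT filter results by date at this stage; dates are validated after scraping.",
  ].join("\n");
}
```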

The buildAnalysisInstructions method

This is the most complex part of the SOP, giving the agent its rules for analysis. This demonstrates several powerful prompting techniques:

- Guardrails. The "STRICT DATE VALIDATION" section imposes non-negotiable rules using strong language like "ONLY use" and "REJECT it completely" to ensure the agent adheres to critical constraints.

- Conditional logic. "FLEXIBLE SOURCE HANDLING" gives the agent an if-else structure for handling different scenarios, making it more resilient.

- Persona and tone. Instructions like "think like a business consultant briefing a CEO" guide the style of the final output.
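Here is a hypothetical sketch of how buildAnalysisInstructions could combine those three techniques. The section headings echo the article; the wording, the source-count threshold, and the `windowDays` parameter are assumptions. One design choice worth copying is computing an explicit cutoff date in code, because the model has no reliable sense of today's date.

```typescript
// Hypothetical builder; section names echo the article, the wording is illustrative.
function buildAnalysisInstructions(windowDays: number, now: Date = new Date()): string {
  // Give the model an explicit cutoff date instead of asking it to infer "recent".
  const cutoff = new Date(now.getTime() - windowDays * 24 * 60 * 60 * 1000);
  const cutoffIso = cutoff.toISOString().slice(0, 10); // YYYY-MM-DD in UTC
  return [
    // Guardrails: strong, non-negotiable language.
    "STRICT DATE VALIDATION:",
    `- ONLY use articles published on or after ${cutoffIso}.`,
    "- If an article's publication date is older or cannot be found, REJECT it completely.",
    "",
    // Conditional logic: an explicit if/else for thin results.
    "FLEXIBLE SOURCE HANDLING:",
    "- If at least 3 articles pass validation, base the analysis on those articles only.",
    "- Otherwise, state how many sources were usable and limit conclusions accordingly.",
    "",
    // Persona and tone.
    "STYLE:",
    "- Think like a business consultant briefing a CEO: concise, concrete, focused on impact.",
  ].join("\n");
}
```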

The buildReportFormat method

This method provides a rigid template for the final output. Providing an exact template with placeholders is a highly effective technique for ensuring consistent, structured output from an LLM, as it removes ambiguity and forces the agent to organize its findings.
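A hypothetical template in the spirit of buildReportFormat, using the sections the briefing is meant to contain (key trends, business impact, recommendations) plus an assumed summary and sources section. The `missingSections` helper is an optional extra, not part of the article's code, for checking whether the model's answer actually followed the template.

```typescript
// Hypothetical headings and placeholders; one list drives both the template and the check.
const TEMPLATE: Array<[string, string]> = [
  ["EXECUTIVE SUMMARY", "[2-3 sentences on the most important development]"],
  ["KEY TRENDS", "1. [Trend]: [evidence from a cited article]"],
  ["BUSINESS IMPACT", "[How these trends affect costs, revenue, or competition]"],
  ["RECOMMENDATIONS", "- [Actionable step]"],
  ["SOURCES", "- [Title] ([publication date]) [URL]"],
];

function buildReportFormat(): string {
  const body = TEMPLATE.map(([heading, placeholder]) => `${heading}\n${placeholder}`).join("\n\n");
  return `Format the final report EXACTLY as follows:\n\n${body}`;
}

// Optional post-check: which template sections are absent from the model's answer?
function missingSections(report: string): string[] {
  return TEMPLATE.map(([heading]) => heading).filter((heading) => !report.includes(heading));
}
```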

The buildAnalysisQuery method

This top-level prompt, called by performTrendAnalysis, acts as a declarative program for the agent. It defines the overall goal and then breaks it down into a clear, logical sequence of named steps (SEARCH, EXTRACT, ANALYZE, REPORT) that the LLM can follow reliably.
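A hypothetical buildAnalysisQuery could state the goal and then lay out the four named steps, splicing in the other builders' output. To keep the sketch self-contained, those sections are passed in as plain strings through an assumed `PromptSections` interface.

```typescript
// Hypothetical container for the output of the other prompt builders.
interface PromptSections {
  search: string;
  analysis: string;
  reportFormat: string;
}

// Step names SEARCH/EXTRACT/ANALYZE/REPORT follow the article; the wording is illustrative.
function buildAnalysisQuery(topic: string, sections: PromptSections): string {
  return [
    `GOAL: Produce an executive intelligence briefing on "${topic}".`,
    "",
    "Follow these steps in order:",
    "STEP 1 - SEARCH:",
    sections.search,
    "STEP 2 - EXTRACT: Scrape the full text of each selected article with web_content_scraper.",
    "STEP 3 - ANALYZE:",
    sections.analysis,
    "STEP 4 - REPORT:",
    sections.reportFormat,
  ].join("\n");
}
```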

Step 6: Build the CLI and main entry point

To make the agent usable, we'll create the command-line interface (CLI) methods using Node.js's built-in readline module and the final main function to run the script.

Add these two final methods inside the TrendAnalysisAgent class:

This CLI code sets up a continuous loop (while (true)) that prompts you for a topic, invokes the agent's main analysis function, prints the result, and then asks if you want to continue. The try...catch block is a safety net: it ensures that if any part of the agent's execution fails, the program will report the error gracefully instead of crashing.

Finally, add this code outside the class, at the very end of your trend-analysis-agent.ts file. This is the entry point that runs the application.
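A minimal sketch of that CLI loop and entry point, using Node's promise-based `node:readline/promises` API (available in Node.js 17 and later). The `Ask` and `Analyze` function types are hypothetical seams that let the loop run without a real terminal or agent; the prompts and the y/n convention are assumptions.

```typescript
import * as readline from "node:readline/promises";
import { stdin as input, stdout as output } from "node:process";

// Hypothetical seams: how to ask a question, and how to run one analysis.
type Ask = (question: string) => Promise<string>;
type Analyze = (topic: string) => Promise<string>;

// Prompt, analyze, print, repeat, until the user declines to continue.
export async function runCli(ask: Ask, analyze: Analyze, print: (text: string) => void): Promise<void> {
  while (true) {
    const topic = (await ask("Enter a topic to analyze: ")).trim();
    if (!topic) continue; // re-prompt on empty input
    try {
      print(await analyze(topic));
    } catch (err) {
      // Report the failure and keep the session alive instead of crashing.
      print(`Analysis failed: ${err instanceof Error ? err.message : String(err)}`);
    }
    const again = (await ask("Analyze another topic? (y/n): ")).trim().toLowerCase();
    if (again !== "y" && again !== "yes") break;
  }
}

// Entry point: wire the loop to the real terminal and always close readline.
export async function main(analyze: Analyze): Promise<void> {
  const rl = readline.createInterface({ input, output });
  try {
    await runCli((q) => rl.question(q), analyze, console.log);
  } finally {
    rl.close();
  }
}
```

At the bottom of the real file, you'd call something like `main` with a function that forwards each topic to your agent instance's performTrendAnalysis, and attach a `.catch` that logs the error and sets a non-zero exit code.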

Complete code

Here is the complete, final code for trend-analysis-agent.ts. You can use this to verify your work from the steps above or as a complete copy-paste reference.

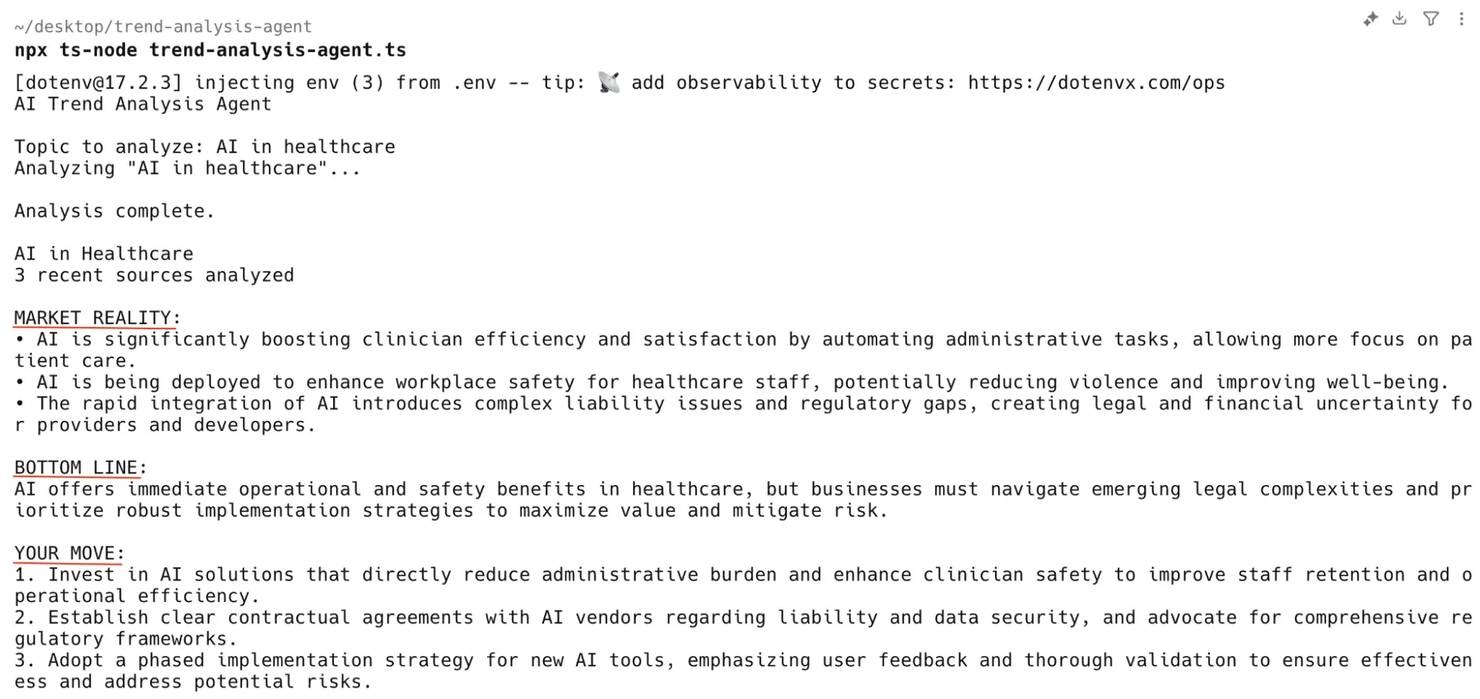

The agent in action

With the code fully combined, it's time to run the agent and see what it can do. This section shows you how to execute the script and, more importantly, how to interpret the agent's internal thought process.

Execution and observation

1. Save the code. Save the complete code into a file named trend-analysis-agent.ts.

2. Run the script. Open your terminal in the project directory and run the application:

Troubleshooting tip from our scraping experts: if you later run into module loading errors with the @decodo/sdk-ts package, there's a simple workaround. Open node_modules/@decodo/sdk-ts/package.json and remove the line "type": "module". This can resolve ES module vs. CommonJS conflicts in some Node.js environments. Keep in mind that a clean reinstall of your dependencies (for example, with npm ci) will undo this change.

3. Interact with the agent. The application will start and prompt you for a topic. Enter a topic of interest, for example, “AI in healthcare”.

4. Review the report. The agent will begin its work and, after a short time, produce a complete executive report:

Bonus: Visualizing the workflow with LangSmith

For more complex agents, reading through console logs can be a hassle. That's where a dedicated observability platform like LangSmith becomes invaluable. LangSmith is a tool from LangChain designed specifically to trace, monitor, and debug LLM applications.

By setting a couple of environment variables in your .env file, all of your agent's activity will be automatically logged to the LangSmith platform.
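Because a missing or misspelled variable silently disables tracing, a small startup check can save some confusion. This sketch assumes the variable names from LangSmith's documentation, `LANGSMITH_TRACING` and `LANGSMITH_API_KEY` (older setups use `LANGCHAIN_TRACING_V2` and `LANGCHAIN_API_KEY`); the `tracingStatus` helper itself is hypothetical.

```typescript
// Hypothetical startup check; variable names follow LangSmith's documented conventions.
function tracingStatus(env: Record<string, string | undefined>): string {
  // Prefer the newer LANGSMITH_* names, falling back to the older LANGCHAIN_* ones.
  const enabled = (env.LANGSMITH_TRACING ?? env.LANGCHAIN_TRACING_V2) === "true";
  const hasKey = Boolean(env.LANGSMITH_API_KEY ?? env.LANGCHAIN_API_KEY);
  if (!enabled) return "LangSmith tracing is off.";
  return hasKey ? "LangSmith tracing is on." : "LangSmith tracing is on, but no API key is set.";
}

console.log(tracingStatus(process.env));
```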

A run in LangSmith gives you a clear, visual graph of the agent's execution, showing each call to the LLM and each tool execution in sequence. This makes it incredibly easy to see exactly what the agent did, what data it received, and why it made the decisions it did – which is essential for debugging and optimizing agent performance.

Emerging trends and the future of AI automation

The agent we built in this tutorial is powerful, but it's just the beginning. The field of AI workflow automation is evolving at a breathtaking pace, with several key trends pointing toward an even more autonomous future.

Multi-agent systems

The next frontier involves moving from a single agent to collaborative teams of specialized agents. For example, in a sophisticated customer service workflow, a team of different AI models could work together. A conversational AI agent might first interact with the customer, a second agent could then analyze technical logs to diagnose the problem, and a third could perform document summarization to create a concise ticket for a human expert.

However, this increasing complexity introduces some of the key AI workflow automation challenges. Beyond the initial implementation costs, organizations must plan for change management and address serious ethical issues. For instance, bias found in training datasets can negatively impact everything from hiring workflows to loan applications, making careful oversight and robust exception handling for edge cases critical for success.

Frameworks like LangGraph are explicitly designed to facilitate these multi-agent architectures, allowing patterns of collaboration and delegation.
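At its simplest, the customer service team described above is a sequential handoff in which each specialized agent enriches a shared state and passes it on. The sketch below models that with hypothetical stub agents (plain async functions, with a keyword check standing in for an LLM); in LangGraph, each would be a node, and routing between them could be conditional rather than strictly linear.

```typescript
// Shared state that each hypothetical agent reads and returns an updated copy of.
interface SupportCase {
  customerMessage: string;
  diagnosis?: string;
  ticketSummary?: string;
}
type CaseAgent = (state: SupportCase) => Promise<SupportCase>;

// Stand-ins for the conversational, diagnostic, and summarization agents.
const intakeAgent: CaseAgent = async (s) => ({ ...s, customerMessage: s.customerMessage.trim() });
const diagnosticAgent: CaseAgent = async (s) => ({
  ...s,
  diagnosis: /timeout/i.test(s.customerMessage) ? "Upstream timeout" : "Needs manual review",
});
const summaryAgent: CaseAgent = async (s) => ({
  ...s,
  ticketSummary: `Issue: ${s.customerMessage} | Diagnosis: ${s.diagnosis ?? "none"}`,
});

// Run agents in order, handing each one the previous agent's output.
async function runPipeline(agents: CaseAgent[], initial: SupportCase): Promise<SupportCase> {
  let state = initial;
  for (const agent of agents) state = await agent(state);
  return state;
}
```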

Autonomous workflow composition

Right now, a developer designs the workflow (the "SOP" prompt). An emerging trend is the development of "meta-agents" that can autonomously design the workflow itself based on a high-level business goal. A user could simply state, "Generate a weekly competitive analysis report", and the AI would figure out the necessary steps, select the right tools, and construct the entire automation pipeline.

Decentralized AI

Looking further ahead, there's a growing interest in moving AI systems away from centralized cloud servers and onto decentralized networks. Decentralized AI workflows could offer huge advantages in data privacy, security, and censorship resistance, as computation and data ownership would be distributed across a network rather than controlled by a single company.

Bottom line

This guide walked through building an autonomous AI agent. It covered the shift from rule-based to intelligent automation, setting up LangChain with the Gemini LLM and Decodo's web scraping tools, and crafting the agent's prompts. The key takeaways apply to any developer or organization entering the field of AI engineering:

- Agents reason, they don't just execute. The core of an agent is its ability to use an LLM to make decisions within a ReAct loop of reasoning, acting, and observing.

- Tools are the bridge to reality. An agent's capabilities are defined by its tools. Without access to external information and actions, an LLM's intelligence remains latent.

- Prompting is a form of programming. For agents, a well-structured prompt isn't just a query but a declarative program – an SOP that orchestrates complex behavior through natural language.

These principles can help you build AI-enabled workflows that deliver real-time, data-driven insights.

Next steps

To continue your journey into building autonomous AI systems, the following resources are highly recommended:

- Official LangChain.js documentation. The definitive source for all LangChain components, concepts, and integrations.

- LangGraph documentation. Essential reading for moving beyond pre-built agents to designing custom, stateful, and multi-agent workflows.

- Decodo API documentation. Explore the full capabilities of the Web Scraping API, including advanced features and other data extraction tools.

- Decodo Knowledge Hub. Browse more articles on artificial intelligence.


About the author

Justinas Tamasevicius

Head of Engineering

Justinas Tamaševičius is Head of Engineering with over two decades of expertise in software development. What started as a self-taught passion during his school years has evolved into a distinguished career spanning backend engineering, system architecture, and infrastructure development.

Connect with Justinas via LinkedIn.

All information on Decodo Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.