Google Removes num=100 Parameter: Impact on Search and Data Collection

In September 2025, Google officially discontinued the num=100 parameter. If you're an SEO professional, data analyst, or someone who prefers viewing all results at once, you've likely already felt the impact on your workflows. In this article, we'll explain what changed, why Google likely made this move, who it affects, and most importantly, how to adapt.

Kotryna Ragaišytė

Last updated: Oct 23, 2025

6 min read

What was the Google num=100 parameter?

The num=100 parameter was a long-standing Google search URL modifier that let users increase the number of results displayed per page. By changing a URL like google.com/search?q=coffee to google.com/search?q=coffee&num=100, users could view up to 100 results at once instead of the default 10.

It worked reliably for over a decade. Google even exposed the same option in the old search settings menu, where users could manually set their “results per page” to a maximum of 100.

This supported use cases like:

- SEO keyword research and rank tracking. Analysts could see where a site ranked across the entire top 100, catching positions beyond page 1 that still delivered meaningful traffic.

- Competitive analysis. Viewing all top 100 results on one page made it easier to spot emerging competitors and SERP patterns.

- Bulk data collection and scraping. Harvesting large sets of search result URLs and snippets for data analysis or machine learning, with minimal requests.

- Power-user efficiency. Some advanced users set their default to 100 results to avoid clicking through multiple pages when researching a topic in depth.

To visualize the difference: by default, you can only see 10 results and have to navigate to the next page to view more. With &num=100, you could scroll through an extended list of results on page one. This meant less clicking and a more comprehensive overview at a glance.

Breaking news: The evolving workaround landscape

After Google removed the num=100 parameter in September 2025, developers began testing possible workarounds.

A few temporary solutions circulated, but Google quickly shut them down. It became clear that large-scale result extraction was no longer something Google would allow through simple query modifications.

The impact came fast. Teams that relied on num=100 for easier data access started facing disrupted workflows. According to an analysis covered by Search Engine Land, about 77% of the sites studied showed reduced keyword visibility after the change, and many sites saw a decline in reported desktop impressions.

Why did Google remove the num=100 parameter?

Although Google has not made an official statement, several factors likely influenced the decision:

- Server efficiency. Serving 100 results requires far more processing power than serving 10, which adds up at scale.

- Mobile-first experience. A 100-result page loads slowly and is impractical to scroll through on mobile devices.

- Scraping prevention. num=100 made SERP scraping too efficient, allowing full result extraction in a single call.

- User behavior alignment. Most people don't scroll past the top results, so supporting 100 per page catered to a small minority.

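In summary, Google likely removed num=100 to improve system efficiency, keep result pages practical on mobile, align search with typical user behavior, and protect its data from mass scraping. A likely side effect is cleaner metrics, since automated 100-result page loads no longer register impressions for low-ranked pages.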

It's a move that serves Google's interests in maintaining a fast, consistent, and less-scraped search platform, even if it inconveniences a subset of users and tool providers.

Impact on different user groups

Let's examine how the removal affected different groups of users and professionals:

SEO professionals

SEOs monitoring hundreds or thousands of keywords now require significantly more requests to capture full ranking distributions. What once required 1,000 queries may now need 10,000. This increases scraping time, infrastructure use, and the likelihood of blocks.
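The tenfold jump follows directly from the page size. Here is a minimal sketch of the arithmetic; the function name and defaults are illustrative, not taken from any particular tool:

```python
import math


def requests_needed(keywords: int, depth: int = 100, per_page: int = 10) -> int:
    """Total page requests needed to cover `depth` results for each keyword."""
    pages_per_keyword = math.ceil(depth / per_page)
    return keywords * pages_per_keyword


# 1,000 keywords tracked to position 100
before = requests_needed(1_000, depth=100, per_page=100)  # one page per keyword
after = requests_needed(1_000, depth=100, per_page=10)    # ten pages per keyword
print(before, after)  # 1000 10000
```

Lowering `depth` to 30 or 50 shrinks the multiplier to 3x or 5x, which is worth checking before scaling up infrastructure.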

SEO platforms had to quickly adjust their tracking engines, often at higher operational cost, which may eventually impact pricing. Some users also experienced apparent drops in impressions as reporting structures changed, even when rankings stayed similar.

Data analysts & researchers

Academic and market research teams that relied on script-based bulk extraction now face higher friction. Scripts that once fetched 100 results per query must now paginate across multiple calls.

This complicates pipelines, increases processing time, and disrupts historical consistency, as new datasets are no longer directly comparable to past collections.

Web scraping operations

Perhaps those feeling the biggest pain are the folks running web scraping operations – whether that's an in-house data engineering team at a company, or an individual developer maintaining a scraping script.

Instead of fetching all results in one request, they now must paginate using parameters like start=0, start=10, start=20, and so on. This multiplies request volume, increases bandwidth and proxy consumption, heightens detection risk, and raises the likelihood of blocks.

Advanced users & productivity enthusiasts

Even individuals who used num=100 for convenience lost a preferred workflow. Scanning long lists of results in one view is no longer supported, forcing a return to clicking through pages. There is no native substitute in Google settings, and productivity extensions that relied on it must be reworked or retired.

Overall, the impact of killing num=100 reverberated through technical and professional circles. SEO agencies, software developers, data scientists, and advanced search users all had to recalibrate.

In the short term, teams scrambled to implement quick fixes, such as adding pagination logic or reducing the scope of data collection.

But the long-term question loomed larger: How do we ensure reliable access to the data we need when Google can change the rules overnight? This leads us to consider the various options available for obtaining comprehensive Google search results.

Workarounds and alternative approaches

If you've been affected by Google's num=100 change, the big question is: what now? Let's start by laying out your options.

Understanding your options

You have multiple paths depending on scale, resources, and urgency:

- Manual or ad-hoc methods. Manually clicking through pages or using browser tools. Suitable only for one-off needs.

- Unofficial tricks. Hidden parameters or endpoints that are not supported by Google. Usually short-lived and risky.

- DIY scraping with pagination. Writing scripts to paginate using start= values and scrape SERPs. Works, but requires maintenance and faces detection issues.

- Official Google Custom Search API. Google's supported method for getting results through JSON. Reliable but costly at scale and limited to 10 results per call.

- Third-party SERP APIs. Tools like Decodo's Web Scraping API that handle proxy rotation, pagination, and parsing for you. Paid but designed for scale and consistency.

Each option carries trade-offs in terms of time, reliability, scalability, cost, and maintenance effort.

Manual pagination (limited effectiveness)

The simplest fallback is manually paging through results. You run the query and click through pages 1 to 10 to reach 100 results. You could also write a lightweight macro to assist.

There are some pros:

- Easy to do in a browser

- No direct cost

- Works within Google's intended usage

But there are also some drawbacks:

- Extremely time-consuming beyond a few queries

- Automation risks detection and blocks

- Data may shift between pages for volatile queries

- Not practical for recurring or large-scale collection

This method is best for infrequent use or small personal research tasks.

Undocumented alternative endpoints (volatile)

After num=100 was removed, some developers located internal Google endpoints used by other Google interfaces. While these may temporarily return additional results, they are fragile, unsupported, and often violate Google's terms. Google actively blocks these once discovered.

This path is typically used by hobbyists testing ideas rather than businesses that need reliable, long-term data access.

Browser automation (complex and resource-heavy)

Some users turned to browser automation using tools like Selenium or Puppeteer to simulate human browsing, run queries, click pagination, and extract results.

The pros of this method:

- High accuracy and realistic SERP rendering

- Can capture dynamic elements or infinite scroll behavior

- Flexible for interactive elements

The cons:

- Much slower than API-based methods

- Requires substantial infrastructure to run at scale

- Prone to CAPTCHAs and blockers

- Requires ongoing maintenance as Google's UI changes

Browser automation is best suited to small projects with technical resources, or to temporary use when an immediate workaround is required.

Official Google Custom Search API (limited scale)

Google's Custom Search JSON API provides a structured, approved way to retrieve SERP-like data. You create a Custom Search Engine (CSE) configured to search the full web, then query it with an API key.

For users choosing this method, there are some wins:

- Officially supported and stable

- Returns structured JSON (no HTML scraping needed)

- Safe from being blocked when used within quota

Still, there are quite a few drawbacks:

- Limited free quota (typically 100 queries per day)

- Paid usage becomes expensive at higher volumes

- Restricted to 10 results per call, requiring pagination

- May not fully match live Google rankings

- Not designed for high-volume SEO or competitive analysis tools

This method is best for small-scale applications, prototypes, internal tools, or limited daily querying until a scalable solution is adopted.
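To make the 10-results-per-call limit concrete, here is a minimal sketch that builds the paginated request URLs for the Custom Search JSON API and reads the standard `items` fields from a response. The helper names are illustrative, and `YOUR_API_KEY` and `YOUR_ENGINE_ID` are placeholders you would replace with your own credentials:

```python
from urllib.parse import urlencode

CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def cse_page_urls(query: str, api_key: str, cx: str, total: int = 100) -> list[str]:
    """Build one request URL per page of up to 10 results (start is 1-based)."""
    urls = []
    for start in range(1, total + 1, 10):
        params = {
            "key": api_key,
            "cx": cx,
            "q": query,
            "num": min(10, total - start + 1),  # the API returns at most 10 per call
            "start": start,
        }
        urls.append(f"{CSE_ENDPOINT}?{urlencode(params)}")
    return urls


def extract_items(response: dict) -> list[tuple[str, str]]:
    """Pull (title, link) pairs out of a decoded JSON response."""
    return [(item["title"], item["link"]) for item in response.get("items", [])]


urls = cse_page_urls("coffee", "YOUR_API_KEY", "YOUR_ENGINE_ID")
print(len(urls))  # 10 calls for 100 results
```

Each of those ten calls counts against your daily quota, which is why the free tier runs out quickly for rank tracking.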

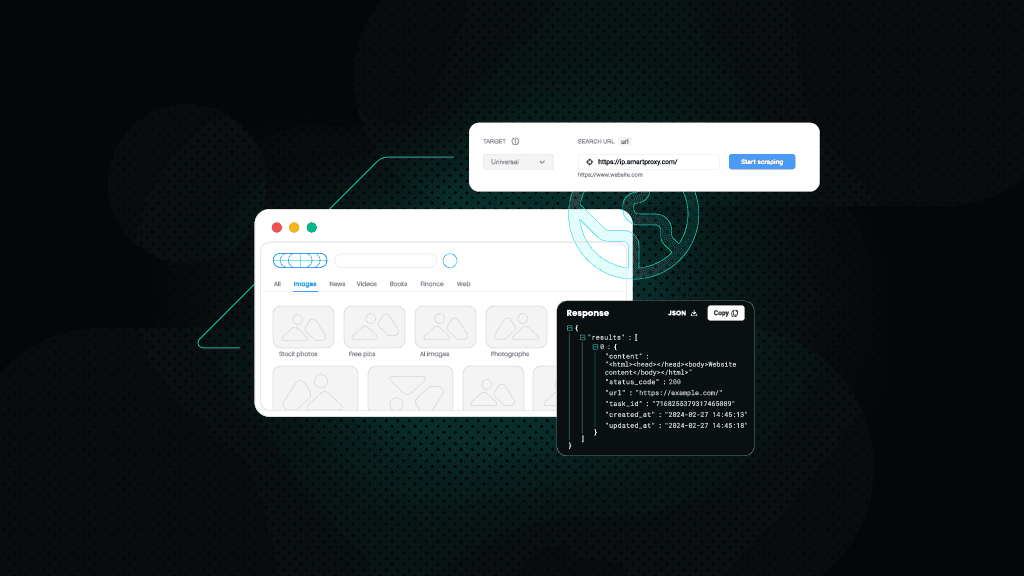

Professional SERP APIs (Recommended for businesses)

For most serious users and businesses, the most practical long-term solution is using a professional SERP API. These third-party services are designed specifically to deliver search results at scale without requiring you to manage proxies, handle CAPTCHAs, or maintain scraping infrastructure.

APIs like Decodo's Web Scraping API handle proxy rotation, CAPTCHA solving, pagination, timing, and rate limits in the background. Instead of parsing HTML manually, you receive structured JSON or CSV with titles, URLs, snippets, and SERP features.

You can request large result sets and specify location or device preferences, often in a single call. When Google changes its layout or logic, the provider updates its backend so your workflow continues without modification.

Power users can collect real-time SERP data more easily and unlock these advantages:

- Not dependent on Google's removed parameters. Professional APIs do not rely on a single URL parameter like num=100. When Google removed that parameter, these providers adjusted their backend logic, allowing users to continue retrieving 100 results with no code changes.

- Automatic proxy rotation and CAPTCHA handling. Providers maintain large pools of residential and mobile proxies from multiple regions. They detect blocks, rotate IPs, and solve CAPTCHAs so you receive clean responses without disruptions.

- High success rates and low latency. Enterprise-grade APIs are designed for performance and scale, typically achieving 99%+ success rates while returning full result sets in seconds, even under high request volumes.

- Structured output. Results are provided in clean JSON or CSV, with titles, URLs, snippets, rankings, and often special SERP elements such as Featured Snippets, People Also Ask boxes, and image carousels. No HTML parsing or DOM maintenance is required.

- Pagination abstraction. If you request 100 or 200 results, the provider handles the pagination loop automatically and returns a single combined dataset.

- Geographic and device targeting. You can simulate searches from specific cities or countries and choose between mobile or desktop views. User agents and localized IPs are handled for you.

- Scalable and supported. Professional APIs are built to handle thousands or millions of requests daily. Many offer uptime guarantees, customer support, and SLAs that make them suitable for critical workflows.

This method is best for business-critical operations requiring scale and reliability. If you run an SEO SaaS, a marketing agency tracking thousands of keywords, or any application that needs large volumes of Google data reliably and regularly, professional SERP APIs are the go-to solution. For a detailed comparison, check out our guide on choosing the best web scraping services.

| Approach | Reliability | Scalability | Dev Effort | Cost at scale | Ideal use |
| --- | --- | --- | --- | --- | --- |
| Manual pagination | 1/5 | 1/5 | 2/5 | High | Personal |
| Unofficial endpoints | 1/5 | 2/5 | 2/5 | Medium | Testing only |
| Browser automation | 3/5 | 2/5 | 4/5 | High | Small projects |
| Google API | 4/5 | 3/5 | 2/5 | Medium | Low volume |
| Pro SERP APIs | 5/5 | 5/5 | 1/5 | Low | Enterprise scale |

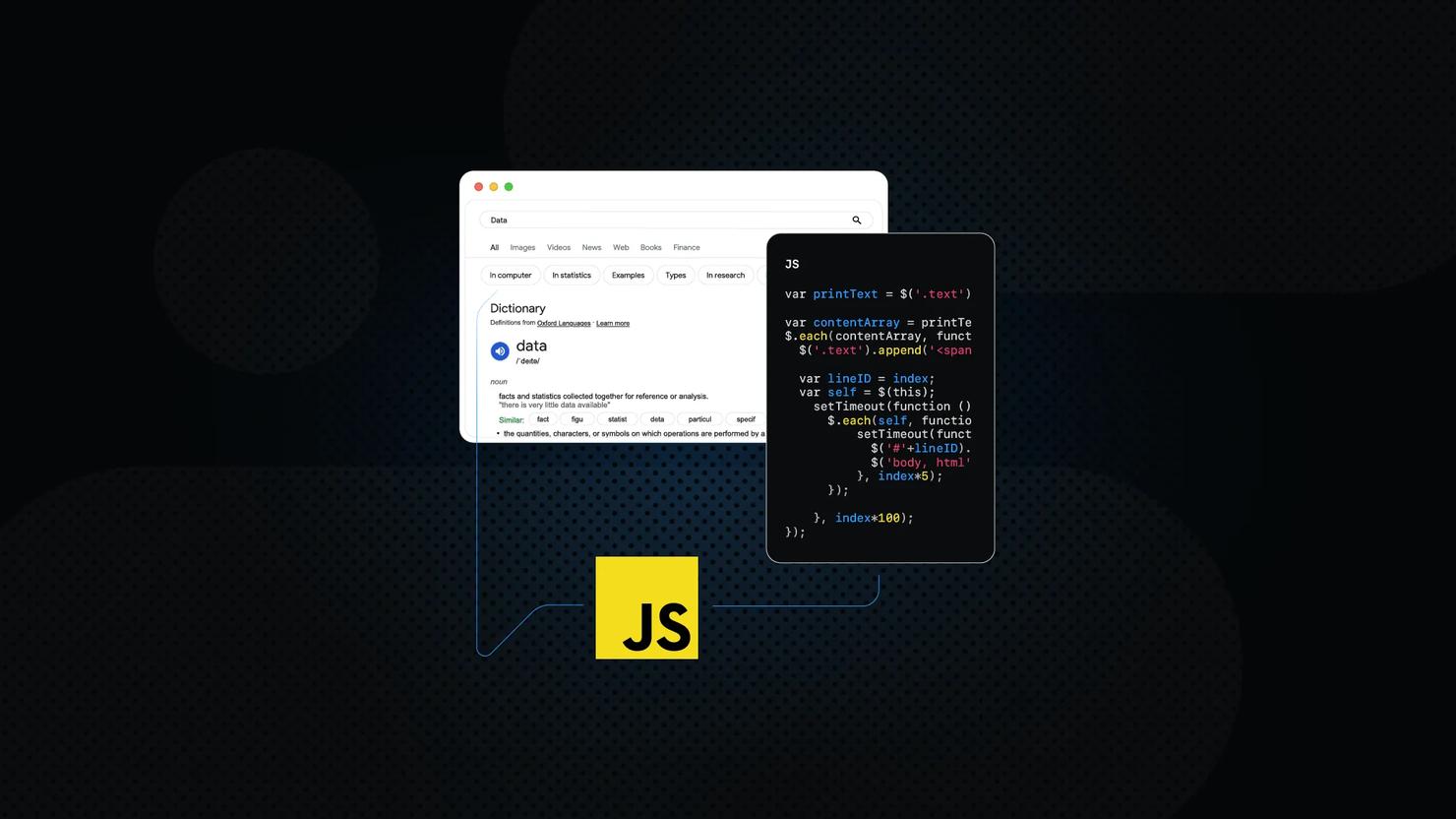

Code comparison: Three approaches

Let's look at how different approaches handle the same task: getting 100 search results for "coffee".

Approach 1: The old way (no longer works)

First, here's what we all used to do. A simple, beautiful one-liner that's now completely broken:
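A minimal sketch of that request, assuming only Python's standard library. The `build_legacy_url` helper name is illustrative:

```python
from urllib.parse import urlencode


def build_legacy_url(query: str, num: int = 100) -> str:
    """Build the pre-September-2025 style URL that asked for `num` results."""
    return "https://www.google.com/search?" + urlencode({"q": query, "num": num})


print(build_legacy_url("coffee"))
# https://www.google.com/search?q=coffee&num=100
```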

This approach died in September 2025. The num=100 parameter is ignored, and you'll only get 10 results back.

Approach 2: Manual pagination (inefficient)

So what's the "free" alternative? You could paginate through results manually, but watch how quickly this gets complicated:
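A minimal sketch of the pagination loop, under stated assumptions: `fetch_page` is a hypothetical function you would supply yourself to download one results page and return the result URLs it contains (the HTML parsing and block handling live there), and the 3-second delay is an arbitrary example value.

```python
import time
from typing import Callable
from urllib.parse import urlencode


def page_urls(query: str, total: int = 100, per_page: int = 10) -> list[str]:
    """One URL per results page, using start=0, 10, 20, ..."""
    return [
        "https://www.google.com/search?" + urlencode({"q": query, "start": start})
        for start in range(0, total, per_page)
    ]


def collect_results(
    query: str,
    fetch_page: Callable[[str], list[str]],  # hypothetical: downloads and parses one page
    total: int = 100,
    delay_seconds: float = 3.0,
) -> list[str]:
    """Fetch every page in turn and merge result URLs, dropping duplicates."""
    seen: set[str] = set()
    merged: list[str] = []
    for i, url in enumerate(page_urls(query, total)):
        if i > 0:
            time.sleep(delay_seconds)  # pause between requests
        for link in fetch_page(url):
            if link not in seen:  # results can shift between pages
                seen.add(link)
                merged.append(link)
    return merged[:total]
```

Even in this stripped-down form you need offset math, delays, and deduplication before a single line of parsing is written.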

This technically works, but it's a maintenance nightmare. You're making 10x more requests, parsing complex HTML that changes without warning, and you'll still hit CAPTCHAs that kill your script.

Approach 3: Decodo Web Scraping API (recommended)

Now here's the professional approach – clean, reliable, and actually simpler than the original num=100 method:
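A sketch of the general shape of such a call. The endpoint URL, payload fields, and auth header below are hypothetical placeholders, not Decodo's actual API, so check the provider's documentation for the real values:

```python
import json
from urllib.request import Request

# Hypothetical endpoint and field names, for illustration only.
API_URL = "https://serp-api.example.com/v1/search"


def build_serp_request(query: str, api_token: str, limit: int = 100) -> Request:
    """Prepare a single POST request asking for `limit` parsed results."""
    payload = {"query": query, "limit": limit, "parse": True}
    return Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_serp_request("coffee", api_token="YOUR_TOKEN")
# Sending it is one call: urllib.request.urlopen(request)
```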

Notice what you don't have to deal with anymore? No pagination loops to write, no HTML parsing to maintain, no CAPTCHA handling to implement, and no rate-limiting delays to manage. The API handles all this complexity behind the scenes so you can focus on what matters. Just one request that returns exactly what you need in a clean, structured format.

The performance difference is stark: manual pagination takes 10 requests and about 30 seconds, while Decodo's Web Scraping API delivers the same results in one request in about 2 seconds, with 99.86% reliability.

Extracting additional rich search results from Google

So far, we've focused on standard organic listings (formerly 10 or 100 blue links). However, modern Google SERPs include a wide range of rich results and enhanced features that significantly influence visibility and user behavior. Extracting these requires different handling than scraping basic link listings.

What are Google's rich search results?

Google's search results now include a variety of interactive and visually distinct elements beyond traditional organic positions:

- Featured snippets appear at the top of the page and display direct answers using text, bullet lists, or tables.

- People Also Ask (PAA) boxes show expandable related questions that load additional answers dynamically.

- AI Overviews (formerly SGE) provide AI-generated summaries or multi-source overviews at the top of the SERP.

- Image packs, video carousels, and top stories add visual and media-based elements.

- Local packs group map listings, nearby businesses, and other locality-based results.

From an SEO perspective, rich results impact visibility. You might rank number 1 organically, but if a huge featured snippet or map pack is above you, you get less attention.

Conversely, if you can occupy some of these features (like getting your site in a featured snippet or in the PAA), that's valuable. For researchers, these elements are part of the user experience and can be worth analyzing (e.g., how often does Google provide a direct answer vs. just links?).

How to extract rich results: Methods and tools

Standard scraping methods or basic “organic-only” APIs typically miss rich elements due to several technical challenges:

- Many rich features load asynchronously through JavaScript and are not present in the initial HTML.

- Google regularly changes and obfuscates class names, structures, and identifiers to reduce scraping accuracy.

- Rich result layouts vary by device type, region, and query intent.

- Features like "People Also Ask" require interaction because clicking one question can trigger the loading of additional related content.

- Google experiments frequently, meaning formats can change without warning.

As a result, traditional HTML scraping with tools like Beautiful Soup or static HTTP fetchers often fails to detect or correctly parse rich results.

This is where specialized SERP APIs earn their keep. Instead of wrestling with HTML parsing, professional APIs like Decodo's Web Scraping API provide dedicated response fields for each rich result type.

Implementation tips and best practices

If you decide to go after rich results, here are some tips:

- Understand the response structure. Look at how the API represents a featured snippet or a PAA. For instance, a featured snippet might come as part of the first result or as a separate object with snippet_text and a snippet_link.

- Monitor SERP layout changes. Google can change how these features appear. For example, they might increase the number of PAA questions shown, or change the HTML structure of a featured snippet. If using an API, pay attention to their changelogs or announcements. If doing it yourself, you'll need to update your parser when these changes happen.

- Compliance and ethics. Be aware that some rich data is often aggregated from various sources. Ensure you use it within fair use or data guidelines, especially if redistributing. If in doubt, consult a legal professional on your data collection practices.
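As an illustration of the first tip, here is a minimal sketch that handles both response shapes. Only `snippet_text` and `snippet_link` come from the example above; the `featured_snippet`, `organic`, and `url` keys are assumptions, so adjust them to whatever your API actually returns:

```python
from typing import Optional


def find_featured_snippet(response: dict) -> Optional[dict]:
    """Return {'text', 'link'} for the featured snippet, or None if absent."""
    # Shape 1: a separate top-level object (the "featured_snippet" key is assumed).
    snippet = response.get("featured_snippet")
    if snippet:
        return {
            "text": snippet.get("snippet_text"),
            "link": snippet.get("snippet_link"),
        }
    # Shape 2: attached to the first organic result (the "organic" key is assumed).
    organic = response.get("organic") or []
    if organic and organic[0].get("snippet_text"):
        first = organic[0]
        return {
            "text": first["snippet_text"],
            "link": first.get("snippet_link") or first.get("url"),
        }
    return None
```

Returning `None` when nothing matches lets you count how often a query has no featured snippet at all, which is useful data in itself.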

Best practices for the post-parameter era

When Google removed the parameter in September 2025, it highlighted the risk of depending on features a platform can withdraw without notice. To stay resilient, your data workflows should adapt intentionally rather than react under pressure.

#1. Audit current dependencies

List all tools, scripts, and workflows that previously relied on num=100. Separate essential processes from convenience-based ones. Clarify your actual data depth needs; many use cases may only require the top 30 or 50 results. This prevents overbuilding and keeps cost and complexity manageable.
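One way to start the audit is a quick scan of your codebase for the parameter. This is a rough sketch: the file extensions and the regular expression are assumptions about how the parameter might appear in your scripts, so widen them as needed.

```python
import re
from pathlib import Path

# Matches forms such as `&num=100`, `num: 100`, and `"num": 100`.
NUM_PARAM = re.compile(r"""\bnum["']?\s*[=:]\s*100\b""")

SUFFIXES = (".py", ".js", ".ts", ".sh", ".json", ".yaml", ".yml")


def find_num_usages(root: str) -> list[tuple[str, int, str]]:
    """Return (file, line number, line) for every line that mentions num=100."""
    hits = []
    for path in sorted(Path(root).rglob("*")):
        if not path.is_file() or path.suffix not in SUFFIXES:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if NUM_PARAM.search(line):
                hits.append((str(path), lineno, line.strip()))
    return hits
```

Running `find_num_usages(".")` from a repository root gives you a starting list to sort into essential and convenience-based processes.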

#2. Evaluate solution requirements

Determine how many queries you run daily or monthly and what downtime would cost in delays, insights, or client trust. Factor in the total cost of ownership, including developer time, maintenance burden, and scale limitations. Assess how much technical capacity your team has for DIY alternatives versus managed solutions.

#3. Future-proof your infrastructure

Design systems with abstraction layers that allow easy switching between data sources without rewriting core logic. Add fallback logic to degrade gracefully when disruptions occur. Build in monitoring so you can respond proactively when providers or platforms make changes.
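A minimal sketch of what such an abstraction layer could look like. The `SerpSource` interface and `FallbackSerpClient` class are illustrative names, not part of any library:

```python
from typing import Protocol


class SerpSource(Protocol):
    """Anything that can return result URLs for a query."""

    name: str

    def search(self, query: str, limit: int) -> list[str]: ...


class FallbackSerpClient:
    """Try each source in order and fall back to the next on failure."""

    def __init__(self, sources: list[SerpSource]) -> None:
        self.sources = sources
        # (source name, reason) pairs, kept so monitoring can alert on them
        self.failures: list[tuple[str, str]] = []

    def search(self, query: str, limit: int = 100) -> list[str]:
        for source in self.sources:
            try:
                results = source.search(query, limit)
            except Exception as exc:  # any provider error triggers the fallback
                self.failures.append((source.name, repr(exc)))
                continue
            if results:
                return results
            self.failures.append((source.name, "empty result set"))
        return []  # degrade gracefully instead of crashing the pipeline
```

Your reporting code only ever calls `FallbackSerpClient.search`, so swapping a provider means writing one new class rather than touching core logic.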

#4. Test before committing

Use free trials from SERP API providers to benchmark reliability, data accuracy, and speed. Run test queries alongside existing workflows to compare results. Validate alignment with your reporting needs before fully migrating.
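For the side-by-side comparison, a small helper like the one below can quantify how closely a candidate source matches your existing data. The metric names and the top-10 default are illustrative choices, not an industry standard:

```python
def compare_rankings(baseline: list[str], candidate: list[str], top_n: int = 10) -> dict:
    """Compare the top `top_n` URLs from two sources for the same query."""
    base, cand = baseline[:top_n], candidate[:top_n]
    shared = set(base) & set(cand)
    same_position = sum(1 for a, b in zip(base, cand) if a == b)
    denom = max(len(base), 1)  # avoid division by zero on empty baselines
    return {
        "overlap": len(shared) / denom,          # share of baseline URLs also found
        "same_position": same_position / denom,  # share found at the same rank
        "missing": [url for url in base if url not in shared],
    }


report = compare_rankings(["a", "b", "c", "d"], ["a", "c", "b", "e"], top_n=4)
print(report)
# {'overlap': 0.75, 'same_position': 0.25, 'missing': ['d']}
```

Some drift is normal because rankings vary by time, location, and device, so look at the trend across many queries rather than a single mismatch.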

#5. Stay informed

Subscribe to reliable SEO news sources like Search Engine Land, Search Engine Journal, and Search Engine Roundtable. Follow updates from Google Search Central. Join SEO and data scraping communities on Slack, Reddit, or Discord to detect changes early and learn tested solutions from other practitioners.

Wrapping up

Google's removal of the num=100 parameter in September 2025 is part of a broader pattern we've seen before and will see again. Tech platforms are increasingly locking down data access while standardizing user experiences, and this won't be the last parameter Google changes.

That said, there's a clear path forward. For occasional personal use, manual pagination might still suffice. But for business operations, professional SERP APIs are the most reliable option at scale.

The key is to build systems with abstraction layers that let you swap data sources without rewriting your entire codebase, and invest in infrastructure that adapts to change rather than scrambling for the next quick fix each time a parameter disappears.

If you're looking for inspiration on what to build with reliable SERP data, check out our creative web scraping project ideas or learn about the difference between web crawling and web scraping to better understand which approach fits your needs.

About the author

Kotryna Ragaišytė

Head of Content & Brand Marketing

Kotryna Ragaišytė is a seasoned marketing and communications professional with over 8 years of industry experience, including 5 years specialized in tech industry communications. Armed with a Master's degree in Communications, she brings deep expertise in brand positioning, creative strategy, and content development to every project she tackles.

Connect with Kotryna via LinkedIn.

All information on Decodo Blog is provided on an as is basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.