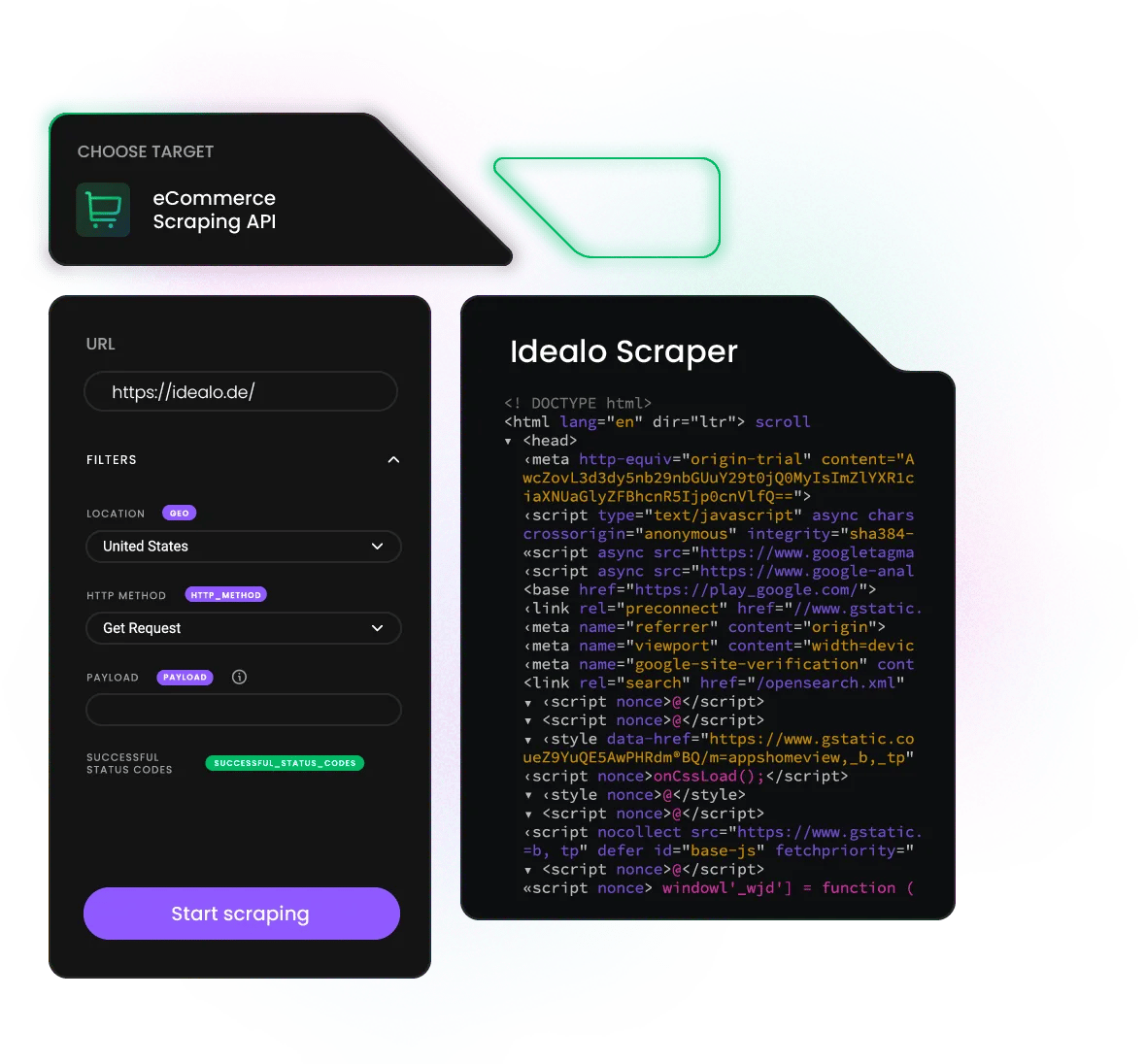

Idealo Scraper API

Monitor product listings, pricing, and inventory changes hassle-free using the Idealo Scraper API* – a few clicks is all it takes to bypass geo-restrictions, CAPTCHAs, and IP blocks.

* This scraper is now part of our Web Scraping API.

14-day money-back option

Zero CAPTCHAs

99.99% success rate

195+ locations

Task scheduling

7-day free trial

Stay ahead of the Idealo scraping game

Extract data from Idealo

Our Web Scraping API is a powerful data collector that combines a web scraper, a data parser, and a pool of 125M+ residential, mobile, ISP, and datacenter proxies.

Here are some of the key data points you can extract:

- Product titles

- Product pricing

- Product descriptions

- Retailer names and offers

- Ratings and reviews

- Shipping options and costs

- Product categories and specifications

What is an Idealo scraper?

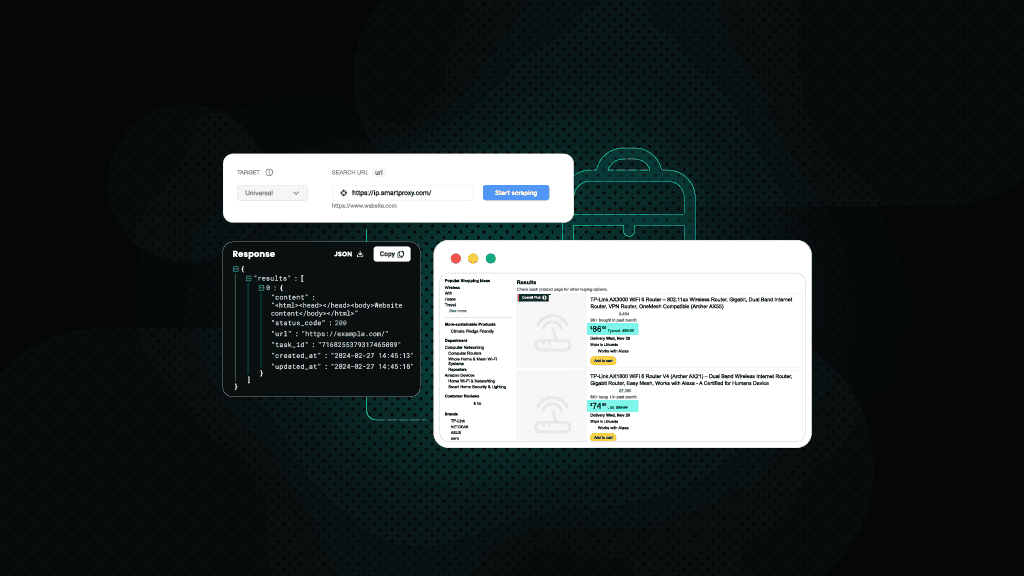

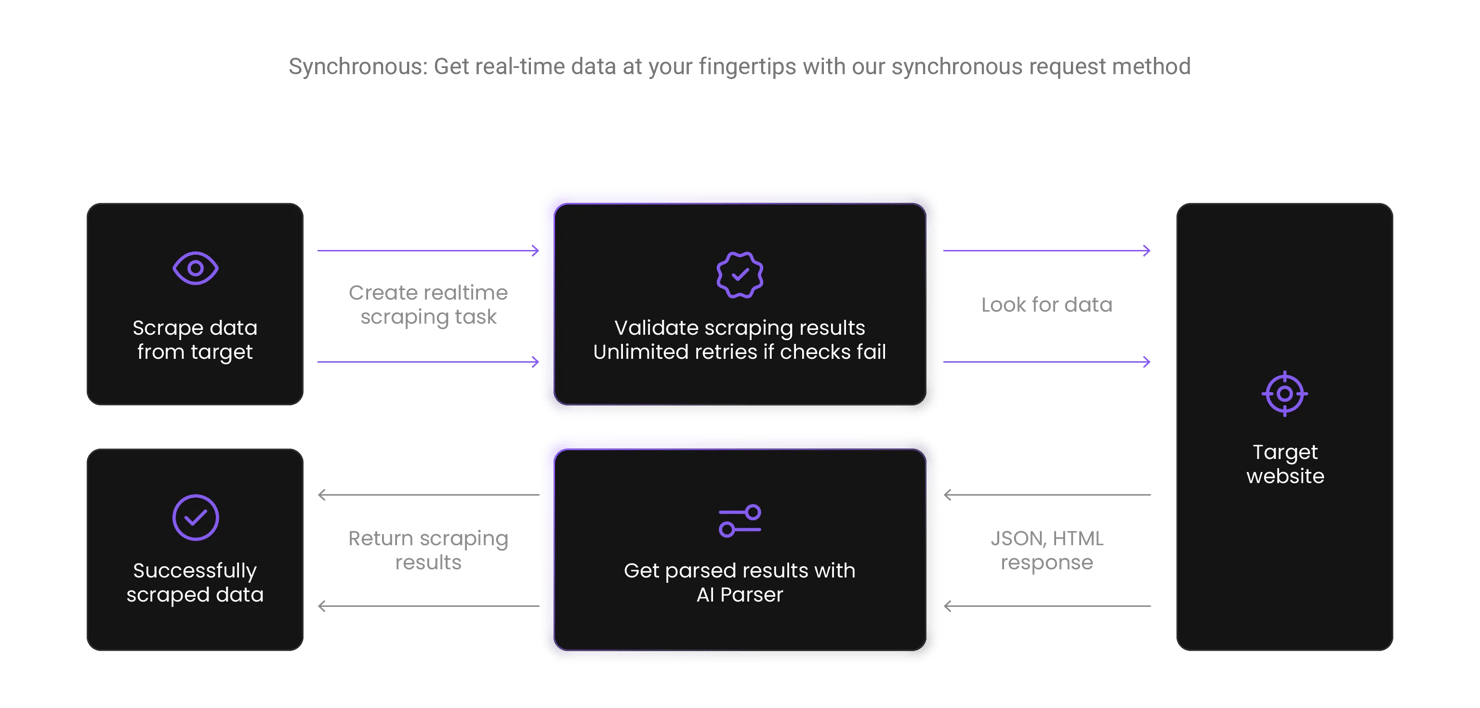

An Idealo scraper is a tool that extracts data from the Idealo website. With our Idealo scraper API, you send a single API request and receive the data you need in raw HTML format. If a request fails, we automatically retry until the data is delivered, and you only pay for successful requests.

Designed by our experienced developers, this tool offers you a range of handy features:

- Built-in scraper and parser

- JavaScript rendering

- Integrated browser fingerprints

- Easy real-time API integration

- Vast country-level targeting options

- CAPTCHA handling

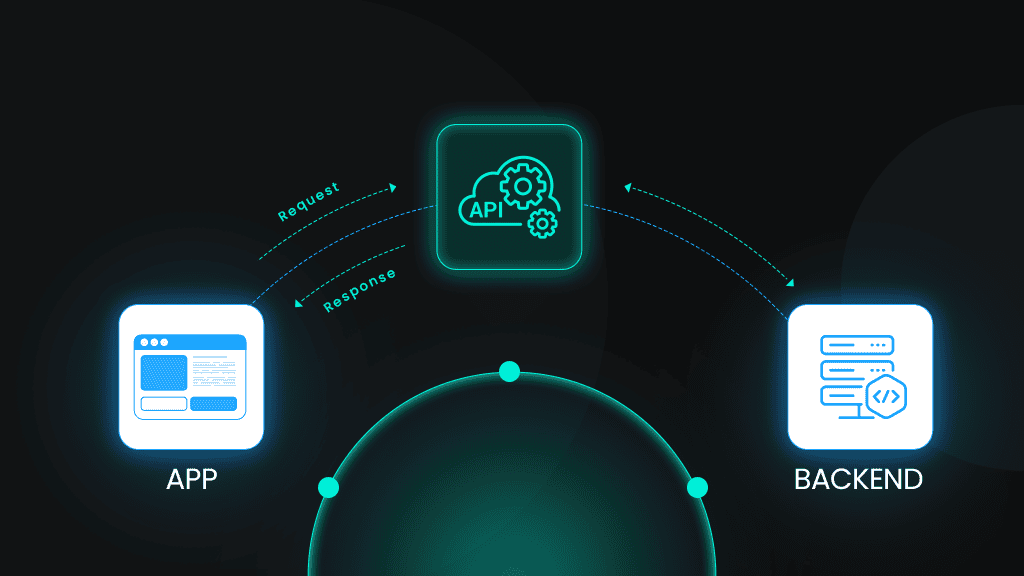

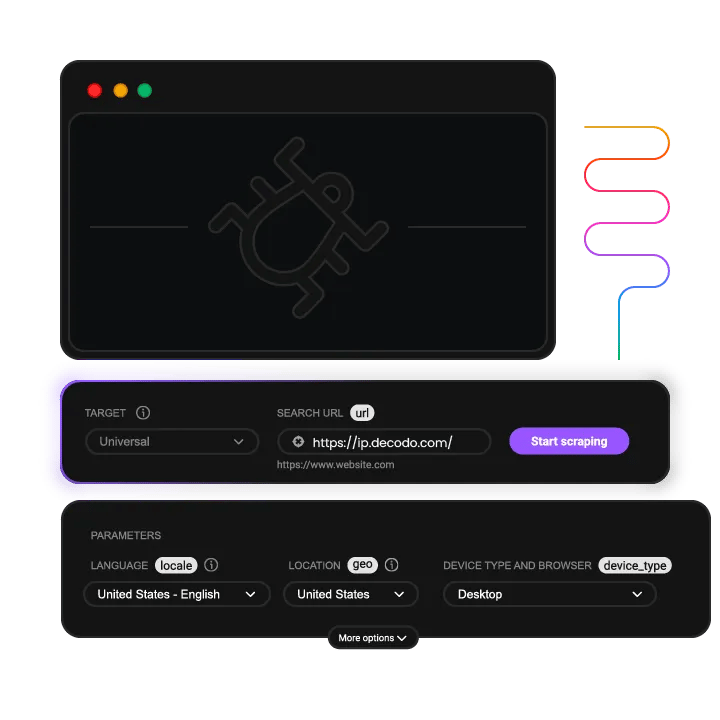

How does the Idealo scraper API work?

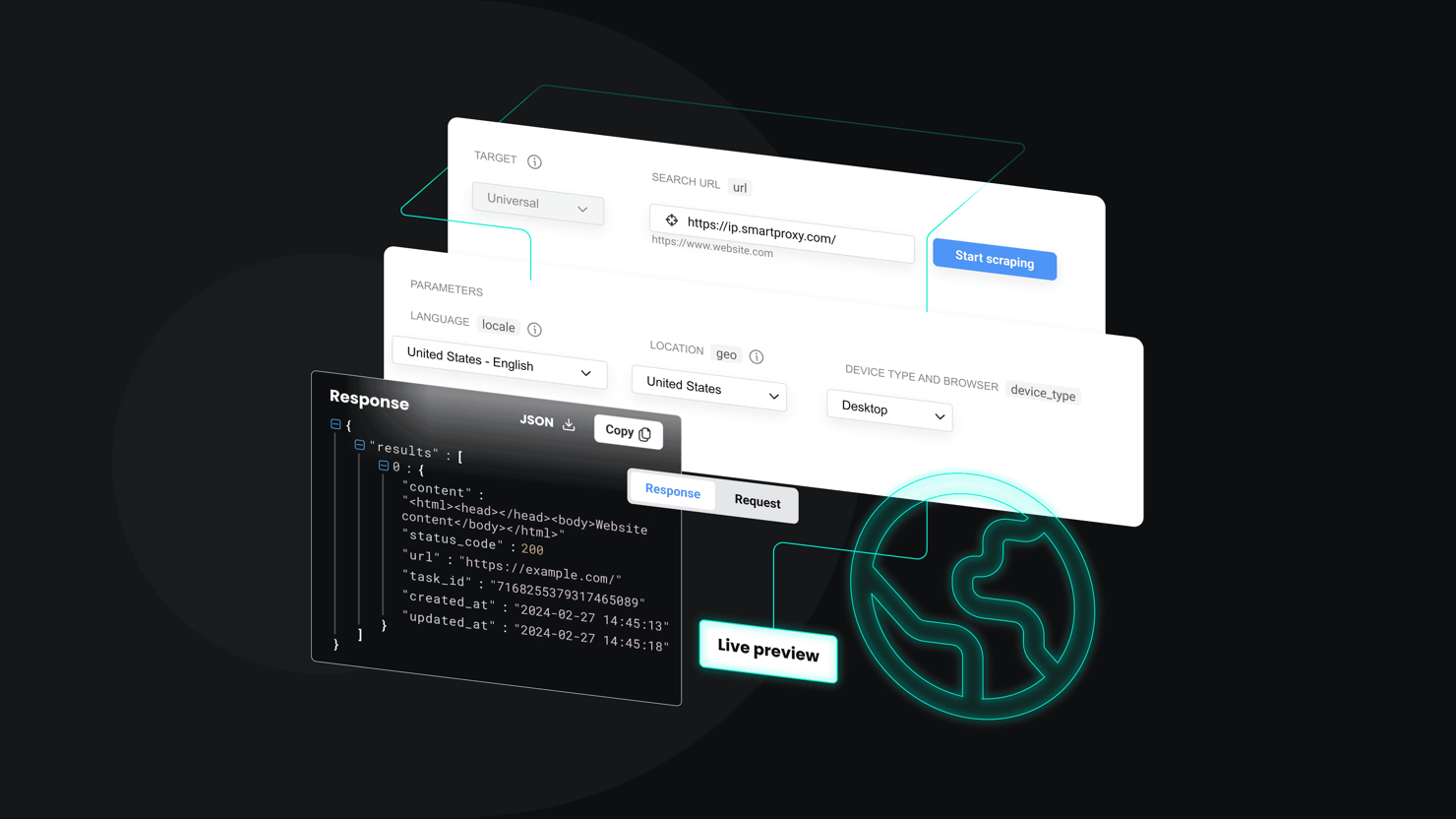

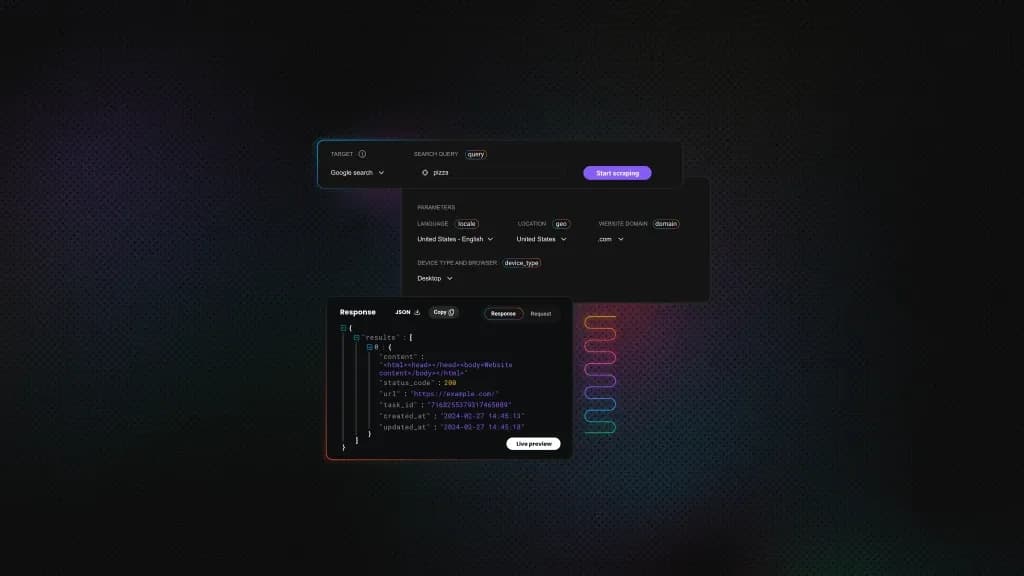

The Idealo scraper API simulates real user behavior to bypass anti-bot systems and extract accurate data from the website. Designed with ease of use in mind, it offers a simple setup process, ready-made scraping templates, and code examples, so even non-developers can start collecting data quickly. Users can fully customize their workflows, manage API settings, and get data in HTML.

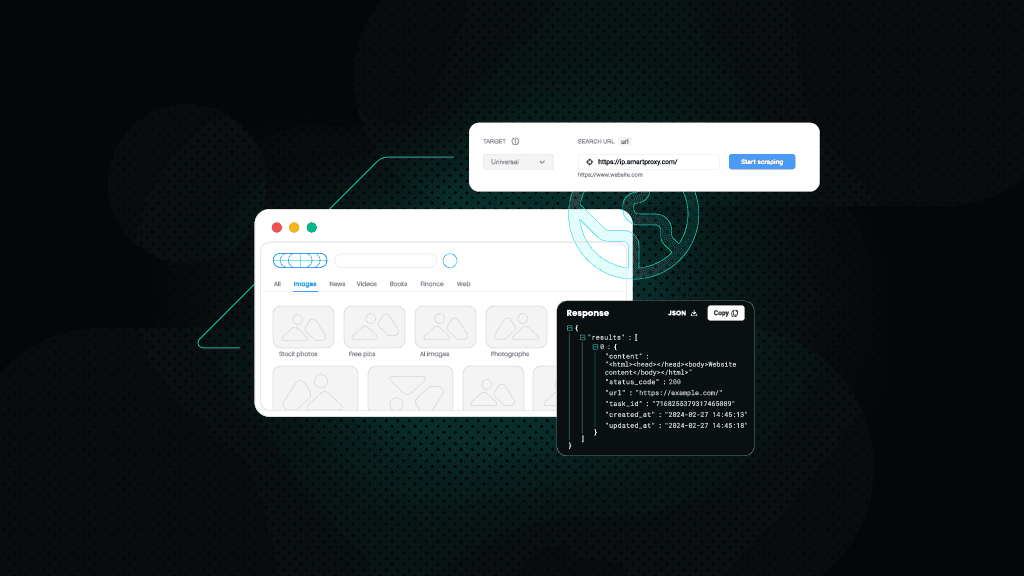

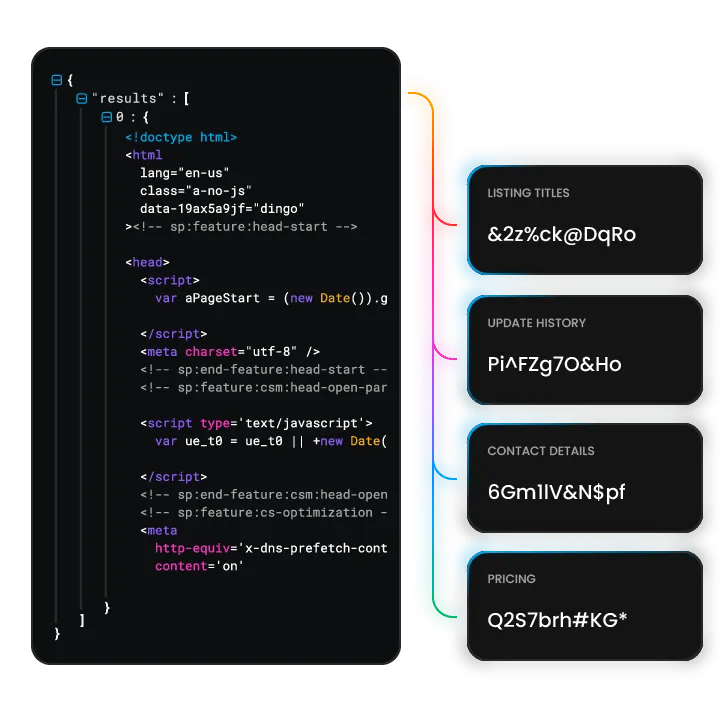

Get data in JSON with AI Parser

Structured data at your fingertips in just a few clicks.

Scrape Idealo with Python, Node.js, or cURL

Because our Idealo API is accessed over HTTP, you can call it from any popular programming language and integrate it with your business tools without hassle.
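For illustration, a Python call might look like the minimal sketch below, which uses only the standard library. The endpoint URL, the Basic authentication scheme, the `url`/`render_js` payload keys, and the helper names `build_scrape_request` and `fetch_html` are all hypothetical placeholders, not the documented API; take the real endpoint, credentials format, and parameter names from the quick start guide.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint: replace with the URL from the quick start guide.
API_ENDPOINT = "https://scraper-api.example.com/v2/scrape"


def build_scrape_request(target_url: str, username: str, password: str,
                         render_js: bool = False) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the API to scrape target_url.

    The payload keys "url" and "render_js" are assumed for illustration only.
    """
    payload = {"url": target_url, "render_js": render_js}
    # Assumed authentication scheme: HTTP Basic with your API credentials.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        API_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "text/html",  # raw HTML is the default response format
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def fetch_html(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Calling `fetch_html(build_scrape_request("https://www.idealo.de/", "YOUR_USERNAME", "YOUR_PASSWORD"))` would send the request. Building and sending are kept separate so you can inspect or log a request before it goes out.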

Collect data effortlessly with Idealo API

Scrape Idealo with ease using our powerful Web Scraping API. From built-in proxies to integrated browser fingerprints, experience seamless data collection without blocks or CAPTCHAs.

Accurate results

Receive real-time HTML results moments after sending your scraping request.

Guaranteed 100% success

Pay only for successfully retrieved results from your Idealo queries.

Real-time or on-demand results

Choose between real-time results or schedule data delivery for later.

Advanced anti-bot protection

Leverage integrated browser fingerprints to stay undetected while collecting data.

Easy integration

Set up the Idealo scraper API in minutes with our quick start guide and code examples.

Proxy integration

Bypass CAPTCHAs, IP blocks, and geo-restrictions with 125M+ IPs built into the API.

API Playground

Test your Idealo data scraping queries in our interactive playground.

7-day free trial

Try our scraping solutions risk-free with a 7-day free trial and 1K requests.

Find the right Idealo product data scraping solution for your use case

Explore our Idealo API and choose the solution that best matches your needs.

| | Core | Advanced |
|---|---|---|
| Description | Essential scraping features to unlock targets efficiently | Premium scraping solution with high customizability |
| Success rate | 100% | 100% |

Anti-bot bypassing

Proxy management

API Playground

Task scheduling

Pre-built scraper

Ready-made templates

Advanced geo-targeting

Premium proxy pool

Unlimited threads & connections

JavaScript rendering

Explore our pricing plans for any Idealo scraping demand

Collect real-time data from any Idealo page and stay ahead of the competition.

| Requests | Regular price /1K req | Discounted price /1K req | Total (billed monthly) |
|---|---|---|---|
| 23K | $1.25 | $0.88 | $20 + VAT |
| 82K | $1.20 | $0.84 | $69 + VAT |
| 216K | $1.15 | $0.81 | $179 + VAT |
| 455K | $1.10 | $0.77 | $349 + VAT |
| 950K | $1.05 | $0.74 | $699 + VAT |
| 2M | $1.00 | $0.70 | $1,399 + VAT |

Use discount code SCRAPE30.
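To get a rough idea of what a given volume costs, you can multiply the number of thousands of requests by the per-1K rate. The sketch below assumes the listed per-1K rates apply linearly and excludes VAT; the published totals are the amounts actually billed and may differ slightly from this estimate. The function name `estimate_monthly_cost` is hypothetical.

```python
def estimate_monthly_cost(requests: int, price_per_1k: float) -> float:
    """Estimate spend as (requests / 1,000) x price per 1K requests, before VAT."""
    return round(requests / 1000 * price_per_1k, 2)


# Per-1K rates taken from the lower (discounted) prices listed above.
print(estimate_monthly_cost(23_000, 0.88))  # 20.24
print(estimate_monthly_cost(82_000, 0.84))  # 68.88
```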

Need more?

Chat with us and we’ll find the best solution for you

With each plan, you get:

- 99.99% success rate

- 100+ pre-built templates

- Support for search, pagination, and filtering

- Results in HTML, JSON, or CSV

- n8n integration

- LLM-ready Markdown format

- MCP server

- JavaScript rendering

- 24/7 tech support

- 14-day money-back option

SSL Secure Payment

Your information is protected by 256-bit SSL

What people are saying about us

We're thrilled to have the support of our 130K+ clients and the industry's best.

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.


Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.


RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.


Cybereg


Decodo blog

Build knowledge on our solutions and improve your workflows with step-by-step guides, expert tips, and developer articles.

Most recent

How To Find All URLs on a Domain

Justinas Tamasevicius

Last updated: Feb 09, 2026

16 min read

Frequently asked questions

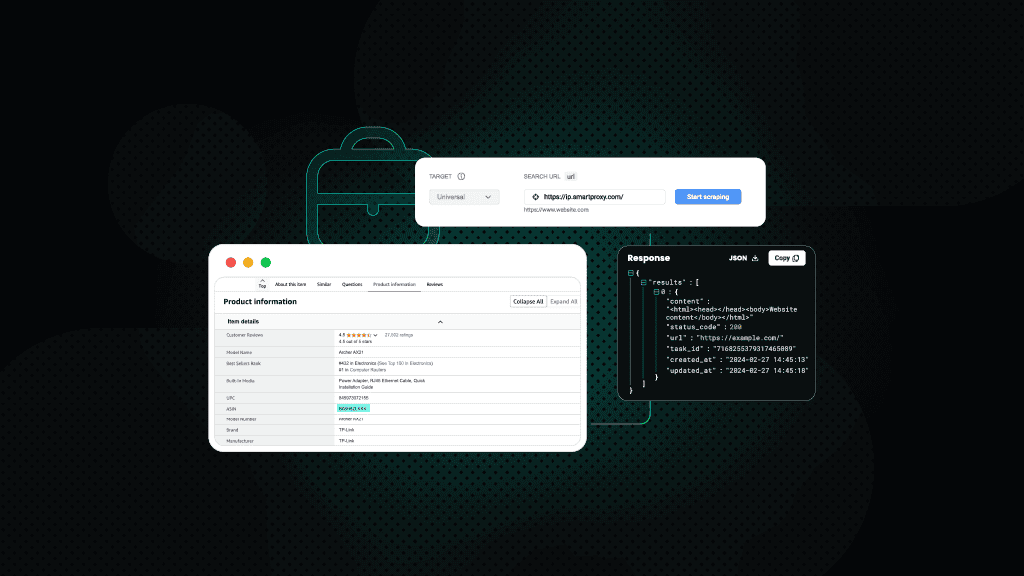

What types of data can the Idealo API extract?

Our Idealo scraper API can extract a wide range of product-related data points, including:

- Product pricing

- Product descriptions

- Retailer names and offers

- Ratings and reviews

- Shipping options and costs

- Product categories and specifications

Data from Idealo can be useful for monitoring pricing changes across multiple retailers, market benchmarking, and assortment optimization.
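As a concrete example of price monitoring across retailers, the minimal sketch below finds the cheapest offer for a product and reports per-retailer price changes between two snapshots. The `Offer` type, the helper names, and the retailer names `ShopA`/`ShopB`/`ShopC` are hypothetical; in practice the prices would come from the data you extract.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    retailer: str
    price: float  # in the listing currency, e.g. EUR


def cheapest_offer(offers: list[Offer]) -> Offer:
    """Return the lowest-priced offer for one product."""
    return min(offers, key=lambda o: o.price)


def price_changes(previous: dict[str, float], current: dict[str, float]) -> dict[str, float]:
    """Per-retailer price delta (current - previous) between two snapshots.

    Retailers missing from either snapshot, and unchanged prices, are skipped.
    """
    return {
        retailer: round(current[retailer] - previous[retailer], 2)
        for retailer in previous.keys() & current.keys()
        if current[retailer] != previous[retailer]
    }


yesterday = {"ShopA": 199.99, "ShopB": 205.0}
today = {"ShopA": 189.99, "ShopB": 205.0, "ShopC": 210.0}
print(price_changes(yesterday, today))  # {'ShopA': -10.0}
print(cheapest_offer([Offer("ShopA", 189.99), Offer("ShopB", 205.0)]))
```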

What data formats does the Idealo API provide?

Web Scraping API responses are retrieved in HTML format by default. HTML is the raw web page code, which contains all the visible and valuable product data, but it’s unstructured and requires parsing to extract meaningful information. Developers typically use CSS selectors, XPath, or automated parsing tools to identify the specific data points they need, such as pricing, product names, or stock status.
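To show what parsing raw HTML involves, here is a minimal sketch that pulls product titles and prices out of a page. Instead of CSS selectors or XPath, it uses Python's standard-library `html.parser`, so it runs without third-party packages. The class names `product-title` and `offer-price` and the sample markup are hypothetical, not Idealo's real page structure; inspect the actual HTML to find the right selectors.

```python
from __future__ import annotations

from html.parser import HTMLParser


class OfferParser(HTMLParser):
    # Hypothetical mapping: CSS class on the page -> output field name.
    FIELDS = {"product-title": "title", "offer-price": "price"}

    def __init__(self) -> None:
        super().__init__()
        self.values: dict[str, list[str]] = {name: [] for name in self.FIELDS.values()}
        self._field: str | None = None  # field whose text we expect next

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        for css_class, field in self.FIELDS.items():
            if css_class in classes:
                self._field = field

    def handle_data(self, data):
        text = data.strip()
        if self._field and text:
            self.values[self._field].append(text)
            self._field = None  # keep only the first text chunk per element


def parse_offers(html: str) -> list[dict[str, str]]:
    parser = OfferParser()
    parser.feed(html)
    parser.close()
    # Pair titles and prices by their order on the page.
    return [
        {"title": t, "price": p}
        for t, p in zip(parser.values["title"], parser.values["price"])
    ]


SAMPLE_HTML = """
<div class="offer"><h2 class="product-title">Example Headphones</h2>
<span class="offer-price">89.99 EUR</span></div>
<div class="offer"><h2 class="product-title">Example Speaker</h2>
<span class="offer-price">49.00 EUR</span></div>
"""
print(parse_offers(SAMPLE_HTML))
```

Pairing by position keeps the sketch short but assumes every product has exactly one price; a sturdier parser would group fields per offer container.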

You can also parse this HTML with our free AI Parser to instantly convert it into structured JSON, a format widely used for web data processing and for training AI tools and agents. JSON is lightweight, readable, and integrates smoothly with Python, JavaScript, and most modern programming environments. Its structure makes it easy to map extracted data into databases, dashboards, or third-party tools.
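As an example of mapping JSON data into a database, the sketch below serializes a list of product records to JSON and loads it into an in-memory SQLite table using Python's standard library. The record fields (`title`, `retailer`, `price`) and values are hypothetical and do not reflect the AI Parser's actual output schema.

```python
import json
import sqlite3

# Hypothetical records, shaped like the output of an HTML-parsing step.
records = [
    {"title": "Example Headphones", "retailer": "ShopA", "price": 89.99},
    {"title": "Example Speaker", "retailer": "ShopB", "price": 49.0},
]

# Serialize to JSON for storage or for handing to another tool.
payload = json.dumps(records, ensure_ascii=False)

# Load the same JSON into a database table (in-memory SQLite here).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE offers (title TEXT, retailer TEXT, price REAL)")
conn.executemany(
    "INSERT INTO offers (title, retailer, price) VALUES (:title, :retailer, :price)",
    json.loads(payload),
)
cheapest = conn.execute(
    "SELECT title, price FROM offers ORDER BY price LIMIT 1"
).fetchone()
print(cheapest)  # ('Example Speaker', 49.0)
```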

Can I use the Idealo API for commercial projects?

Absolutely! You can use our Web Scraping API for commercial projects, provided you follow Idealo’s terms of service. Many businesses use Idealo data to track competitor prices, automate repricing, or identify market trends. Since Idealo doesn’t offer an official public API for this, most users opt for third-party tools that collect the data in a reliable and ethical manner. Just double-check that your setup is compliant and can handle things smoothly at scale.

What are the available pricing plans for the Idealo API?

Idealo doesn't publish pricing plans for an official API. However, the pricing for Decodo’s Web Scraping API is flexible and affordable for both individuals and fast-paced eCommerce businesses. You can get started with a 7-day free trial and 1K requests, and then choose from two pricing options:

- Cost-efficient Core subscription with the most essential web scraping features.

- Powerful Advanced subscription with a range of features that help to collect data from even the most advanced websites.

How reliable is the Idealo API service?

Reliability depends on how you're accessing the data. With our Web Scraping API, you get 99.99% uptime and a 100% success rate. You also gain access to over 125 million IPs, which let you bypass CAPTCHAs and IP blocks with ease and keep data extraction simple and effective.

Where can I find documentation and troubleshooting resources?

To get the most out of your Web Scraping API, you can explore our quick start guide, extensive documentation, or GitHub for advanced code examples and effective data collection strategies.

Get Idealo Scraper API for Your Data Needs

Gain access to real-time data at any scale without worrying about proxy setup or blocks.
