Effortless Proxy Management With Scrapoxy

Seamlessly integrate Scrapoxy for easy proxy management and IP rotation, ensuring private browsing and secure, scalable web scraping.

- 115M+ ethically-sourced IPs

- #1 response time

- 99.86% success rate

- 99.99% uptime

- <0.6s response time

What is Scrapoxy?

Scrapoxy is a powerful proxy aggregator that enables centralized management of multiple proxies within a single interface. It generates a pool of private proxies from multiple proxy services, intelligently routes traffic, and handles proxy rotation for you.

User-friendly interface

Simplify the management of proxy instances with an intuitive user interface, making it easy to set up, configure, and monitor proxies efficiently.

Ban management

Overcome bans by injecting proxy names into HTTP responses, allowing scrapers to notify Scrapoxy to remove problematic proxies from the pool when bans are detected.

Traffic monitoring

Monitor traffic, measure incoming and outgoing data, and track key metrics like request counts, active proxy numbers, and requests per proxy.
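The ban management flow described above can be sketched from the scraper's side. This is only an illustration: it assumes Scrapoxy reports the proxy name in an `x-scrapoxy-proxyname` response header and that HTTP 403 or 429 indicates a ban. The helper `banned_proxy_name` and the status-code set are hypothetical, and the call that asks Scrapoxy to remove the proxy is left out because it depends on your Scrapoxy API setup.

```python
# Response header in which Scrapoxy is assumed to report the serving proxy.
PROXY_NAME_HEADER = "x-scrapoxy-proxyname"

# Status codes treated as a ban signal here; an assumption to tune per target site.
BAN_STATUS_CODES = {403, 429}


def banned_proxy_name(status_code, headers):
    """Return the name of the proxy to report, or None if nothing should be reported."""
    if status_code not in BAN_STATUS_CODES:
        return None
    # HTTP header names are case-insensitive, so normalise before the lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    return normalised.get(PROXY_NAME_HEADER)


if __name__ == "__main__":
    print(banned_proxy_name(403, {"X-Scrapoxy-Proxyname": "proxy-a1"}))
    print(banned_proxy_name(200, {"X-Scrapoxy-Proxyname": "proxy-a1"}))
```

A scraper would call this helper after each response and pass any returned name to whatever mechanism it uses to notify Scrapoxy.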

Why residential proxies?

A residential proxy serves as an intermediary, giving users an IP address from a genuine desktop or mobile device connected to a local network. Because of this origin, residential proxies are well suited to overcoming geo-restrictions, bypassing CAPTCHAs, managing multiple accounts, and conducting web testing with Scrapoxy.

Decodo offers top-notch residential proxies with an extensive pool of 115M+ IPs across 195+ locations. With an average response time under 0.6 seconds, a 99.86% success rate, and an affordable entry point with Pay As You Go, Decodo is an excellent deal for hustlers and fast-growing companies.

Set up Decodo proxies with Scrapoxy

To get started, you'll need 3 things: Docker, Scrapoxy, and proxies. Follow each step below to set everything up.

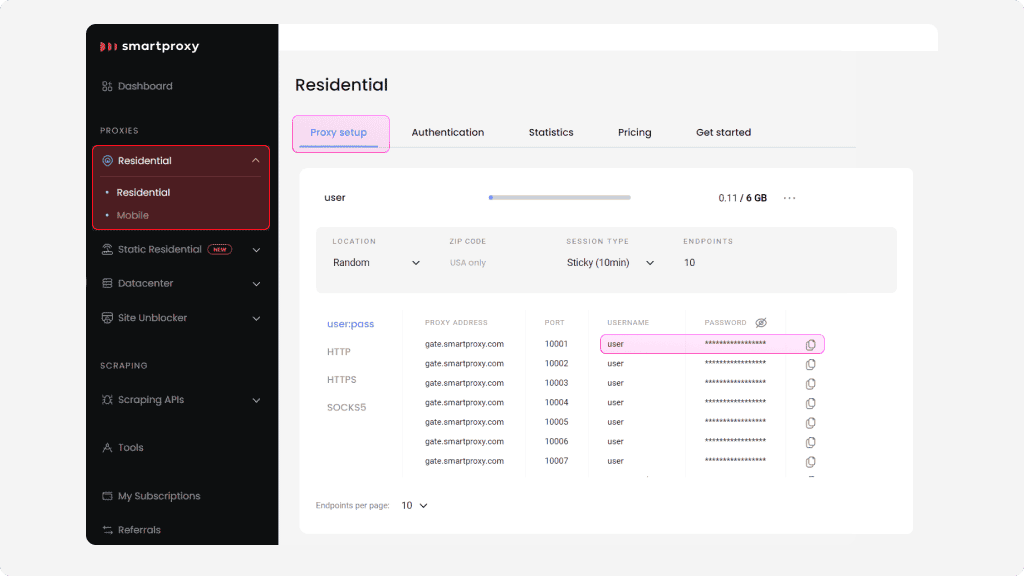

Getting residential proxies

Log in to your Decodo dashboard, find residential proxies by choosing Residential under the Residential column in the left panel, and select a plan that suits your needs. Then, open the Proxy setup tab and copy the username and password. Save them, as you'll need them later.

Installation

To use Scrapoxy, you'll need to install Docker first. Then, follow these steps:

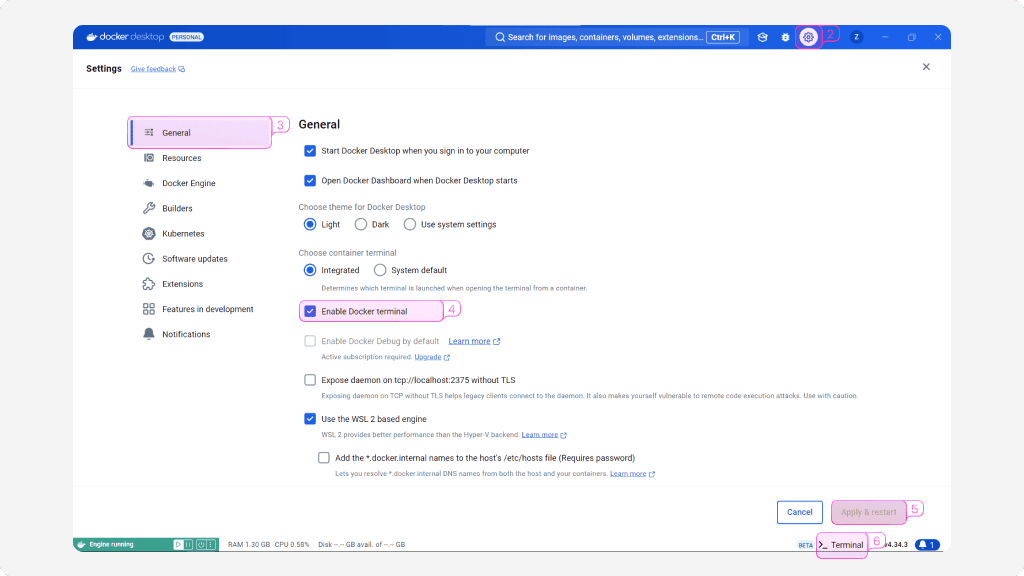

1. Launch the Docker Desktop application.

2. Open the Settings menu.

3. Go to the General tab.

4. Enable Docker terminal.

5. Click Apply & restart.

6. Launch the Terminal from the bottom-right corner and enter the following command (replace admin, password, secret1, and secret2 with your custom values):

7. Navigate to http://localhost:8890 and log in with the username and password you set in the command (not the proxy authentication information).
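For reference, the launch command from the Terminal step can be assembled as in the sketch below. It is an assumption based on Scrapoxy's Docker quick start rather than a copy of the original command: the environment variable names, the `scrapoxy/scrapoxy` image name, and the `./scrapoxy:/cfg` volume should be checked against the official documentation. Ports 8888 (proxy endpoint) and 8890 (web interface) match the addresses used elsewhere in this guide. The function `scrapoxy_docker_command` is a hypothetical helper.

```python
import shlex


def scrapoxy_docker_command(username, password, backend_secret, frontend_secret,
                            image="scrapoxy/scrapoxy"):
    """Build the argument list for launching Scrapoxy in Docker."""
    env = {
        # Variable names are assumptions taken from Scrapoxy's Docker quick start.
        "AUTH_LOCAL_USERNAME": username,
        "AUTH_LOCAL_PASSWORD": password,
        "BACKEND_JWT_SECRET": backend_secret,
        "FRONTEND_JWT_SECRET": frontend_secret,
        "STORAGE_FILE_FILENAME": "/cfg/scrapoxy.json",
    }
    cmd = [
        "docker", "run", "-d",
        "-p", "8888:8888",        # proxy endpoint used by scrapers
        "-p", "8890:8890",        # web interface
        "-v", "./scrapoxy:/cfg",  # assumed location for the persisted configuration
    ]
    for name, value in env.items():
        cmd += ["-e", f"{name}={value}"]
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # Print the command so it can be pasted into the Docker terminal.
    print(shlex.join(scrapoxy_docker_command("admin", "password", "secret1", "secret2")))
```

Printing the command instead of running it keeps the sketch side-effect free; paste the output into the Docker terminal after replacing the four placeholder values.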

You can also use NPM. Check out the official documentation for instructions on how to set it up.

Configuration

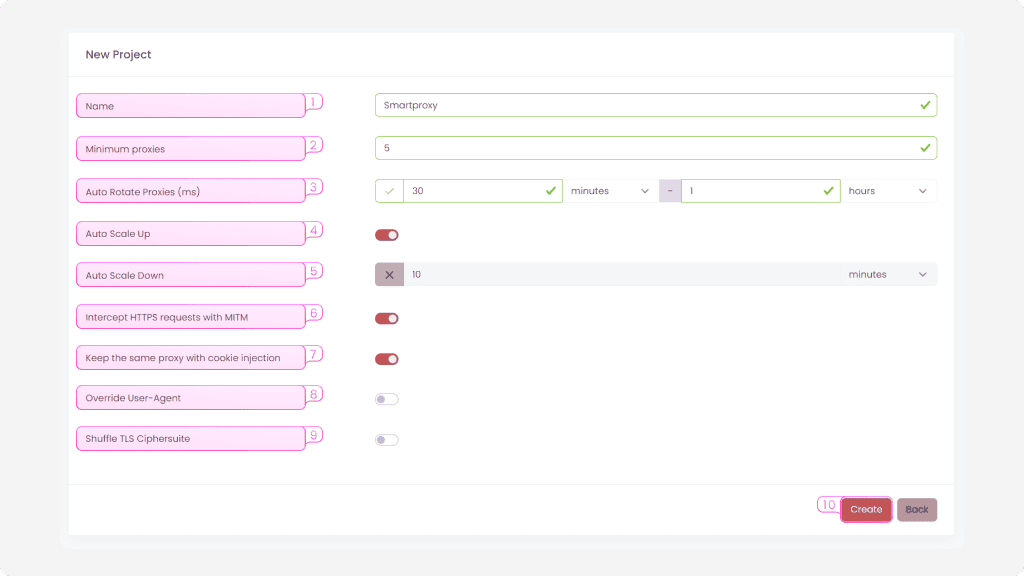

After logging in, you'll be prompted to create a new project. Enter the following information and select options:

1. Name. Create a custom name for your project.

2. Minimum proxies. Set the minimum number of proxies that should be used in this project.

3. Auto Rotate Proxies (ms). If enabled, proxies will rotate at a random point within the provided interval.

4. Auto Scale Up. If enabled, all proxies will be started upon sending a request.

5. Auto Scale Down. If enabled, all proxies will be stopped when no requests are sent.

6. Intercept HTTPS requests with MITM. If enabled, Scrapoxy will intercept and modify HTTPS requests and responses.

7. Keep the same proxy with cookie injection. If enabled, Scrapoxy will inject a cookie to maintain the same proxy for a single browser session.

8. Override User-Agent. If enabled, the header will be overridden with the value assigned to a proxy instance. This ensures all requests within the instance have the same User-Agent header.

9. Shuffle TLS Ciphersuite. If enabled, Scrapoxy will assign a random TLS cipher suite to each proxy instance to avoid TLS fingerprinting.

10. Once you're done setting up, click Create.

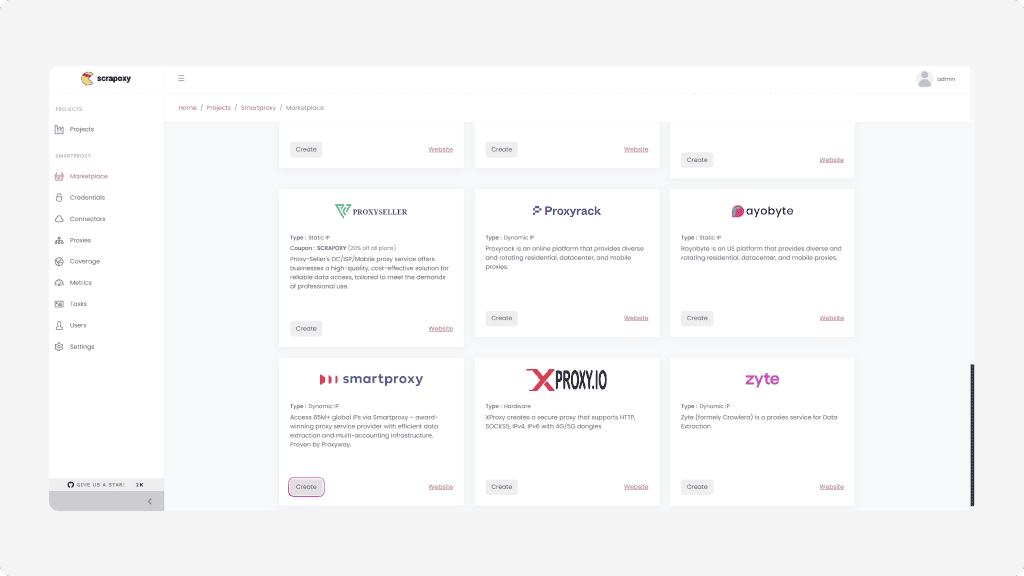

With the project set up, choose a provider from the Marketplace. Find Decodo in the list and click Create.

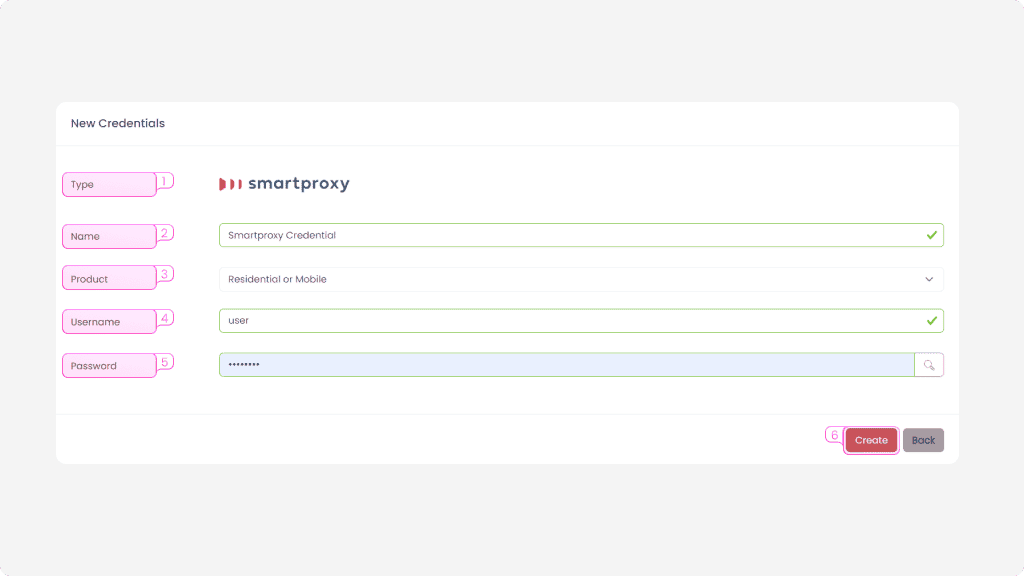

In the next step, enter the credentials for Decodo:

- Name. Create a custom name for the credentials. This can help differentiate between them if you're using several products.

- Product. Select the product you're using from the dropdown. In our example case, select Residential or Mobile.

- Username. Enter the username you previously saved from the Decodo dashboard.

- Password. Enter the password you previously saved from the Decodo dashboard.

- Click Create to save the credentials.

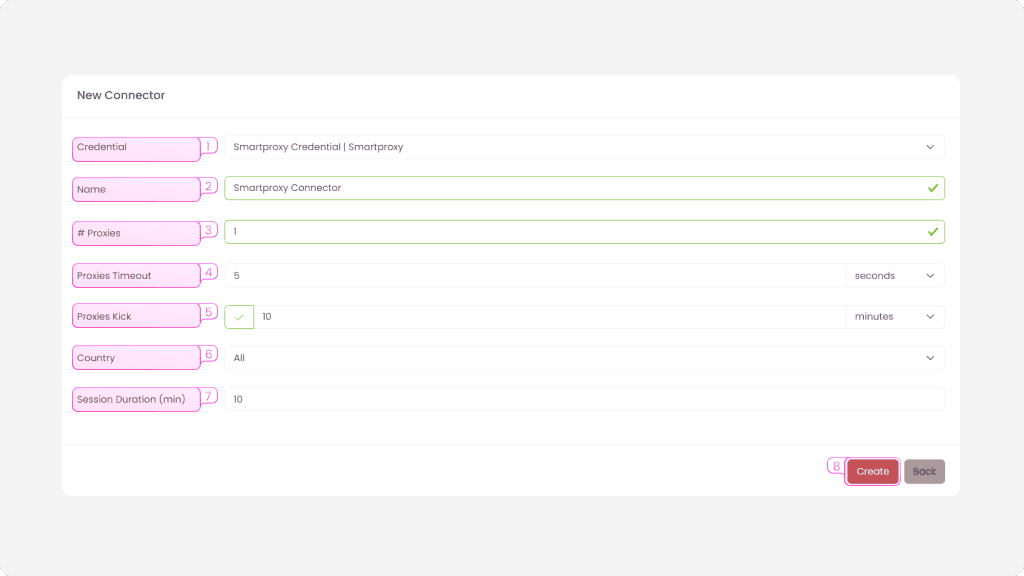

The final step is to create a Connector:

- Credentials. Select the credentials that you just created from the dropdown list.

- Name. Enter a custom name for the Connector.

- # Proxies. The maximum number of proxies that the connector can provide and that you intend to use. You can adjust this later.

- Proxies Timeout. Set the time to attempt to connect to a proxy server before considering it offline.

- Proxies Kick. If enabled, set the maximum duration for a proxy to be offline before being removed from the pool.

- Country. Select a specific country to use proxies from or leave it at All.

- Session Duration (min). Set the duration of the session.

- Click Create.

- Once created, you'll see it in the list of Connectors. Enable it by toggling the Start/Stop this connector button.

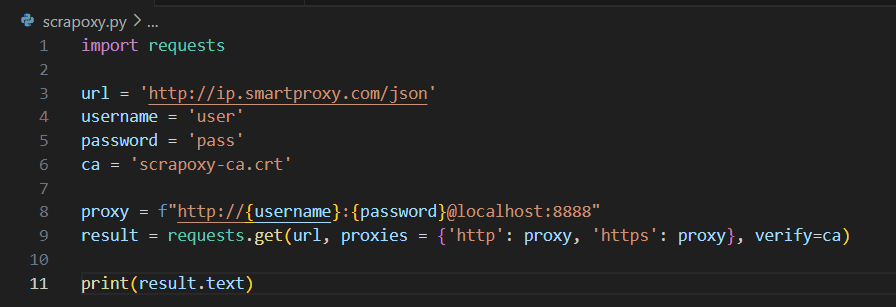

Now that everything's ready, you can integrate Scrapoxy into your code and see how it works. Here's an example of how to use Python together with the requests library:
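A minimal sketch of such a script follows; treat it as an illustration rather than a definitive implementation. It assumes the default endpoint localhost:8888, placeholder values for `username` and `password`, and a CA file saved as `scrapoxy-ca.crt`. The helper names `proxy_settings` and `fetch` are hypothetical.

```python
from urllib.parse import quote

import requests

username = "USERNAME"        # project username from the Scrapoxy Settings
password = "PASSWORD"        # project password from the Scrapoxy Settings
ca = "scrapoxy-ca.crt"       # CA certificate downloaded from Settings (assumed file name)
endpoint = "localhost:8888"  # Scrapoxy proxy endpoint


def proxy_settings(username, password, endpoint):
    """Return a requests-style proxies mapping that routes traffic through Scrapoxy."""
    # Percent-encode the credentials so special characters survive inside the URL.
    url = f"http://{quote(username, safe='')}:{quote(password, safe='')}@{endpoint}"
    return {"http": url, "https": url}


def fetch(url):
    """Request url through Scrapoxy, verifying TLS against the Scrapoxy CA file."""
    return requests.get(
        url,
        proxies=proxy_settings(username, password, endpoint),
        verify=ca,
        timeout=30,
    )
```

With Scrapoxy running and the connector started, calling `fetch("https://ip.decodo.com/json")` (an assumed IP-echo URL; any HTTPS page works) and printing the response's `text` should show the address of the proxy that served the request.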

Replace the username and password with the credentials from the project Settings in the Scrapoxy interface.

You must also download the CA certificate from Settings and either place the file in the same directory as the script or set the ca variable to its full path. The endpoint should always be localhost:8888 unless configured otherwise.

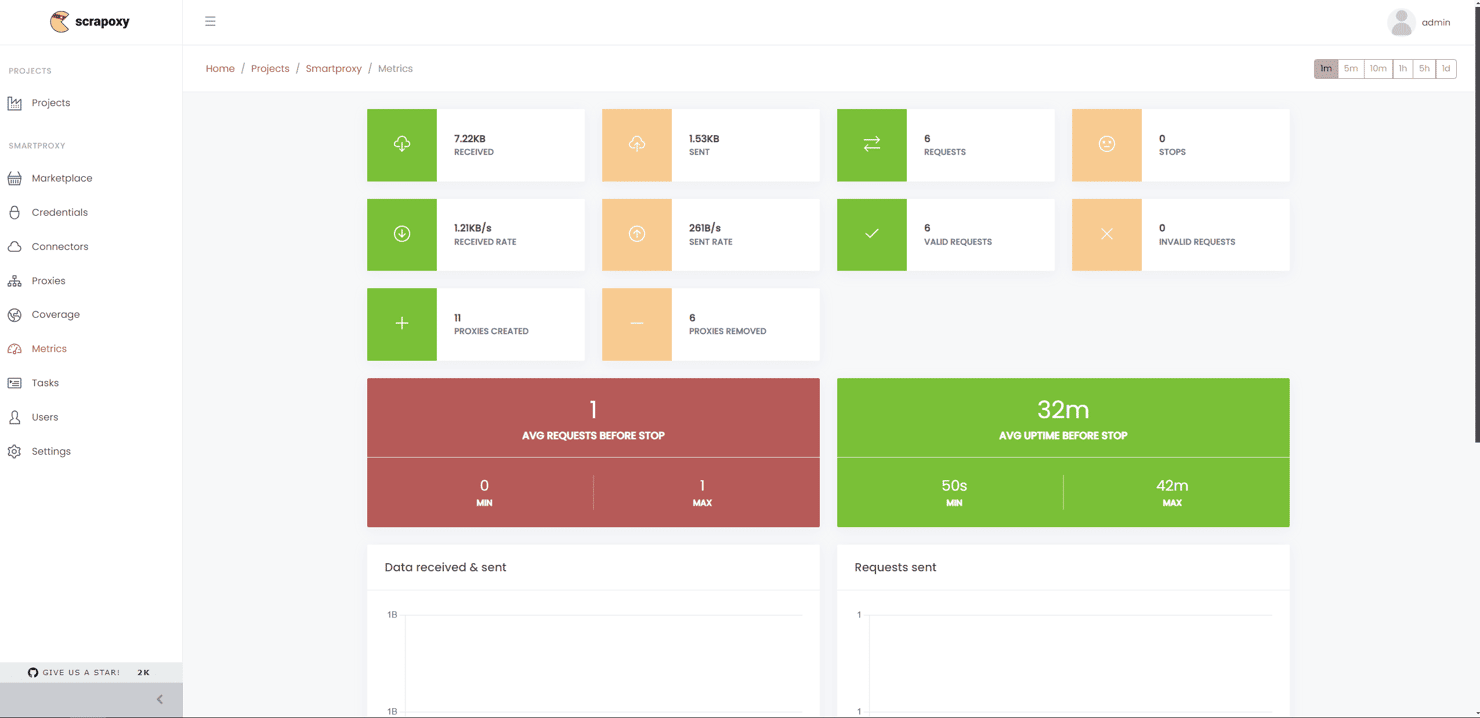

To view and manage your proxies, you can use the Proxies, Coverage, and Metrics tabs in Scrapoxy. These provide information about active proxies, what countries they're located in, upload and download speeds, the number of requests, and their status.

For more information, check out the official documentation.

Buy residential proxies for any demand

With each residential proxy plan, you access:

- 115M+ ethically-sourced IPs in 195+ locations

- HTTP(S) & SOCKS5 support

- Continent, country, state, city, ZIP code, and ASN-level targeting

- Rotating and sticky session options

- <0.6s avg. response time

- 99.86% success rate

- 99.99% uptime

- Seamless integration with scraping tools and bots

- 24/7 tech support

- 14-day money-back

What people are saying about us

We're thrilled to have the support of our 130K+ clients and the industry's best

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.

Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.

RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.

Cybereg

Explore our other proxy products

What are proxies?

A proxy is an intermediary between your device and the internet that forwards your requests while masking your IP address.

Residential Proxies

from $1.5/GB

Real, physical device IPs that provide a genuine online identity and enhance your anonymity online.

ISP Proxies

from $0.27/IP

IPs assigned by Internet Service Providers (ISPs), offering efficient and location-specific online access with minimal latency.

Mobile Proxies

from $2.25/GB

Mobile-device-based IPs offering anonymity and real user behavior for mobile-related activities on the internet.

Datacenter Proxies

from $0.020/IP

Remote computers with unique IPs for tasks requiring scalability, fast response times, and reliable connections.

Site Unblocker

from $0.95/1K req

A powerful application for all proxying activities offering dynamic rendering, browser fingerprinting, and much more.

Frequently asked questions

What is Scrapoxy?

Scrapoxy is a powerful proxy aggregator that manages and rotates proxies, helping developers scale web scraping operations while minimizing the risk of bans.

What is proxy scraping?

Proxy scraping involves using proxies to collect data from websites without revealing the scraper's real IP address, allowing for anonymous and distributed web scraping while avoiding blocks or rate limits.