Web Scraping at Scale Explained

Scraping projects usually start simple: a Python script, the Beautiful Soup parsing library, and a list of URLs. That's enough for small jobs. Once you're past a few hundred thousand pages, you start hitting problems: timeouts, IP bans, parsers returning empty fields because someone changed a div to a span. At that point, it's no longer a coding problem; it's an infrastructure problem. This guide covers the architecture, proxy management, anti-bot evasion, pipelines, costs, compliance, where the industry is headed, and build-vs-buy decisions.

Justinas Tamasevicius

Last updated: Feb 18, 2026

10 min read

TL;DR: What you need to know about web scraping at scale

Web scraping at scale is an infrastructure problem, not a coding problem. Once you're past tens of thousands of pages, you need coordinated layers: a request orchestrator, a proxy routing layer, a rendering layer for JavaScript-heavy sites, resilient parsers, a data pipeline with validation built in, and monitoring across all of them.

Anti-bot systems now update multiple times per week, and a single defense upgrade on a target site can increase your costs 10-50x overnight. Proxy bandwidth prices have dropped sharply, but the average cost per successful payload has risen because the main costs are now retries, rendering, and evasion, not bandwidth.

Most experienced teams adopt a hybrid build-vs-buy model: custom parsers and business logic in-house, proxy routing and anti-bot evasion delegated to managed infrastructure.

What does "web scraping at scale" mean?

Scraping "at scale" isn't defined by a single number. It's what happens when volume, concurrency, distribution, and reliability all have to work at the same time. Any team serious about web data treats it as an ongoing operation, not a one-off extract.

In practice, large-scale web scraping means millions of requests per day across hundreds or thousands of parallel workers, distributed across regions. A 2% failure rate across 1M requests means 20K data gaps your downstream systems have to handle.

At this level, success rate matters more than speed. A scraper that finishes in 2 hours but loses 15% of its data is worse than one that takes 4 hours at 99.2% completeness.

High-volume web scraping means managing rate-limited queues, dynamic throttling, connection pooling, and multi-region failover at the same time, all held together by clear SLOs (service-level objectives) for freshness and field completeness, with error budgets that define how much failure is acceptable.
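
The error-budget idea reduces to simple arithmetic. A minimal sketch, assuming the SLO is expressed as a target success ratio over a fixed request volume; the function names are illustrative and not taken from any monitoring tool:

```python
def error_budget(total_requests: int, slo_target: float) -> int:
    """Failed requests allowed in a period before the completeness SLO is breached."""
    if not 0.0 < slo_target <= 1.0:
        raise ValueError("slo_target must be in (0, 1]")
    return round(total_requests * (1.0 - slo_target))


def budget_remaining(total_requests: int, failures: int, slo_target: float) -> float:
    """Fraction of the error budget still unspent; negative means the SLO is blown."""
    budget = error_budget(total_requests, slo_target)
    if budget == 0:
        return 1.0 if failures == 0 else float("-inf")
    return (budget - failures) / budget


# 1M requests at a 99.2% completeness target leaves room for 8,000 failures.
# A 2% failure rate (20K gaps) spends that budget 2.5 times over.
print(error_budget(1_000_000, 0.992))              # 8000
print(budget_remaining(1_000_000, 20_000, 0.992))  # -1.5
```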

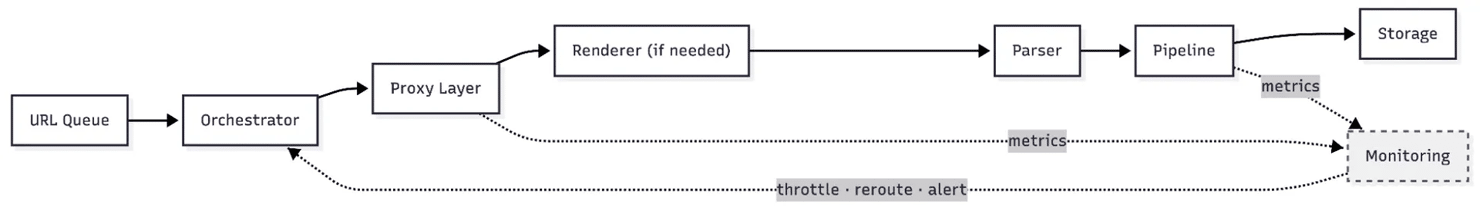

High-level architecture of a scalable scraping system

When volume grows from thousands of pages to millions, your scraping system begins to resemble any other distributed service: modular, fault-tolerant, and complex to operate. Most production systems share the same core components, even if implemented differently. It matters less who wrote the scraper and more who's responsible for the data coming out the other end.

Request orchestrator

The orchestrator is your control plane. It manages the URL queue, assigns tasks to workers, tracks job state, and enforces scheduling logic: deciding what gets scraped, when, and how aggressively.

In modern stacks, this runs on distributed task queues like Celery, Bull, or cloud-native alternatives like AWS Step Functions. It needs to support priority-based scheduling (high-value URLs first), deduplication (don't re-scrape unchanged pages), and dynamic concurrency adjustment based on target-site response patterns.
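
A minimal in-memory sketch of the scheduling core, assuming a single process. `CrawlFrontier` is a hypothetical name; in production this state would sit in Celery, Bull, or a similar queue rather than in a Python heap. It shows priority ordering, URL-level deduplication, and content hashing to detect pages that haven't changed since the last crawl:

```python
import hashlib
import heapq
import itertools
from typing import Optional


class CrawlFrontier:
    """Priority URL queue with URL-level and content-level deduplication.

    Lower priority numbers pop first. Content hashes let the caller skip
    downstream work when a re-scraped page has not changed.
    """

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()  # tie-breaker: FIFO within a priority
        self._queued: set = set()
        self._content_hashes: dict = {}

    def push(self, url: str, priority: int = 10) -> bool:
        if url in self._queued:
            return False  # already waiting in the queue
        self._queued.add(url)
        heapq.heappush(self._heap, (priority, next(self._counter), url))
        return True

    def pop(self) -> Optional[str]:
        if not self._heap:
            return None
        _, _, url = heapq.heappop(self._heap)
        self._queued.discard(url)
        return url

    def content_changed(self, url: str, body: bytes) -> bool:
        """Store the page hash; return False if it matches the previous crawl."""
        digest = hashlib.sha256(body).hexdigest()
        changed = self._content_hashes.get(url) != digest
        self._content_hashes[url] = digest
        return changed


frontier = CrawlFrontier()
frontier.push("https://example.com/blog/post-1", priority=50)
frontier.push("https://example.com/product/42", priority=1)  # high-value URL
frontier.push("https://example.com/product/42", priority=1)  # duplicate, ignored
print(frontier.pop())  # https://example.com/product/42
```

Hashing only tells you a page was unchanged after you fetched it. Where a target supports HTTP conditional requests (`ETag` with `If-None-Match`, or `If-Modified-Since`), a `304 Not Modified` response avoids downloading the body at all.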

More advanced systems now use ML models to optimize recrawl frequency per URL. A static blog post doesn't need daily refreshes, but an eCommerce product page with volatile pricing might need hourly updates.
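
Before reaching for ML, a simple adaptive heuristic captures the same idea. This sketch swaps in a multiplicative rule, not an ML model: shrink a URL's recrawl interval when the page changed, grow it when it didn't. The bounds and factors are assumptions to tune per target:

```python
from dataclasses import dataclass


@dataclass
class RecrawlPolicy:
    """Per-URL recrawl interval that adapts to how often the page changes.

    Heuristic only: halve the interval on a detected change, grow it by 1.5x
    when the page comes back unchanged, and clamp to [min_h, max_h].
    """
    interval_h: float = 24.0
    min_h: float = 1.0          # e.g. volatile product pricing
    max_h: float = 24.0 * 30    # e.g. static blog posts
    shrink: float = 0.5
    grow: float = 1.5

    def update(self, changed: bool) -> float:
        factor = self.shrink if changed else self.grow
        self.interval_h = min(self.max_h, max(self.min_h, self.interval_h * factor))
        return self.interval_h


price_page = RecrawlPolicy()
for _ in range(5):
    price_page.update(changed=True)
print(price_page.interval_h)  # 1.0  (24 -> 12 -> 6 -> 3 -> 1.5 -> clamped to 1.0)

blog_post = RecrawlPolicy()
for _ in range(3):
    blog_post.update(changed=False)
print(blog_post.interval_h)   # 81.0 (24 -> 36 -> 54 -> 81)
```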

Proxy layer

The proxy layer sits between your orchestrator and the open web. It handles IP rotation, session management, and geographic targeting. At scale, this is typically the largest variable cost, and the layer where misconfiguration has the highest impact.

Your orchestrator should never care which specific IP a request uses. It requests a connection from the proxy layer that matches the target's requirements.

Rendering layer

Not every page needs a browser, and spinning one up when you don't need to adds unnecessary compute cost. Static HTML pages can be fetched with lightweight HTTP clients at a fraction of the cost.

But JavaScript-heavy sites require a headless browser. Production teams typically run rendering clusters with Playwright under Kubernetes, keeping memory predictable by recycling browser contexts between jobs. When a target exposes JSON endpoints or serves partial HTML, the system should skip rendering entirely.

Parser

The parser handles actual data extraction, turning raw HTML or JSON into structured records. At scale, parsers need to be versioned, testable, and resilient to minor DOM changes.

AI-driven extraction now supplements traditional CSS/XPath selectors. Across 3,000 pages, a McGill University study found AI extraction hit 98.4-100% accuracy, with semantic approaches maintaining performance even when page structures changed, compared to rigid selectors that broke on every redesign.

But AI extraction has a critical limit: numerical precision. In testing, LLM-based scrapers regularly mix up VAT-inclusive and VAT-exclusive pricing, misread currency formats, and conflate unit and total prices, with error rates high enough to make them unusable for mission-critical data.

The cost gap is wide: leading LLM extraction tools cost $666-$3,025 per 1M pages, compared to $1-5 per 1M for deterministic scrapers (ScrapeOps, 2025). Most teams use a hybrid: deterministic code for high-stakes numerical fields, AI for layout resilience and unstructured content.

For the AI tier specifically (layout-resilient extraction of text, categories, descriptions, and other non-numerical fields), tools like Decodo's AI Parser generate structured JSON from any HTML using natural-language prompts, without writing selectors. Teams building agentic workflows can also plug Decodo into their LLM stack via the LangChain integration, so agents can trigger scrapes on their own. For more on using LLMs for extraction, see our guide to ChatGPT-based web scraping.

Data pipeline

The gap between "scraper returned HTML" and "data is in the warehouse, ready to use" is bigger than most teams expect. Closing that gap is what the pipeline does: deduplication, schema enforcement, format standardization, and storage. Stream-oriented architectures (Kafka, Kinesis) are replacing batch processing for time-sensitive use cases.

The pipeline also needs lineage tracking. When something breaks, you need to trace the failure back to its origin and trigger automatic backfills.

Monitoring

Without observability, scraper issues can go undetected for days, gradually degrading data quality. Your monitoring layer should track success rates, response times, block rates, and data completeness, all in real time.

Monitor shifts in median or p95 latency, not just hard failures.
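
A minimal sketch of that check, assuming you keep a baseline sample of response times per domain (for example, from the previous day) and compare it with a recent window; the 1.5x ratio is an assumption to tune. It uses only the standard library's `statistics` module:

```python
import statistics


def p95(samples):
    """95th percentile via the stdlib's inclusive quantile method (needs 2+ samples)."""
    return statistics.quantiles(samples, n=100, method="inclusive")[94]


def latency_shift(baseline_ms, current_ms, ratio=1.5):
    """Return which latency statistics drifted above `ratio` x their baseline."""
    alerts = []
    if statistics.median(current_ms) > ratio * statistics.median(baseline_ms):
        alerts.append("median")
    if p95(current_ms) > ratio * p95(baseline_ms):
        alerts.append("p95")
    return alerts


baseline = list(range(100, 200))      # yesterday: 100-199 ms, evenly spread
current = [120] * 90 + [900] * 10     # today: fast median, slow tail
print(latency_shift(baseline, current))  # ['p95']
```

The median barely moves here, so a dashboard that tracks only averages or hard failures would miss the slow tail that the p95 check catches.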

Handling dynamic and JavaScript-heavy websites

Most websites now render content client-side. A plain HTTP GET returns a near-empty shell.

Your scraper receives no useful data unless it executes JavaScript. The signals that a page requires rendering: empty or minimal HTML responses, AJAX/XHR calls that load data after page load, content that appears only after scrolling (infinite scroll), and pages protected by JavaScript challenges.
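
A cheap first-pass check for the "near-empty shell" signal can run on the raw HTTP response before any browser is launched. This is a heuristic sketch using only the standard library; the mount-point IDs and thresholds are assumptions, and it cannot see XHR calls or scroll-triggered loading, which only show up at render time:

```python
from html.parser import HTMLParser

SPA_ROOT_IDS = {"root", "app", "__next", "__nuxt"}  # common framework mount points (assumed list)
SKIP_TAGS = {"script", "style", "noscript"}


class ShellInspector(HTMLParser):
    """Measure visible text and count <script> tags in a raw HTML response."""

    def __init__(self):
        super().__init__()
        self.text_chars = 0
        self.script_tags = 0
        self.spa_root = False
        self._skip_depth = 0  # > 0 while inside script/style/noscript

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1
            if tag == "script":
                self.script_tags += 1
        if dict(attrs).get("id") in SPA_ROOT_IDS:
            self.spa_root = True

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def needs_rendering(html, min_text_chars=200):
    """Heuristic: almost no visible text plus an SPA mount point or several scripts."""
    inspector = ShellInspector()
    inspector.feed(html)
    inspector.close()
    thin = inspector.text_chars < min_text_chars
    return thin and (inspector.spa_root or inspector.script_tags >= 3)


shell = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'
print(needs_rendering(shell))  # True
```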

Headless browsers and when to skip them

As of early 2026, Playwright is the most popular choice for scraping dynamic content, having overtaken Puppeteer and Selenium in scraping-specific workflows. Its multi-browser support (Chromium, Firefox, WebKit), built-in auto-wait mechanisms, and network interception API make it well suited to scraping.

Selective rendering cuts the most cost: avoid headless browser overhead on pages that don't require it. Before spinning up a headless instance, check whether the data you need is available in an API response, an embedded JSON-LD block, or server-rendered HTML. Many sites include structured data in <script type="application/ld+json"> tags that's machine-readable and far cheaper to extract.
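
Checking for JSON-LD first is cheap. A standard-library sketch that pulls every `application/ld+json` block out of raw HTML; the sample markup is hypothetical, and real pages may wrap products in `@graph` arrays or lists that you would still need to walk:

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of every <script type="application/ld+json"> tag."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False
        self._buf = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").strip().lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buf = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                self.blocks.append(json.loads("".join(self._buf)))
            except json.JSONDecodeError:
                pass  # skip a malformed block instead of failing the whole page


def extract_jsonld(html):
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.blocks


page = ('<html><head><script type="application/ld+json">'
        '{"@type": "Product", "name": "Widget", '
        '"offers": {"price": "19.99", "priceCurrency": "EUR"}}'
        '</script></head><body><div id="root"></div></body></html>')
for block in extract_jsonld(page):
    if isinstance(block, dict) and block.get("@type") == "Product":
        print(block["name"], block["offers"]["price"])  # Widget 19.99
```

Note that this page would fail a naive "is the body empty?" check, yet the price is already in the initial response with no rendering required.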

For pages that genuinely require rendering, manage your browser fleet like any other compute resource: set memory limits, recycle contexts after each job, and scale rendering pods independently of your crawl workers.

Production teams now use a hybrid scraping pattern: launch a headless browser only to pass the initial JavaScript challenge, extract the session cookie it generates, then immediately kill the browser and hand that cookie to a lightweight HTTP client (like rnet or curl_cffi) for all subsequent requests. This cuts RAM usage and response times while still passing challenge-based defenses.
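
The handoff step in the middle of that pattern is plain data plumbing. A sketch, assuming cookies shaped like the dicts Playwright's `context.cookies()` returns (`name`, `value`, `domain`, `expires`, and others); it builds a `Cookie` header for a given host that you would then pass to your HTTP client. Path, `secure`, and `sameSite` handling are deliberately left out:

```python
import time
from typing import Optional


def cookie_header_for(cookies, host, now: Optional[float] = None) -> str:
    """Build a Cookie header for `host` from browser-exported cookie dicts.

    Sketch only: domain and expiry are checked; path, secure and sameSite are ignored.
    """
    now = time.time() if now is None else now
    pairs = []
    for cookie in cookies:
        domain = cookie["domain"].lstrip(".")
        if not (host == domain or host.endswith("." + domain)):
            continue  # cookie belongs to another site
        expires = cookie.get("expires", -1)
        if expires != -1 and expires < now:
            continue  # -1 marks a session cookie; other values are Unix timestamps
        pairs.append(f"{cookie['name']}={cookie['value']}")
    return "; ".join(pairs)


# Hypothetical cookies exported after the browser passed a challenge.
exported = [
    {"name": "challenge_token", "value": "abc", "domain": ".example.com", "path": "/", "expires": 2_000_000_000},
    {"name": "sess", "value": "xyz", "domain": "shop.example.com", "path": "/", "expires": -1},
    {"name": "tracker", "value": "1", "domain": ".other.org", "path": "/", "expires": -1},
]
print(cookie_header_for(exported, "shop.example.com", now=1_700_000_000))
# challenge_token=abc; sess=xyz
```

When reusing the cookie, keep the User-Agent (and ideally the exit IP) the browser used, since many challenge systems bind the cookie to the client that solved it.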

We cover the rendering decision in more detail in our guides to scraping dynamic content, JavaScript web scraping, and Playwright-based web scraping.

Anti-bot systems and why scale triggers them

Scale is one of the strongest signals to anti-bot systems. A human browsing a site makes maybe 50-100 requests per session. A production scraper makes 50K. Even with perfect headers and rotating proxies, the statistical footprint of automated traffic is different from organic behavior.

Automated traffic now accounts for 51% of all web traffic, surpassing human activity (Imperva). AI crawlers alone generate more than 50B requests to Cloudflare's network every day. And AI crawlers are now among the user agents most often fully blocked in robots.txt files (Known Agents, formerly Dark Visitors).

Defenders now respond with full automation. Two days of unblocking effort used to buy two weeks of access; now it's the other way around.

Anti-bot systems now reconfigure their detection mechanisms continuously. One vendor deployed more than 25 version changes over a 10-month period, often releasing updates multiple times per week, with ML models that adapt in as little as a few minutes. You can't keep up manually at that pace.

Rate-based detection

This is the simplest detection layer. If you're sending requests to a domain faster than any human could browse it, you're flagged. Modern systems like Cloudflare's Bot Management don't just count requests per IP; as of 2025-2026, they've introduced per-customer defense systems that use ML models automatically tuned to each website's specific traffic patterns.

The rate limit threshold isn't static. It adapts based on the site's normal traffic baseline.

IP reputation

Every IP has a reputation score with major anti-bot providers: datacenter (lowest trust), residential (high), mobile (highest, due to Carrier-Grade NAT sharing IPs across many real users).

IP reputation alone isn't enough. No single detection signal works in isolation; modern anti-bot systems layer fingerprinting, behavioral analysis, and IP reputation together, and proxies alone don't determine the outcome.

But IP reputation still matters. On protected targets, a datacenter IP can trigger a block before the other detection layers even evaluate the request. That is the job of the proxy investment: clean residential IPs clear the IP-reputation filter, so your fingerprint and behavioral stealth actually get evaluated.

Browser fingerprinting

Anti-bot systems analyze hundreds of browser characteristics, from canvas fingerprints and WebGL renderers to the order of HTTP/2 header frames, to build a unique device signature.

At the network layer, TLS fingerprinting via JA3 hashes has been a reliable detection signal for years. JA3's successor, JA4, is now a top concern for its ability to fingerprint clients with even greater precision across TLS, HTTP, and other protocols.
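
To see why a non-browser TLS stack stands out, it helps to look at how a JA3 fingerprint is built: the ClientHello's TLS version, cipher suites, extensions, elliptic curves, and point formats are written as decimal values (dash-separated within a field, comma-separated between fields) and MD5-hashed, with GREASE values (RFC 8701) dropped. A sketch with made-up ClientHello values; JA4 uses a different, more structured format that this does not cover:

```python
import hashlib

# GREASE placeholders (0x0A0A, 0x1A1A, ..., 0xFAFA) are random per connection, so JA3 ignores them.
GREASE = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3(version, ciphers, extensions, curves, point_formats):
    """Return (ja3_string, md5_hex) for the given ClientHello fields."""
    def field(values):
        return "-".join(str(v) for v in values if v not in GREASE)

    ja3_string = ",".join([str(version), field(ciphers), field(extensions),
                           field(curves), field(point_formats)])
    return ja3_string, hashlib.md5(ja3_string.encode()).hexdigest()


# Illustrative values only (771 = TLS 1.2 legacy version in the ClientHello).
text, digest = ja3(771, [0x0A0A, 4865, 4866, 4867], [0x1A1A, 0, 23, 65281], [29, 23, 24], [0])
print(text)  # 771,4865-4866-4867,0-23-65281,29-23-24,0
```

Reordering the same ciphers changes the hash, which is why impersonation tools such as curl_cffi have to reproduce a real browser's exact ClientHello rather than just its User-Agent string.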

Standard automation tools like Selenium or Puppeteer carry distinct fingerprints that differ from real browsers. Tools like Camoufox, SeleniumBase UC Mode, and Playwright with stealth plugins patch many of these detectable signs, but stealth tools and detection systems keep evolving against each other.

Evasion now extends beyond patching JavaScript APIs (the earlier Puppeteer-extra-stealth approach) to addressing automation protocols at different levels. The Chrome DevTools Protocol (CDP) is a key detection vector. Tools like Patchright and rebrowser-patches fix specific CDP leaks (such as the detectable Runtime.enable command) while still using CDP as their core communication layer. Pydoll and Selenium Driverless eliminate the WebDriver layer entirely and connect to Chrome via CDP directly, removing driver-related detection signals. Nodriver takes this further by minimizing CDP usage itself, avoiding the protocol patterns that anti-bot systems flag. BotBrowser ships a custom browser built with modified internals.

At the HTTP level, curl_cffi addresses TLS fingerprinting without a browser. It impersonates real browsers' TLS/JA3 and HTTP/2 fingerprints at the network layer, so automated HTTP requests are much harder to distinguish from browser traffic. This is useful for static pages, where a headless browser is unnecessary overhead.

Commercial antidetect browsers have also added automation hooks, making advanced evasion more accessible. Decodo's X Browser is a free option included with any proxy subscription, useful for quick manual testing and multi-profile validation before committing to a full antidetect platform.

Behavioral analysis

Of all detection layers, behavior-based systems are the hardest to beat. Simple scraping setups fail here. Systems like DataDome and HUMAN Security analyze interaction patterns throughout the session. Real humans move mice in erratic, non-linear paths; bots produce straight lines or no movement at all. Humans scroll in bursts with pauses; bots scroll at constant rates.

Human inter-click intervals are irregular; bots cluster at uniform intervals. Humans pause on content; bots don't pause. These signals combine with fingerprinting to build a real-time trust score that adjusts continuously. A session that starts trusted can be flagged mid-way if behavioral patterns degrade.

Even fingerprint-perfect browsers with no behavioral simulation are still detected. Production scrapers targeting behavioral-analysis-protected sites need to inject realistic interaction noise: randomized mouse paths, variable scroll timing, simulated reading pauses. Several commercial antidetect browsers now bundle behavioral simulation, but detection evasion rates vary widely across vendors.
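
A sketch of two ingredients of interaction noise: curved, slightly jittery cursor paths and right-skewed reading pauses. It only generates coordinates and delays; the curve shape, jitter size, and pause distribution are assumptions, and nothing here guarantees a pass against any specific vendor's models:

```python
import math
import random


def bezier_path(start, end, steps=40, rng=None):
    """Cursor waypoints along a cubic Bezier curve with random bends and jitter."""
    rng = rng or random.Random()
    (x0, y0), (x3, y3) = start, end
    spread = max(math.hypot(x3 - x0, y3 - y0) * 0.3, 10.0)

    def control(frac):
        # A point part-way along the straight line, pushed off it at random.
        return (x0 + (x3 - x0) * frac + rng.uniform(-spread, spread),
                y0 + (y3 - y0) * frac + rng.uniform(-spread, spread))

    (x1, y1), (x2, y2) = control(0.3), control(0.7)
    points = []
    for i in range(steps + 1):
        t = i / steps
        t = t * t * (3 - 2 * t)  # smoothstep: accelerate, then decelerate
        mt = 1 - t
        x = mt**3 * x0 + 3 * mt**2 * t * x1 + 3 * mt * t**2 * x2 + t**3 * x3
        y = mt**3 * y0 + 3 * mt**2 * t * y1 + 3 * mt * t**2 * y2 + t**3 * y3
        if 0 < i < steps:  # small tremor, but keep the endpoints exact
            x += rng.gauss(0, 1.0)
            y += rng.gauss(0, 1.0)
        points.append((round(x, 1), round(y, 1)))
    return points


def reading_pause(rng=None, median_s=1.2, sigma=0.6):
    """Right-skewed pause in seconds: mostly short, occasionally long."""
    rng = rng or random.Random()
    return rng.lognormvariate(math.log(median_s), sigma)


path = bezier_path((100, 200), (800, 450), rng=random.Random(7))
print(path[0], path[-1])  # (100.0, 200.0) (800.0, 450.0)
```

To replay a path in Playwright, you would move the cursor point by point with `page.mouse.move(x, y)`, sleeping briefly between moves, and insert `reading_pause()` delays between page interactions.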

For high-value targets, teams build custom behavioral profiles matched to each target site's interaction patterns. This requires per-target calibration and ongoing maintenance as sites update their models.

Some APIs handle behavioral evasion for you. Decodo's Site Unblocker, for example, integrates as a proxy endpoint that manages fingerprint rotation, JavaScript rendering, and anti-bot challenges per request. From the caller's side, it's a normal HTTP call that returns rendered HTML.

Machine identity

Beyond fingerprinting, bot mitigation systems now distinguish between verified bots (search engine crawlers), AI bots (training crawlers, search agents, user-action agents), and unverified scrapers. Whether your scraper can present a verifiable machine identity now determines whether you get through on major platforms.

"Know Your Agent" initiatives are spreading. In 2026, unsigned or unverifiable agents already receive heightened scrutiny from major anti-bot providers. Verified or attested bots get preferential routing; unverified agents get more friction. For scraping engineers, crawler design is shifting. Instead of just hiding, scrapers increasingly need to identify themselves and state what they're doing.

Teams building AI agent workflows can connect through infrastructure like Decodo's MCP Server, which gives AI agents and LLMs web access through managed proxies. It covers anti-bot evasion, rendering, and structured output. For more on the ecosystem, see top MCP servers for AI workflows.

Common defenses

Once anti-bot systems flag your traffic, they need to act on it. These are the most common responses you will run into at scale:

- CAPTCHAs. Cloudflare Turnstile is replacing traditional image CAPTCHAs in 2025-2026. Unlike reCAPTCHA's image challenges, Turnstile often runs invisibly in the background using fingerprinting and cryptographic proof-of-work.

- JavaScript challenges. Inline scripts that test for browser capabilities, execute timing-based checks, or inject hidden elements that only bots would interact with.

- Data cloaking. Instead of blocking scrapers outright, systems like Cloudflare's AI Labyrinth (launched March 2025, available even on free-tier plans) return AI-generated fake pages with plausible-looking content, introducing synthetic data into your results rather than denying access.

Your scraper still receives a 200 OK response, valid HTML, and realistic-looking data, but the content is entirely synthetic. This changes what "success" means. Instead of just checking for block pages, extraction pipelines now need to verify that extracted data is real. We cover detection and mitigation strategies in our guide to bypassing AI Labyrinth.

Variable protection within a single site

Anti-bot protection now varies within the same website. High-demand pages that scrapers target most get aggressive protection, while lower-value listing pages stay lightly protected. Indeed.com, for example, increased protection on individual job posts while leaving job listing pages at lower security.

Sites now restrict internal API endpoints (particularly GraphQL) separately. Non-standard URL formats (e.g., /product/123456/reviews vs. the full SEO-friendly URL) trigger higher security settings. Sites don't need to stop you. They only need to make scraping expensive enough to be uneconomical.

You may need different proxy strategies, rendering approaches, and cost profiles for different page types on the same domain, and your orchestrator needs to route based on page type.

Most teams start with URL-pattern-based classification (listing pages get datacenter proxies, detail pages get residential) and then refine based on observed response behavior. If a URL pattern starts returning elevated CAPTCHA rates, the system automatically reclassifies it to a higher proxy tier.

This works as a manually curated config at dozens of domains; at hundreds, it needs to be automated, with the orchestrator learning protection levels from its own success/failure metrics per URL pattern.
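
A sketch of that automated reclassification. The rules, the 5% CAPTCHA threshold, and the 50-response minimum are assumptions; the router starts each URL pattern at a configured tier and bumps it up one tier when the CAPTCHA rate exceeds the threshold:

```python
import re
from collections import defaultdict, deque

TIERS = ["datacenter", "residential", "mobile"]  # cheapest to most expensive


class TierRouter:
    """Pick a proxy tier per URL pattern; escalate patterns whose CAPTCHA rate climbs."""

    def __init__(self, rules, default_tier="residential",
                 captcha_threshold=0.05, min_samples=50, window=200):
        # rules: (regex, starting tier) pairs; the first matching pattern wins.
        self.rules = [re.compile(pattern) for pattern, _ in rules]
        self.tier = {pattern: TIERS.index(tier) for pattern, tier in rules}
        self.default_tier = default_tier
        self.captcha_threshold = captcha_threshold
        self.min_samples = min_samples
        self.outcomes = defaultdict(lambda: deque(maxlen=window))

    def _match(self, url):
        for regex in self.rules:
            if regex.search(url):
                return regex.pattern
        return None

    def route(self, url):
        pattern = self._match(url)
        return TIERS[self.tier[pattern]] if pattern else self.default_tier

    def record(self, url, captcha):
        """Report whether a response for `url` was a CAPTCHA; may escalate its pattern."""
        pattern = self._match(url)
        if pattern is None:
            return
        window = self.outcomes[pattern]
        window.append(captcha)
        if len(window) >= self.min_samples and sum(window) / len(window) > self.captcha_threshold:
            self.tier[pattern] = min(self.tier[pattern] + 1, len(TIERS) - 1)
            window.clear()  # measure the new tier from scratch


router = TierRouter([(r"/category/", "datacenter"), (r"/product/\d+", "residential")])
print(router.route("https://shop.example/category/shoes"))  # datacenter
```

Per-pattern state is what turns the manual config into a learned one: each pattern's tier is driven by its own observed CAPTCHA rate rather than a hand-maintained list.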

For a deeper look at how these detection layers interact, see our anti-bot systems guide and CAPTCHA bypass guide.

Proxy management at scale

Proxy management is often the most expensive layer, and the one with the most impact in web scraping at scale. Selecting a provider and configuring credentials is the easy part. The hard part is building a routing layer that selects the right proxy type for each request, monitors IP health in real time, and adapts to target-site defenses dynamically.

A large, diverse proxy pool is the foundation – thin pools mean repeated IPs, detectable rotation patterns, and faster bans. But at production scale, how the pool is used matters as much as its size. The best results come when your provider's infrastructure combines deep IP coverage with intelligent routing: selecting the right proxy type, location, and rotation strategy per request, based on each target's defense profile. You can build this routing layer yourself on top of raw proxies, but most teams at scale let the provider handle it.

Rotation vs. sticky sessions

Rotating proxies assign a new IP for every request, ideal for stateless scraping like product catalog harvesting or SERP collection. Sticky sessions maintain the same IP for a defined duration (typically 10 minutes to 24 hours), essential for multi-step workflows: login flows, cart interactions, paginated results where the server validates session cookies. For the implementation details, see how to get a new IP for every connection.
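
A sketch of the bookkeeping behind both modes. How a session ID reaches the provider varies by vendor (it is often passed as a parameter in the proxy username, but check your provider's documentation), so this only generates and tracks IDs. `SessionPool` and the 10-minute TTL are assumptions, and the TTL is fixed from creation rather than sliding:

```python
import secrets
import time


class SessionPool:
    """Hand out proxy session IDs: a fresh ID per request (rotating), or one ID
    reused per workflow until its TTL expires (sticky)."""

    def __init__(self, ttl_s=600.0, clock=time.monotonic):
        self.ttl_s = ttl_s
        self.clock = clock
        self._sticky = {}  # workflow key -> (session_id, expires_at)

    def rotating(self):
        return secrets.token_hex(8)

    def sticky(self, key):
        now = self.clock()
        entry = self._sticky.get(key)
        if entry and entry[1] > now:
            return entry[0]  # inside the TTL: same session (assumed to map to the same exit IP)
        session_id = secrets.token_hex(8)
        self._sticky[key] = (session_id, now + self.ttl_s)
        return session_id

    def release(self, key):
        """Drop a session early (e.g. after a block) so the next call starts fresh."""
        self._sticky.pop(key, None)


pool = SessionPool(ttl_s=600)
login = pool.sticky("account-42/login")
print(pool.sticky("account-42/login") == login)  # True: the whole login flow shares one session
```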

Residential vs. datacenter vs. mobile

| Proxy type | Trust level | Speed | Cost (approx. early 2026) | Best for |
|---|---|---|---|---|
| Datacenter | Low (easily flagged) | Very fast | $0.30-$1/GB | Low-protection sites, bulk jobs |
| Residential | High (real ISP IPs) | Variable | $3-$15/GB | eCommerce, general scraping |
| ISP | High (datacenter speed + residential trust) | Fast | $2-$8/GB | Sustained sessions, account ops |
| Mobile | Highest (carrier CGNAT) | Variable | $3-$20/GB | Hardest targets, mobile-only content |

Most teams start with residential as their default and escalate to mobile only when residential stops working on a specific target. Mobile proxies can cost significantly more, so confirm the need before committing budget.

No single proxy type works for everything. The teams with the best success rates match proxy type to target protection level per request, whether that's handled by their own orchestrator or by a provider with the right pool breadth.

Decodo offers 125M+ IPs across residential, ISP, datacenter, and mobile types in 195+ locations, and hit a 99.86% success rate in Proxyway's 2025 benchmark. Having all proxy types available under one provider simplifies this matching and avoids managing multiple vendor relationships.

Datacenter proxies have lost ground on heavily protected targets. Most of the top eCommerce sites that were easily scrapable with datacenter proxies 5 years ago now require residential IPs or headless browsers. The pattern holds across travel, real estate, and other heavily protected verticals.

That said, datacenter proxies remain the most cost-effective option for high-volume jobs on sites with lighter protection: bulk data collection, monitoring, and targets where speed matters more than stealth. The key is knowing which targets still accept them, and having residential or mobile proxies ready for the targets that don't.

Geo-targeting at scale

Many scraping use cases are inherently geographic. SERP scraping requires results from specific countries and cities; eCommerce monitoring needs pricing from target markets.

Two problems emerge at scale that aren't visible during prototyping. First, IP density drops sharply outside Tier 1 markets. Getting city-level residential IPs in smaller European or Southeast Asian cities can mean pools thin enough that rotation patterns become detectable.

Second, geo-IP database accuracy: the databases that targets use to verify your location don't always agree with your proxy provider's classification. A request your provider labels "São Paulo" might resolve to a different region in the target's geo-IP lookup.

At volume, this creates silent data quality issues. Your Brazilian pricing data might reflect a different market. Build geo-verification into the pipeline: compare scraped prices against known regional benchmarks and flag anomalies that could indicate geo-targeting drift. For quick manual spot-checks, Decodo's Chrome proxy extension or Firefox add-on let you visually inspect target pages from any proxy location in seconds, useful for debugging geo-targeting discrepancies without spinning up a full scraper.
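
A sketch of that geo-verification step, assuming you track one or more reference items whose local price per region is known. The benchmark values and the 25% tolerance below are hypothetical:

```python
import statistics


def flag_geo_drift(scraped, benchmarks, tolerance=0.25):
    """Flag regions whose median scraped price deviates from a known regional
    benchmark by more than `tolerance` (a fraction of the benchmark).

    scraped:    region -> prices observed for one reference item
    benchmarks: region -> expected local price for that item
    """
    flagged = {}
    for region, prices in scraped.items():
        expected = benchmarks.get(region)
        if not prices or not expected:
            continue  # nothing to compare against
        deviation = abs(statistics.median(prices) - expected) / expected
        if deviation > tolerance:
            flagged[region] = round(deviation, 3)
    return flagged


# Hypothetical reference item: the BR prices look like another market's, DE looks fine.
observed = {"BR": [89.9, 91.0, 90.5], "DE": [79.0, 80.5, 81.0]}
benchmarks_by_region = {"BR": 499.0, "DE": 79.99}
print(flag_geo_drift(observed, benchmarks_by_region))  # {'BR': 0.819}
```

Using the median of several observations keeps a single promotional price from triggering a false alarm.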

Use cases that demand advanced proxy management

- SERP scraping. Search engines are among the most aggressive at detecting automated traffic. You need residential or mobile proxies with city-level targeting and per-request rotation. At volume, most teams use a SERP Scraping API that handles proxy rotation, rendering, and parsing.

- eCommerce monitoring. Price and availability tracking across Amazon, Walmart, or regional marketplaces requires high-volume residential proxies with session persistence for pagination. Dedicated eCommerce scraping APIs with built-in parsers for major retailers reduce the per-target setup time.

- High-security platforms. Sites with advanced protection combine fingerprinting, behavioral analysis, and rate limiting. Mobile proxies from real carrier networks are frequently necessary for sustained access.

Residential proxies are the baseline for production-scale operations – we explain why in our guide to rotating proxies.

To skip proxy management entirely, Decodo's all-in-one Web Scraping API combines proxies, rendering, and parsing in one request.

Concurrency, rate limits, and retry logic

Once you can get past defenses, the next problem is how hard to push each target. Too aggressive and you burn proxies and trigger blocks. Too conservative and your crawl takes forever. Getting this balance right is mostly about feedback loops.

Why "faster" ≠ better

Opening 10,000 concurrent connections to a target doesn't give you 10x the data. It triggers rate limiters, exhausts your proxy pool, and often overloads the target site's edge servers.

The optimal concurrency for any given target is the highest throughput that maintains a stable success rate. This number varies widely: a well-provisioned CDN-fronted retailer might tolerate 500 concurrent sessions; a small government portal might start blocking at 10.

Backoff strategies

When you receive a 429 (Too Many Requests), 503 (Service Unavailable), or a CAPTCHA challenge, immediate retries typically make the problem worse.

A common backoff pattern: first retry at 2-5 seconds, second at 8-15 seconds, third at 30-60 seconds, with random jitter to prevent retry storms from synchronized workers. After 3 failures, route the URL to a dead-letter queue for manual review or a different proxy strategy.
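
A sketch of that schedule, reading "after 3 failures" as three failed retries after the initial attempt. `fetch` is a hypothetical callable returning `(status, body)`; CAPTCHA detection would plug in as another retryable condition alongside the 429/503 check:

```python
import random
import time

# (min, max) seconds for retries 1, 2 and 3; jitter is drawn inside each window.
RETRY_WINDOWS = [(2, 5), (8, 15), (30, 60)]
RETRYABLE_STATUS = {429, 503}


def retry_delay(attempt, rng=random):
    """Jittered delay before retry `attempt` (1-based), or None when retries are used up."""
    if attempt > len(RETRY_WINDOWS):
        return None
    low, high = RETRY_WINDOWS[attempt - 1]
    return rng.uniform(low, high)  # jitter keeps synchronized workers from retrying in lockstep


def fetch_with_backoff(url, fetch, dead_letter, sleep=time.sleep, rng=random):
    """Retry 429/503 responses with backoff; after the third failed retry,
    append the URL to `dead_letter` instead of retrying again."""
    attempt = 0
    while True:
        status, body = fetch(url)
        if status not in RETRYABLE_STATUS:
            return status, body
        attempt += 1
        delay = retry_delay(attempt, rng)
        if delay is None:
            dead_letter.append(url)  # manual review, or requeue with a different proxy strategy
            return status, None
        sleep(delay)
```

Injecting `sleep` and `rng` keeps the function testable without real waits; in an async worker you would swap in `asyncio.sleep`.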

Adaptive throttling

Hardcoding rate limits per domain works at small scale, but it's imprecise. More precise systems monitor the target's response characteristics in real time and adjust dynamically.

Track the rolling success rate over the last 100 requests: if it drops below 95%, reduce concurrency by 20%. If response latency rises above 2x baseline, slow down. If you see a sudden increase in CAPTCHAs, pause the domain entirely for 30-60 seconds before resuming at a lower rate.

At scale, this logic needs to work across hundreds of domains simultaneously, each with independent throttle state, distributed across a fleet of workers. The per-domain state has to be shared. If Worker A gets throttled on example.com, Workers B through F need to know before they send additional requests. Without this coordination, teams can lose 30-40% of their request budget on avoidable failures.

A centralized state store (Redis sorted sets or similar) for per-domain metrics is the most common solution. Each worker reports response outcomes and reads current throttle levels before dispatching. The alternative, in-memory per-worker state with no coordination, leads to overcrawling during backoffs and undercrawling during recovery.
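
The throttling rules described above can be sketched as a per-domain controller. This is an in-process illustration: the 100-request window, 95% floor, 20% cut, 2x latency rule, and 30-60 second pause come from the text, while the 5% CAPTCHA-spike threshold and the use of median latency are assumptions. In a real fleet, this state would live in the shared store rather than in one worker's memory:

```python
import random
import time
from collections import deque


class DomainThrottle:
    """Adaptive concurrency for one domain (in-process sketch, not shared state)."""

    def __init__(self, concurrency=50, baseline_latency_s=0.5, captcha_spike=0.05,
                 clock=time.monotonic, rng=random):
        self.concurrency = concurrency
        self.baseline_latency_s = baseline_latency_s
        self.captcha_spike = captcha_spike  # assumed meaning of a "sudden increase"
        self.clock = clock
        self.rng = rng
        self.window = deque(maxlen=100)     # last 100 outcomes: (ok, latency_s, captcha)
        self.paused_until = 0.0

    def can_send(self):
        return self.clock() >= self.paused_until

    def record(self, ok, latency_s, captcha=False):
        self.window.append((ok, latency_s, captcha))
        n = len(self.window)
        if n < self.window.maxlen:
            return  # judge only on a full window
        success_rate = sum(o for o, _, _ in self.window) / n
        captcha_rate = sum(c for _, _, c in self.window) / n
        median_latency = sorted(lat for _, lat, _ in self.window)[n // 2]
        if captcha_rate > self.captcha_spike:
            # Pause the domain for 30-60 s, then resume at a lower rate (below).
            self.paused_until = self.clock() + self.rng.uniform(30, 60)
        elif success_rate >= 0.95 and median_latency <= 2 * self.baseline_latency_s:
            return  # healthy: keep the current rate
        self.concurrency = max(1, int(self.concurrency * 0.8))  # cut concurrency by 20%
        self.window.clear()  # re-measure at the new rate instead of re-penalizing old data
```

Clearing the window after each adjustment matters: without it, the same bad 100 requests would trigger a fresh 20% cut on every subsequent response.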

Retry patterns that work

- Idempotent retries. Ensure that retrying a failed request doesn't produce duplicate data downstream. Assign a unique job ID and deduplicate at the storage layer.

- Proxy rotation on failure. If a request fails on one IP, retry on a different IP from a different subnet. Same-IP retries rarely succeed after a block.

- Cascading fallback. For targets where datacenter proxies still work, try datacenter first (cheapest). If blocked, escalate to residential. If still blocked, use mobile. Each tier costs more but has a higher success probability. For targets you know require residential or higher, skip the cheaper tiers to avoid wasting requests and triggering rate limiters with failed attempts.

- Circuit breakers. If a domain's failure rate exceeds a threshold (e.g., >50% failures over 5 minutes), stop all requests to that domain.
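
A sketch of the cascading fallback and circuit-breaker patterns together, plus a deterministic job ID for idempotent retries. `fetch` is a hypothetical callable you would back with your own HTTP client and proxy configuration; the 50%-over-5-minutes trip condition mirrors the example above, while the minimum sample size and cooldown are assumptions. Keep one breaker per domain:

```python
import hashlib
import time
from collections import deque

TIERS = ["datacenter", "residential", "mobile"]  # cheapest to most expensive


def job_id(url, crawl_window):
    """Deterministic ID: a retried fetch yields the same ID, so storage can dedupe it."""
    return hashlib.sha256(f"{crawl_window}|{url}".encode()).hexdigest()[:16]


class CircuitBreaker:
    """Trips when more than `threshold` of requests failed within the last `window_s`."""

    def __init__(self, window_s=300.0, threshold=0.5, min_samples=20,
                 cooldown_s=300.0, clock=time.monotonic):
        self.window_s, self.threshold = window_s, threshold
        self.min_samples, self.cooldown_s = min_samples, cooldown_s
        self.clock = clock
        self.events = deque()  # (timestamp, ok)
        self.open_until = 0.0

    def allow(self):
        return self.clock() >= self.open_until

    def record(self, ok):
        now = self.clock()
        self.events.append((now, ok))
        while self.events[0][0] < now - self.window_s:
            self.events.popleft()  # forget outcomes older than the window
        failures = sum(1 for _, success in self.events if not success)
        if len(self.events) >= self.min_samples and failures / len(self.events) > self.threshold:
            self.open_until = now + self.cooldown_s  # stop all requests to this domain
            self.events.clear()


def fetch_cascading(url, fetch, breaker, start_tier="datacenter"):
    """Escalate through proxy tiers; fetch(url, tier) -> (ok, body)."""
    for tier in TIERS[TIERS.index(start_tier):]:
        if not breaker.allow():
            return None  # domain quarantined: let the orchestrator requeue it later
        ok, body = fetch(url, tier)
        breaker.record(ok)
        if ok:
            return tier, body
    return None
```

Passing `start_tier="residential"` is how you skip the cheaper tiers for targets already known to block datacenter IPs.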

Implementing retry logic correctly is non-trivial. Our guides on Python requests retry patterns and proxy error codes walk through the details.

Data pipelines – from raw HTML to usable data

For teams running production scraping long-term, the most time-consuming problems tend to be in the pipeline, not in extraction. Turning inconsistent crawler output into clean, validated data consumes most of the engineering hours.

Cleaning and normalization

Once your parsers deliver structured output (whether deterministic selectors, AI extraction, or a hybrid of both), the work shifts from extraction to normalization: standardizing inconsistent currency formats, locale-specific dates, Unicode artifacts, and HTML entities into clean, uniform records.
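
Currency strings are where deterministic code earns its keep. A minimal sketch of a locale-tolerant price parser, assuming the last `.` or `,` is a decimal separator only when one or two digits follow it. It returns `Decimal` to avoid float rounding, and it does not detect the currency or VAT treatment, which still need separate handling; when you know a site's locale, prefer it over this heuristic:

```python
import re
from decimal import Decimal


def parse_price(raw: str) -> Decimal:
    """Normalize a scraped price string such as '1.234,56 €' or '$1,234.56'."""
    s = re.sub(r"[^\d.,]", "", raw)       # drop currency symbols, spaces, letters
    digits = re.sub(r"[.,]", "", s)
    if not digits:
        raise ValueError(f"no digits in {raw!r}")
    sep = max(s.rfind("."), s.rfind(","))
    # The last separator is decimal only if 1-2 digits follow; otherwise it's a thousands mark.
    if sep != -1 and 1 <= len(s) - sep - 1 <= 2:
        whole = re.sub(r"[.,]", "", s[:sep]) or "0"
        return Decimal(f"{whole}.{s[sep + 1:]}")
    return Decimal(digits)


for raw in ["1.234,56 €", "$1,234.56", "¥1,234", "R$ 2.499,00"]:
    print(raw, "->", parse_price(raw))
```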

A 3-tier data model works well: a raw staging layer (exact copies of scraped responses), a refined layer (cleaned, deduplicated, standardized), and an analytics-ready layer (business rules applied, ready for reporting or model training).

Validation

Validation catches bad data before it reaches your downstream systems.

Every record should pass through type constraints, range constraints, completeness constraints, and freshness constraints. Flag spikes in null values or sudden schema changes as early warnings. Some production teams have built automated schema drift detection that alerts engineers when a target site's DOM structure changes, often before the scraper itself fails.
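
A minimal validator covering the four constraint types, plus a null-rate helper for spotting spikes. The schema, bounds, and 24-hour freshness limit are example values, not recommendations:

```python
from datetime import datetime, timedelta, timezone

SCHEMA = {
    # field: (type, required, (min, max) or None) -- example constraints only
    "sku": (str, True, None),
    "price": (float, True, (0.01, 100_000.0)),
    "rating": (float, False, (0.0, 5.0)),
    "scraped_at": (datetime, True, None),
}
MAX_AGE = timedelta(hours=24)


def validate(record, now):
    """Return constraint violations; an empty list means the record passes."""
    errors = []
    for field, (ftype, required, bounds) in SCHEMA.items():
        value = record.get(field)
        if value is None:
            if required:
                errors.append(f"{field}: missing")                # completeness
            continue
        if not isinstance(value, ftype):
            errors.append(f"{field}: expected {ftype.__name__}")  # type
            continue
        if bounds and not bounds[0] <= value <= bounds[1]:
            errors.append(f"{field}: {value} out of range")       # range
    ts = record.get("scraped_at")
    if isinstance(ts, datetime) and now - ts > MAX_AGE:
        errors.append("scraped_at: stale")                        # freshness
    return errors


def null_rate(records, field):
    """Share of records where `field` is missing; a sudden jump is an early warning."""
    return sum(r.get(field) is None for r in records) / len(records) if records else 0.0


now = datetime(2026, 2, 18, 12, tzinfo=timezone.utc)
print(validate({"sku": "A2", "price": -3.0, "scraped_at": now - timedelta(days=3)}, now))
# ['price: -3.0 out of range', 'scraped_at: stale']
```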

The most common failure pattern: a target site updates its layout and your scraper keeps running with no errors, but the output is wrong. Compare current output against a known-good baseline on a rolling schedule. Teams that run automated checks against reference snapshots detect layout changes within hours instead of waiting for a downstream complaint.
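
One way to run that comparison, assuming you keep a known-good snapshot of extracted records for a fixed set of reference URLs and re-scrape them on a schedule. The function and data are hypothetical; the key signal is a field that used to have a value and now comes back empty:

```python
def diff_against_baseline(baseline, current, fields):
    """Compare extraction output for reference URLs with a known-good snapshot.

    baseline / current: url -> extracted record (dict)
    """
    report = {"missing_urls": [], "emptied": {}, "changed": {}}
    for url, expected in baseline.items():
        got = current.get(url)
        if got is None:
            report["missing_urls"].append(url)
            continue
        for field in fields:
            old, new = expected.get(field), got.get(field)
            if old not in (None, "") and new in (None, ""):
                report["emptied"].setdefault(url, []).append(field)  # classic silent layout break
            elif old != new:
                report["changed"].setdefault(url, []).append(field)  # may be legitimate (price moved)
    return report


baseline = {
    "https://shop.example/p/1": {"title": "Blue Mug", "price": "9.99"},
    "https://shop.example/p/2": {"title": "Red Mug", "price": "8.99"},
}
current = {"https://shop.example/p/1": {"title": "", "price": "9.99"}}
print(diff_against_baseline(baseline, current, ["title", "price"]))
```

Alert on any non-empty `emptied` or `missing_urls`; treat `changed` as informational for volatile fields like price, but as a warning for fields that should be stable, such as titles or brands.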

The industry is moving toward auto-recovering pipelines: architectures where AI agents explore sites, propose extraction plans, and adapt when sites change. This isn't production-standard yet for most teams, but building detection and automatic parser fallback into your pipeline now means you're ready when it is.

Storage formats and delivery

The right storage format depends on downstream use: JSON Lines for streaming, Parquet for analytics, direct writes for real-time. Most teams output to multiple destinations simultaneously.

Most teams underinvest in lineage metadata. Every record should carry its source URL, crawl timestamp, parser version, and transform version. This matters for debugging, but it also matters for compliance. New regulations are starting to require documented data provenance for AI training datasets, and building lineage from the start is far cheaper than adding it later. If you're collecting web data specifically for AI and LLM training, provenance tracking isn't optional. Enterprise buyers already ask for it before they sign. For a practical example of how this fits together, see our guide to building a production RAG pipeline with LlamaIndex and web scraping.

Event-driven lineage tagging (attaching metadata at each pipeline stage as records flow through) scales better than batch tagging retroactively, and makes audits much easier to handle.
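
A sketch of event-driven lineage tagging with generator stages that write JSON Lines. The stage names, version strings, and record layout are hypothetical; the point is that each stage appends its own entry as records flow through, so provenance never has to be reconstructed after the fact:

```python
import io
import json
from datetime import datetime, timezone

PARSER_VERSION = "product-parser@3.2.0"   # hypothetical version labels
TRANSFORM_VERSION = "normalize@1.4.1"


def stage(name, version, fn):
    """Wrap a per-record transform so each output record gains a lineage entry."""
    def run(records):
        for rec in records:
            entry = {"stage": name, "version": version,
                     "at": datetime.now(timezone.utc).isoformat()}
            yield {**rec, "data": fn(rec["data"]), "lineage": rec["lineage"] + [entry]}
    return run


def write_jsonl(records, fh):
    """One JSON object per line: easy to stream, append, and replay."""
    count = 0
    for rec in records:
        fh.write(json.dumps(rec, ensure_ascii=False) + "\n")
        count += 1
    return count


raw = [{"source_url": "https://shop.example/p/1",
        "crawled_at": "2026-02-18T09:00:00+00:00",
        "data": {"price": " 19.99 "},
        "lineage": []}]
parse = stage("parse", PARSER_VERSION, lambda d: dict(d))
normalize = stage("normalize", TRANSFORM_VERSION, lambda d: {**d, "price": d["price"].strip()})

buffer = io.StringIO()  # stands in for a file or a stream producer
write_jsonl(normalize(parse(raw)), buffer)
print(buffer.getvalue())
```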

For the building blocks behind each of these stages, see our guides on parsing, data cleaning, and saving scraped data.

Monitoring, observability, and failure handling

Without observability, your scraping system isn't production-grade. You need to see when things start breaking before your downstream consumers notice.

Teams that scale successfully treat crawlers as production services (with SLOs, alerting, and on-call rotations), not scripts running in the background.

What to track

At the request level: success rate, response time distributions (p95, p99), and block rate, segmented by domain and proxy type.

At the data level: field completeness (percentage of expected fields present and non-null) and proxy health (per-IP success rates, ban frequency, rotation velocity). The most neglected metric is pipeline lag: the time from crawl to data availability in your warehouse. This is often the first metric to degrade under load, and the one downstream systems are most sensitive to.
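
Both data-level metrics reduce to short calculations. A sketch, assuming records are dicts and that each record's crawl time and warehouse-availability time are known:

```python
import statistics
from datetime import datetime, timedelta, timezone


def field_completeness(records, expected_fields):
    """Percent of records where each expected field is present and non-null."""
    total = len(records)
    return {field: (100.0 * sum(r.get(field) is not None for r in records) / total) if total else 0.0
            for field in expected_fields}


def pipeline_lag_p95(pairs):
    """p95 lag in seconds from (crawled_at, available_at) timestamp pairs."""
    lags = [(available - crawled).total_seconds() for crawled, available in pairs]
    return statistics.quantiles(lags, n=100, method="inclusive")[94]


t0 = datetime(2026, 2, 18, 9, 0, tzinfo=timezone.utc)
pairs = [(t0, t0 + timedelta(minutes=k)) for k in range(1, 21)]  # lags of 1-20 minutes
print(pipeline_lag_p95(pairs))  # 1143.0 seconds
```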

Error categorization

Not all failures are equal. Categorize them:

- Transient. Network timeouts, temporary rate limits – retry with backoff.

- Structural. Parser failures due to DOM changes – requires a code update.

- Systemic. Proxy pool exhausted, rendering cluster overloaded – requires infrastructure intervention.

- Expected. robots.txt disallows, explicit blocks – respect or reroute.
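One way to make this categorization actionable is a small classifier that every failed fetch passes through, with each class mapped to a different follow-up. The sketch below is an assumption-heavy illustration. The status-code mappings are judgment calls: some 403s are anti-bot challenges you may prefer to reroute rather than respect. The `ACTIONS` names are hypothetical hooks into your own queue, ticketing, and paging.

```python
from enum import Enum


class ErrorClass(Enum):
    TRANSIENT = "transient"    # retry with backoff
    STRUCTURAL = "structural"  # parser needs a code update
    SYSTEMIC = "systemic"      # infrastructure intervention
    EXPECTED = "expected"      # respect or reroute


# Hypothetical follow-up actions; wire these to your own queue, ticketing, and paging.
ACTIONS = {
    ErrorClass.TRANSIENT: "retry_with_backoff",
    ErrorClass.STRUCTURAL: "open_parser_ticket",
    ErrorClass.SYSTEMIC: "page_on_call",
    ErrorClass.EXPECTED: "skip_or_reroute",
}


def classify(status=None, exc=None, *, robots_disallowed=False,
             infra_overloaded=False, parse_failed=False):
    """Map one failed fetch to an error class. Status mappings are assumptions."""
    # robots.txt disallows and explicit access denials are treated as expected.
    if robots_disallowed or status in (401, 403, 451):
        return ErrorClass.EXPECTED
    # Proxy pool exhausted or rendering cluster saturated.
    if infra_overloaded:
        return ErrorClass.SYSTEMIC
    if isinstance(exc, (TimeoutError, ConnectionError)) or status in (429, 502, 503, 504):
        return ErrorClass.TRANSIENT
    # The page arrived, but the selectors no longer match.
    if status == 200 and parse_failed:
        return ErrorClass.STRUCTURAL
    # Anything unrecognized gets a bounded retry first.
    return ErrorClass.TRANSIENT


print(ACTIONS[classify(200, parse_failed=True)])  # open_parser_ticket
```

Ordering matters here: checking infrastructure signals before timeouts keeps an overloaded rendering cluster from being mistaken for a flaky target.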

Auto-recovery

Production scraping systems need to recover automatically. When a domain starts returning elevated error rates, the system should reduce concurrency, rotate to a different proxy tier, and, if errors persist, quarantine the domain for investigation.
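Here's a minimal sketch of that escalation ladder as a per-domain state machine, evaluated once per monitoring window. The thresholds, the halving of concurrency, and the tier order (cheapest first) are all assumptions. Restoring concurrency once a domain recovers is left out for brevity.

```python
from dataclasses import dataclass

# Escalation order is an assumption: cheapest, most-blocked tier first.
PROXY_TIERS = ("datacenter", "residential", "mobile")


@dataclass
class DomainState:
    concurrency: int = 32
    tier_index: int = 0
    bad_windows: int = 0       # consecutive evaluation windows above the threshold
    quarantined: bool = False


def on_window(state, error_rate, threshold=0.2, min_concurrency=2, quarantine_after=3):
    """Apply one monitoring window of auto-recovery to a single domain's state."""
    if state.quarantined:
        return state                      # waits for a human to investigate
    if error_rate <= threshold:
        state.bad_windows = 0             # a healthy window resets the streak
        return state
    state.bad_windows += 1
    state.concurrency = max(min_concurrency, state.concurrency // 2)   # back off
    if state.tier_index < len(PROXY_TIERS) - 1:
        state.tier_index += 1             # rotate to the next proxy tier
    if state.bad_windows >= quarantine_after:
        state.quarantined = True          # errors persisted: stop and investigate
    return state


state = DomainState()
for rate in (0.5, 0.4, 0.6):              # three bad windows in a row
    on_window(state, rate)
print(state.concurrency, PROXY_TIERS[state.tier_index], state.quarantined)
# 4 mobile True
```

Keeping this logic in a small, pure function makes it easy to unit-test the policy separately from the crawler that enforces it.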

When a parser starts returning empty fields, the system should revert to a previous parser version and alert the engineering team. IP bans are among the most common causes of elevated error rates – here's how to fix "Your IP Has Been Banned" errors.
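A lightweight variant of parser rollback is to keep the previous parser version deployed next to the current one and fall back per page. This differs from rolling back a whole deployment. The sketch below assumes parsers are plain functions registered newest-first, and that `alert` is any callable, such as a logger or pager hook. The regex-based parsers stand in for real extraction code; they echo the classic div-to-span breakage.

```python
import re


def parse_v1(html):
    """Older parser: price lives in a <div class="price">."""
    title = re.search(r"<h1>([^<]+)</h1>", html)
    price = re.search(r'<div class="price">([^<]+)</div>', html)
    return {"title": title and title.group(1), "price": price and price.group(1)}


def parse_v2(html):
    """Newer parser that (wrongly, for this page) expects a <span class="price">."""
    title = re.search(r"<h1>([^<]+)</h1>", html)
    price = re.search(r'<span class="price">([^<]+)</span>', html)
    return {"title": title and title.group(1), "price": price and price.group(1)}


def empty_ratio(result, expected):
    """Share of expected fields that came back empty or missing."""
    return sum(1 for f in expected if not result.get(f)) / len(expected)


def parse_with_fallback(html, versions, expected, alert, max_empty_ratio=0.0):
    """Try parser versions newest-first; fall back when fields come back empty."""
    for i, (name, parse) in enumerate(versions):
        result = parse(html)
        if empty_ratio(result, expected) <= max_empty_ratio:
            if i > 0:
                alert(f"{versions[0][0]} returned empty fields; fell back to {name}")
            return name, result
    alert("every parser version returned empty fields")
    return None, {}


alerts = []
page = '<h1>Desk lamp</h1><div class="price">19.99</div>'
used, data = parse_with_fallback(
    page,
    versions=[("v2", parse_v2), ("v1", parse_v1)],
    expected=("title", "price"),
    alert=alerts.append,
)
print(used, data["price"], alerts)
# v1 19.99 ['v2 returned empty fields; fell back to v1']
```

With `max_empty_ratio=0.0`, any empty expected field triggers the fallback. Pages where some fields are legitimately optional would need a looser threshold or a per-field list.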

The 3 webs – where scraping is headed

The open web is splitting into 3 distinct access models. Each one requires different infrastructure, and your scraping system needs to handle all of them.

The Hostile Web escalates defenses against all automation. Over 1M Cloudflare customers now block AI crawlers. Since enabling blocking by default in July 2025, Cloudflare has blocked 416B AI bot requests in 5 months (CEO Matthew Prince, WIRED, December 2025). Microsoft discontinued its public Bing Search API in mid-2025, directing developers toward a paid agent builder. For these targets, multi-layered anti-bot infrastructure is required.

The Negotiated Web is driven by economic pressure. Publishers facing declining search traffic are adopting licensing, attestation, and pay-per-crawl mechanisms. Emerging standards like ai.txt, llms.txt, and Really Simple Licensing (RSL) attempt to make crawl permissions machine-readable.

The Invited Web actively serves AI agents. Major companies have launched open protocols for AI shopping agents: Stripe and OpenAI co-developed the Agentic Commerce Protocol, Google and Shopify co-developed the Universal Commerce Protocol, and Visa (with Cloudflare) released the Trusted Agent Protocol.

These sites actively expose machine-first interfaces with real-time data. Some targets that used to require evasion now offer structured API access, if you can present a verifiable agent identity.

A single access strategy won't cover all targets anymore. Your infrastructure, from proxy selection to identity management to protocol support, needs to match each data source's regime.
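To make "match each data source's regime" concrete, here's a rough sketch of a routing table. It resolves a regime per domain, with per-path overrides, because protection can differ within a single site. Everything in it is hypothetical: the domains, the strategy knobs, and the identity labels. Unknown domains fall back to the most defensive setup, which is also an assumption.

```python
from enum import Enum
from urllib.parse import urlsplit


class Regime(Enum):
    HOSTILE = "hostile"
    NEGOTIATED = "negotiated"
    INVITED = "invited"


# Hypothetical strategy knobs per regime; map them onto whatever your stack provides.
STRATEGY = {
    Regime.HOSTILE: {"proxy": "residential", "render": True, "identity": None},
    Regime.NEGOTIATED: {"proxy": "datacenter", "render": False, "identity": "licensed-crawler"},
    Regime.INVITED: {"proxy": None, "render": False, "identity": "verified-agent"},
}

# Per-domain default plus per-path overrides, because protection can differ within a site.
ROUTES = {
    "shop.example": (Regime.HOSTILE, {"/agent-api/": Regime.INVITED}),
    "news.example": (Regime.NEGOTIATED, {}),
}


def plan(url):
    """Resolve the access regime and strategy for one URL."""
    parts = urlsplit(url)
    # Unknown domains default to the most defensive setup (an assumption).
    regime, overrides = ROUTES.get(parts.hostname, (Regime.HOSTILE, {}))
    for prefix in sorted(overrides, key=len, reverse=True):   # longest prefix wins
        if parts.path.startswith(prefix):
            regime = overrides[prefix]
            break
    return {"regime": regime, **STRATEGY[regime]}


print(plan("https://shop.example/agent-api/items")["regime"])  # Regime.INVITED
print(plan("https://shop.example/p/123")["proxy"])             # residential
```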

For teams navigating this shift, Decodo's MCP Server lets AI agents access web data through managed infrastructure without building custom scrapers. See our setup guide for instructions.

Legal, ethical, and compliance considerations at scale

Compliance is rarely the reason teams start scraping, but at production scale, it's part of the infrastructure, and it's much easier to build in from the start than to retrofit. The basics (respecting robots.txt, honoring rate limits, avoiding authenticated content without permission, and using transparent User-Agent strings) should be defaults in any production system.

robots.txt as infrastructure

robots.txt works best when you treat it as a configuration input, not optional guidance. Your orchestrator should parse robots.txt for every target domain before dispatching requests, cache the directives with a reasonable TTL, and enforce them automatically.
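Python's standard library already ships a robots.txt parser, `urllib.robotparser.RobotFileParser`, so the orchestrator mostly needs a per-origin cache with a TTL around it. Here's a minimal sketch. The `RobotsCache` class, the 24-hour default TTL, and the `fetch_lines` test hook are illustrative choices, not a standard. A real orchestrator would also need a policy for when robots.txt can't be fetched at all: `read()` raises on network errors.

```python
import time
import urllib.robotparser
from urllib.parse import urlsplit


class RobotsCache:
    """Parse robots.txt once per origin, reuse it for `ttl` seconds, then refresh."""

    def __init__(self, user_agent, ttl=24 * 3600, fetch_lines=None):
        self.user_agent = user_agent
        self.ttl = ttl
        # Optional hook returning robots.txt as a list of lines (handy for tests);
        # when omitted, RobotFileParser.read() fetches the file over HTTP.
        self.fetch_lines = fetch_lines
        self._cache = {}  # origin -> (loaded_at, RobotFileParser)

    def _load(self, origin):
        parser = urllib.robotparser.RobotFileParser(origin + "/robots.txt")
        if self.fetch_lines is None:
            parser.read()
        else:
            parser.parse(self.fetch_lines(origin + "/robots.txt"))
        return parser

    def allowed(self, url):
        """Check a URL before dispatch, refreshing the origin's rules after the TTL."""
        parts = urlsplit(url)
        origin = f"{parts.scheme}://{parts.netloc}"
        now = time.monotonic()
        entry = self._cache.get(origin)
        if entry is None or now - entry[0] > self.ttl:
            entry = (now, self._load(origin))
            self._cache[origin] = entry
        return entry[1].can_fetch(self.user_agent, url)


fetched = []


def fake_fetch(robots_url):
    fetched.append(robots_url)
    return ["User-agent: *", "Disallow: /private/"]


robots = RobotsCache("ExampleBot/1.0", fetch_lines=fake_fetch)
print(robots.allowed("https://shop.example/products/1"))    # True
print(robots.allowed("https://shop.example/private/cart"))  # False
print(len(fetched))                                         # 1 (second check hit the cache)
```

The dispatcher then simply drops, or routes to the "expected" error class, any URL for which `allowed()` returns False.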

At scale, this needs to be systematic. Manually checking robots.txt for hundreds of domains isn't viable. Build it into the crawl pipeline as a mandatory check.

Responsible scraping defaults

Rate limiting per domain, rotating IPs to avoid overloading individual servers, and backing off when targets respond with errors (429s, 503s) are baseline responsible practices. Production systems should also avoid scraping behind login walls or paywalls without explicit permission, and should remove or flag personally identifiable information (PII) before data enters your pipeline.
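Per-domain rate limiting and backoff can share one small scheduler. Here's a minimal sketch. It assumes a fixed minimum interval per domain and exponential backoff on 429/503 with optional jitter. It honors a `Retry-After` header only in its seconds form; the HTTP-date form is ignored here. Other statuses get the normal interval, which is a simplification. `PoliteScheduler` and its defaults are hypothetical.

```python
from __future__ import annotations

import random


class PoliteScheduler:
    """Per-domain minimum interval, plus exponential backoff on 429 and 503."""

    def __init__(self, min_interval=1.0, base_backoff=2.0, max_backoff=300.0, jitter=0.1):
        self.min_interval = min_interval
        self.base_backoff = base_backoff
        self.max_backoff = max_backoff
        self.jitter = jitter              # extra random fraction so workers don't retry in lockstep
        self.next_allowed: dict[str, float] = {}
        self.failures: dict[str, int] = {}

    def wait_time(self, domain: str, now: float) -> float:
        """Seconds to wait before the next request to `domain`."""
        return max(0.0, self.next_allowed.get(domain, 0.0) - now)

    def record(self, domain: str, status: int, now: float,
               retry_after: str | None = None) -> None:
        """Update the domain's schedule from one response."""
        if status in (429, 503):
            n = self.failures.get(domain, 0) + 1
            self.failures[domain] = n
            delay = min(self.max_backoff, self.base_backoff * 2 ** (n - 1))
            delay *= 1 + random.uniform(0, self.jitter)
            # Honor Retry-After when given in seconds (the HTTP-date form is ignored here).
            if retry_after and retry_after.strip().isdigit():
                delay = max(delay, float(retry_after))
        else:
            self.failures[domain] = 0     # any other status resets the backoff streak
            delay = self.min_interval
        self.next_allowed[domain] = now + delay


sched = PoliteScheduler(min_interval=1.0, jitter=0.0)   # no jitter so the numbers are exact
t = 100.0                                                # e.g. time.monotonic() in a real loop
sched.record("shop.example", 200, now=t)
print(sched.wait_time("shop.example", now=t))           # 1.0
sched.record("shop.example", 429, now=t)                # 1st failure: back off 2 s
sched.record("shop.example", 503, now=t, retry_after="30")
print(sched.wait_time("shop.example", now=t))           # 30.0
```

In a worker loop you'd call `time.sleep(sched.wait_time(domain, time.monotonic()))` before each request. With many workers, this state needs to live somewhere shared, such as a central rate limiter, rather than in each process.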

Auditability at scale

At scale, compliance needs to be verifiable. Build jurisdiction-aware routing into your orchestrator so requests targeting EU domains automatically apply data minimization. Run automated PII detection on scraped output. Maintain immutable audit trails that log every endpoint hit with a timestamp.
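Two of these pieces can be sketched in a few lines: a PII redaction pass and a hash-chained audit trail. In the audit trail, each entry embeds the hash of the previous one, so any later edit breaks verification. Hash chaining makes the log tamper-evident rather than truly immutable; for that, you'd also store it on write-once storage. The regex patterns are deliberately naive assumptions (the phone pattern will also flag things like ISO dates) and are no substitute for a proper PII detection service. Jurisdiction-aware routing isn't shown.

```python
from __future__ import annotations

import hashlib
import json
import re
from datetime import datetime, timezone

# Deliberately naive patterns (assumption): expect false positives and misses.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact_pii(text: str) -> tuple[str, list[str]]:
    """Replace matches with [LABEL] placeholders and report which kinds were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label.upper()}]", text)
    return text, found


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditTrail:
    """Append-only log where each entry hashes the previous one (tamper-evident)."""

    def __init__(self):
        self.entries: list[dict] = []

    def append(self, url: str, status: int, pii: list[str]) -> dict:
        prev = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {"url": url, "status": status, "pii": pii,
                "ts": datetime.now(timezone.utc).isoformat(), "prev": prev}
        body["hash"] = _digest(body)      # hash computed before the key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = "0" * 64
        for entry in self.entries:
            unhashed = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or _digest(unhashed) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


text = "Contact jane.doe@example.com or +1 (555) 010-7788 for bulk orders."
clean, found = redact_pii(text)
print(clean)            # Contact [EMAIL] or [PHONE] for bulk orders.
trail = AuditTrail()
trail.append("https://shop.example/p/1", 200, found)
trail.append("https://shop.example/p/2", 200, [])
print(trail.verify())   # True
trail.entries[0]["status"] = 500   # simulate tampering
print(trail.verify())   # False
```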

Data provenance rules are getting stricter. Enterprise procurement teams already ask for lineage docs (source URL, crawl timestamp, parser version) before they sign.

Hold a joint legal and engineering review at least quarterly to assess whether target sites have changed their access terms or technical controls.

For a deeper look at the legal side, see our guide to web scraping legality.

Build vs. buy – when managed infrastructure makes sense

At scale, managing web scraping infrastructure in-house costs more than most teams expect.

Most teams end up both building and buying, and from what we see, the share they buy tends to grow over time.

Building in-house gives you full control: custom parsers, tailored retry logic, and no vendor lock-in. But it also means maintaining a proxy pool, a rendering cluster, a monitoring stack, a compliance layer, and an on-call rotation.

Published case studies quantify this: one large eCommerce operation runs approximately 4,000 spiders targeting around 1,000 websites, requiring 18 full-time crawl engineers and 3 dedicated QA engineers, with 20-30 spider failures per day.

Consider an even larger operation: 10K+ precisely geolocated spiders for digital shelf analytics, supported by 50 web scraping specialists, with each new spider taking 6-8 days to build (Fred de Villamil, NIQ). These numbers reflect the operational overhead of enterprise web scraping before any business logic.

Operational costs add up: residential proxies cost $3-$15/GB at scale, rendering clusters add compute costs that scale with volume, and the engineering time to maintain parsers against constantly changing targets is often the largest line item. Orchestrating these components now costs more than the components themselves.

Bandwidth costs have fallen significantly since 2018, but the average cost per successful payload has doubled, tripled, or risen as much as 10x depending on the target. ScrapeOps calls this "Scraping Shock". Costs don't rise gradually. They jump in sharp steps when a target upgrades its defenses.

Moving from datacenter proxies to residential is roughly a 5-15x cost jump per GB. Adding headless browser rendering multiplies compute costs further. Combining residential proxies with headless rendering can reach 25x baseline.

Full anti-bot bypass with CAPTCHA solving can reach 30-50x your baseline cost. Every dataset has a threshold at which extraction cost exceeds the data's value, so optimizing cost per successful result matters as much as securing access.
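The arithmetic behind cost per successful payload is simple: cost per attempt divided by success rate, because failed attempts are still paid for. Here's a small sketch that applies it to the tiers above and picks the cheapest tier that stays below what a record is worth. The per-attempt multipliers sit inside the ranges quoted in this section. The success rates and the per-record values are invented placeholders, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Tier:
    name: str
    cost_per_attempt: float   # relative units: 1.0 = datacenter proxy, no rendering
    success_rate: float       # share of attempts that return usable data

    @property
    def cost_per_success(self) -> float:
        # Failed attempts are still paid for, so spread their cost over the successes.
        return self.cost_per_attempt / self.success_rate


def cheapest_viable(tiers, value_per_record):
    """Cheapest tier whose cost per success is still below what a record is worth."""
    viable = [t for t in tiers if t.cost_per_success < value_per_record]
    return min(viable, key=lambda t: t.cost_per_success, default=None)


# Multipliers sit inside the ranges quoted above; success rates are invented placeholders.
tiers = [
    Tier("datacenter", 1.0, 0.05),               # target just upgraded its defenses
    Tier("residential", 10.0, 0.60),
    Tier("residential + headless", 25.0, 0.90),
    Tier("full bypass + CAPTCHA", 40.0, 0.98),
]
for t in tiers:
    print(f"{t.name:<24} {t.cost_per_success:6.1f}")
print(cheapest_viable(tiers, value_per_record=30.0).name)   # residential
print(cheapest_viable(tiers, value_per_record=10.0))        # None
```

The datacenter tier is the cheapest per request, but once its success rate collapses it costs more per success than residential. That's why cheaper bandwidth hasn't translated into cheaper data.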

Managed scraping APIs charge per successful result, not per attempt. That means retry waste, proxy escalation, and rendering costs are the provider's problem, not yours. When a target upgrades its defenses, your per-result price stays flat even if the provider's cost per request triples behind the scenes.

Residential proxies use bandwidth-based pricing for teams that want to manage their own scraping logic, with per-GB rates that decrease at higher volumes. The tradeoff is control. Managed APIs are simpler to run, but you can't customize how they handle edge cases.

When choosing a provider, ask:

- Can you see per-domain success rates, not just aggregate uptime?

- Are all proxy types (datacenter through mobile) available under one account, or will you need multiple vendors?

- Does the provider charge for failed requests, or only for successful results?

- How transparent are they about IP sourcing – do they use opt-in consent, or could their network get shut down?

That last question isn't hypothetical. In January 2026, Google disrupted a major residential proxy network of ~9M devices spanning 10+ brands, after finding it facilitated botnets through malware-laden SDKs embedded in free apps. Teams relying on those providers lost access overnight. If your provider gets taken down, your pipeline goes with it.

Build in-house where your specific domain knowledge matters; buy managed infrastructure for volume, uptime, and geo coverage.

For managed scraping, Decodo's Web Scraping API supports sync and async modes, batch jobs, and multiple output formats (HTML, JSON, CSV, Markdown).

All in all

Here are the key takeaways:

- Scale = systems, not scripts. Once your operation crosses into tens of thousands of pages, you're building distributed web scraping infrastructure. Systems that are modular, observable, and fault-tolerant, with automated recovery, handle constantly changing websites better, and the earlier you design for it, the less you'll rebuild.

- Anti-bot evasion is now a layered problem. Rate limits, IP reputation, browser fingerprinting, behavioral analysis, and machine identity each require different countermeasures. Anti-bot systems update multiple times per week and vary protection levels within a single site. No single technique covers all layers – you need automated, adaptive infrastructure that matches each target's defense profile per request.

- Data quality matters as much as data access. AI extraction provides layout resilience, but LLM-based parsing costs 130-600x more than deterministic approaches at scale. With data cloaking systems like Cloudflare's AI Labyrinth now returning plausible-looking fake data instead of block pages, validation is required infrastructure, not optional.

- The web is fragmenting. Hostile, negotiated, and invited access regimes are replacing the old "open web" assumption. Protection now varies within individual sites. Your scraping infrastructure needs to support all 3 regimes and adapt per-page, not just per-domain.

- Scraping costs escalate in steps, not slopes. When a target upgrades its defenses, your costs don't rise 10% – they jump to a different tier entirely. Design your pipeline to switch cost tiers so these jumps don't break your budget.

- Compliance needs to be built in, not added later. Enterprise buyers are already auditing data provenance before they sign. Build audit trails, PII detection, and robots.txt respect into your pipeline from the start.

- QA scales with extraction. Invest in automated validation, anomaly detection, and schema enforcement at the same rate you invest in extraction – teams that skip this are the ones that stop scaling.

Web scraping infrastructure requirements keep increasing, and the teams that maintain reliable data access treat their scraping layer like their databases, APIs, and production services. That's the approach we take at Decodo, whether you build in-house, buy managed infrastructure, or combine both.

If you're just getting started or want to go deeper: web scraping fundamentals · Decodo scraping platform · residential proxy infrastructure


About the author

Justinas Tamasevicius

Head of Engineering

Justinas Tamaševičius is Head of Engineering with more than two decades of expertise in software development. What started as a self-taught passion during his school years has evolved into a distinguished career spanning backend engineering, system architecture, and infrastructure development.

Connect with Justinas via LinkedIn.

All information on Decodo Blog is provided on an "as is" basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Decodo Blog or any third-party websites that may be linked therein.