AI-Ready Proxy & Scraping Solutions

The most efficient way to test, launch, and scale your web data projects.

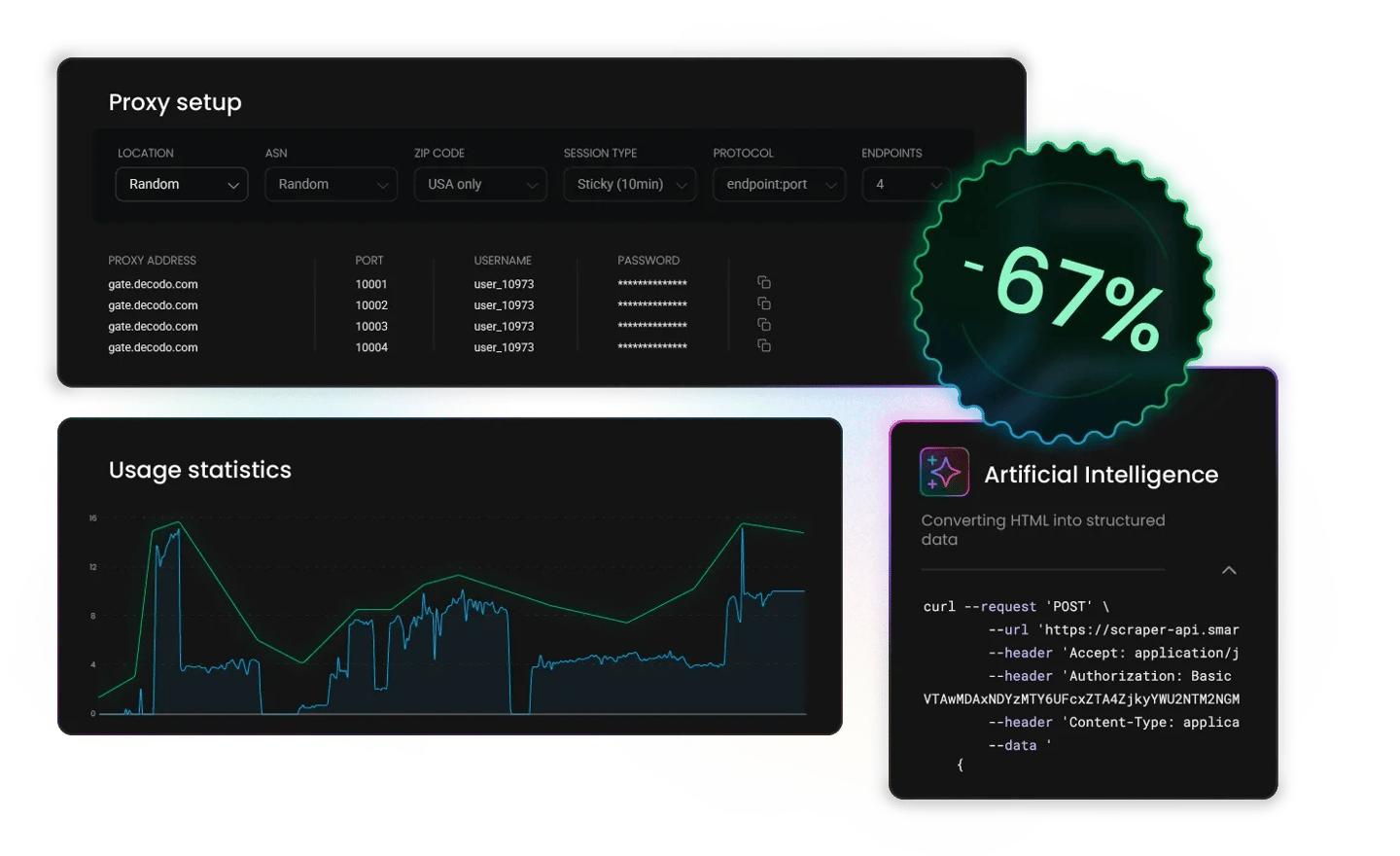

67% Off Residential Proxies

Use the code NOIDEA67 and choose ethically-sourced IPs from global locations.

AI Integrations

Connect Decodo with your favorite AI and automation tools, such as n8n, LangChain, or an MCP server, and turn web data into live, intelligent workflows.

Free Trials

Unlock all the advanced features and take a test drive of our solutions with free trials.

IP Replacement

Take full control and instantly swap out blocked or low-performing IPs in your workflows, with no support tickets or downtime.

<0.2s avg. response time

125M+ IPs

99.99% uptime

195+ locations

LLM-compatible

Trusted by:

Smartproxy is now Decodo

Our evolution from proxy infrastructure to a complete data access and automation platform called for a new name. The same trusted team and solutions you've relied on since 2018 are now part of Decodo, delivering even better tools for your web data projects.

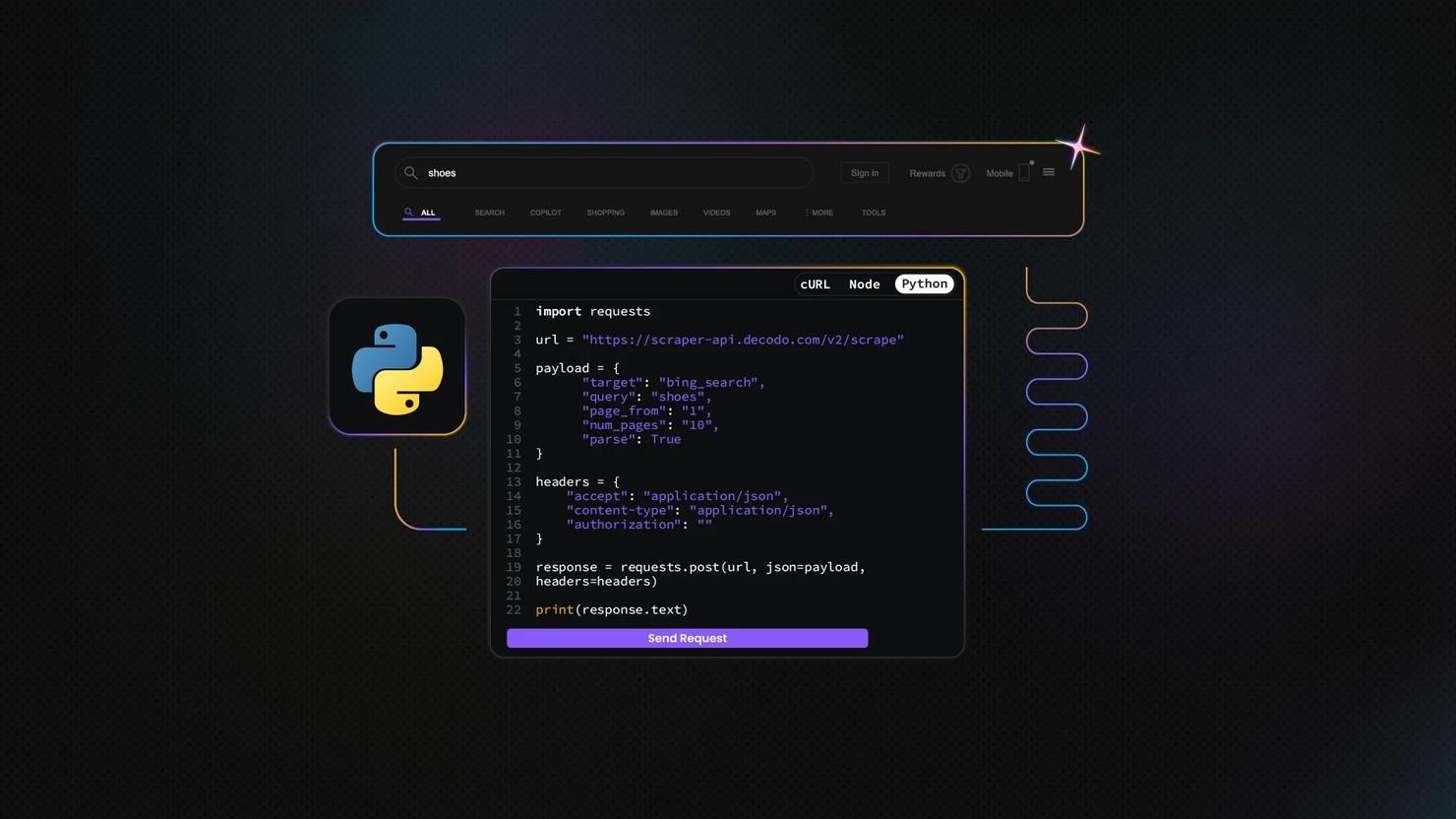

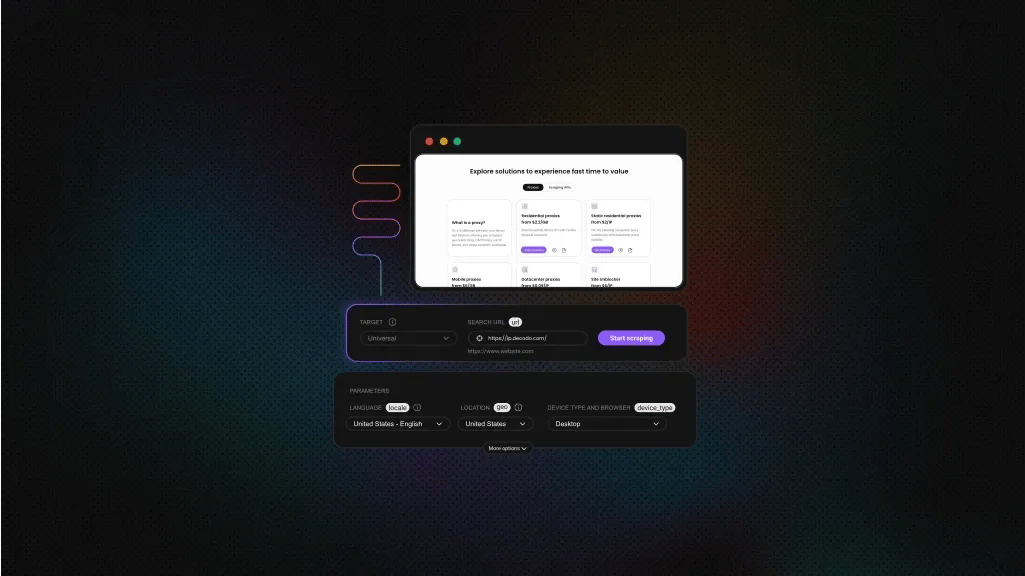

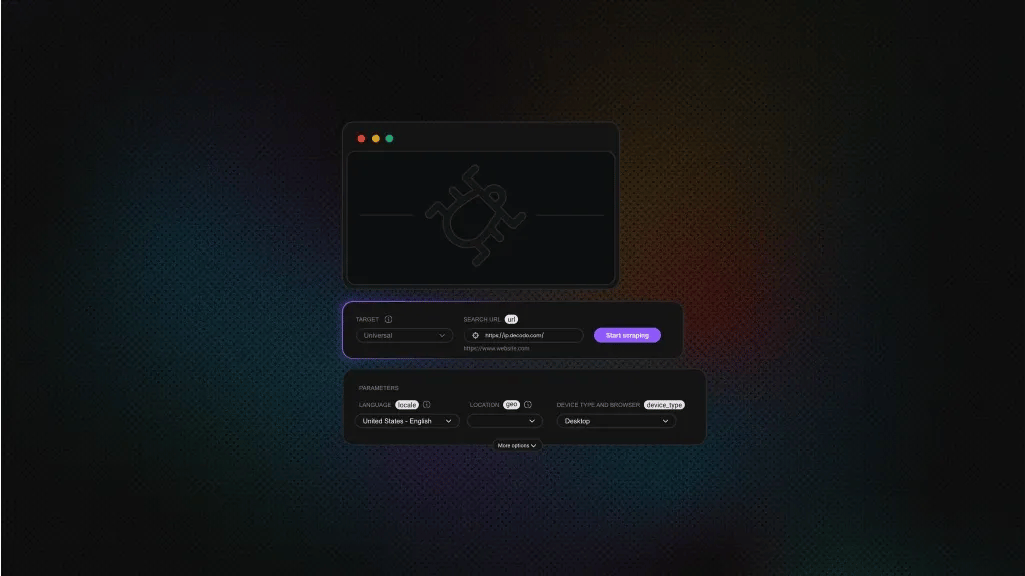

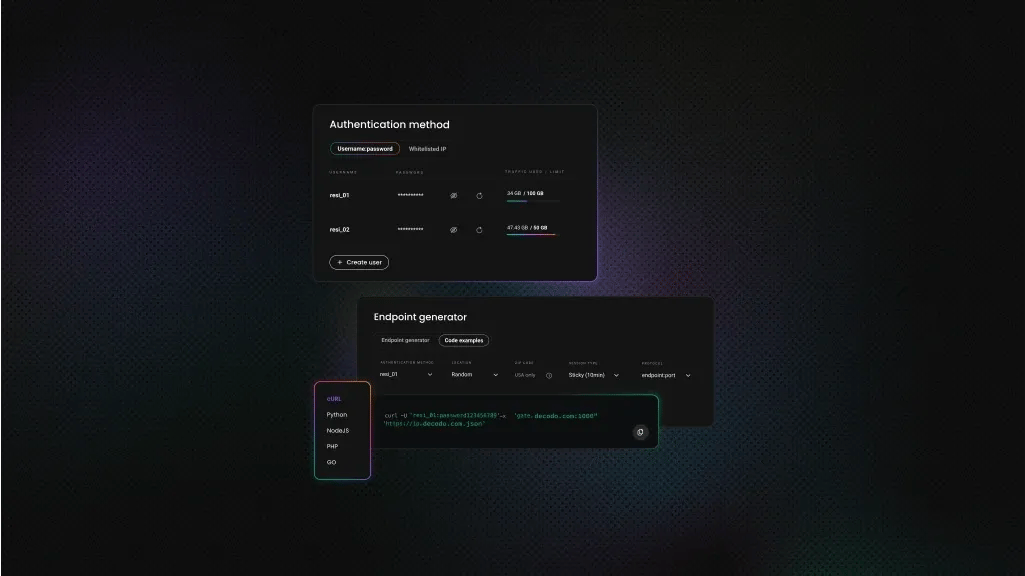

Start collecting data with the right solution, fast

Choose from proxies, scraping APIs, or Site Unblocker, built for quick onboarding and automation-friendly workflows.

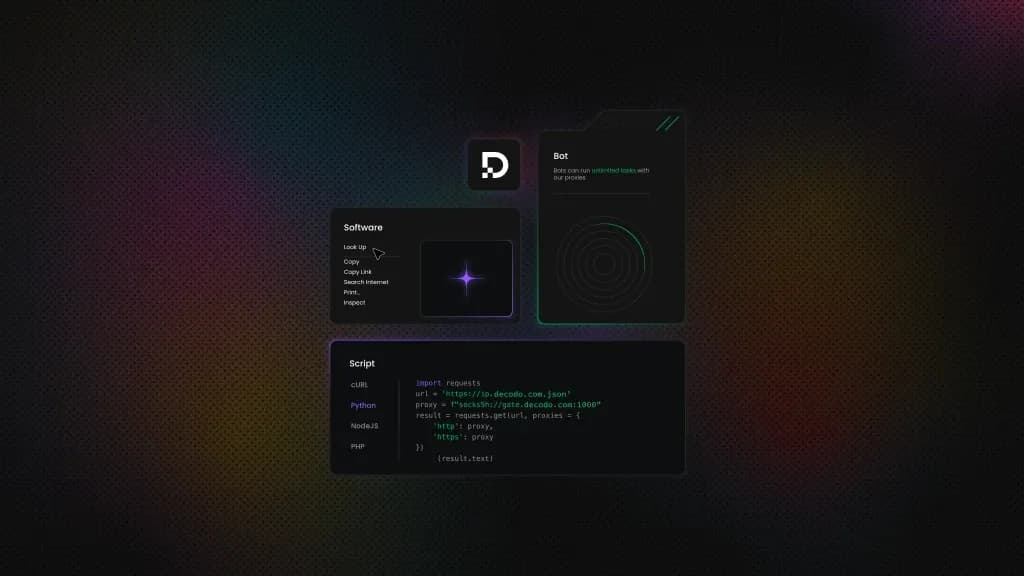

What is a proxy?

A proxy acts as an intermediary between your device and the internet. Your requests are routed through an alternative IP address, so the target website sees the proxy's IP instead of yours. This helps you avoid geo-restrictions, CAPTCHAs, and IP blocks, and lets you reach your targets with a high level of anonymity.
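To illustrate that routing, here is a minimal sketch using Python's standard `urllib.request` module. The host, port, and credentials in `PROXY_URL` are hypothetical placeholders, not a real Decodo endpoint, and this is a generic example of sending traffic through an HTTP proxy rather than Decodo's documented setup. Substitute the values from your own dashboard.

```python
import urllib.request

# Hypothetical proxy endpoint and credentials (placeholders, not a real service).
PROXY_URL = "http://username:password@proxy.example.com:10000"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS requests through proxy_url."""
    # ProxyHandler maps each URL scheme to the proxy that should carry it;
    # credentials embedded in the URL are sent as a Proxy-Authorization header.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)

# Uncomment to send a real request; the target site would see the proxy's IP, not yours.
# with opener.open("https://example.com/", timeout=10) as resp:
#     print(resp.status)
```

Passing an explicit mapping to `ProxyHandler` keeps the routing independent of any `HTTP_PROXY`/`HTTPS_PROXY` environment variables on the machine.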

Residential proxies

from $1.5/GB

Real household IP addresses connected to local networks, offering genuine residential locations and user-like behavior.

Static residential proxies

from $0.27/IP

ISP-issued static IPs from premium ASNs that combine residential authenticity with datacenter-like stability.

Mobile proxies

from $2.25/GB

Real smartphone IPs from 3G/4G/5G carrier networks, providing genuine mobile traffic footprints.

Datacenter proxies

from $0.020/IP

High-speed IP addresses from enterprise-grade data centers, offering lightning-fast response times.

Site Unblocker

from $0.95/1K req

An advanced proxy solution engineered to bypass anti-bot defenses and automatically handle CAPTCHAs and IP bans.

Discover ethically-sourced IPs worldwide

Access 125M+ proxy IPs across 195+ countries. Use state-, country-, city-, and ASN-level targeting for restriction-free web data collection and market research.

Learn with Knowledge Hub

Find advanced scraping tutorials, proxy setup, and integration guides.

Start building AI-ready data pipelines

Power your LLMs, agents, and analytics pipelines with real-time, structured data – without the setup headaches.

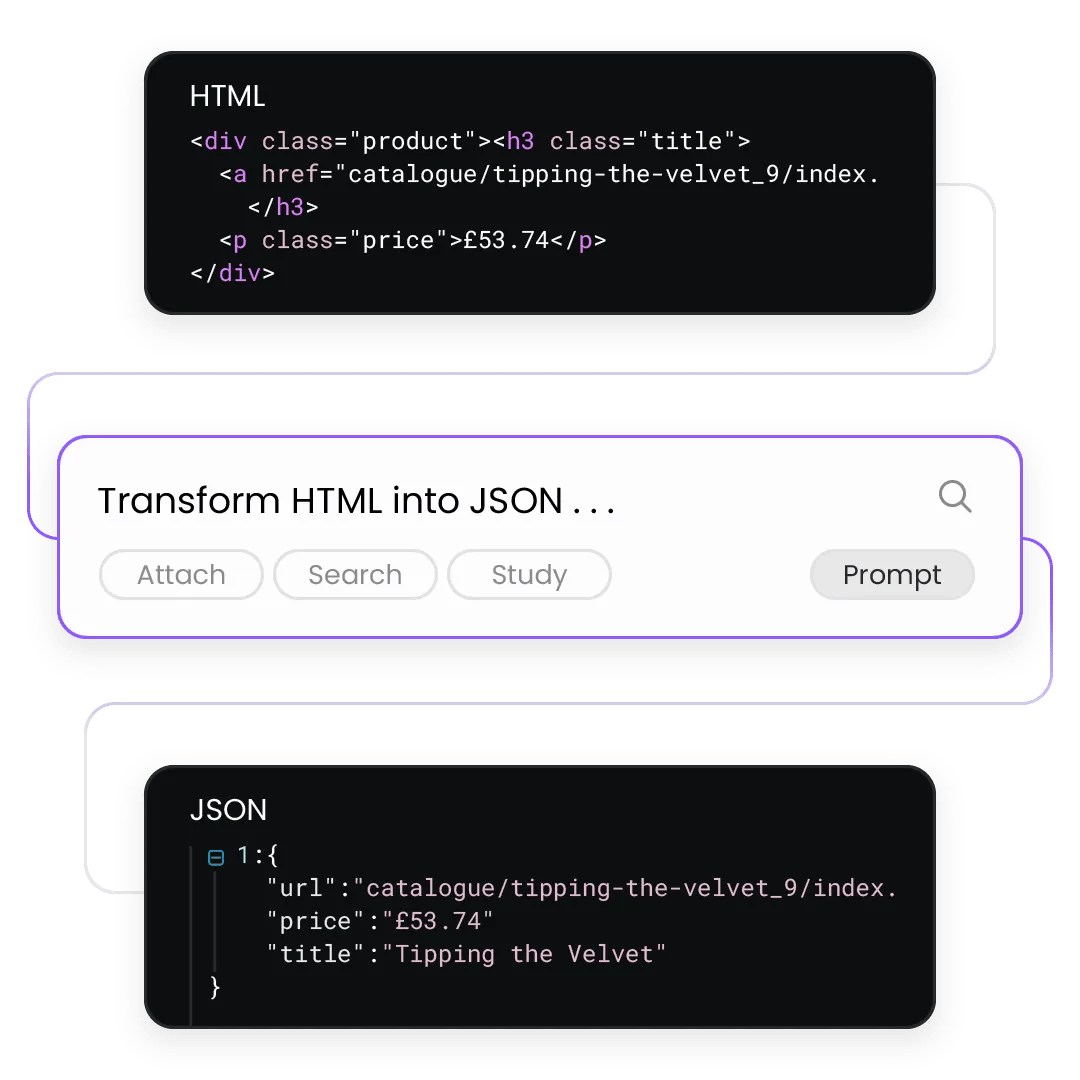

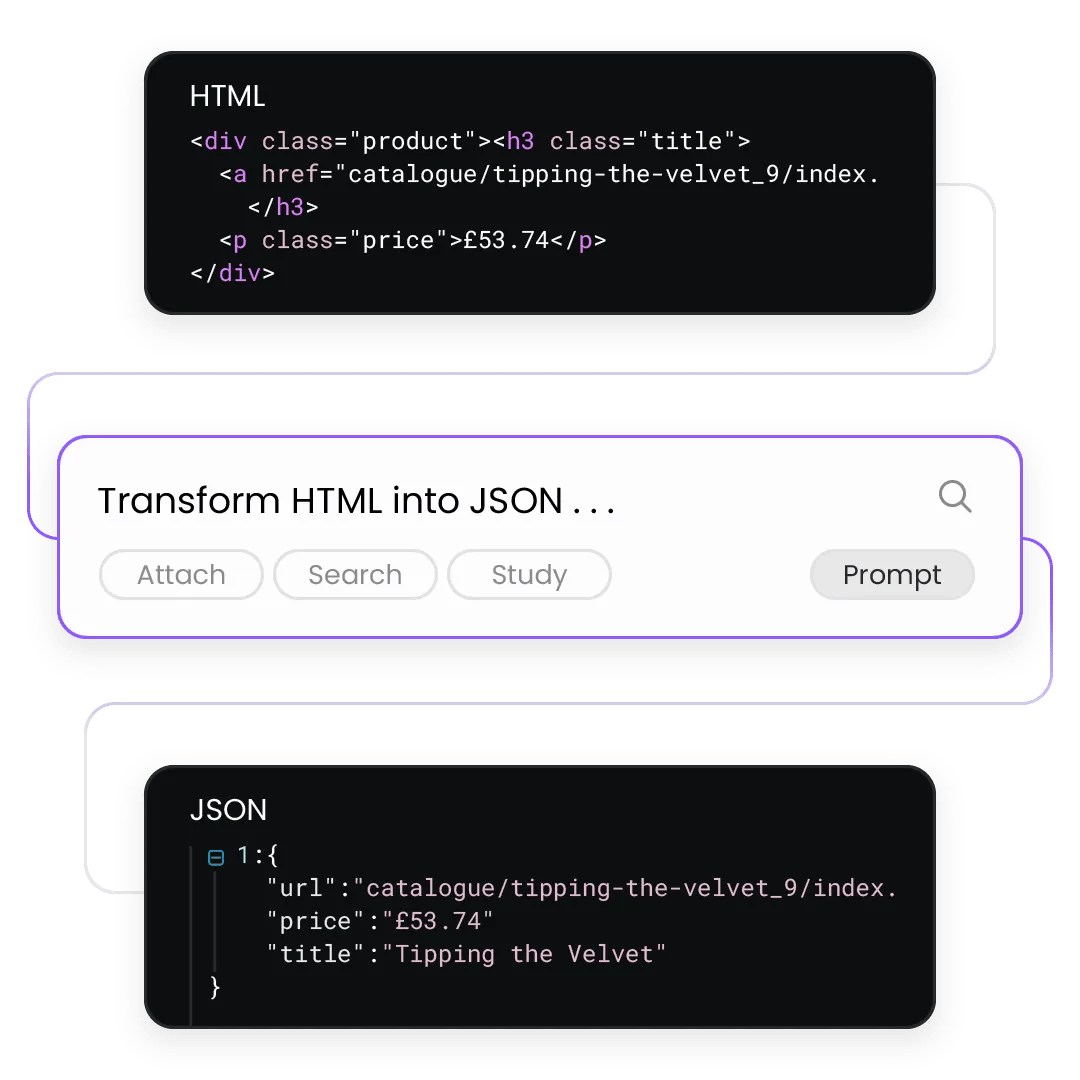

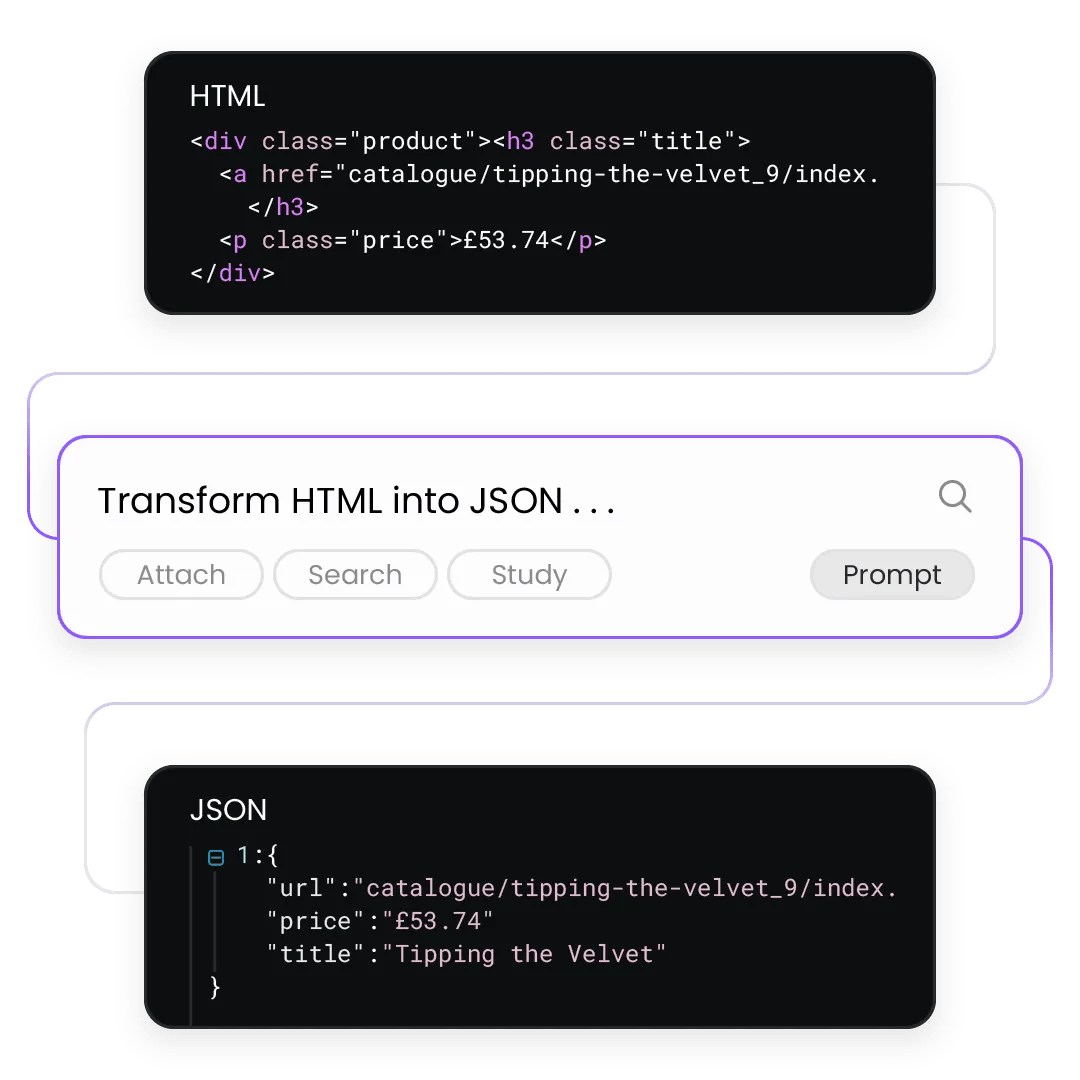

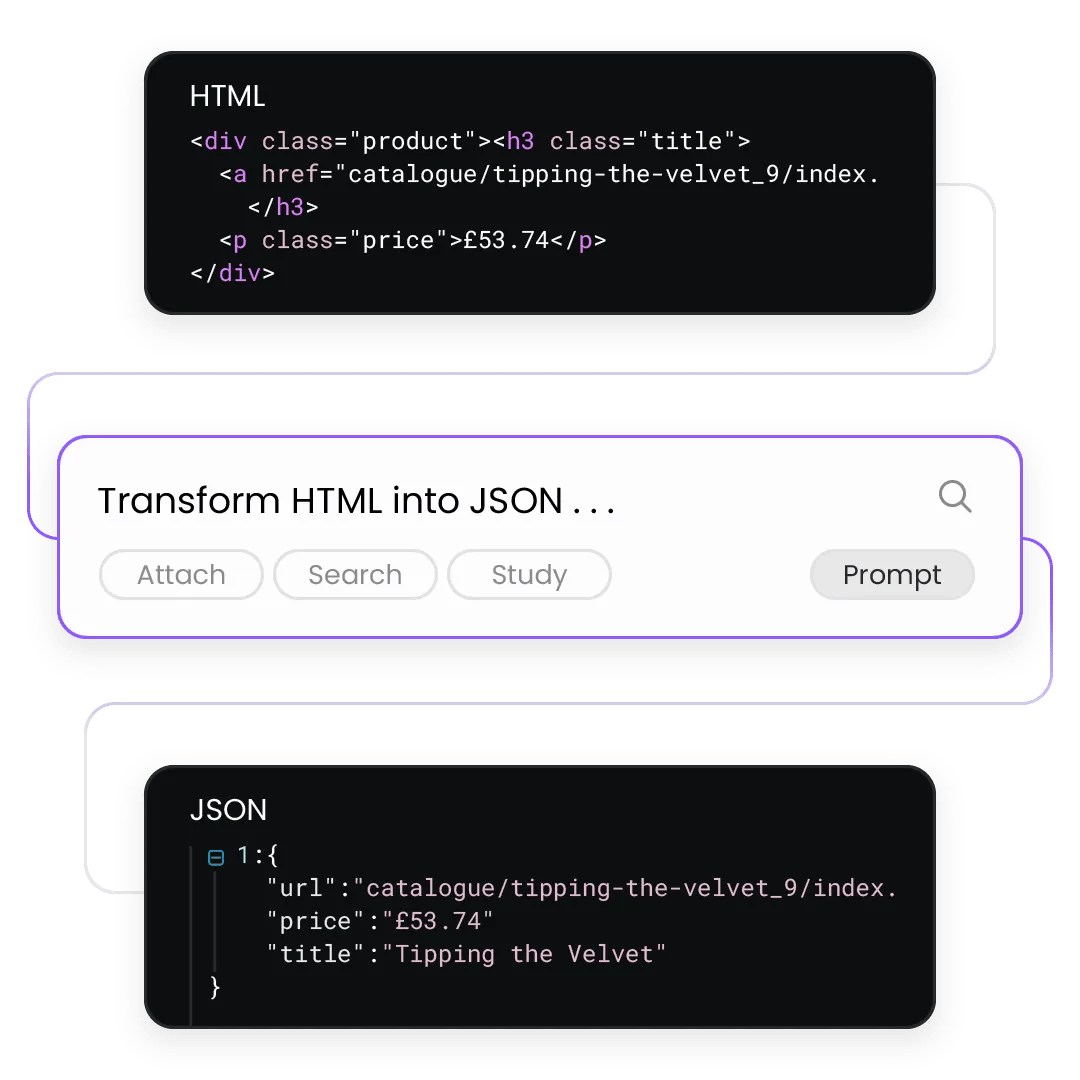

AI Parser

Turn messy HTML into structured JSON with a single prompt – perfect for powering AI models, analytics dashboards, or any workflow that needs clean, ready-to-use data.

MCP server

Connect AI models and agents directly to Decodo’s scraping tools – enabling real-time browsing, scraping, and data delivery inside your AI workflows.

Markdown output

Get clean, developer-friendly outputs in Markdown – ideal for documentation, Jupyter notebooks, and AI pipelines that need human-readable and machine-parseable results.

n8n integration

Plug Decodo (formerly Smartproxy) into your automation stack with our official n8n node – build no-code AI workflows that scrape, parse, and deliver data in minutes.

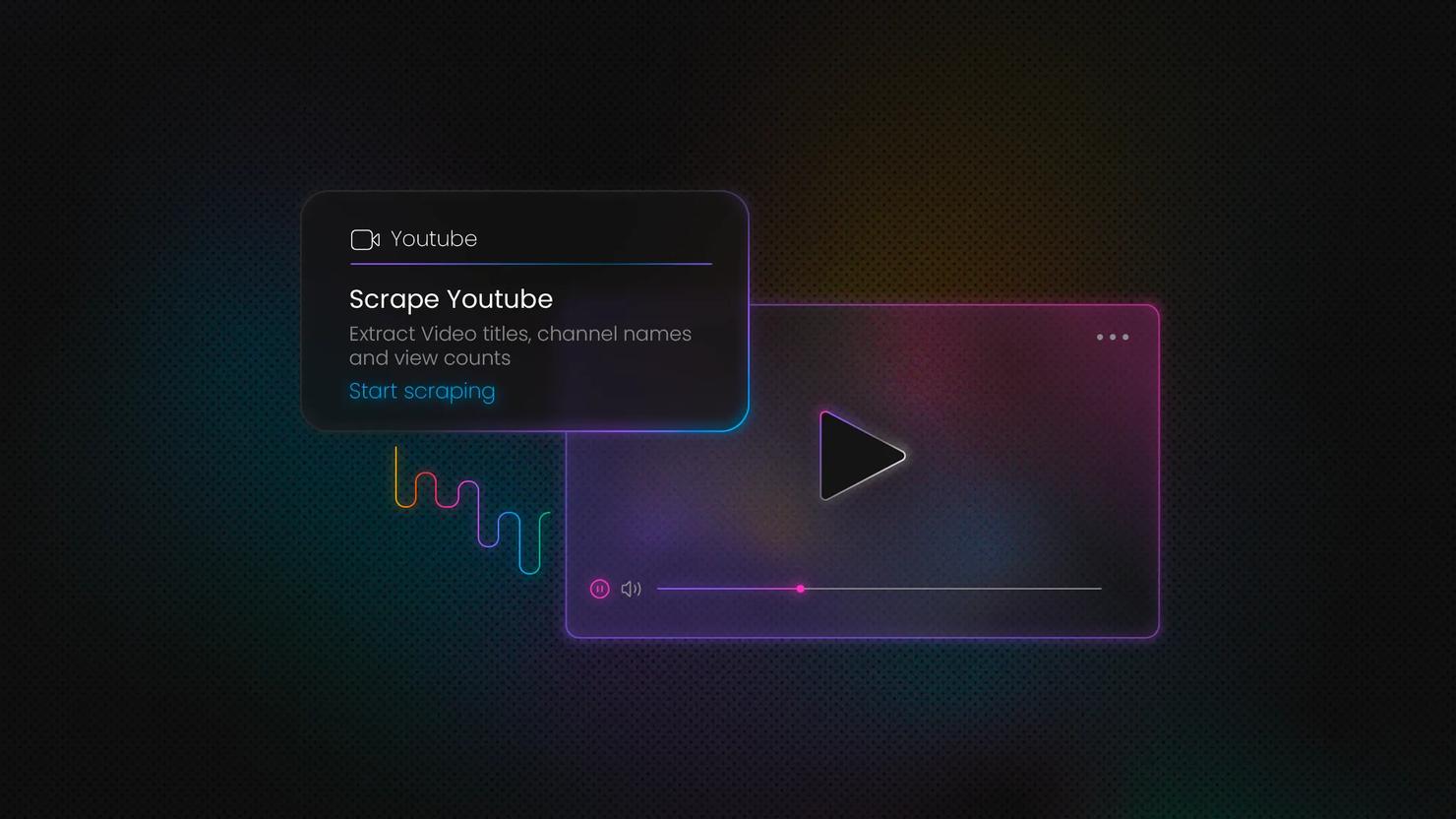

Discover how our solutions power your use cases

From AI model training to SEO monitoring and ad verification, our products adapt to your needs with scale, precision targeting, and anti-block resilience built in.

Artificial intelligence

Fuel AI models with clean, structured training data. Use high-speed proxies, AI Parser, and Scraping APIs to automate large-scale data pipelines without hitting CAPTCHAs or geo-blocks.

Multi-accounting

Run and manage unlimited eCommerce or social accounts safely. Sticky proxies, session control, and our free X Browser help you avoid bans while keeping each profile separate.

Price aggregation

Track competitor and market prices in real time. Our proxies and Web Scraping API deliver localized, accurate data to power smarter pricing models and dashboards.

SEO marketing

Audit SERPs and localized content at scale. Combine proxies with our Web Scraping API to monitor keyword rankings, backlinks, and page performance across regions.

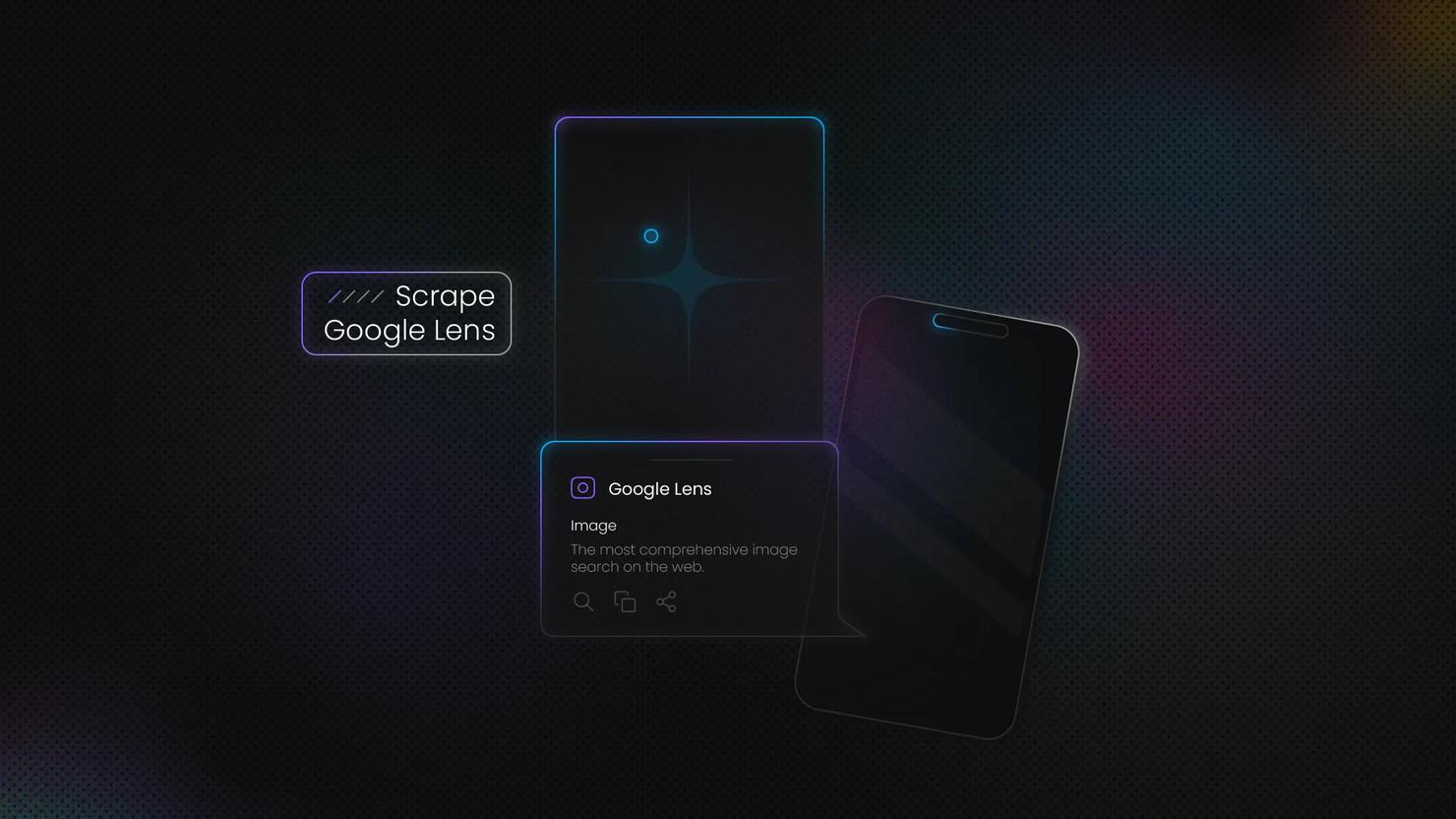

Web scraping

Collect public web data at scale with zero hassle. Site Unblocker and the Web Scraping API from Decodo (formerly Smartproxy) handle CAPTCHAs, IP bans, and JavaScript rendering for reliable data delivery.

AdTech

Validate ad placement, monitor competitors, and fight fraud. Top-quality proxies with precise geo-targeting ensure accurate ad testing across devices and locations.

Get free tools to power up your data collection

Use our complimentary solutions to simplify setup, manage sessions, and speed up testing without writing a single line of code.

Learn what people are saying about us

We're thrilled to have the support of our 130K+ clients and the industry's best.

Attentive service

The professional expertise of the Decodo solution has significantly boosted our business growth while enhancing overall efficiency and effectiveness.

Novabeyond

Easy to get things done

Decodo provides great service with a simple setup and friendly support team.

RoiDynamic

A key to our work

Decodo enables us to develop and test applications in varied environments while supporting precise data collection for research and audience profiling.

Cybereg

Read our blog

Build knowledge on our solutions, or pick up some bright ideas for your next project.

Most recent

What is Data Scraping? Definition and Best Techniques (2026)

The data scraping tools market is growing significantly and is valued at approximately $875.46M in 2026. It is projected to keep growing as demand for real-time data collection increases across industries.

Vytautas Savickas

Last updated: Jan 30, 2026

6 min read

Start Your Web Scraping and Proxy Journey

Seamlessly test, launch, and scale your data collection projects with reliable, easy-to-use, and affordable proxy infrastructure.

14-day money-back option